Getting Started

Introduction and getting started with jBPM

1. Overview

1.1. What is jBPM?

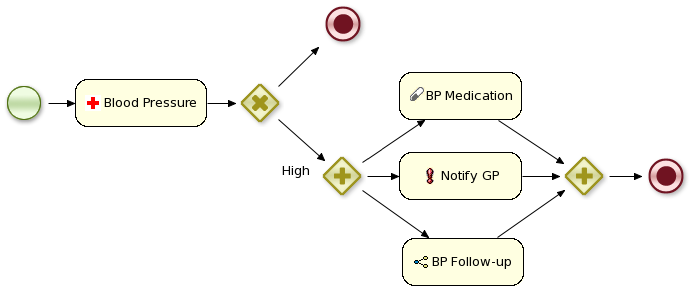

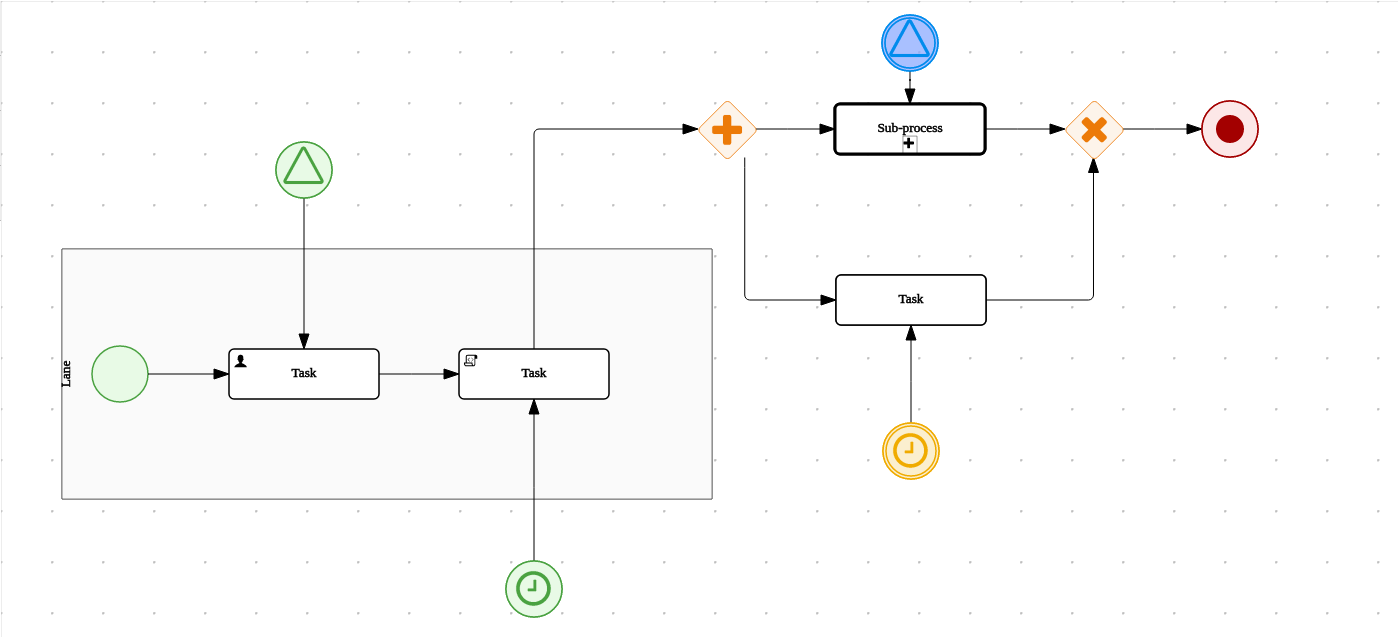

jBPM is a flexible Business Process Management (BPM) Suite. It is light-weight, fully open-source (distributed under Apache License 2.0) and written in Java. It allows you to model, execute, and monitor business processes and cases throughout their life cycle.

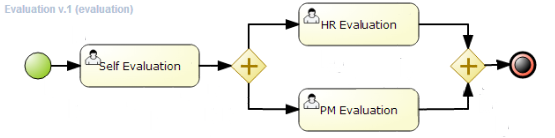

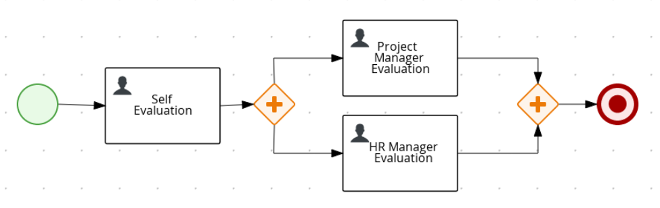

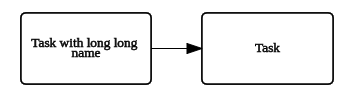

A business process allows you to model your business goals by describing the steps that need to be executed to achieve those goals, and the order of those steps is depicted using a flow chart. This greatly improves the visibility and agility of your business logic. jBPM focuses on executable business processes, which are business processes that contain enough detail so they can actually be executed on a jBPM engine. Executable business processes bridge the gap between business users and developers, as they are higher-level and use domain-specific concepts that are understood by business users but can also be executed directly.
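To make the idea of an "executable" process concrete, here is a minimal, hypothetical Java sketch. It is not the jBPM API (in jBPM the definition would be a BPMN 2.0 file run by the engine); it only shows that each node carries enough detail to run (an action) and that the sequence flows fix the order of execution. The class name `SimpleProcess` and the node ids are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical toy model, NOT the jBPM API: in jBPM the definition would be a
// BPMN 2.0 file executed by the engine.
final class SimpleProcess {
    // node id -> action that the node performs on the process variables
    private final Map<String, Consumer<Map<String, Object>>> actions = new HashMap<>();
    // node id -> id of the next node (a single outgoing sequence flow per node)
    private final Map<String, String> next = new HashMap<>();
    private String startNode;

    SimpleProcess node(String id, Consumer<Map<String, Object>> action) {
        if (startNode == null) {
            startNode = id; // the first node declared acts as the start node
        }
        actions.put(id, action);
        return this;
    }

    SimpleProcess flow(String from, String to) {
        next.put(from, to);
        return this;
    }

    // Runs the nodes in flow order, starting at the start node, until a node
    // without an outgoing flow is reached; returns the visited node ids.
    // Assumes the flows form a simple chain (no cycles, no gateways).
    List<String> execute(Map<String, Object> variables) {
        List<String> visited = new ArrayList<>();
        String current = startNode;
        while (current != null) {
            actions.get(current).accept(variables);
            visited.add(current);
            current = next.get(current);
        }
        return visited;
    }
}
```

For example, declaring `receiveOrder`, `approve` and `ship` and chaining them with two flows makes `execute` visit them in exactly that order. Gateways, events and wait states, which real BPMN processes rely on, are deliberately left out.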

Business processes need to be supported throughout their entire life cycle: authoring, deployment, process management and task lists, and dashboards and reporting.

The core of jBPM is a light-weight, extensible workflow engine written in pure Java that allows you to execute business processes using the latest BPMN 2.0 specification. It can run in any Java environment, embedded in your application or as a service.

On top of the jBPM engine, a lot of features and tools are offered to support business processes throughout their entire life cycle:

-

Pluggable human task service based on WS-HumanTask for including tasks that need to be performed by human actors.

-

Pluggable persistence and transactions (based on JPA / JTA).

-

Case management capabilities added to the jBPM engine to support more adaptive and flexible use cases

-

Web-based process designer to support the graphical creation and simulation of your business processes (drag and drop).

-

Web-based data modeler and form modeler to support the creation of data models and task forms

-

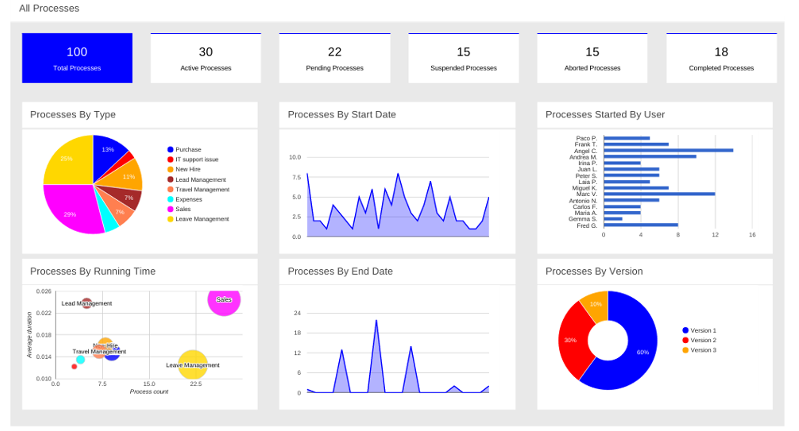

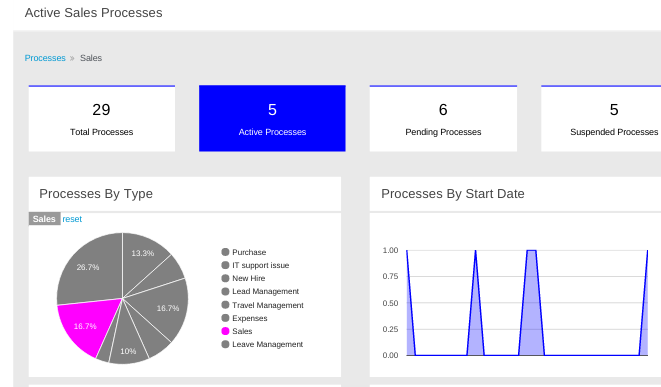

Web-based, customizable dashboards and reporting

-

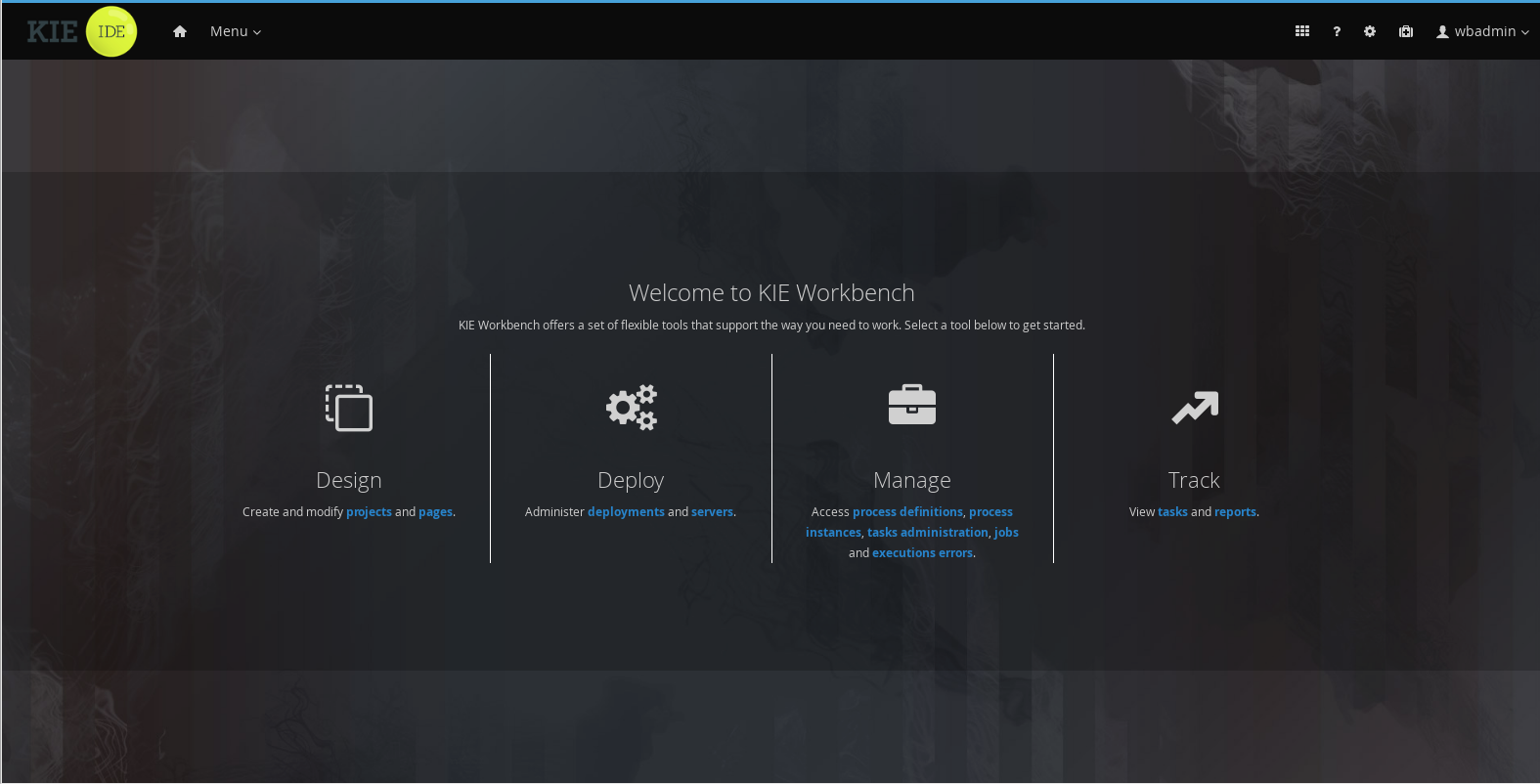

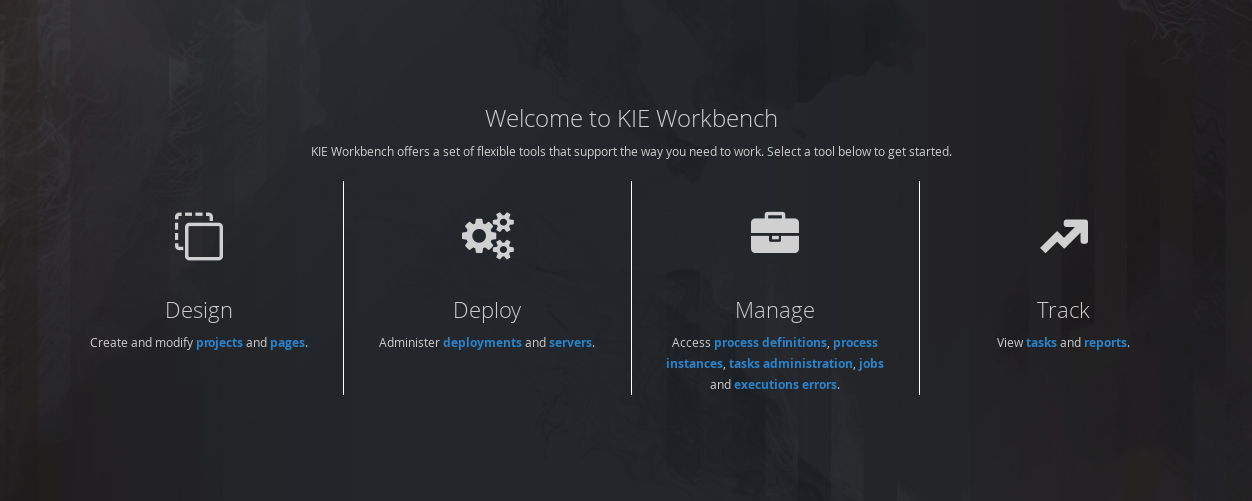

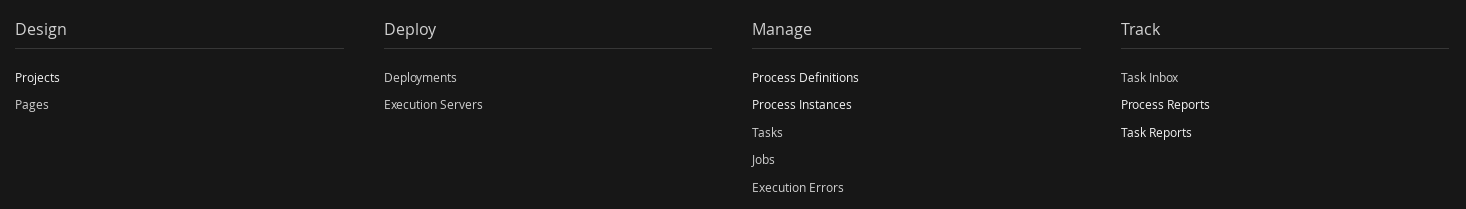

All combined in one web-based Business Central application, supporting the complete BPM life cycle:

-

Modeling and deployment - author your processes, rules, data models, forms and other assets

-

Execution - execute processes, tasks, rules and events on the core runtime engine

-

Runtime Management - work on assigned task, manage process instances, etc

-

Reporting - keep track of the execution using Business Activity Monitoring capabilities

-

-

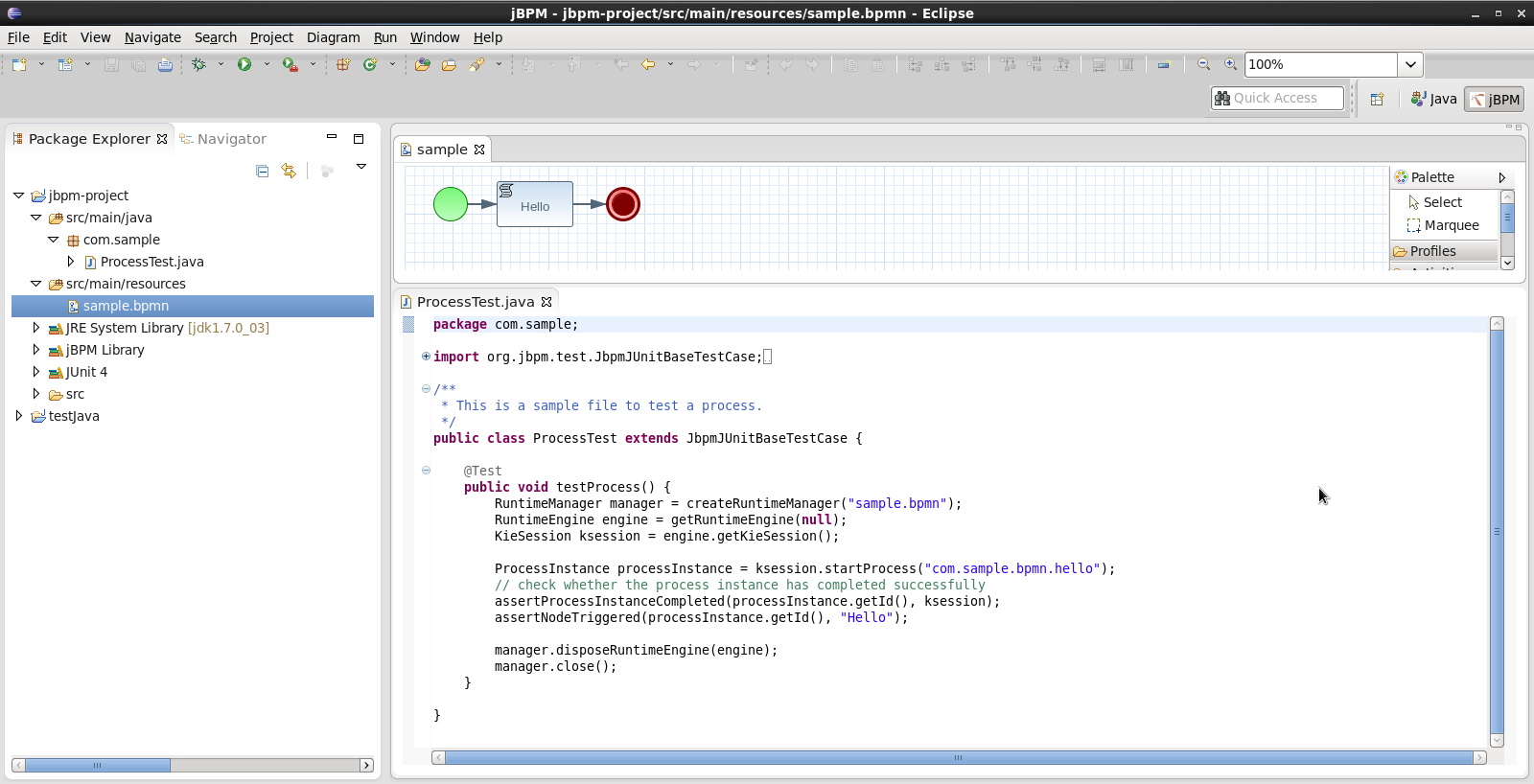

Eclipse-based developer tools to support the modeling, testing and debugging of processes

-

Remote API to jBPM engine as a service (REST, JMS, Remote Java API)

-

Integration with Maven, Spring, OSGi, etc.

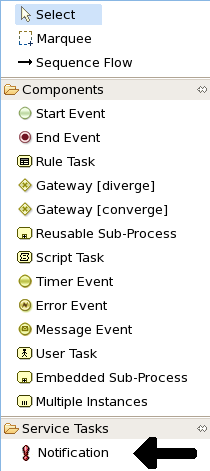

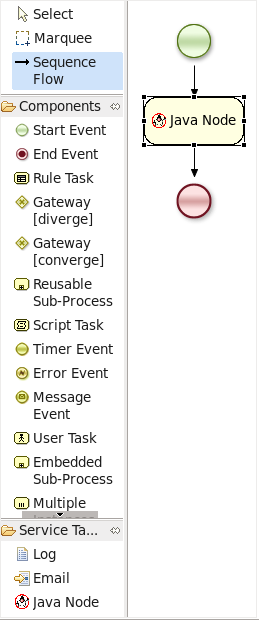

BPM creates the bridge between business analysts, developers and end users by offering process management features and tools in a way that both business users and developers like. Domain-specific nodes can be plugged into the palette, making the processes more easily understood by business users.

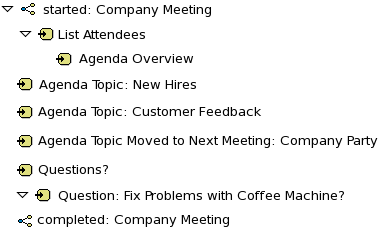

jBPM supports case management by offering more advanced features to support adaptive and dynamic processes that require flexibility to model complex, real-life situations that cannot easily be described using a rigid process. We bring control back to the end users by allowing them to control which parts of the process should be executed; this allows dynamic deviation from the process.
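The difference from a rigid process can be sketched in a few lines of hypothetical Java; this is not the jBPM case management API. The case exposes a set of optional activities, the end user triggers them in whatever order the situation requires, and triggering a closing milestone ends the case. The names `AdHocCase` and `trigger`, and the insurance-claim activities used in the example, are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of case-style, adaptive execution; NOT the jBPM case
// management API. No predefined flow: the end user picks the next activity.
final class AdHocCase {
    private final Set<String> activities;      // optional activities offered by the case
    private final String closingMilestone;     // triggering this one closes the case
    private final List<String> performed = new ArrayList<>();
    private boolean closed;

    AdHocCase(Set<String> activities, String closingMilestone) {
        this.activities = new LinkedHashSet<>(activities);
        this.closingMilestone = closingMilestone;
    }

    // Called at runtime by the end user, in any order; activities may repeat.
    void trigger(String activity) {
        if (closed) {
            throw new IllegalStateException("case is already closed");
        }
        if (!activities.contains(activity) && !activity.equals(closingMilestone)) {
            throw new IllegalArgumentException("unknown activity: " + activity);
        }
        performed.add(activity);
        if (activity.equals(closingMilestone)) {
            closed = true;
        }
    }

    List<String> performed() {
        return List.copyOf(performed);
    }

    boolean isClosed() {
        return closed;
    }
}
```

If a user triggers `callCustomer`, then `inspectDamage`, then `settleClaim`, the optional `requestPhotos` activity is simply never performed: skipping it is a runtime decision, not a branch drawn in advance.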

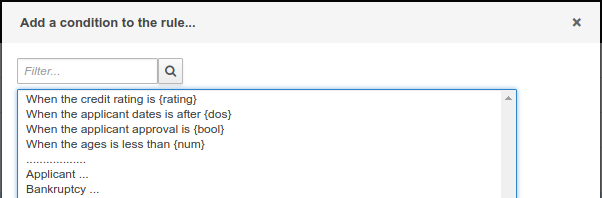

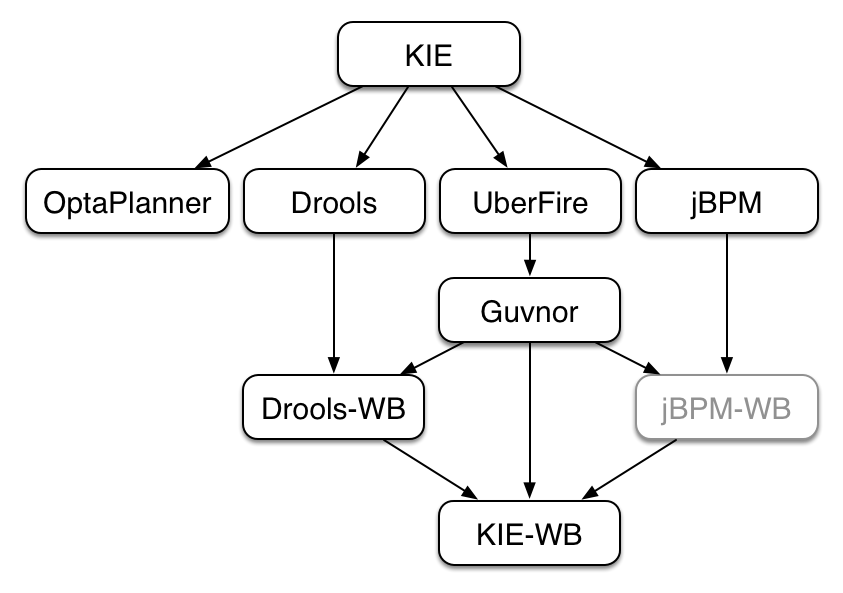

jBPM is not just an isolated process engine. Complex business logic can be modeled as a combination of business processes with business rules and complex event processing. jBPM can be combined with the Drools project to support one unified environment that integrates these paradigms, where you model your business logic as a combination of processes, rules and events.

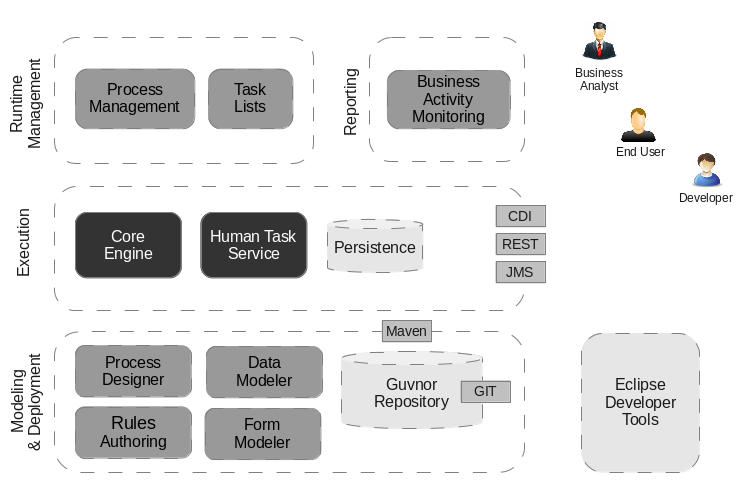

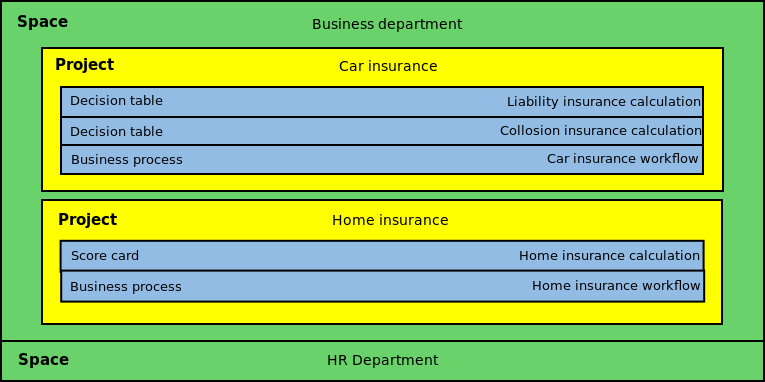

1.2. Overview

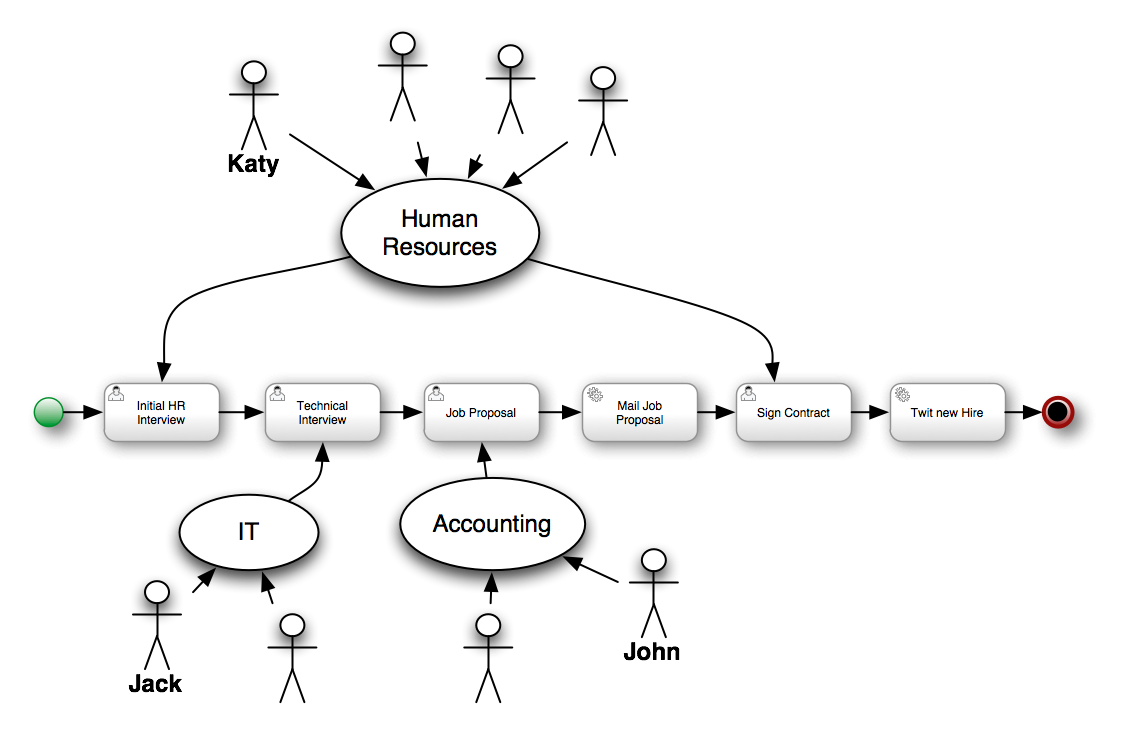

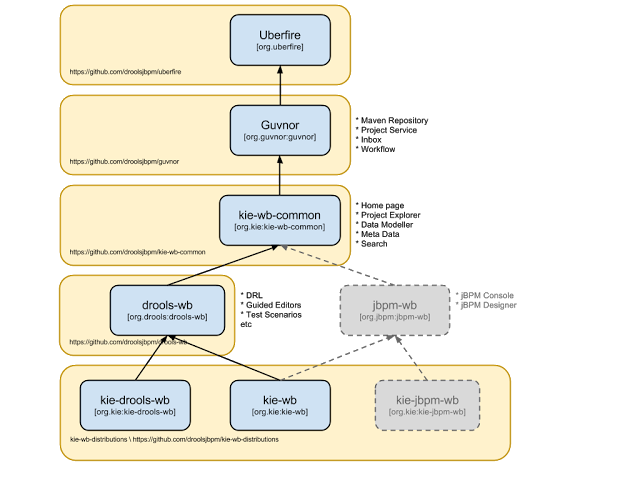

This figure gives an overview of the different components of the jBPM project.

-

The core engine is the heart of the project and allows you to execute business processes in a flexible manner. It is a pure Java component that you can choose to embed as part of your application or deploy it as a service and connect to it through the web-based UI or remote APIs.

-

An optional core service is the human task service that will take care of the human task life cycle if human actors participate in the process.

-

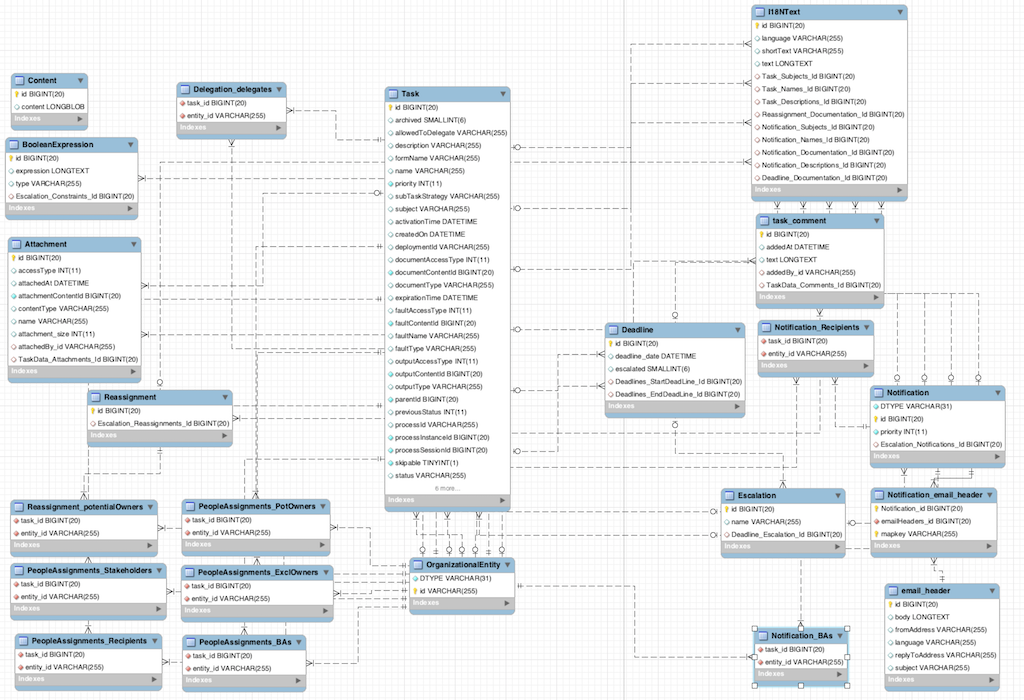

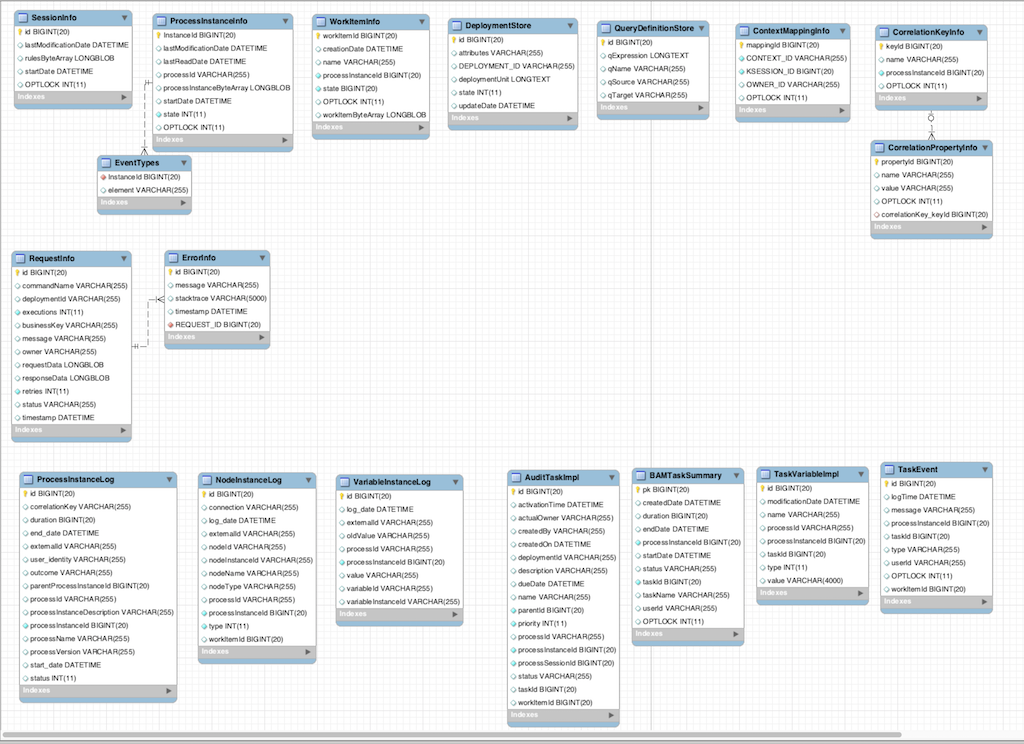

Another optional core service is runtime persistence; this will persist the state of all your process instances and log audit information about everything that is happening at runtime.

-

Applications can connect to the core engine through its Java API or as a set of CDI services, but also remotely through a REST and JMS API.

-

-

Web-based tools allow you to model, simulate and deploy your processes and other related artifacts (like data models, forms, rules, etc.):

-

The process designer allows business users to design and simulate business processes in a web-based environment.

-

The data modeler allows non-technical users to view, modify and create data models for use in your processes.

-

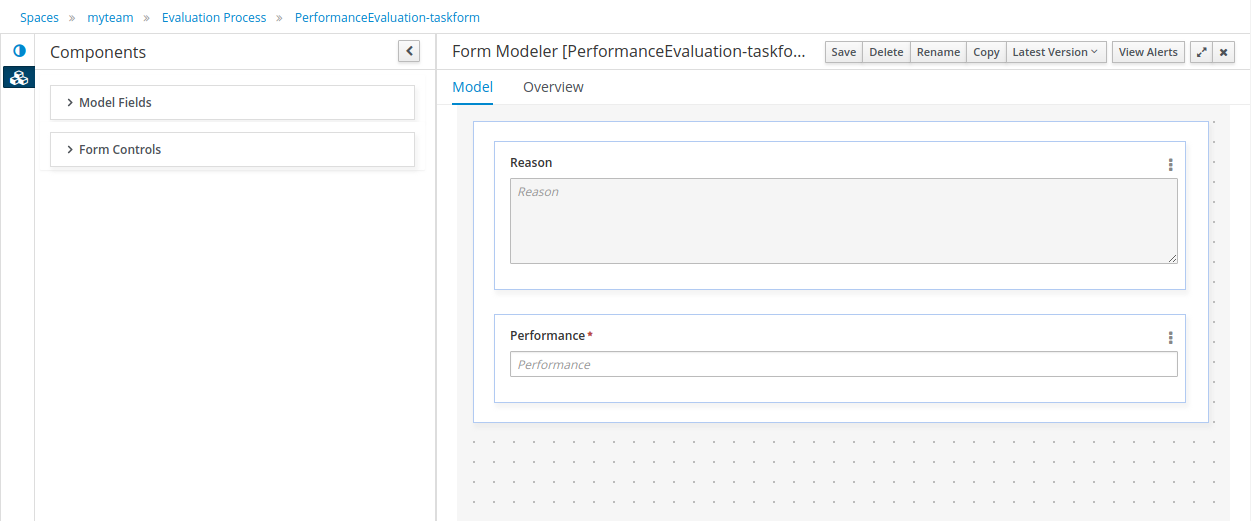

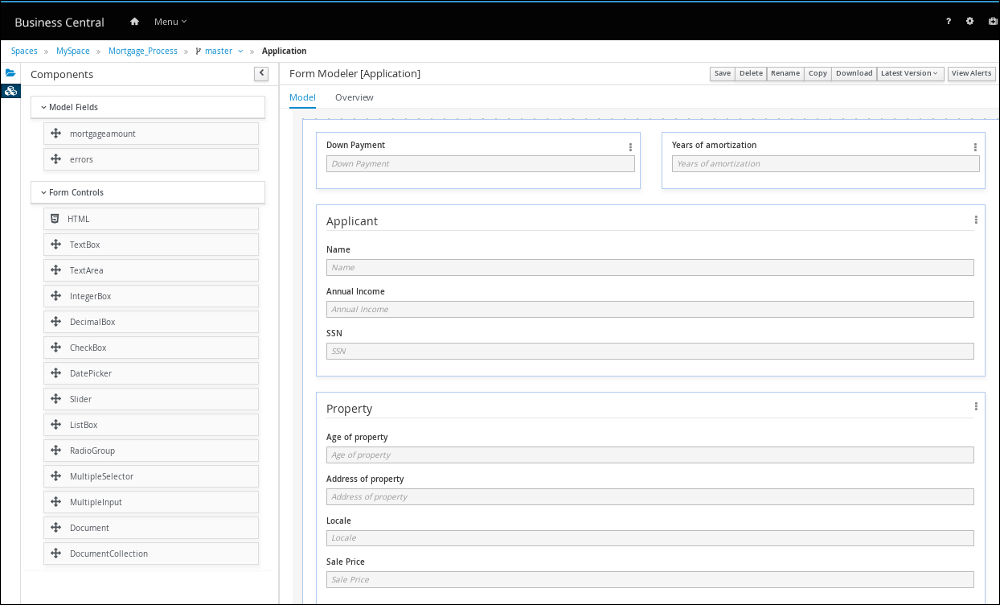

A web-based form modeler also allows you to create, generate or edit forms related to your processes (to start the process or to complete one of the user tasks).

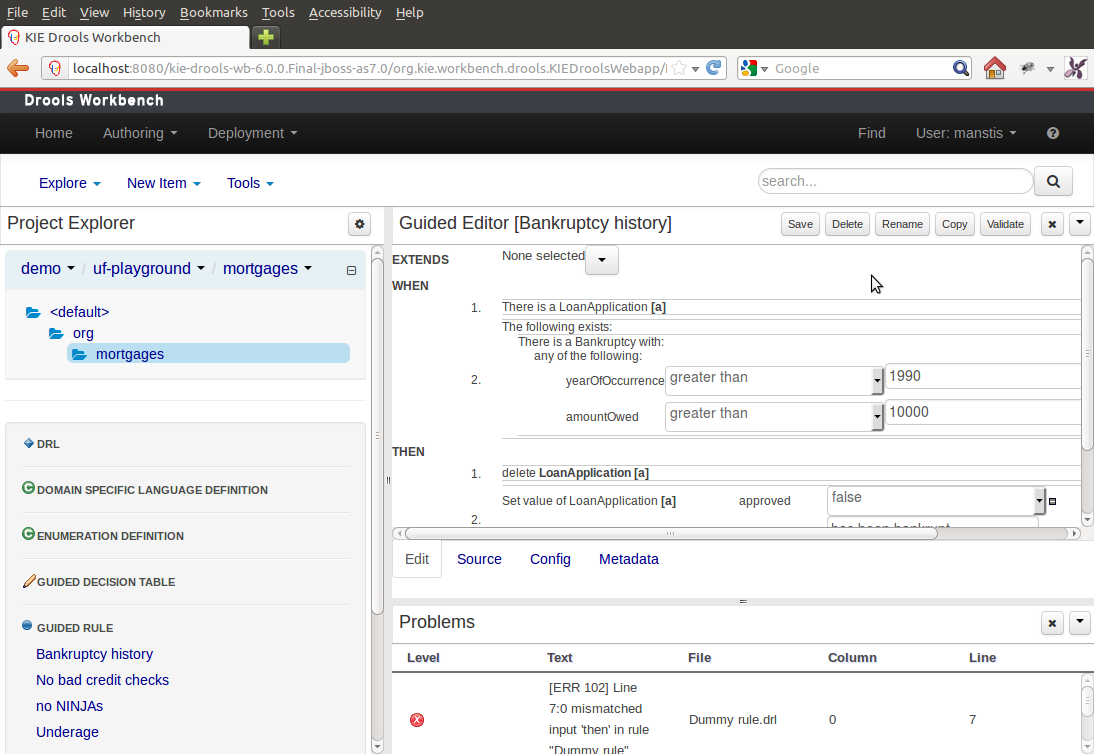

-

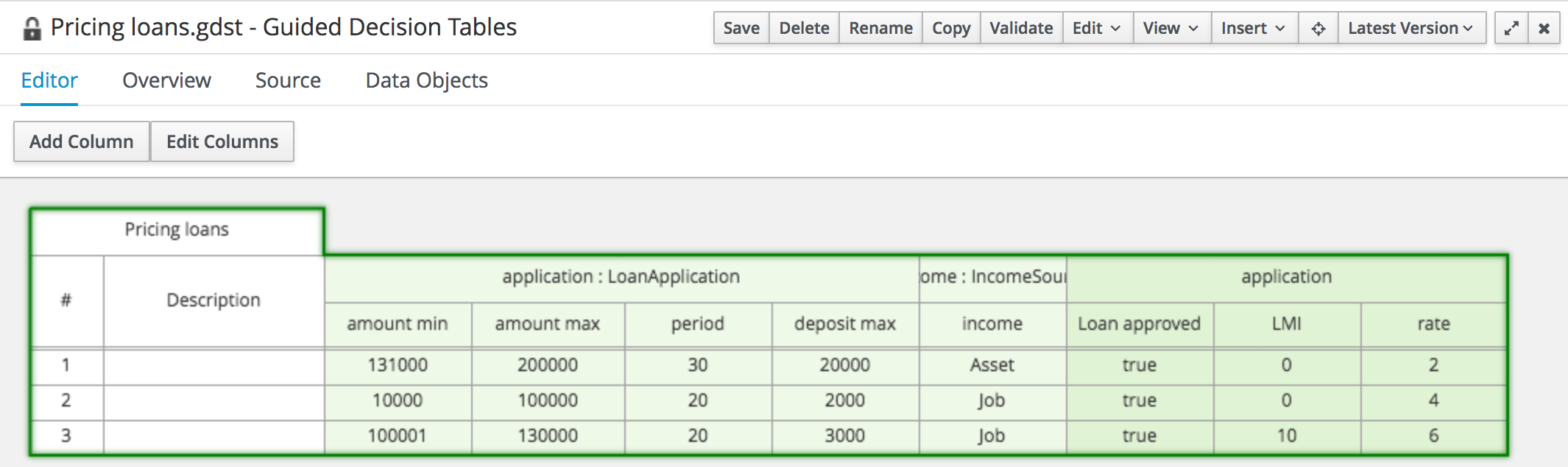

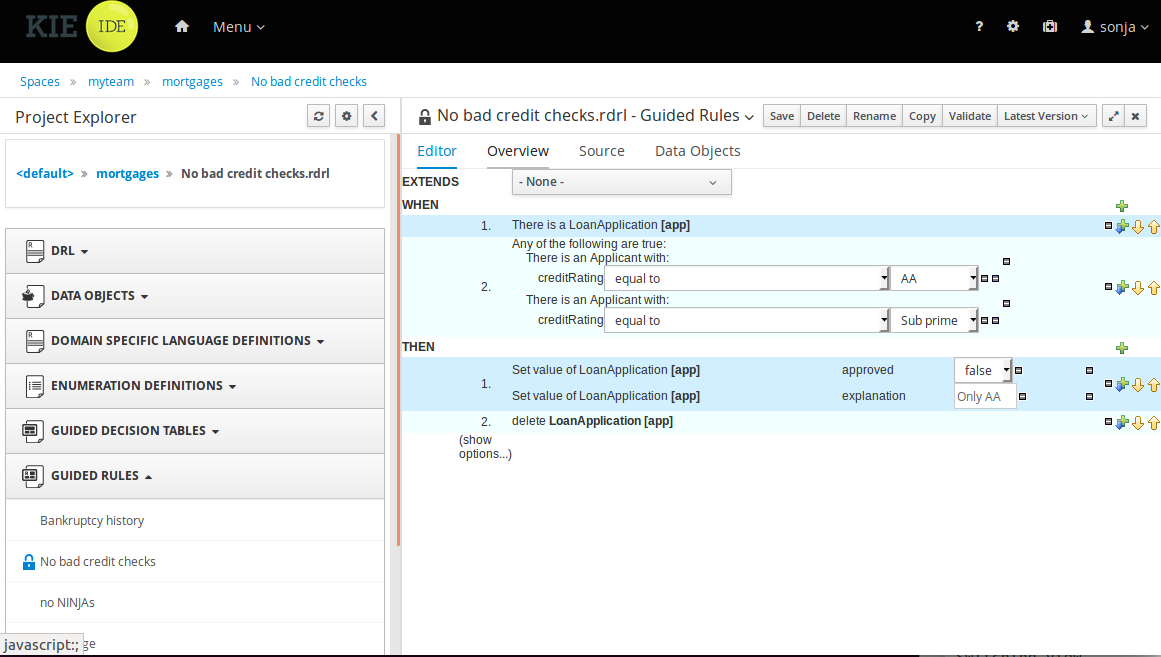

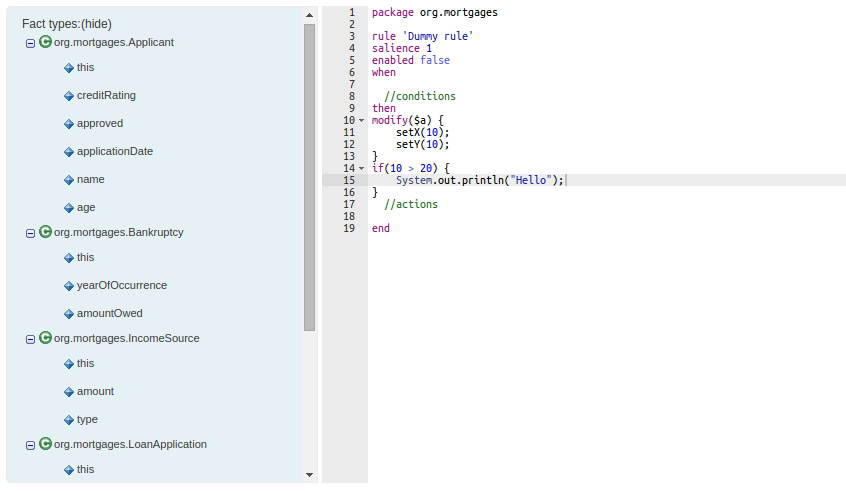

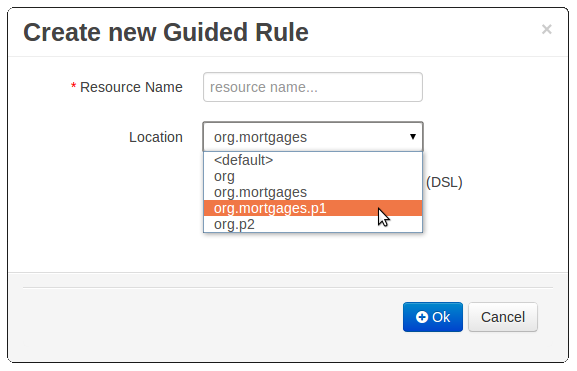

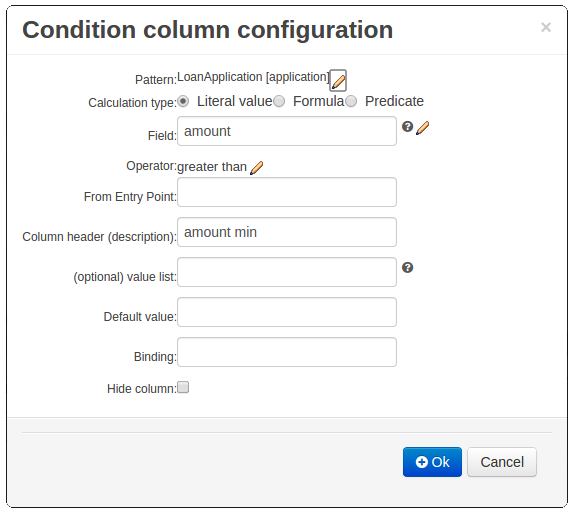

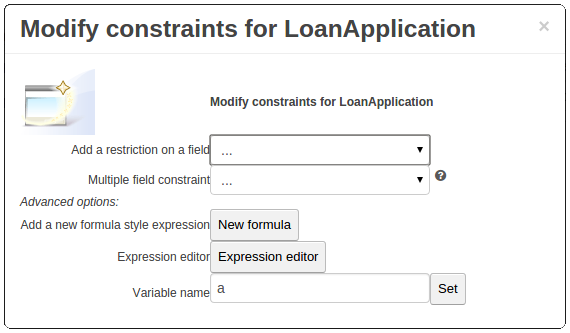

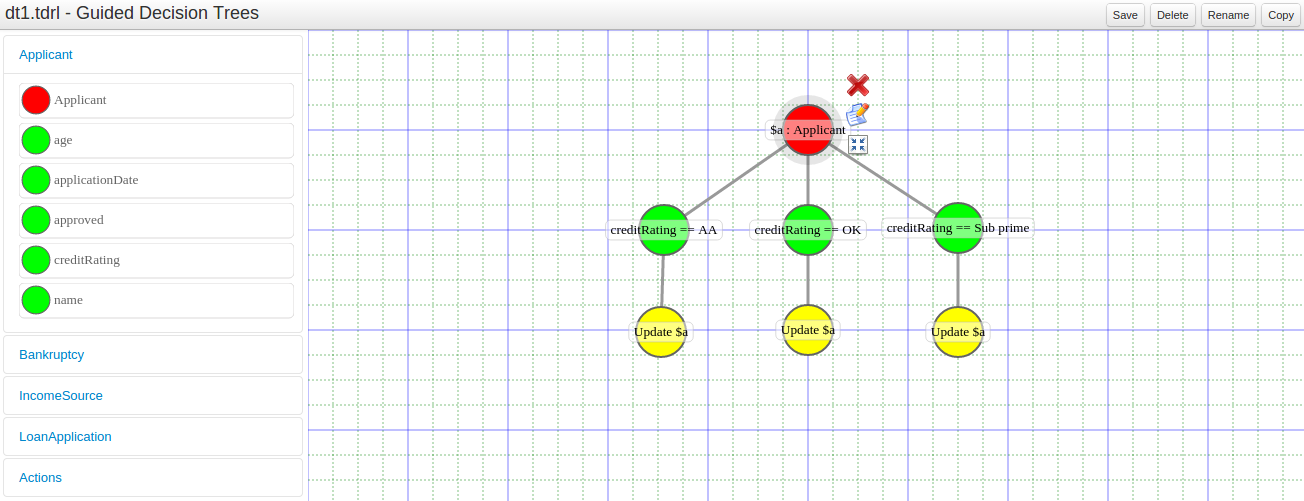

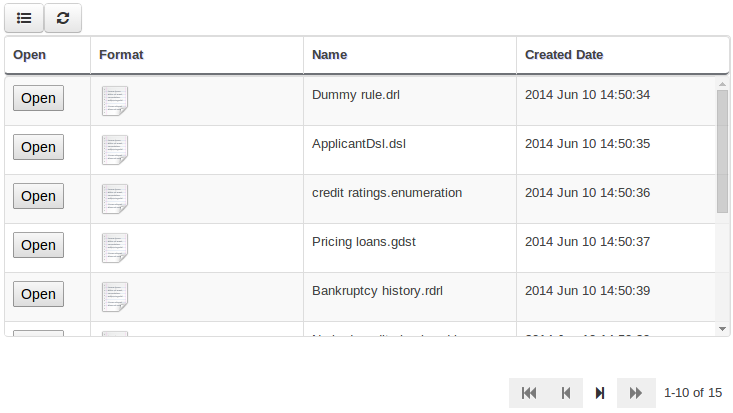

Rule authoring allows you to specify different types of business rules (decision tables, guided rules, etc.) for combination with your processes.

-

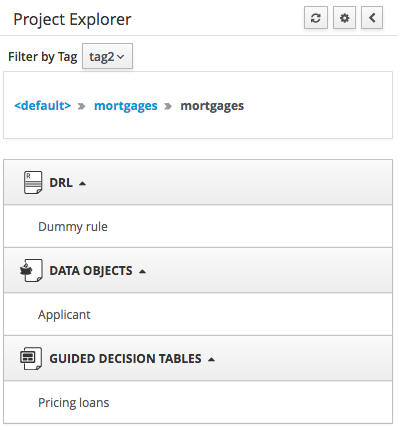

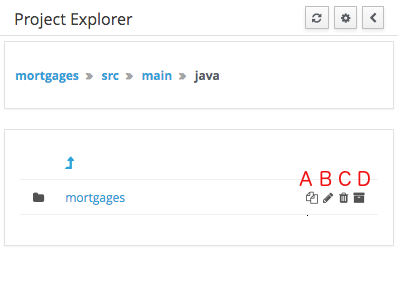

All assets are stored and managed by the Guvnor repository (exposed through Git) and can be managed (versioning), built and deployed.

-

-

The web-based management console allows business users to manage their runtime (manage business processes like start new processes, inspect running instances, etc.), to manage their task list and to perform Business Activity Monitoring (BAM) and see reports.

-

The Eclipse-based developer tools are an extension to the Eclipse IDE, targeted towards developers, and allows you to create business processes using drag and drop, test and debug your processes, etc.
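As an illustration of the remote access path mentioned in the list above, the following Java 11+ sketch builds, but does not send, an HTTP request asking a KIE Server to start a process instance through its REST API (`POST .../services/rest/server/containers/{containerId}/processes/{processId}/instances`). The base URL, container id, process id and JSON body are assumptions, and authentication is omitted even though a real KIE Server normally requires it.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

// Builds (but does not send) a KIE Server REST request that starts a process
// instance. Base URL, container id and process id are caller-supplied
// assumptions; authentication (normally required) is deliberately omitted.
final class KieServerRequests {
    private KieServerRequests() { }

    static HttpRequest startProcess(String baseUrl, String containerId,
                                    String processId, String jsonVariables) {
        URI uri = URI.create(baseUrl + "/services/rest/server/containers/" + containerId
                + "/processes/" + processId + "/instances");
        return HttpRequest.newBuilder(uri)
                .timeout(Duration.ofSeconds(30))
                .header("Content-Type", "application/json")
                .header("Accept", "application/json")
                // the JSON body maps process variable names to values
                .POST(HttpRequest.BodyPublishers.ofString(jsonVariables))
                .build();
    }
}
```

To actually send it, you would pass the request to `java.net.http.HttpClient.send(...)` together with suitable credentials; the response body is expected to contain the id of the new process instance.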

Each of the components is described in more detail below.

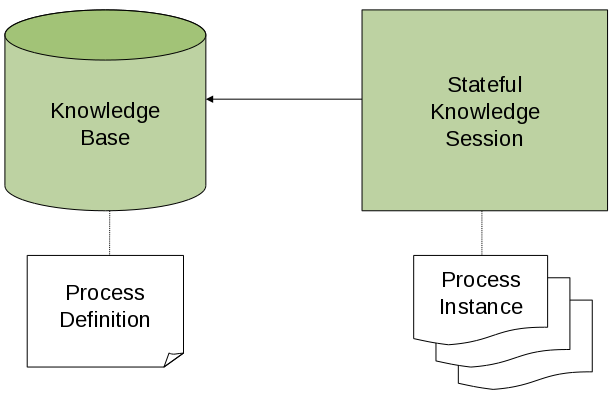

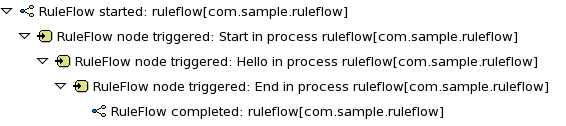

1.3. Core Engine

The core engine is the heart of the project. It’s a light-weight workflow engine that executes your business processes. It can be embedded as part of your application or deployed as a service (possibly in the cloud). Its most important features are the following:

- Solid, stable core engine for executing your process instances.
- Native support for the latest BPMN 2.0 specification for modeling and executing business processes.
- Strong focus on performance and scalability.
- Light-weight (can be deployed on almost any device that supports a simple Java Runtime Environment; does not require any web container at all).
- (Optional) pluggable persistence with a default JPA implementation.
- Pluggable transaction support with a default JTA implementation.
- Implemented as a generic process engine, so it can be extended to support new node types or other process languages.
- Listeners to get notified about various events.
- Ability to migrate running process instances to a new version of their process definition.
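The listener feature in the list above follows the classic observer pattern. The hypothetical Java below (the `ProcessListener` and `NotifyingRunner` names are illustrative) shows the idea: listeners register with a runner and are called back as nodes are triggered and when the process completes. In jBPM itself the corresponding contract is `org.kie.api.event.process.ProcessEventListener`, registered through `KieSession.addEventListener(...)`, which offers more callbacks than this sketch.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical listener contract illustrating the idea; jBPM's real interface is
// org.kie.api.event.process.ProcessEventListener, with many more callbacks.
interface ProcessListener {
    void beforeNodeTriggered(String processId, String nodeId);

    default void afterProcessCompleted(String processId) {
        // optional callback: listeners override it only if they care
    }
}

// Toy runner that walks node ids in order and notifies registered listeners.
final class NotifyingRunner {
    private final List<ProcessListener> listeners = new ArrayList<>();

    void addListener(ProcessListener listener) {
        listeners.add(listener);
    }

    void run(String processId, List<String> nodeIds) {
        for (String nodeId : nodeIds) {
            for (ProcessListener l : listeners) {
                l.beforeNodeTriggered(processId, nodeId);
            }
            // the node's actual work would happen here
        }
        for (ProcessListener l : listeners) {
            l.afterProcessCompleted(processId);
        }
    }
}
```

Because `ProcessListener` has a single abstract method, a simple logging listener can also be registered as a lambda, for example `runner.addListener((p, n) -> System.out.println(p + " -> " + n))`.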

The jBPM engine can also be integrated with a few other (independent) core services:

-

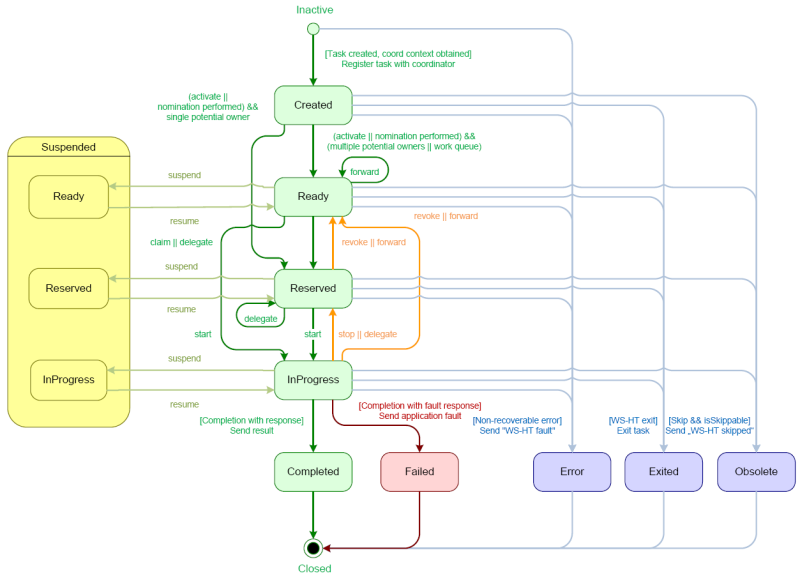

The human task service can be used to manage human tasks when human actors need to participate in the process. It is fully pluggable and the default implementation is based on the WS-HumanTask specification and manages the life cycle of the tasks, task lists, task forms, and some more advanced features like escalation, delegation, rule-based assignments, etc.

-

The history log can store all information about the execution of all the processes in the jBPM engine. This is necessary if you need access to historic information as runtime persistence only stores the current state of all active process instances. The history log can be used to store all current and historic states of active and completed process instances. It can be used to query for any information related to the execution of process instances, for monitoring, analysis, etc.
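The distinction between runtime persistence and the history log can be sketched with a hypothetical in-memory model in Java (these are not jBPM classes): the runtime store keeps one entry per active instance and overwrites it, while the history log only ever appends. In jBPM the audit data lives in tables such as `ProcessInstanceLog` and `NodeInstanceLog`; this sketch only mirrors the concept.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration (not jBPM classes): runtime persistence keeps only the
// current state of active instances, while a history log appends every change.
final class RuntimeVsHistory {
    static final class LogEntry {
        final long instanceId;
        final String nodeId;
        final boolean completed;

        LogEntry(long instanceId, String nodeId, boolean completed) {
            this.instanceId = instanceId;
            this.nodeId = nodeId;
            this.completed = completed;
        }
    }

    final Map<Long, String> runtimeState = new HashMap<>(); // instanceId -> current node
    final List<LogEntry> historyLog = new ArrayList<>();    // append-only audit trail

    void moveTo(long instanceId, String nodeId) {
        runtimeState.put(instanceId, nodeId);               // overwrites the previous state
        historyLog.add(new LogEntry(instanceId, nodeId, false));
    }

    void complete(long instanceId) {
        String lastNode = runtimeState.remove(instanceId);  // completed instances leave the runtime store
        historyLog.add(new LogEntry(instanceId, lastNode, true));
    }
}
```

After an instance moves through two nodes and completes, the runtime store is empty while the history log still holds three entries, which is why questions about completed instances have to be answered from the history log.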

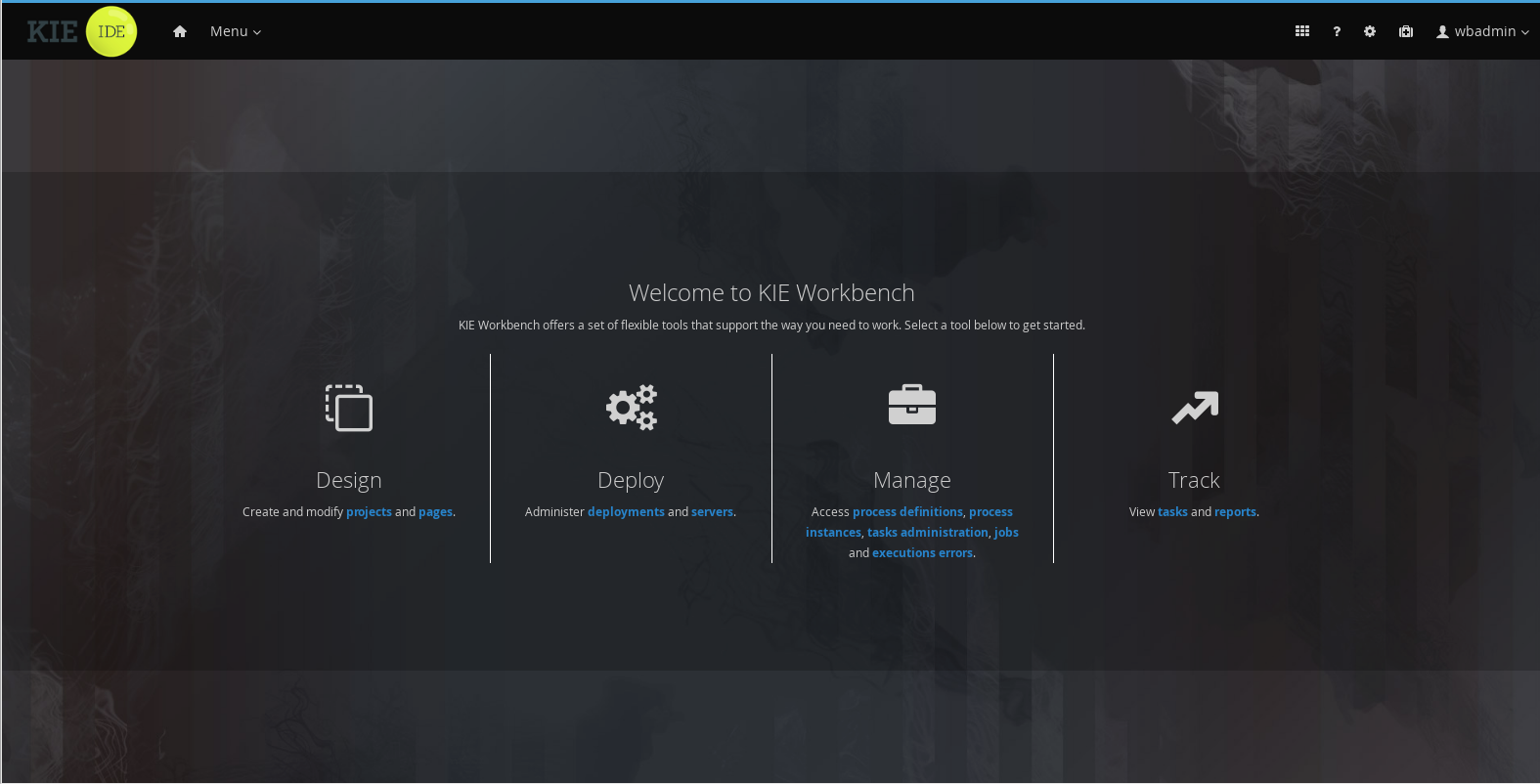

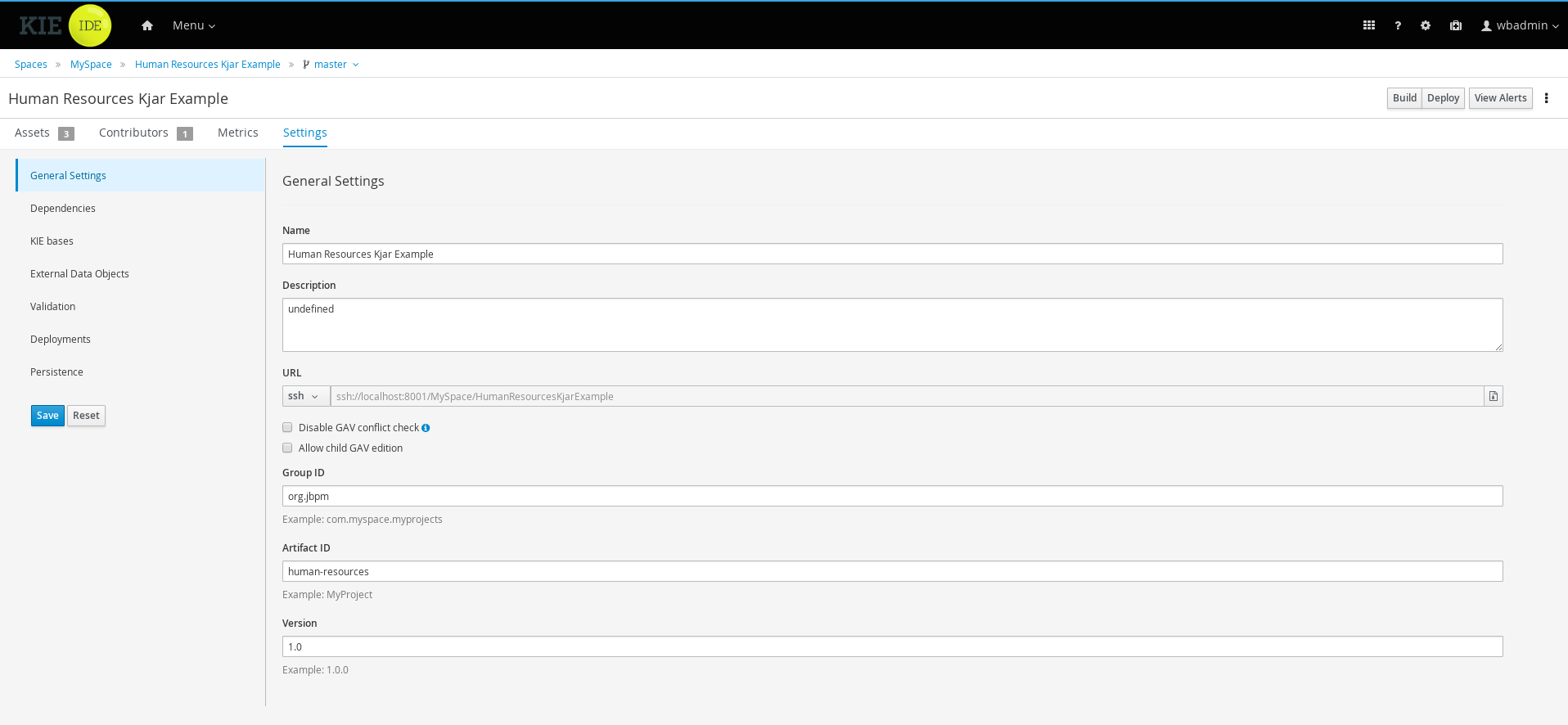

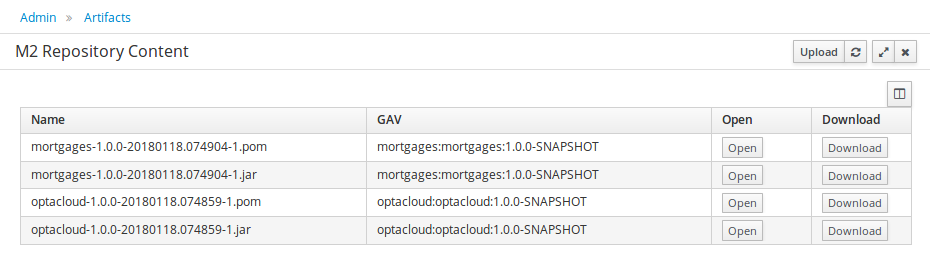

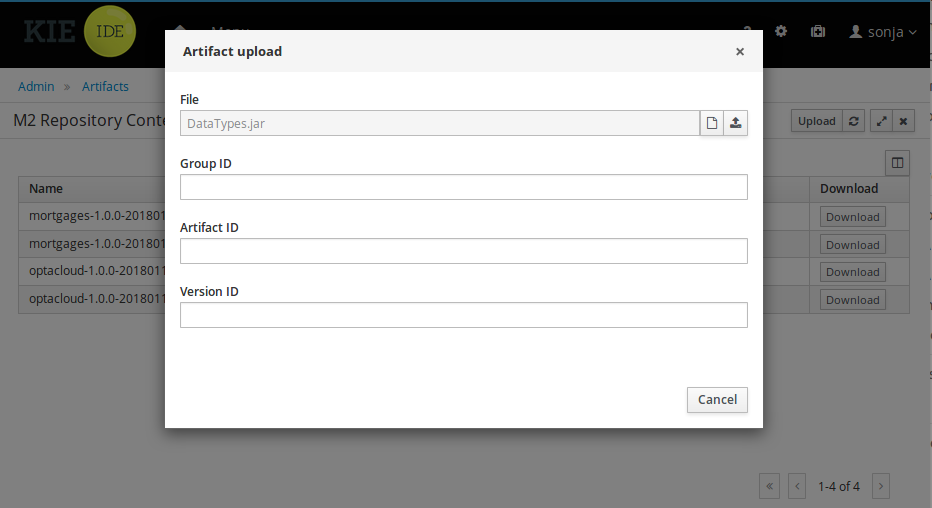

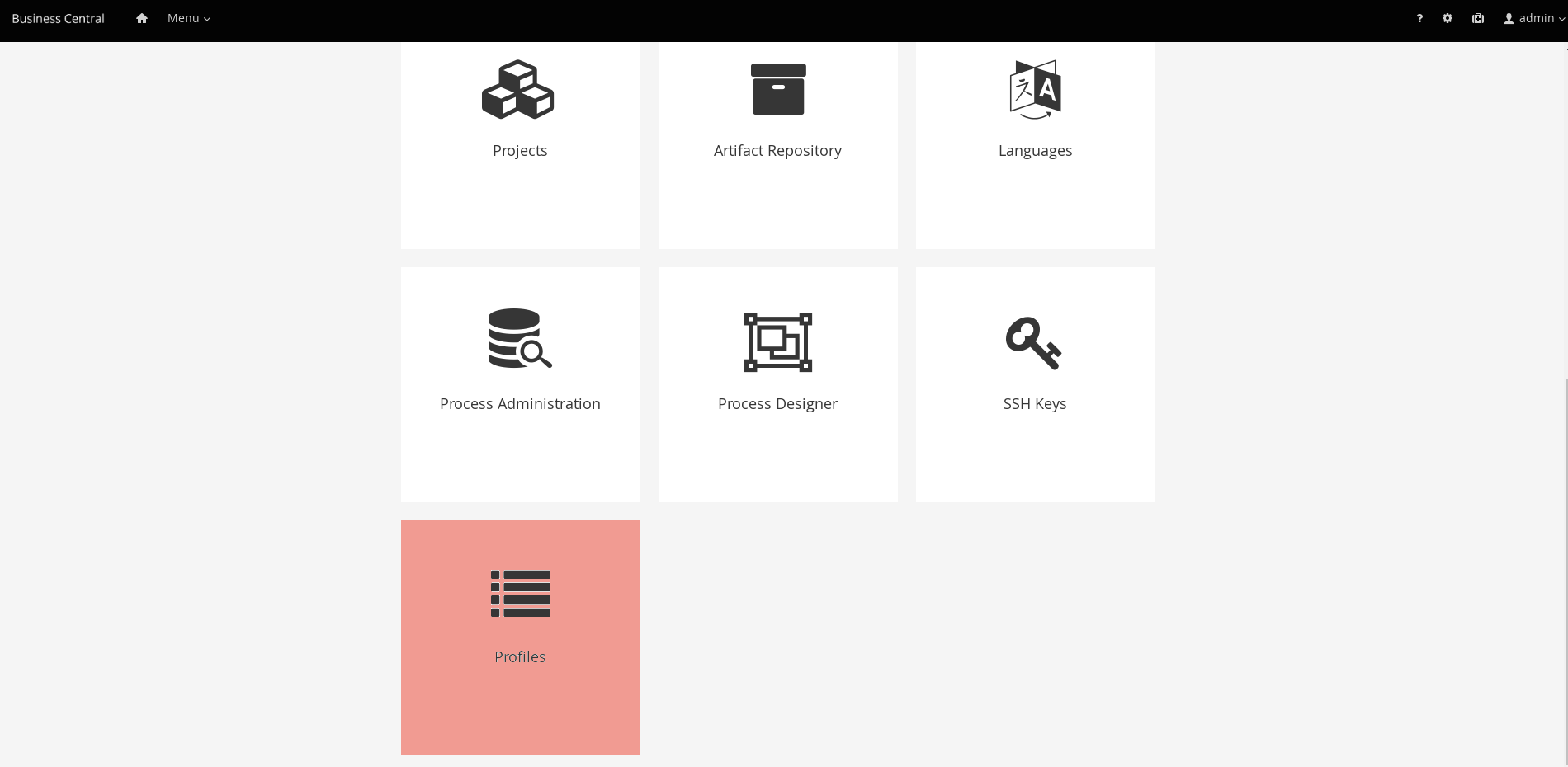

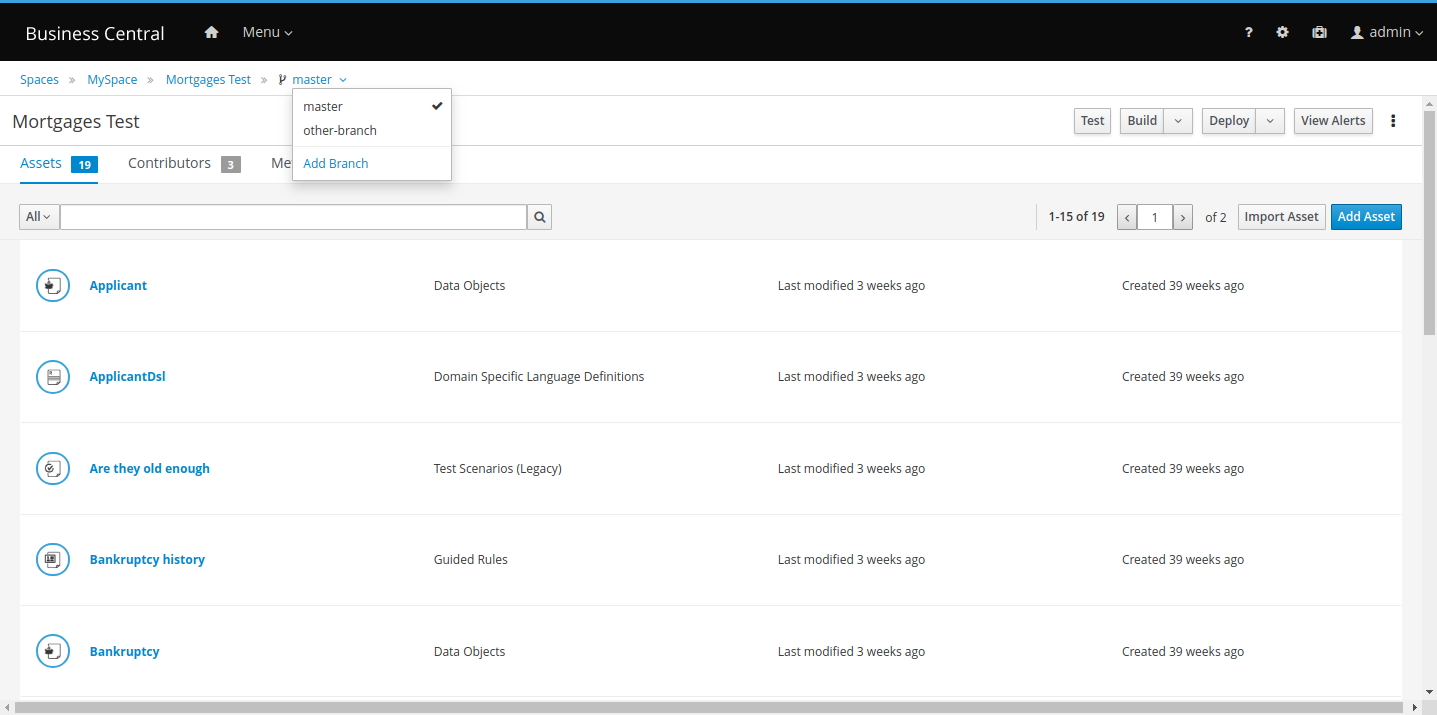

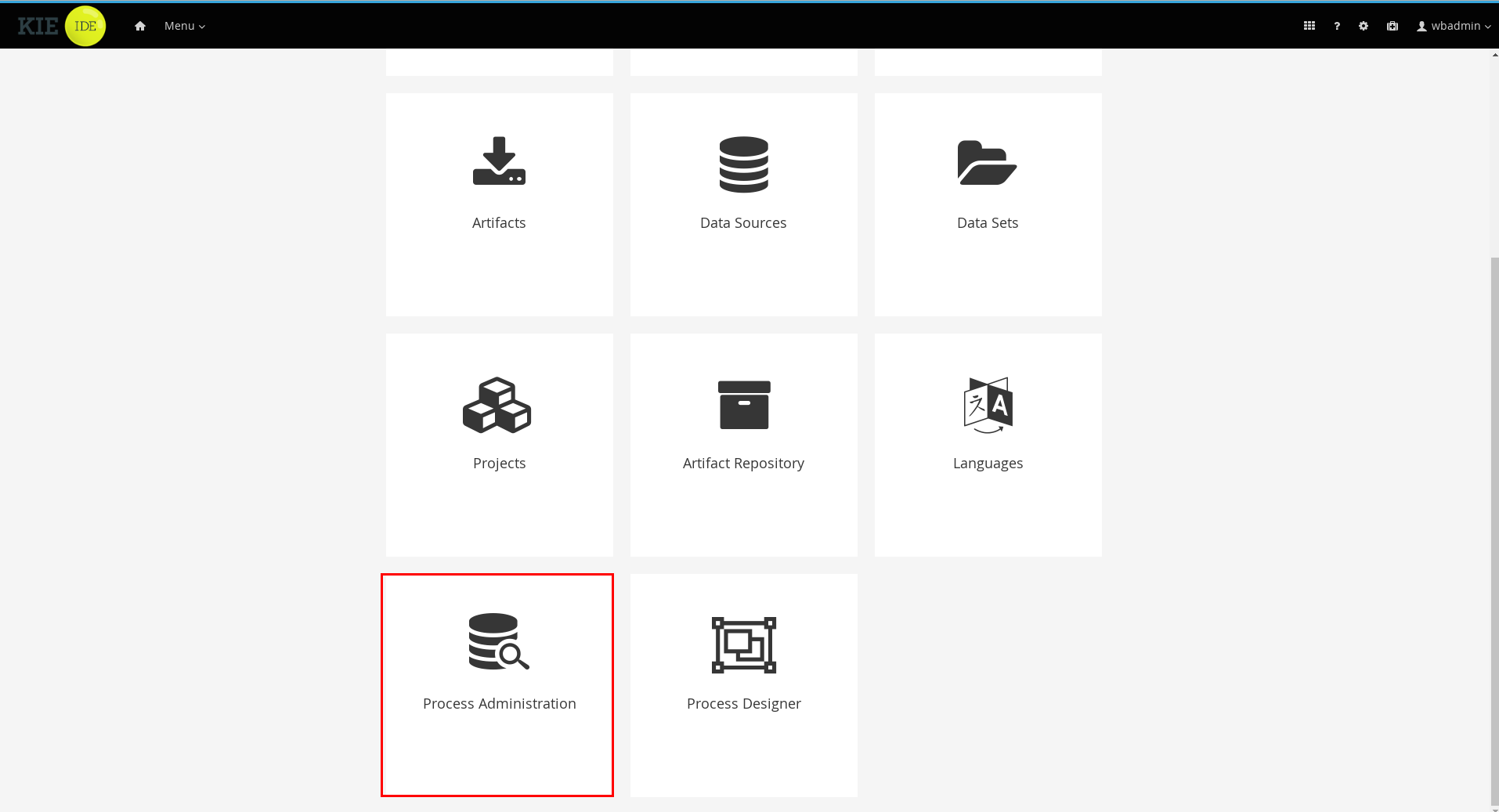

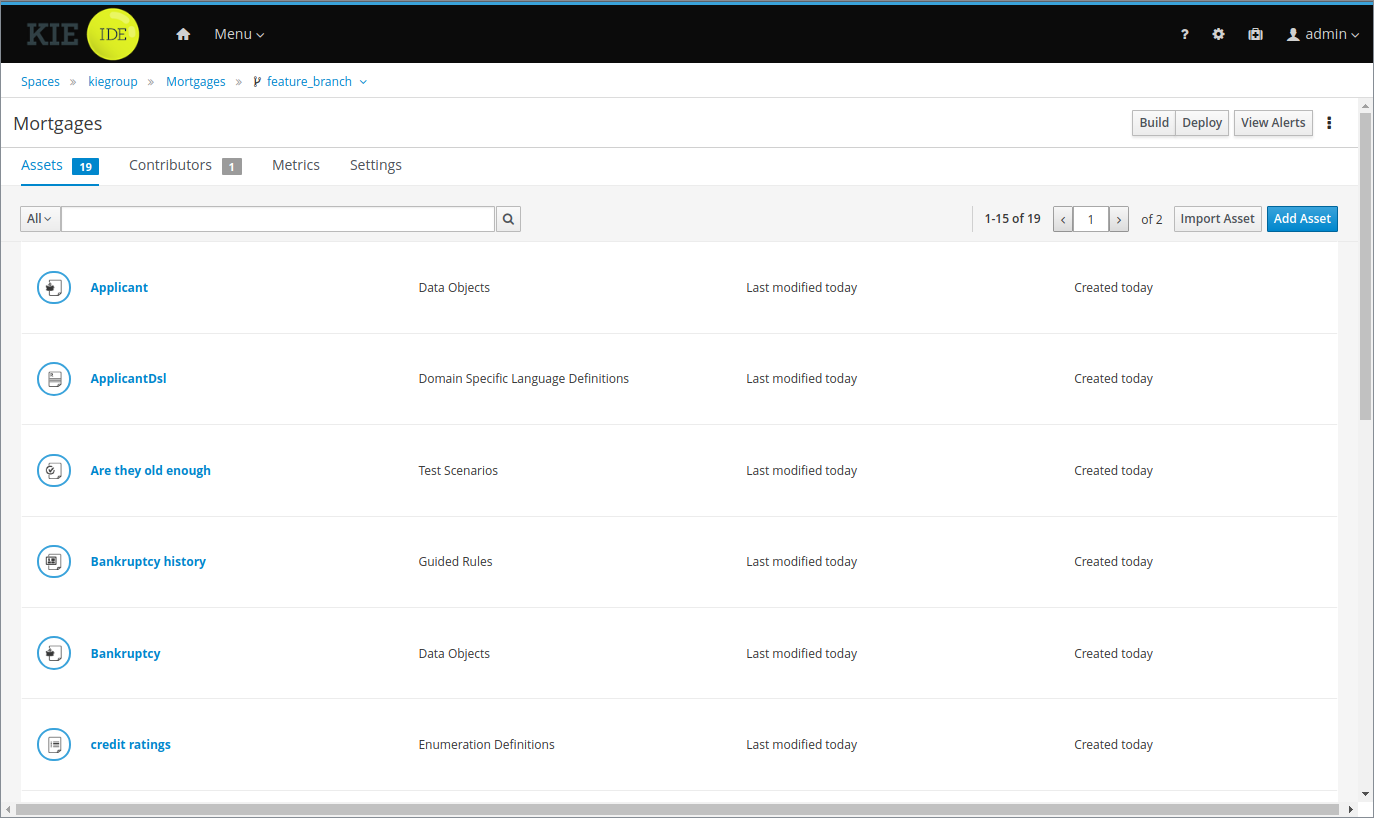

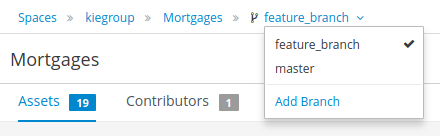

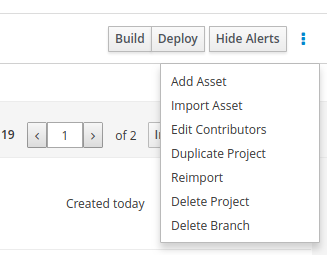

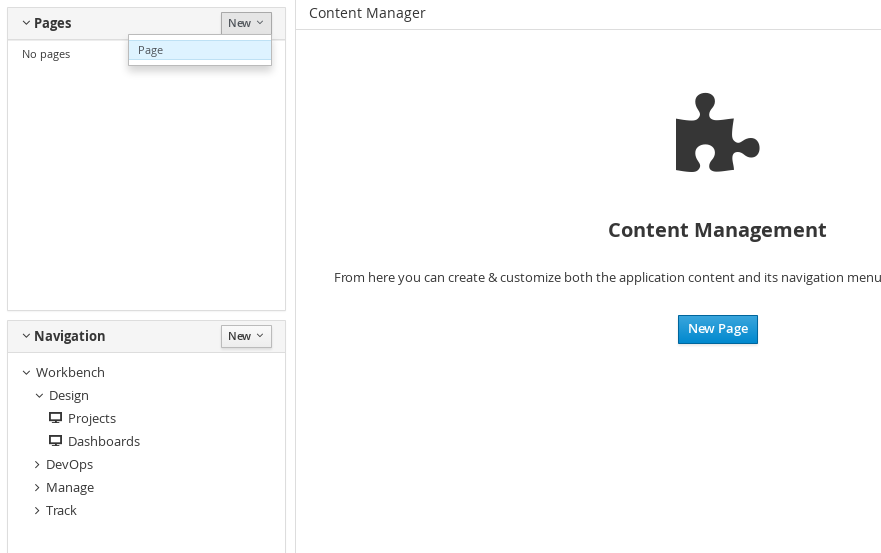

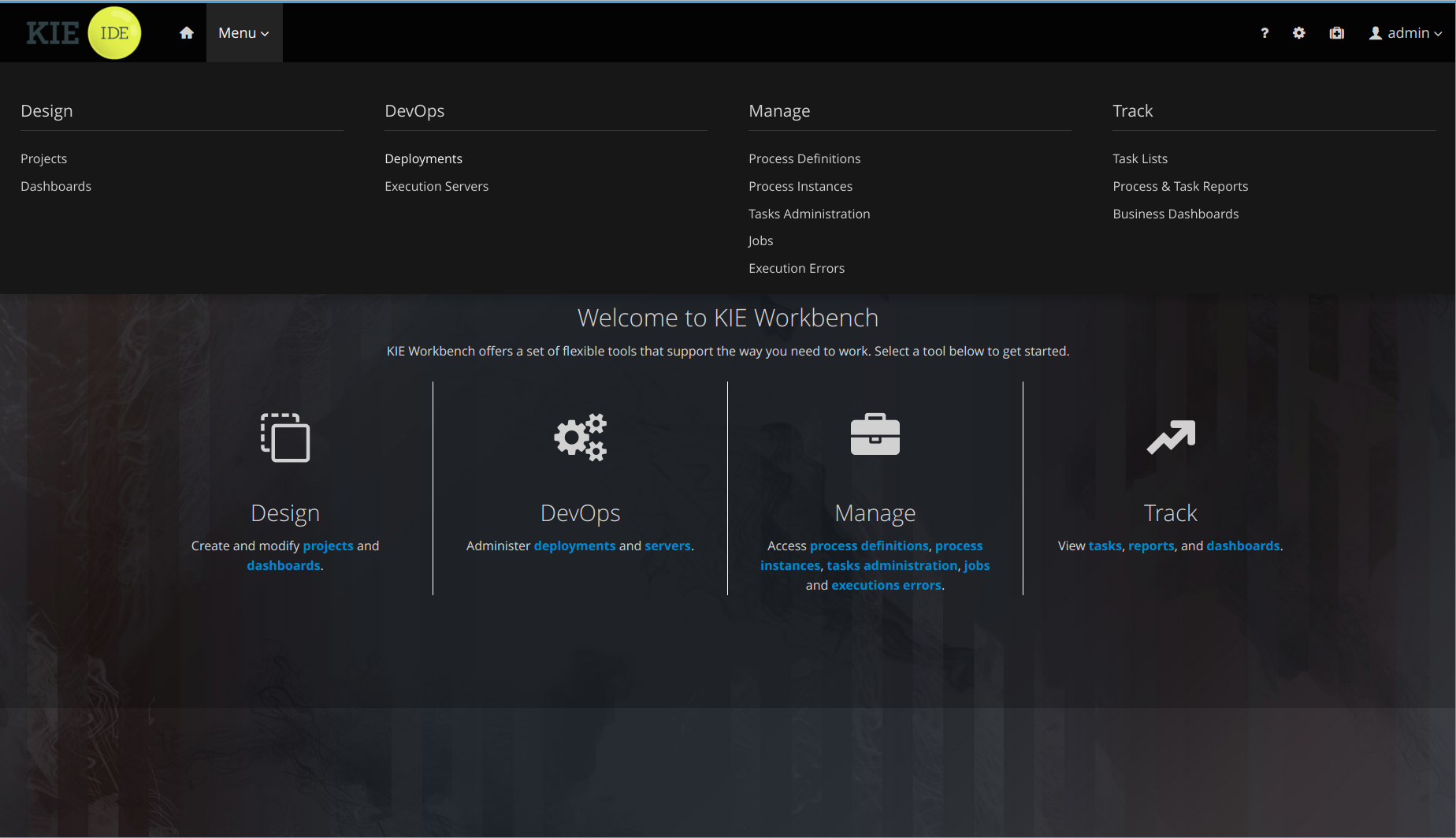

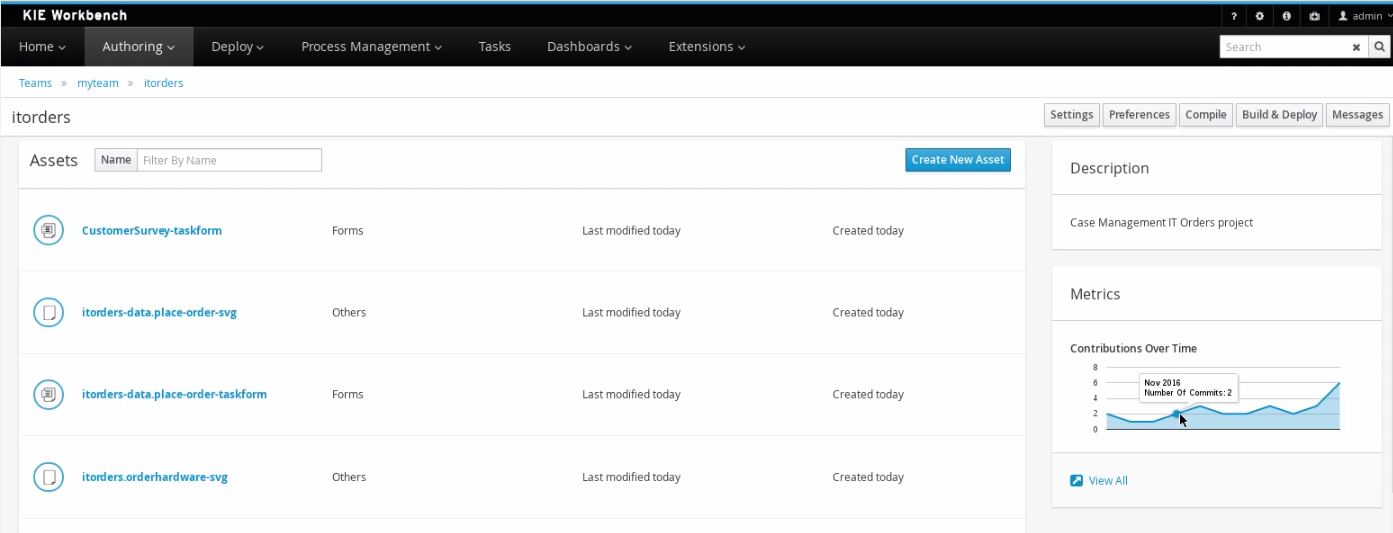

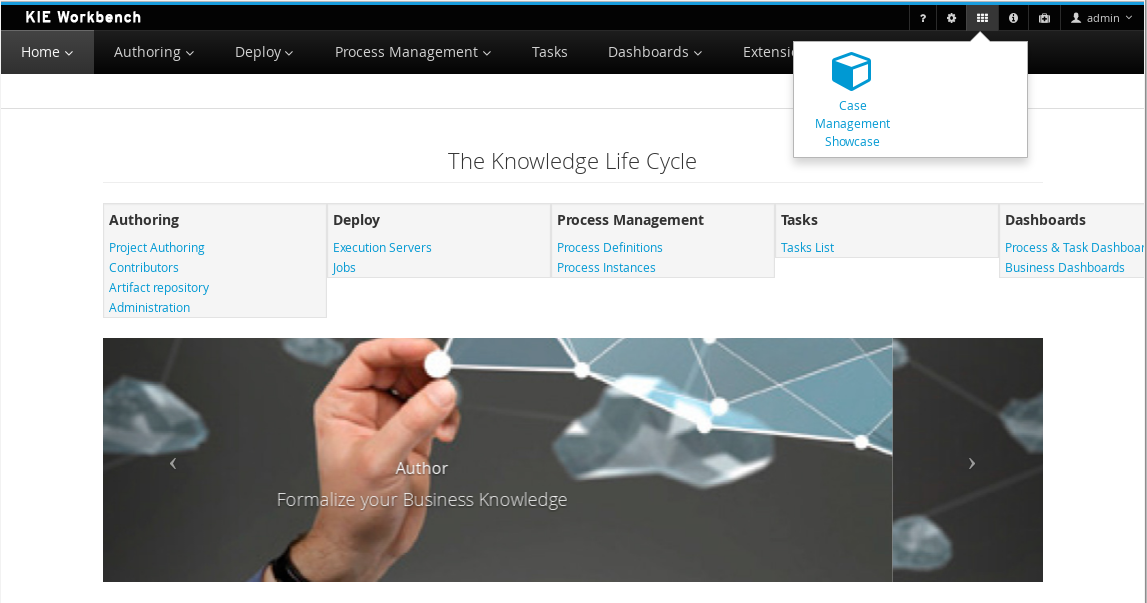

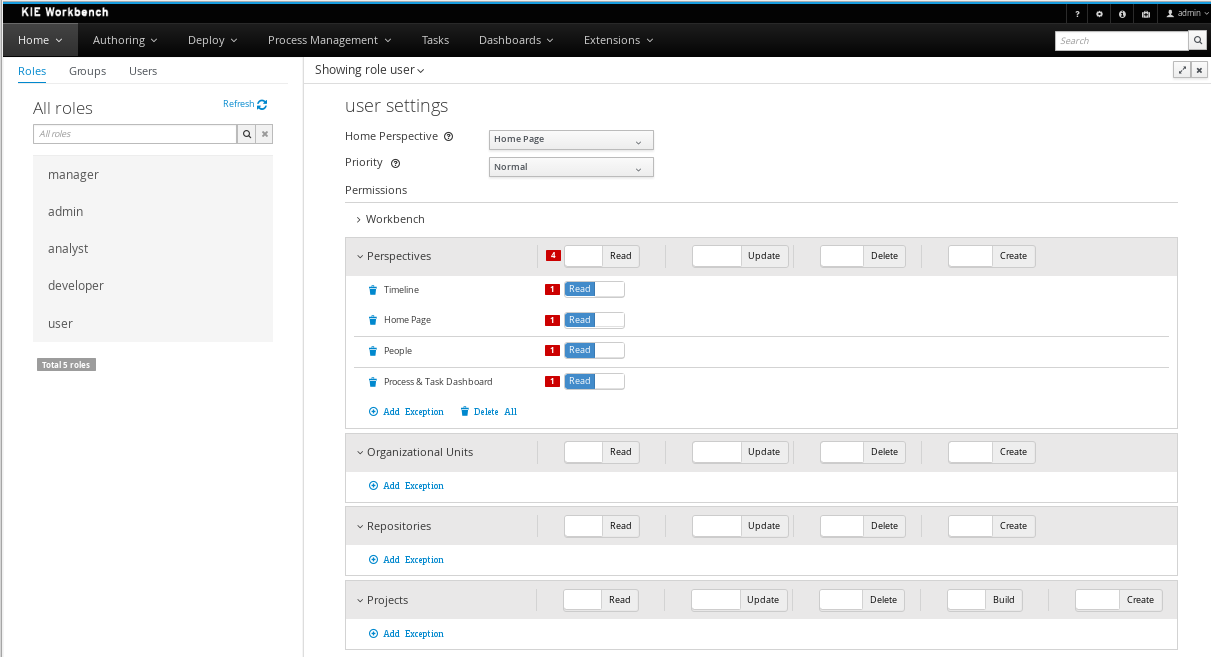

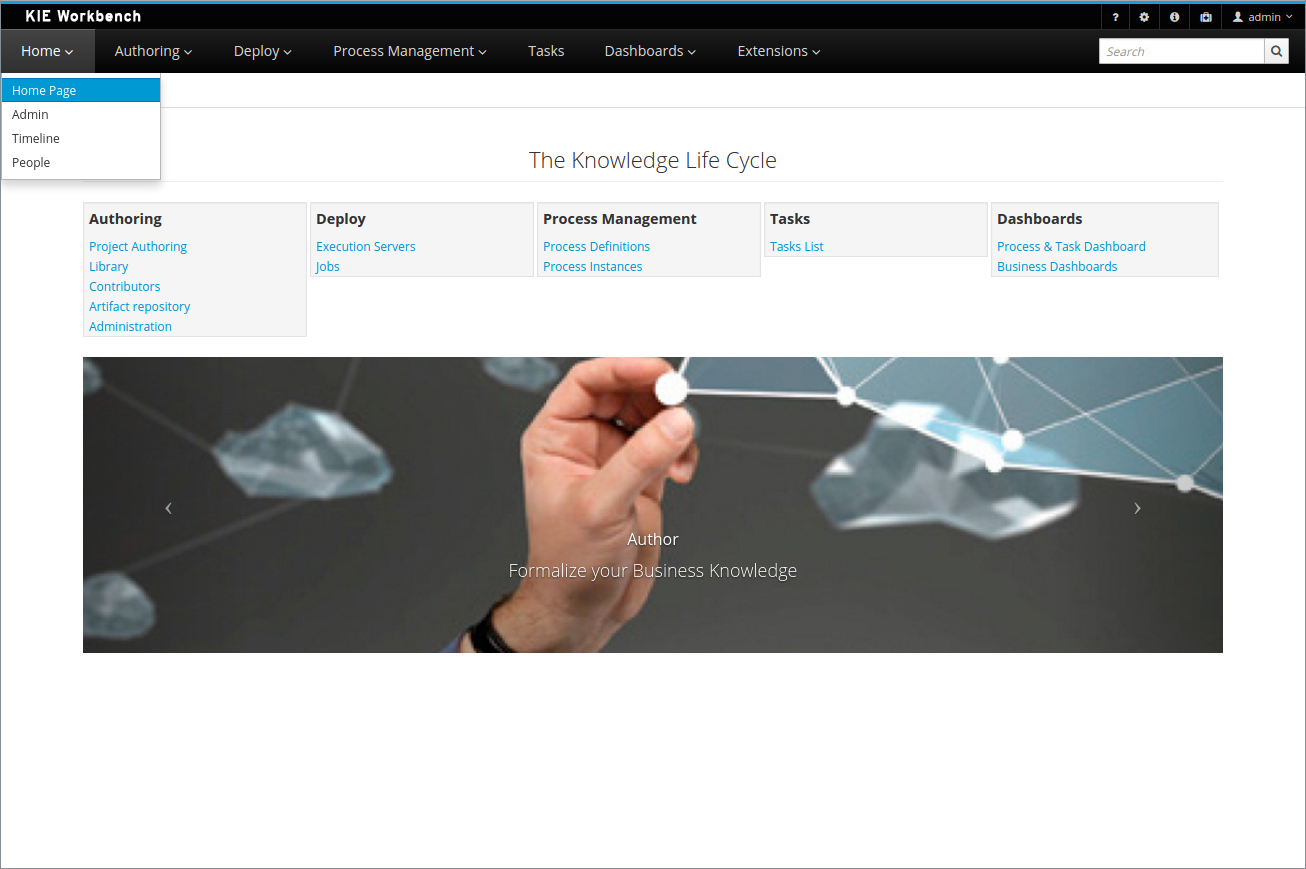

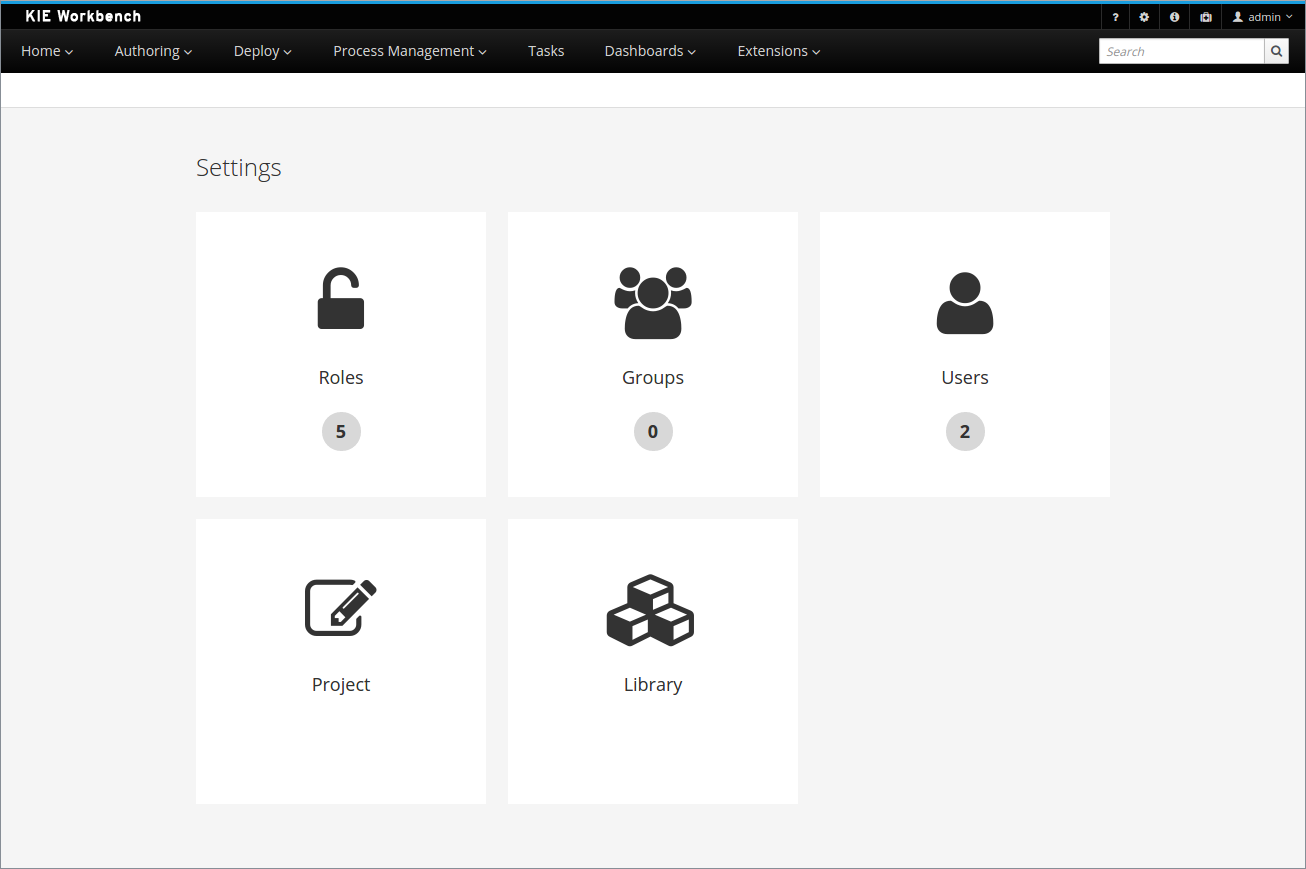

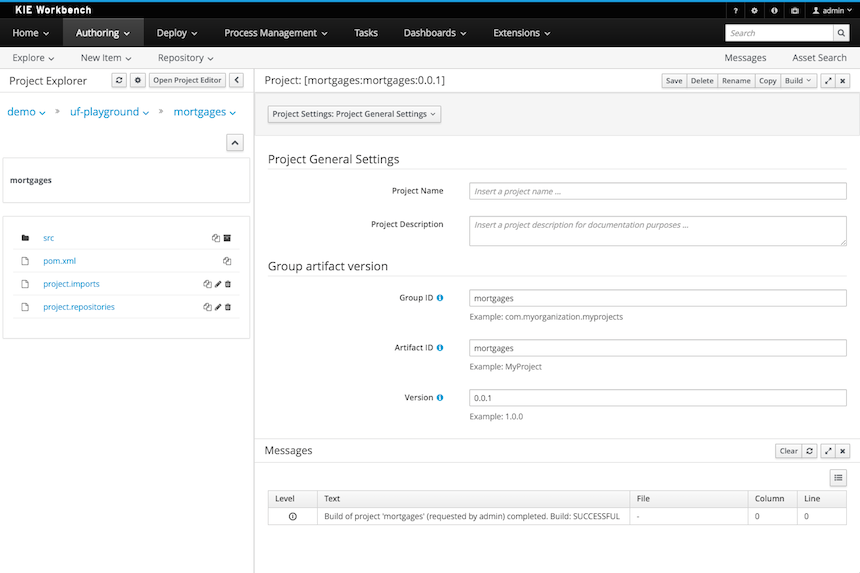

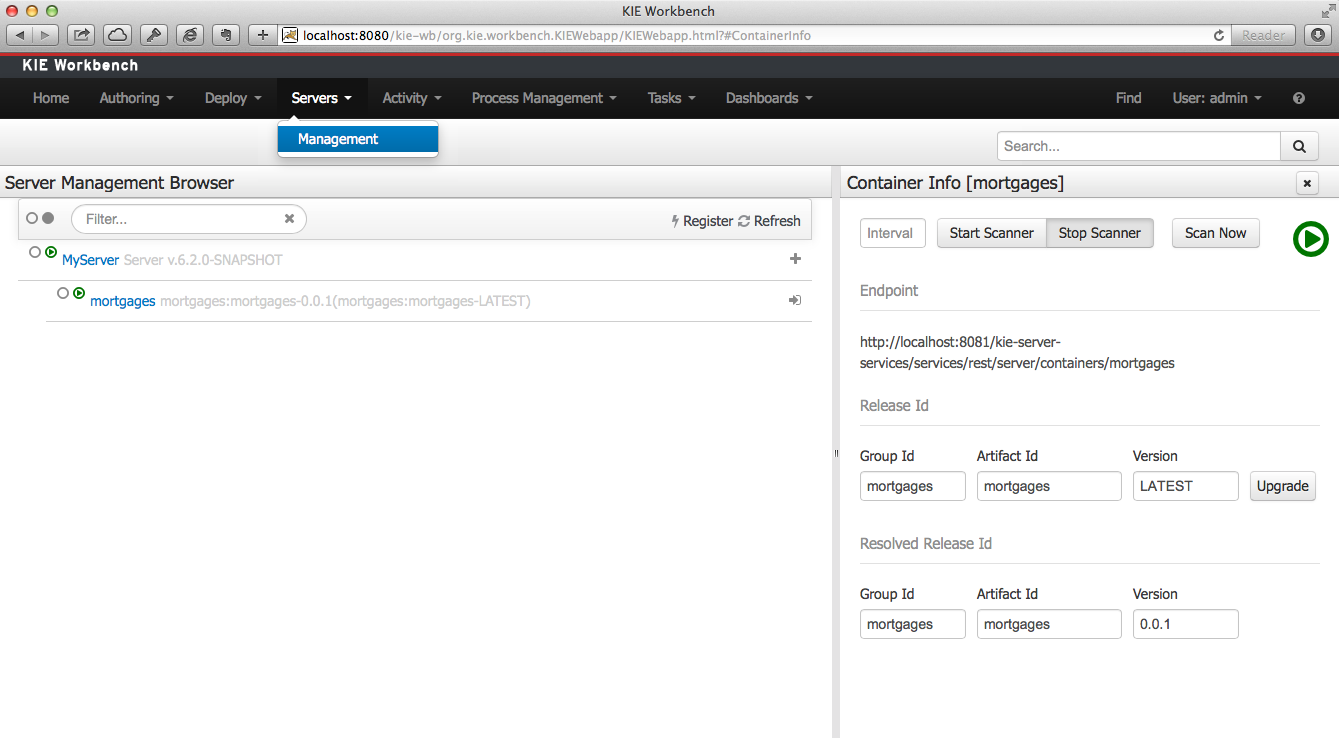

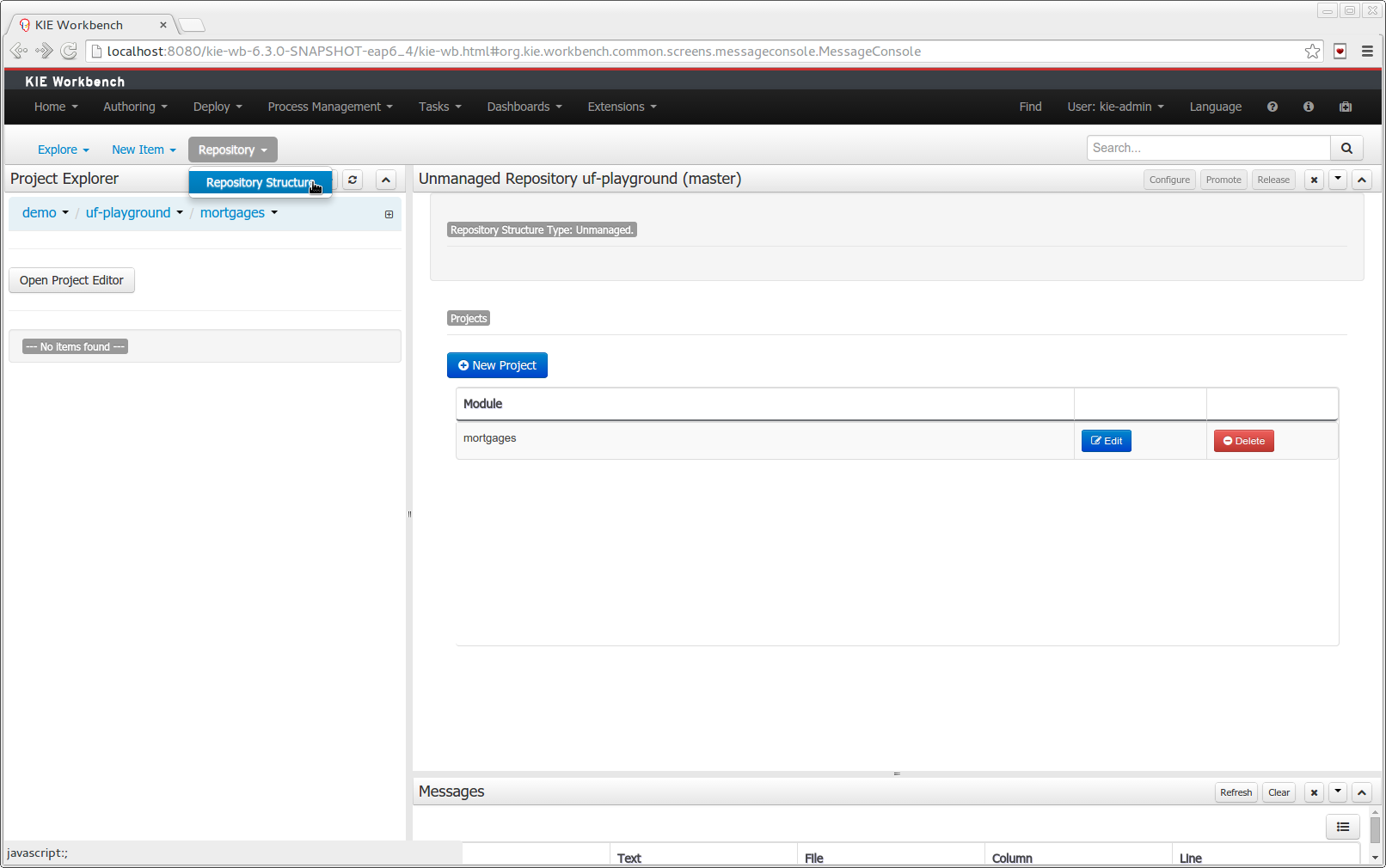

1.4. Business Central

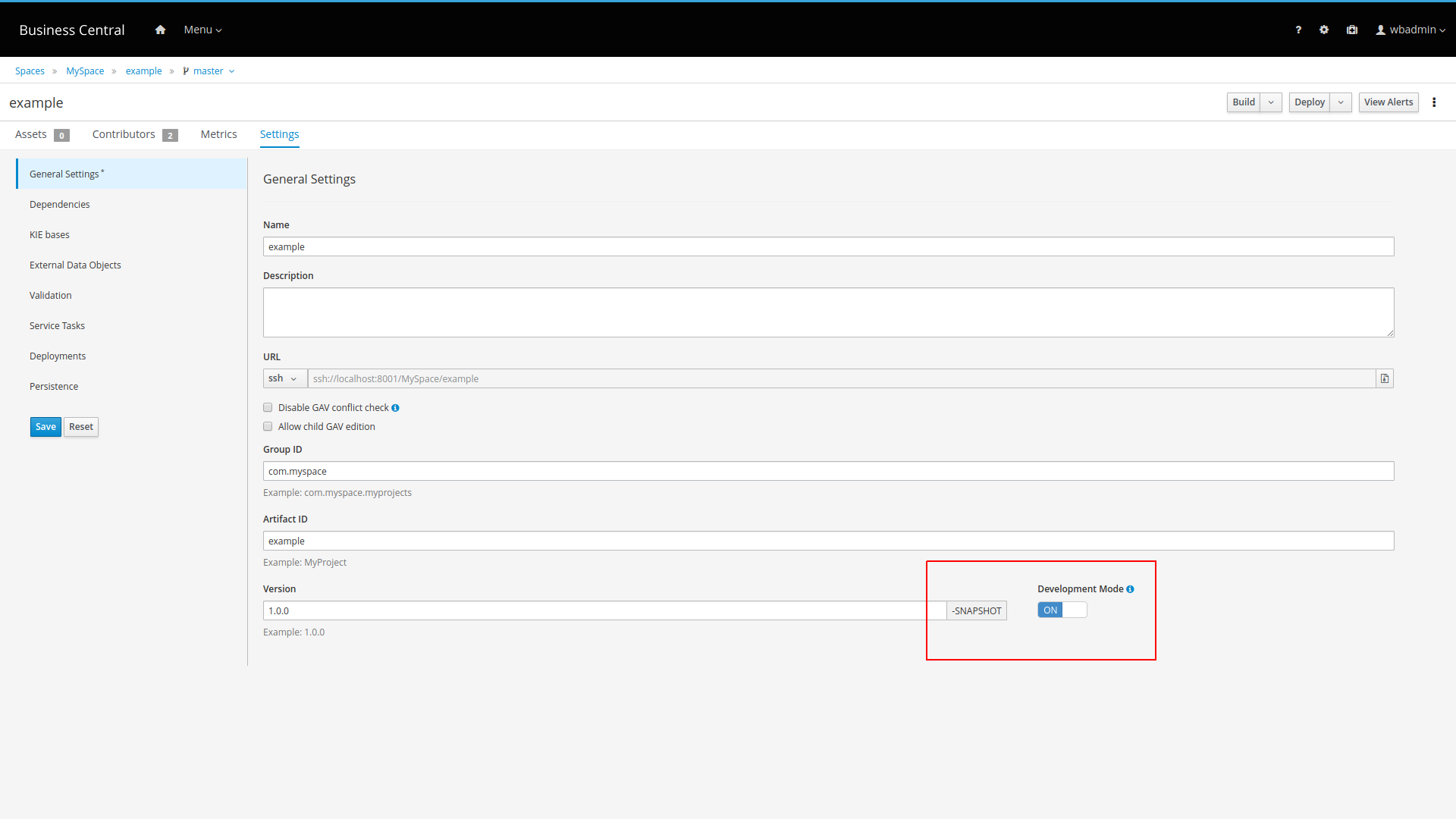

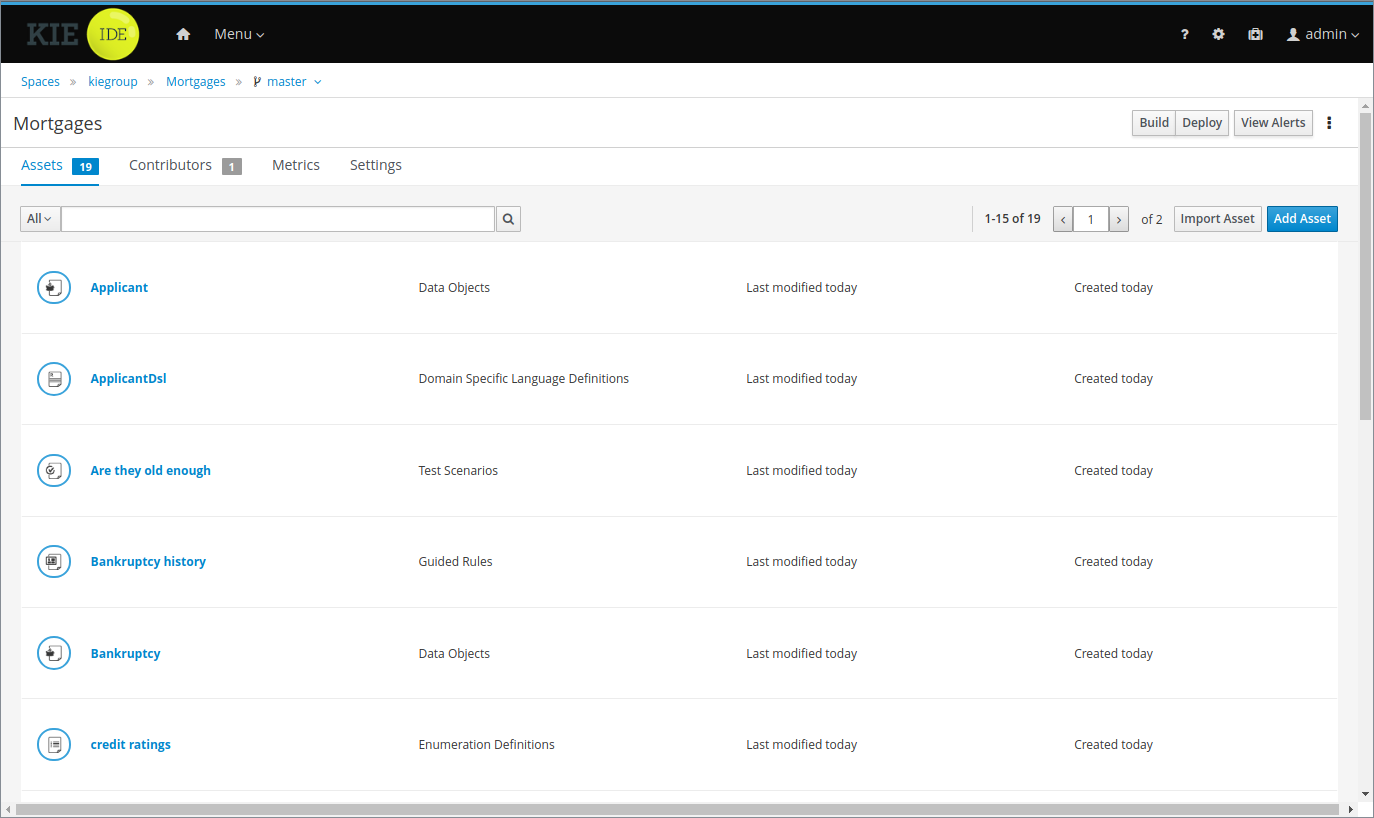

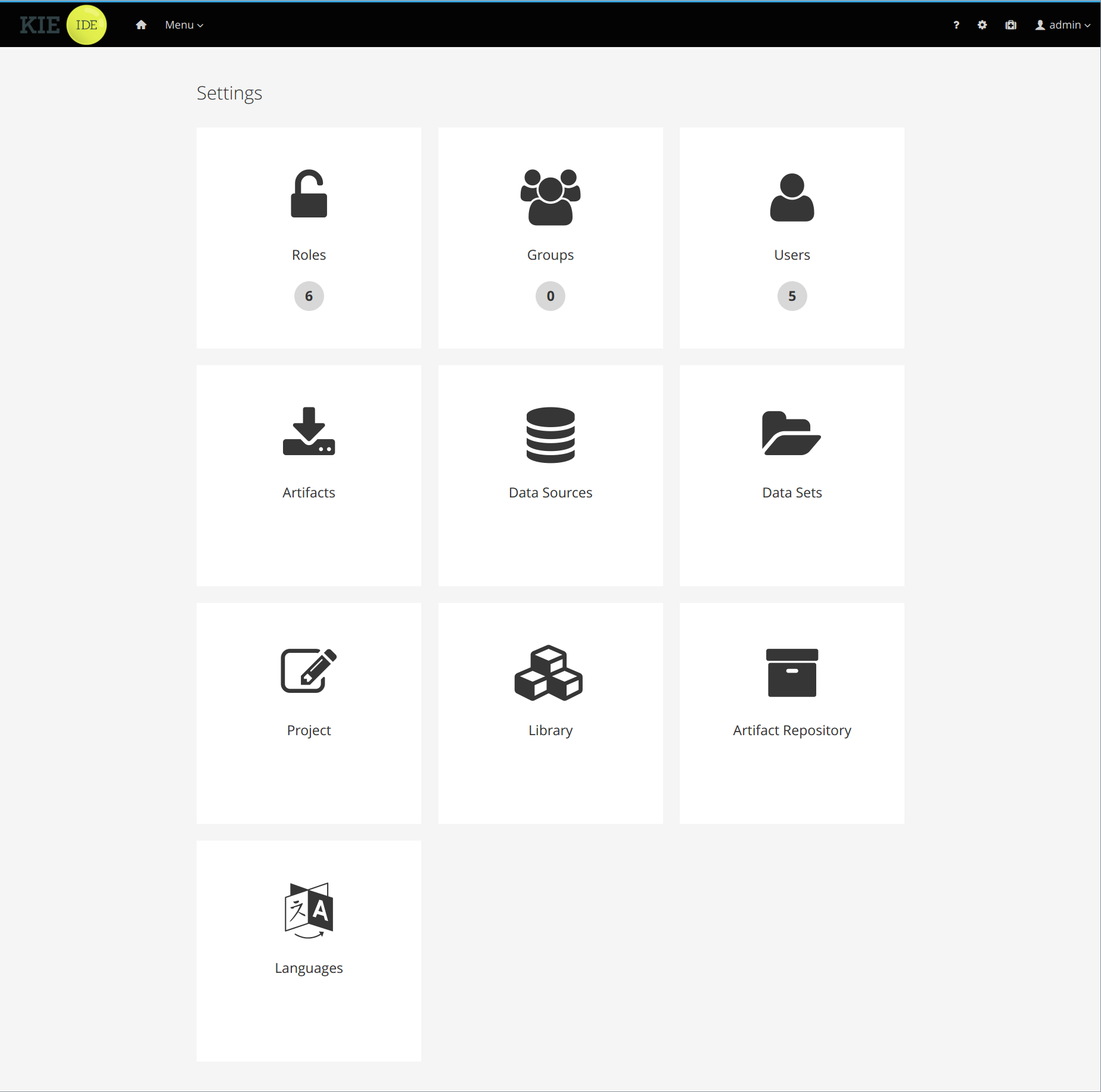

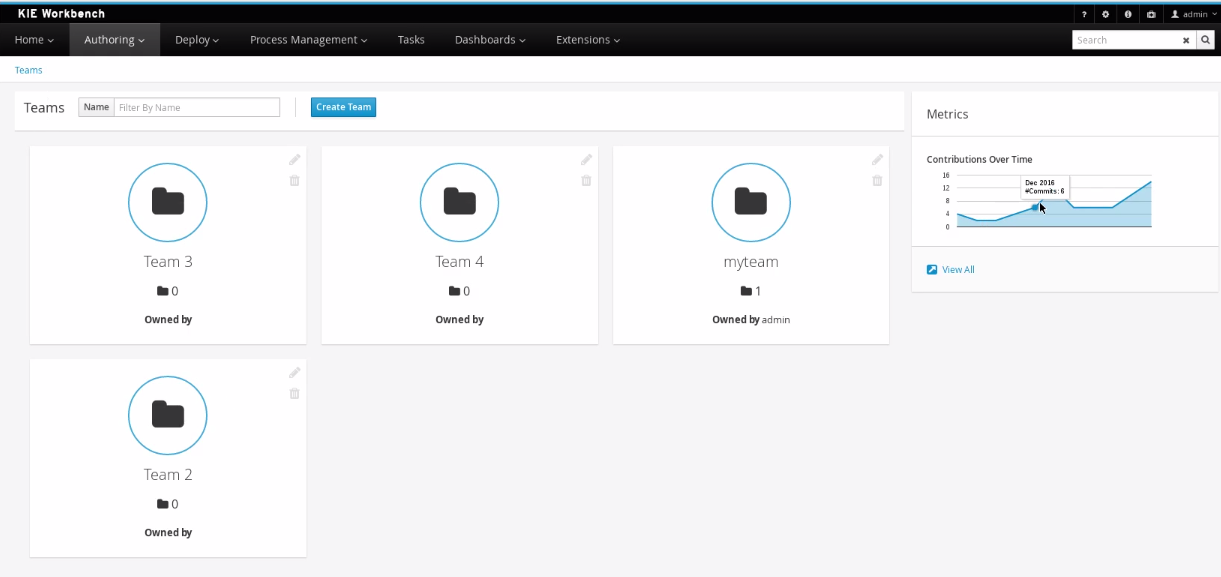

The Business Central web-based application covers the complete life cycle of BPM projects, starting at the authoring phase and going through implementation, execution and monitoring. It combines a series of web-based tools into one configurable solution to manage all assets and runtime data needed for the business solution.

It supports the following:

-

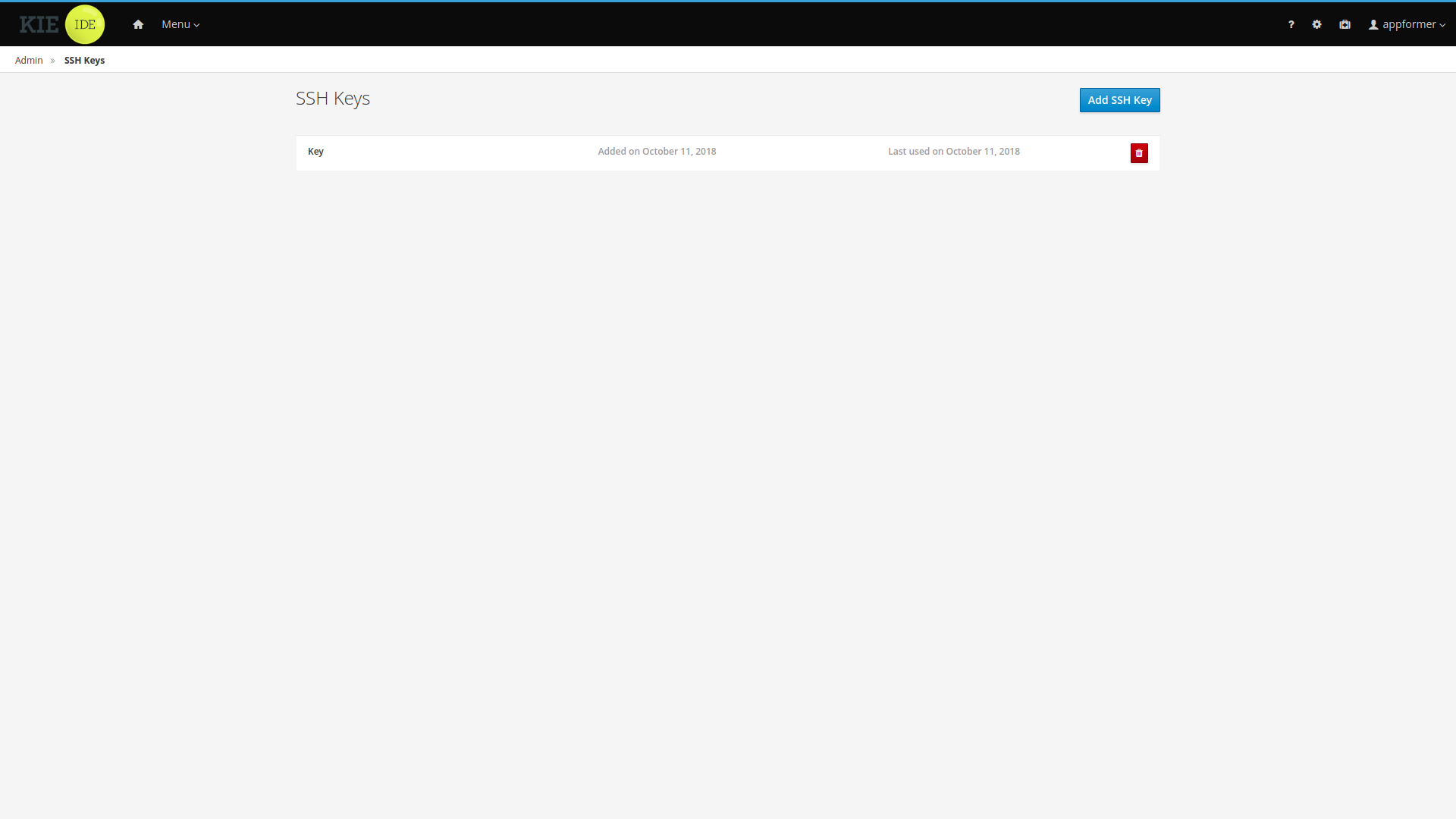

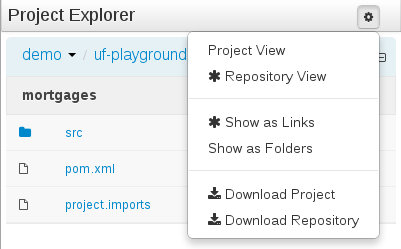

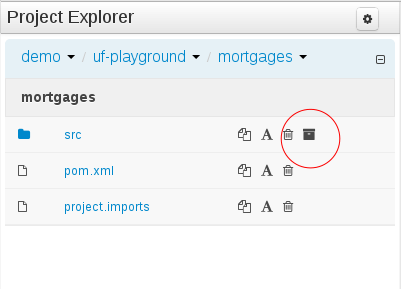

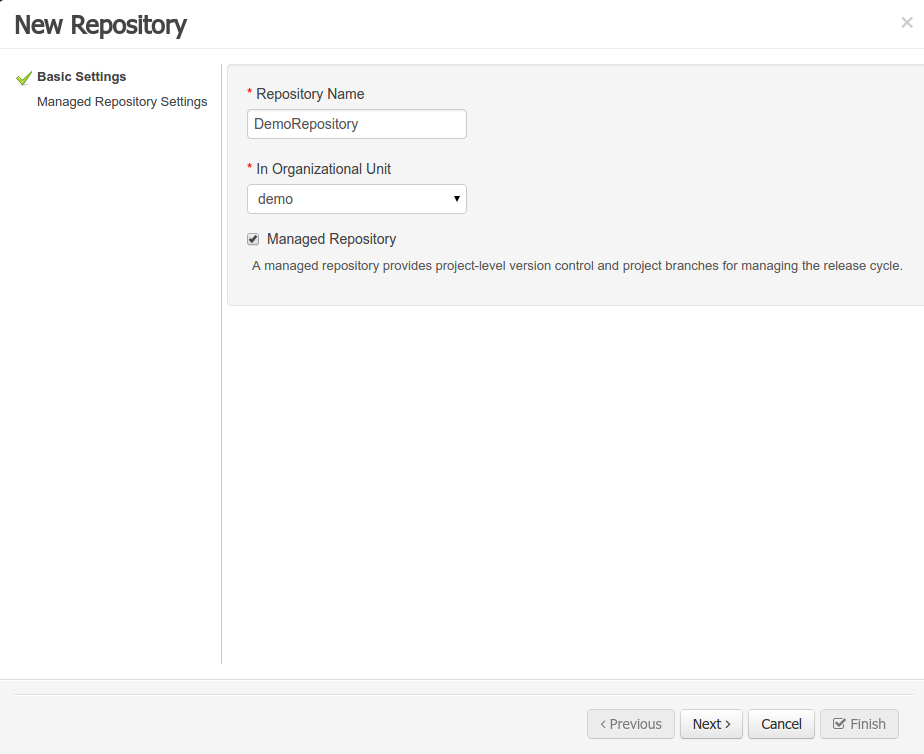

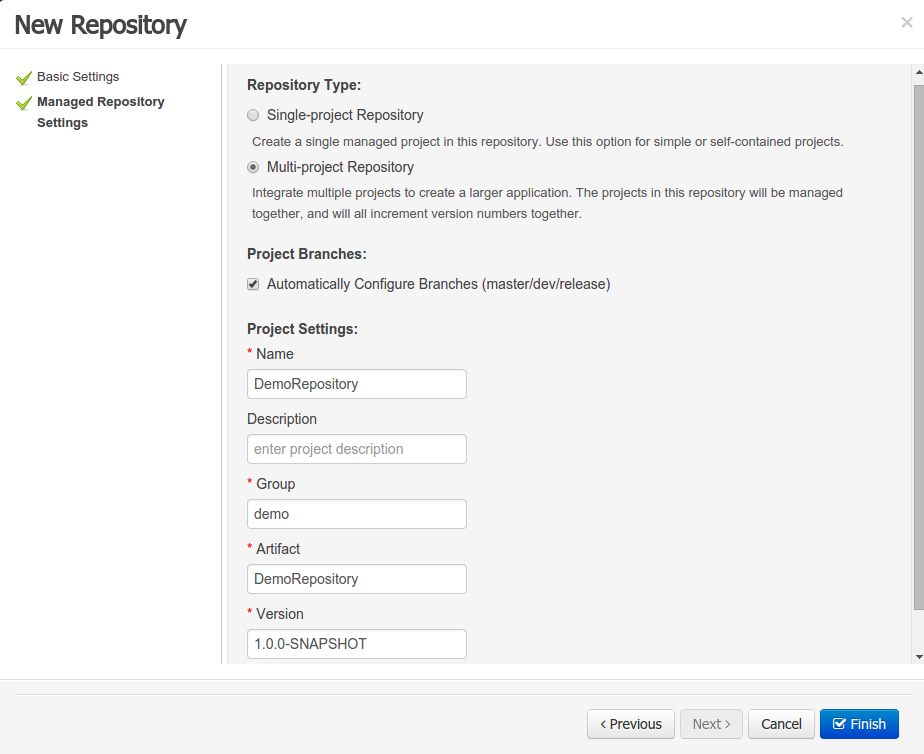

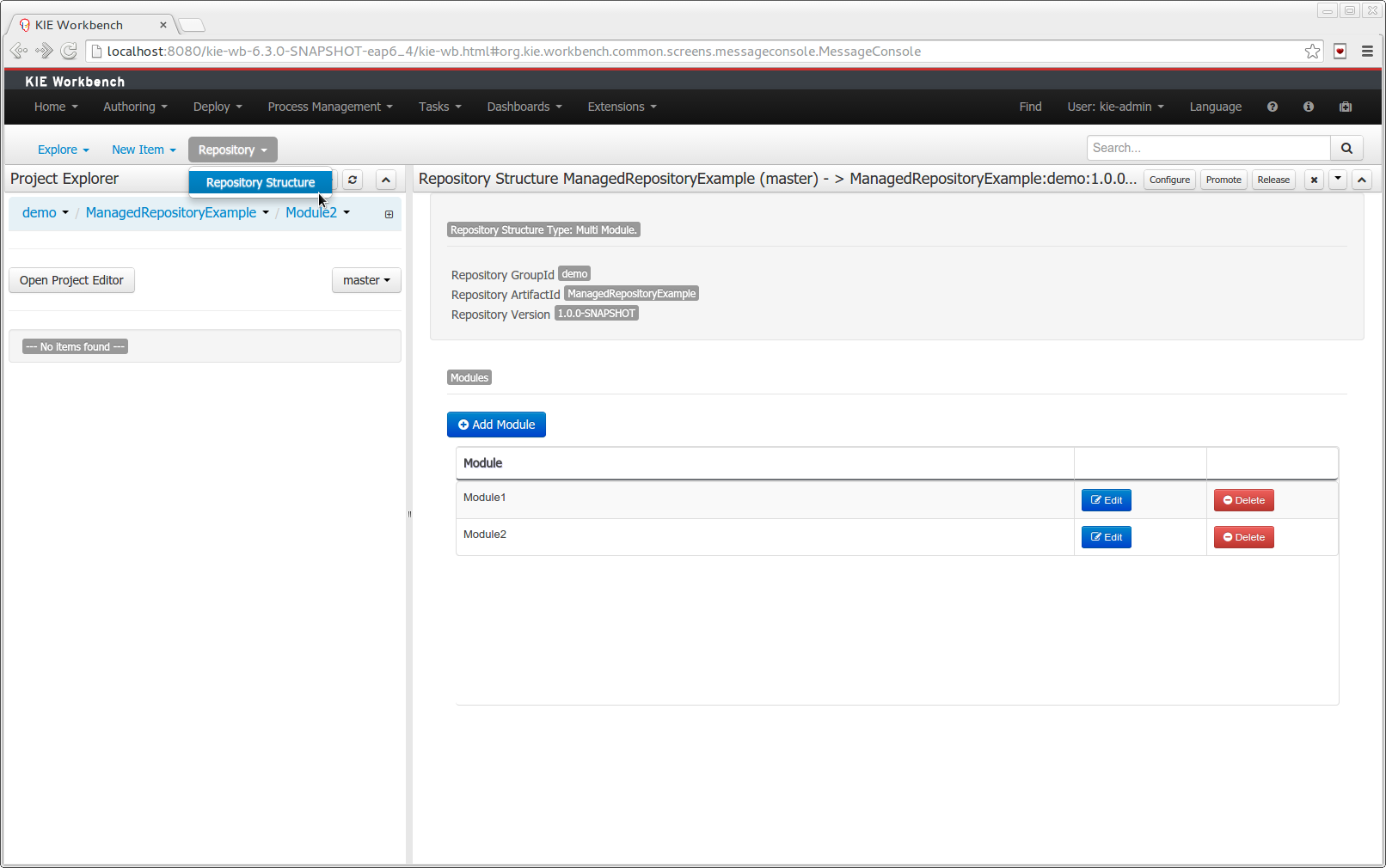

A repository service to store your business processes and related artifacts, using a Git repository, which supports versioning, remote Git access (as a file system) and access via REST.

-

A web-based user interface to manage your business processes, targeted towards business users; it also supports the visualization (and editing) of your artifacts (the web-based editors like designer, data and form modeler are integrated here), but also categorisation, build and deployment, etc..

-

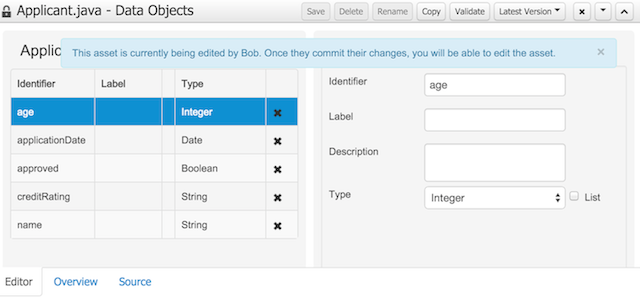

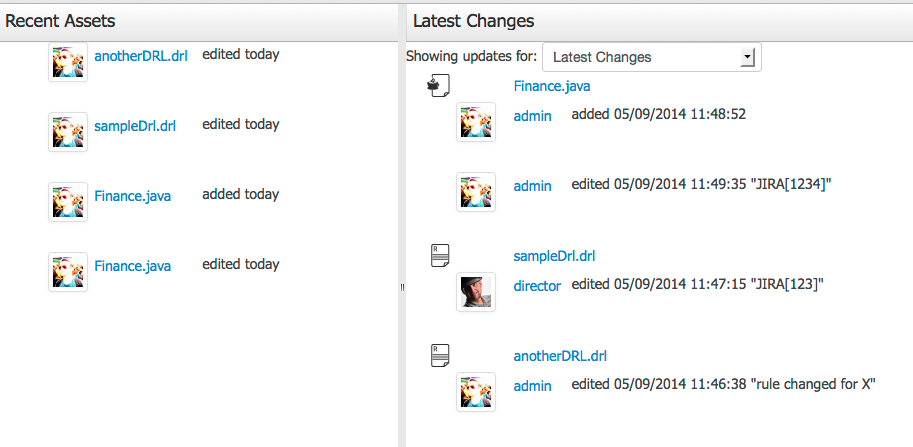

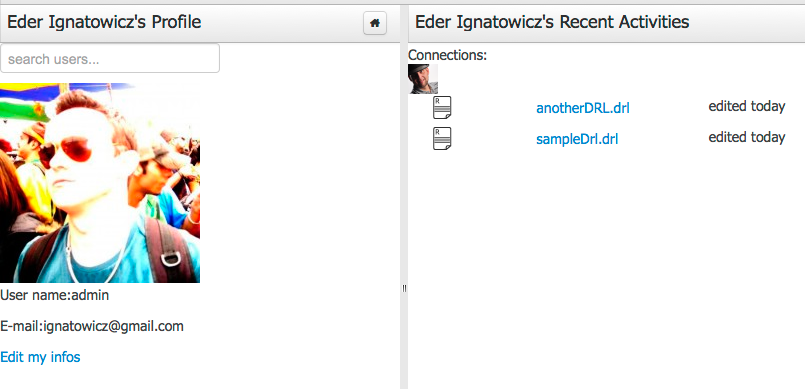

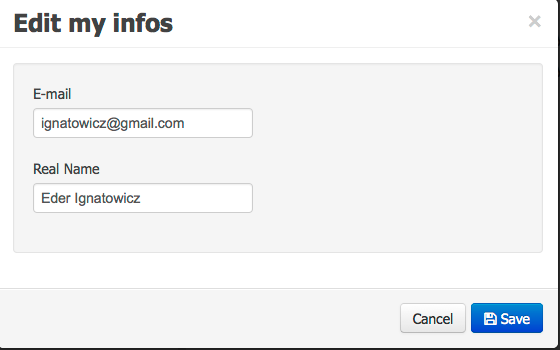

Collaboration features which enable multiple actors (for example business users and developers) to work together on the same project.

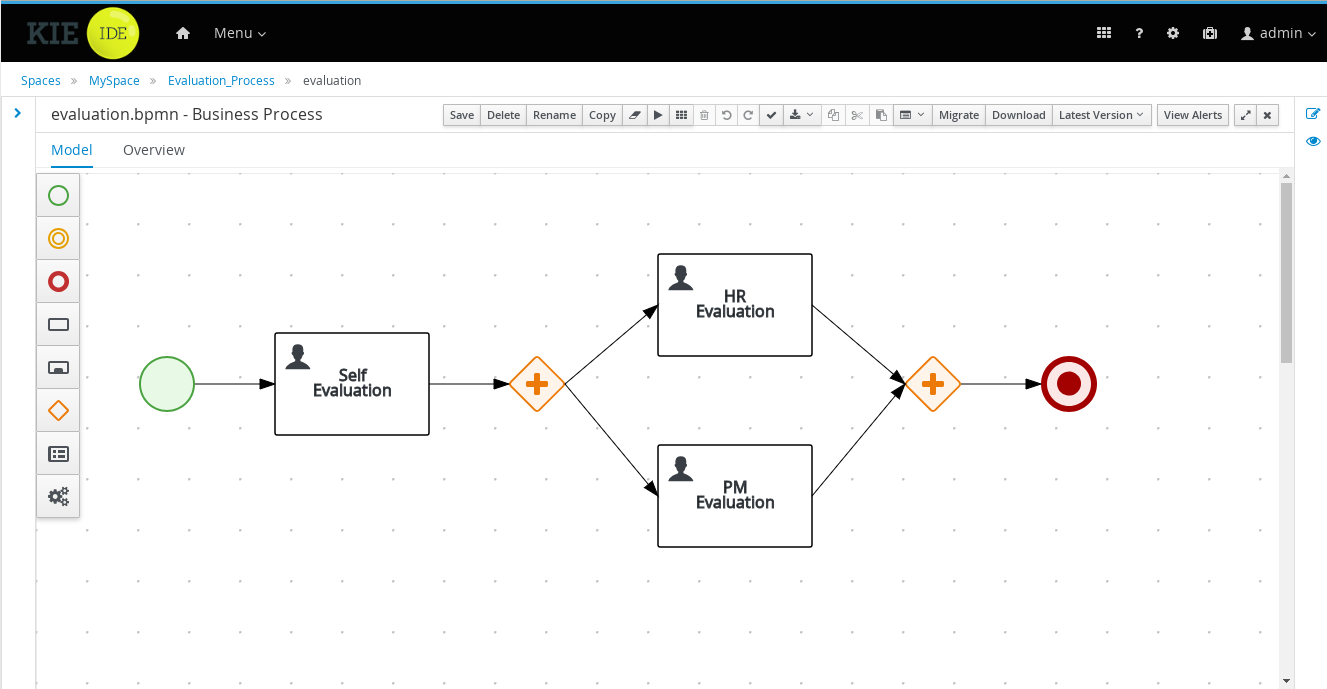

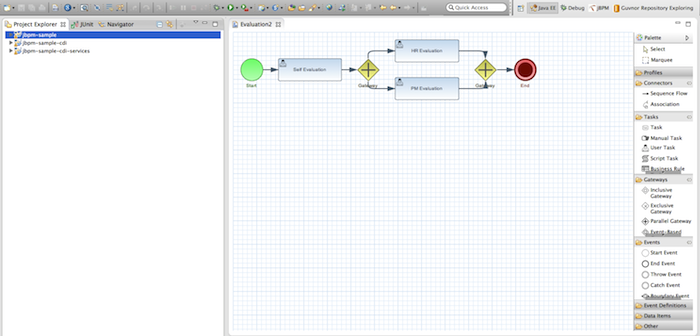

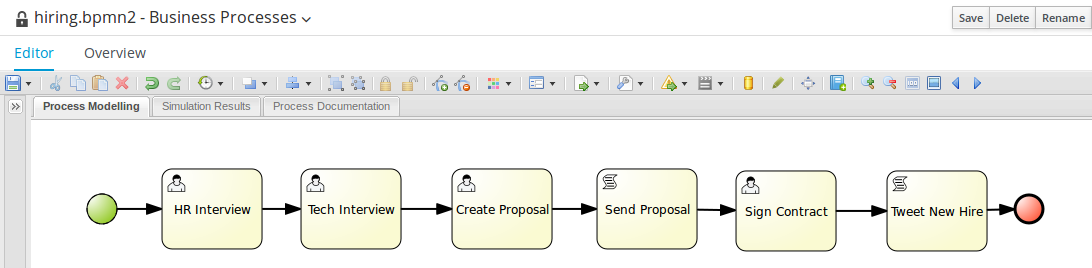

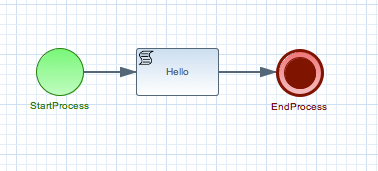

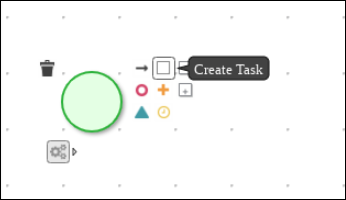

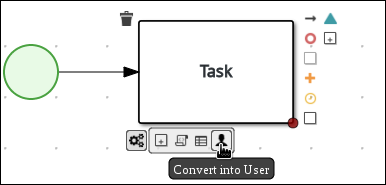

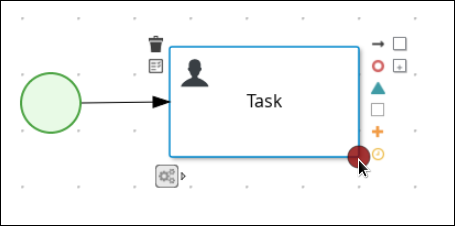

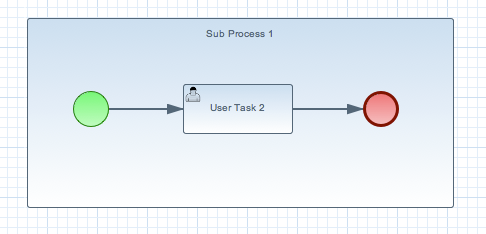

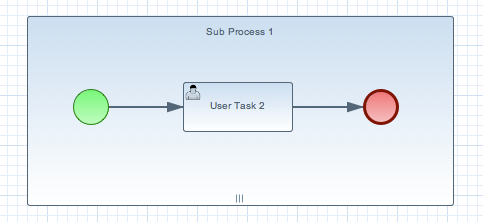

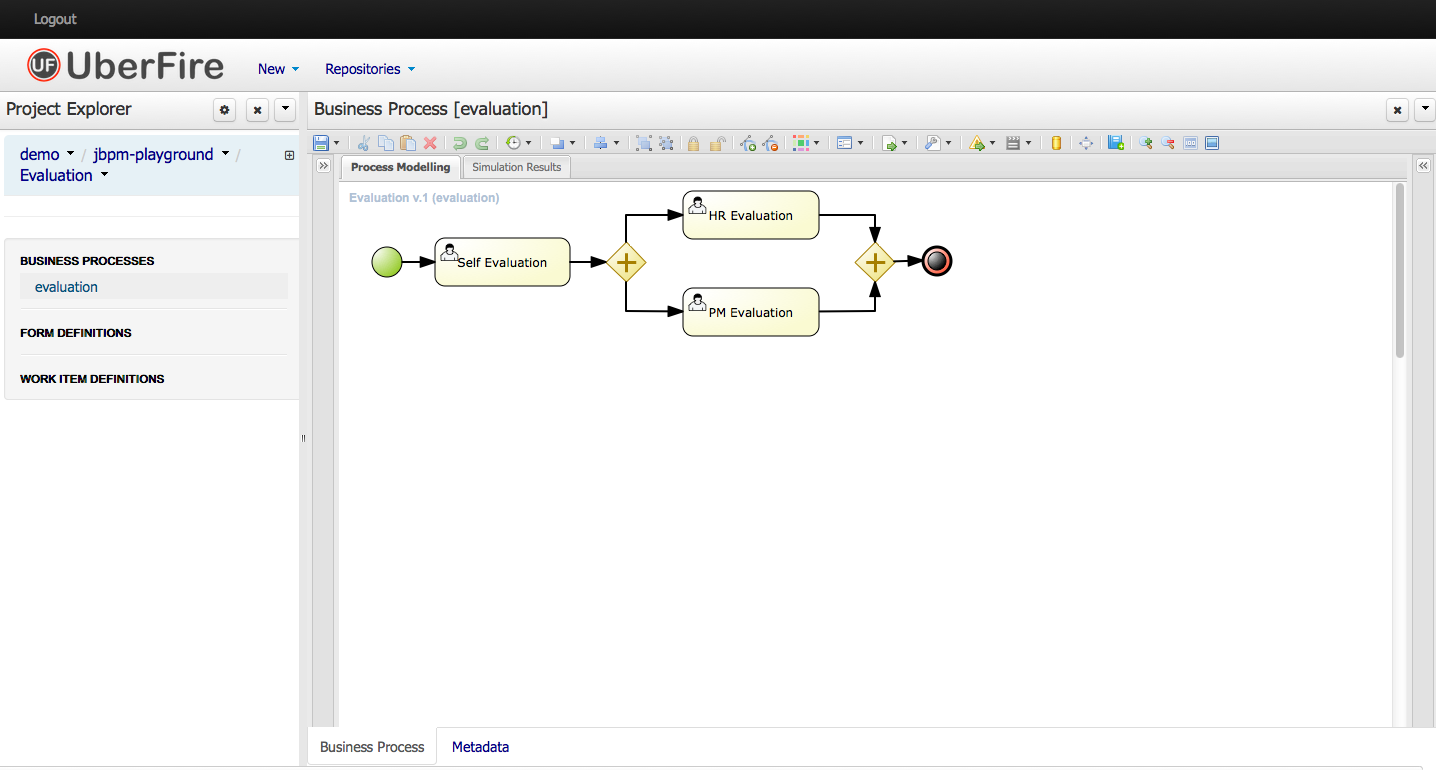

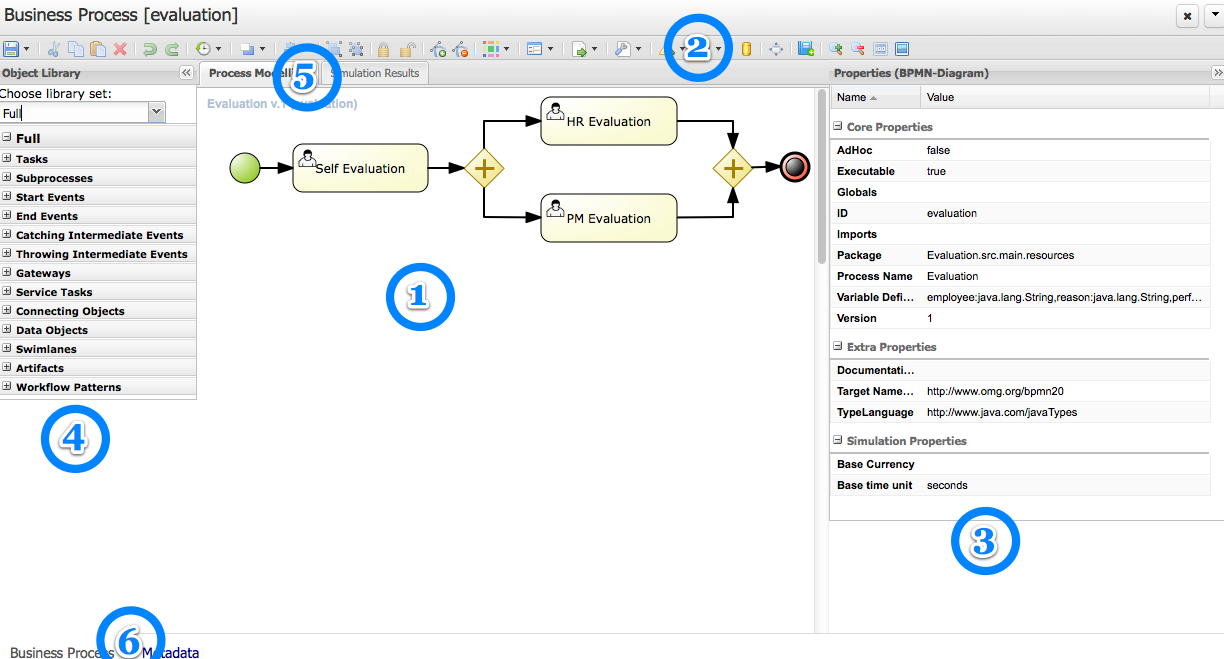

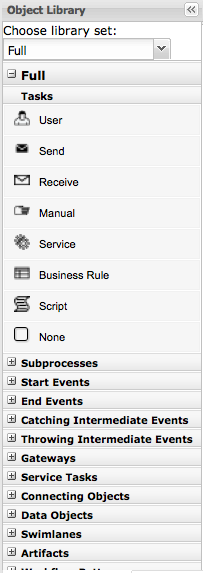

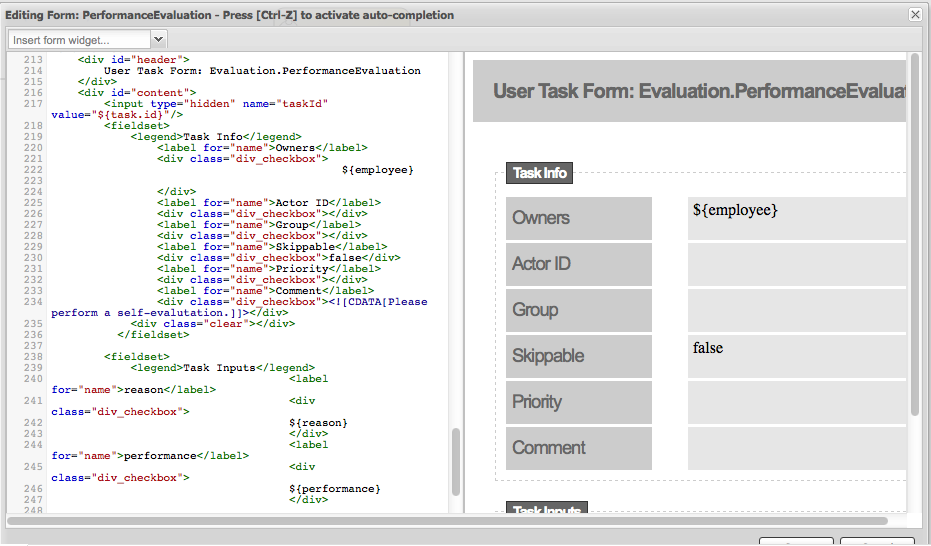

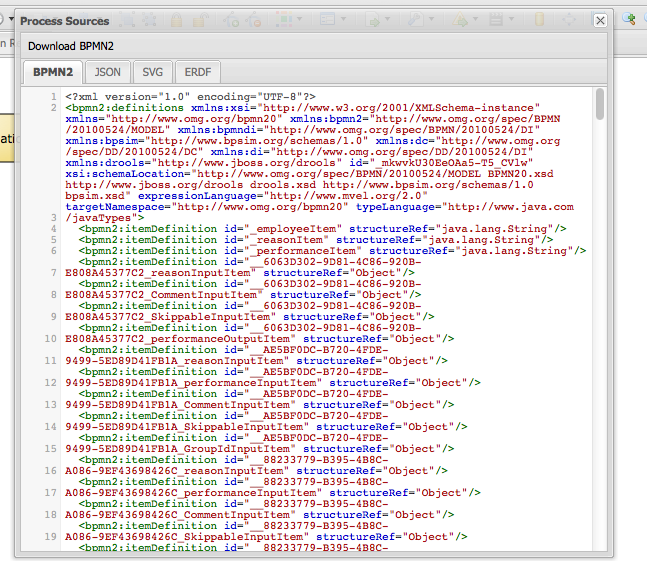

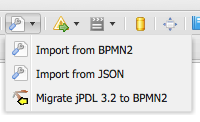

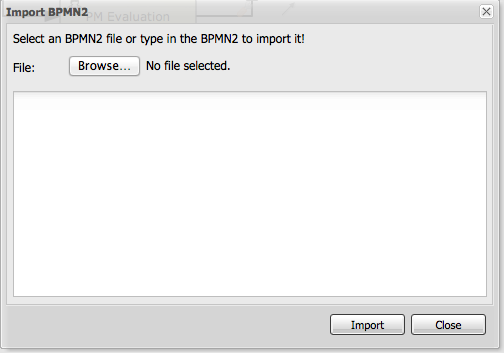

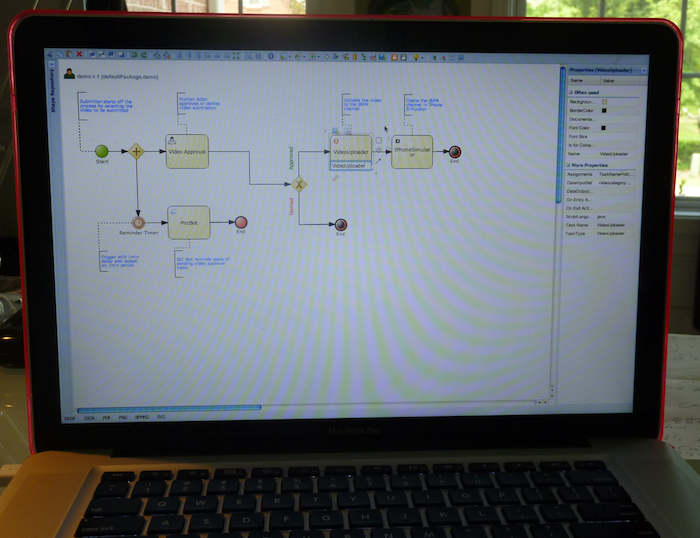

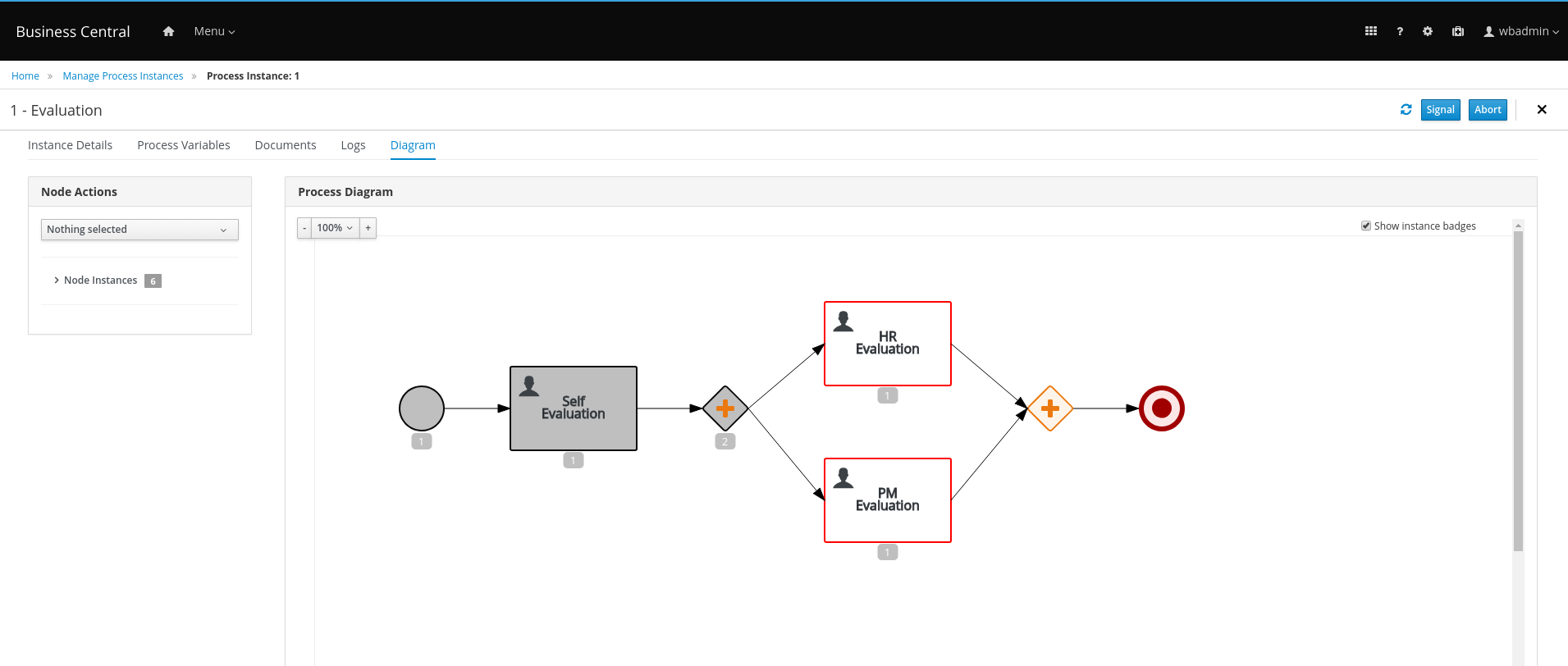

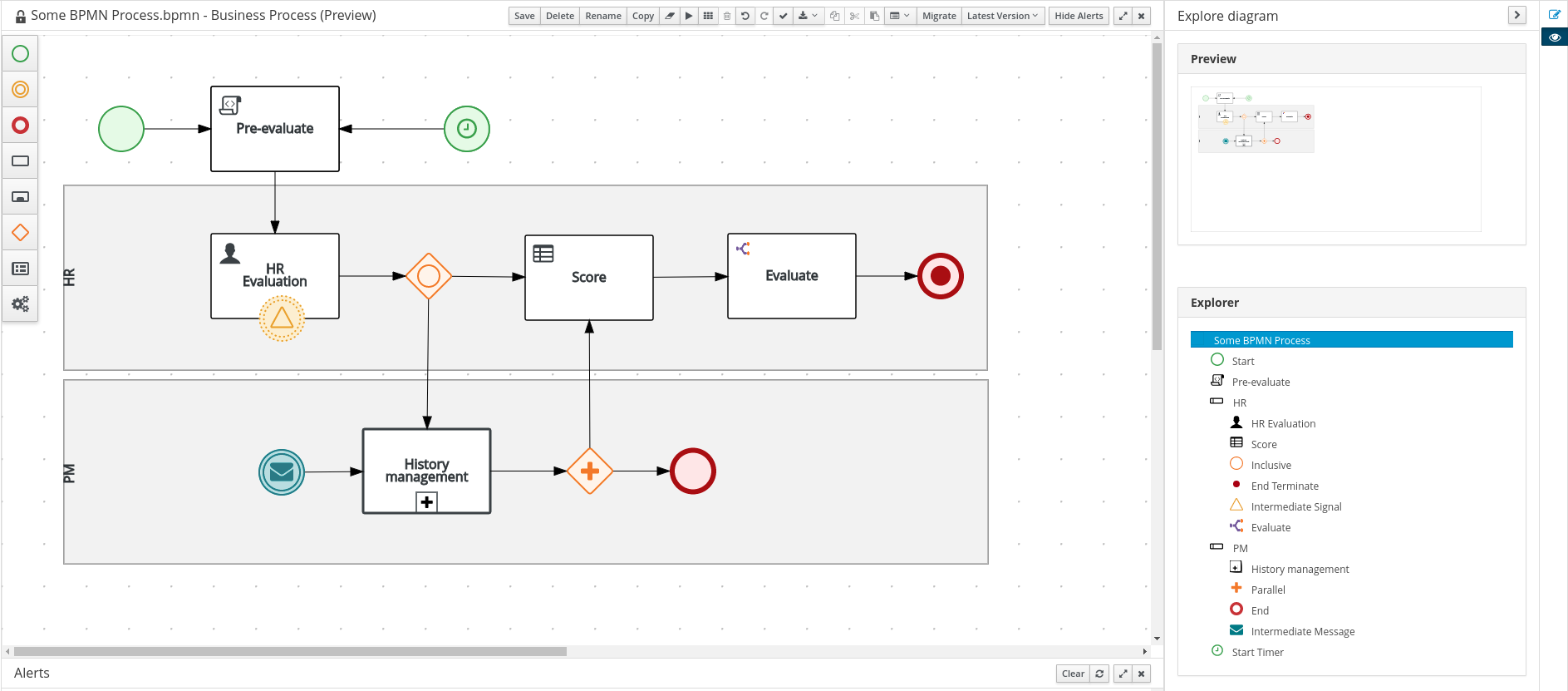

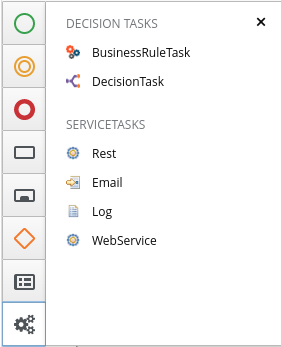

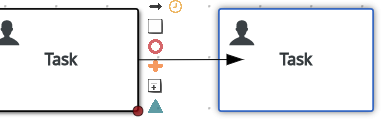

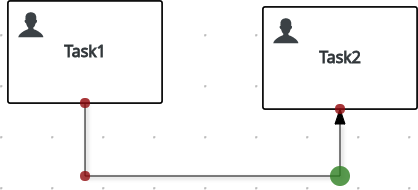

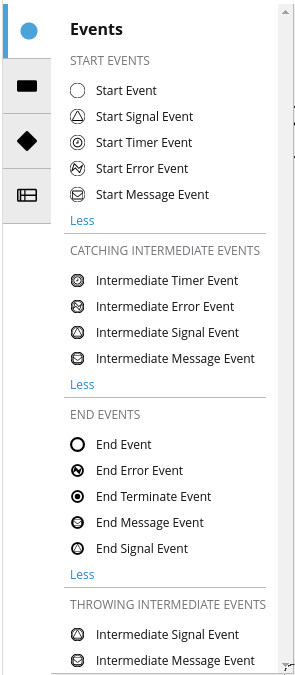

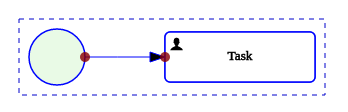

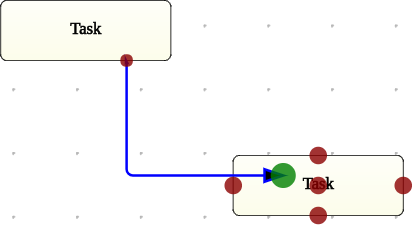

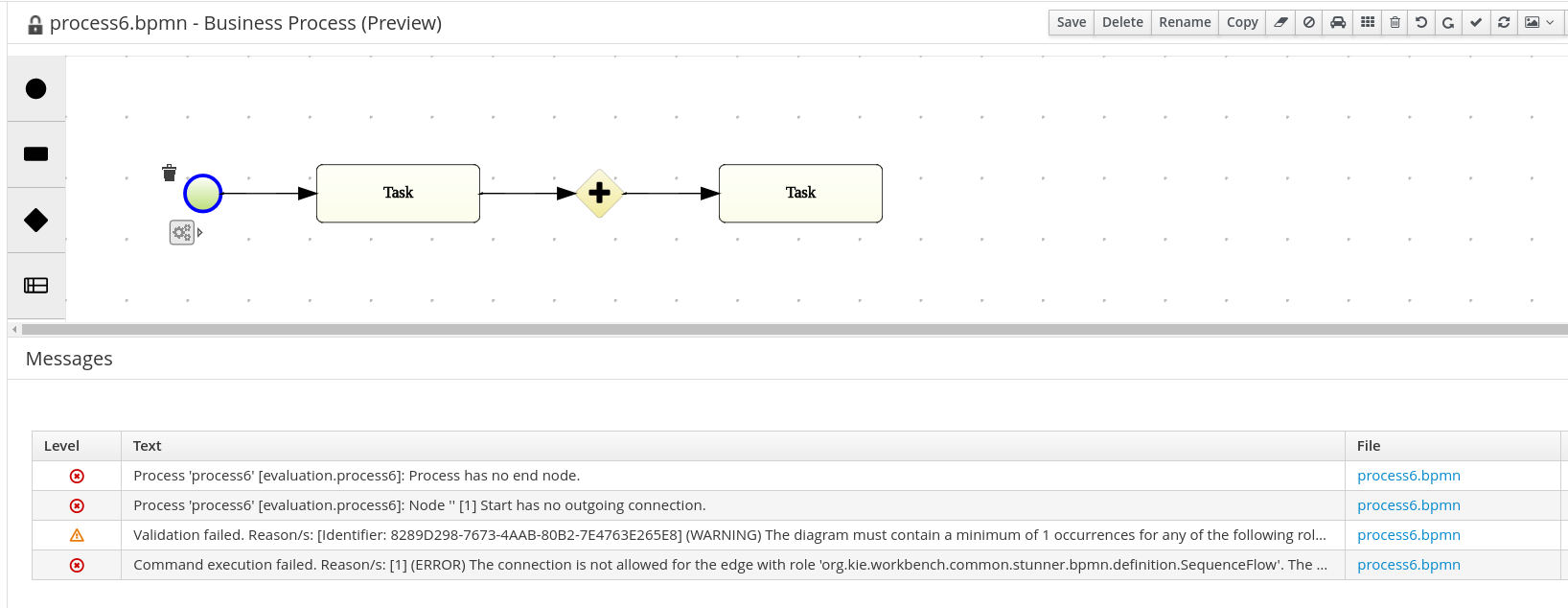

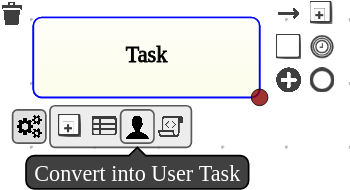

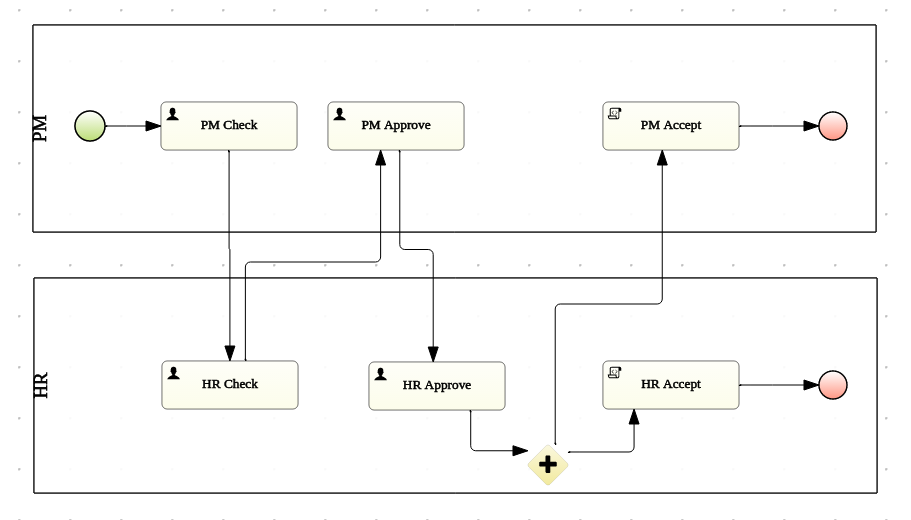

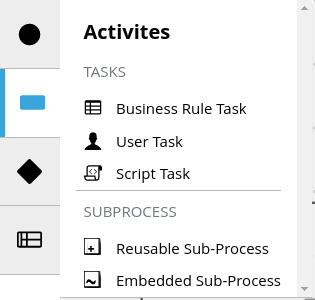

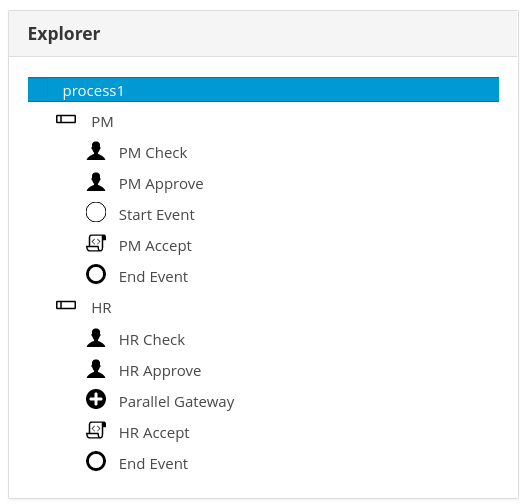

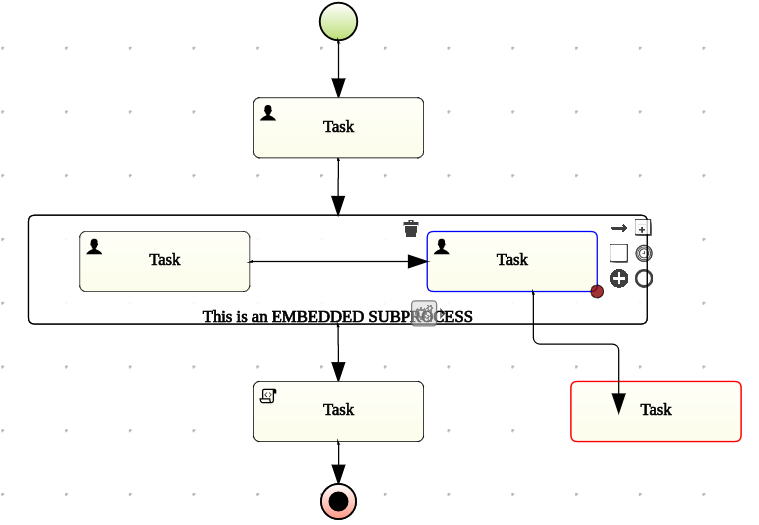

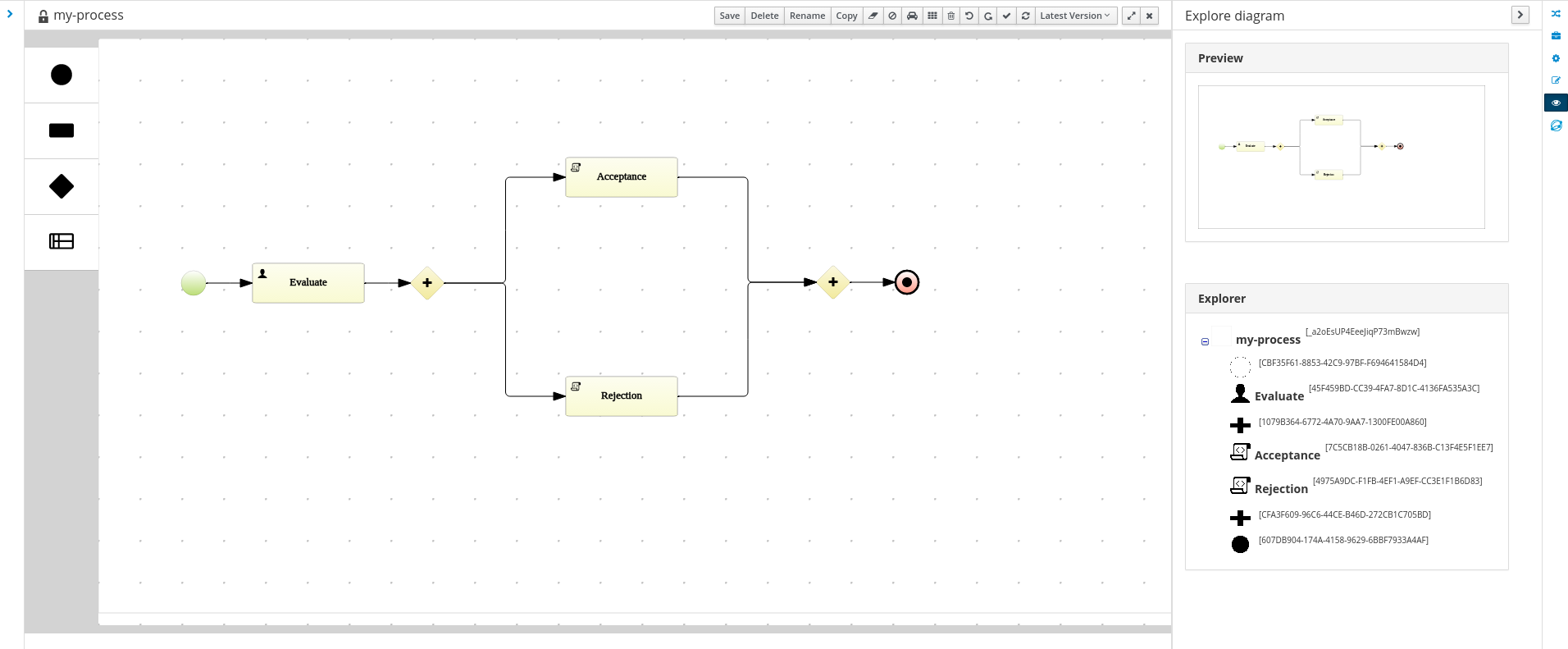

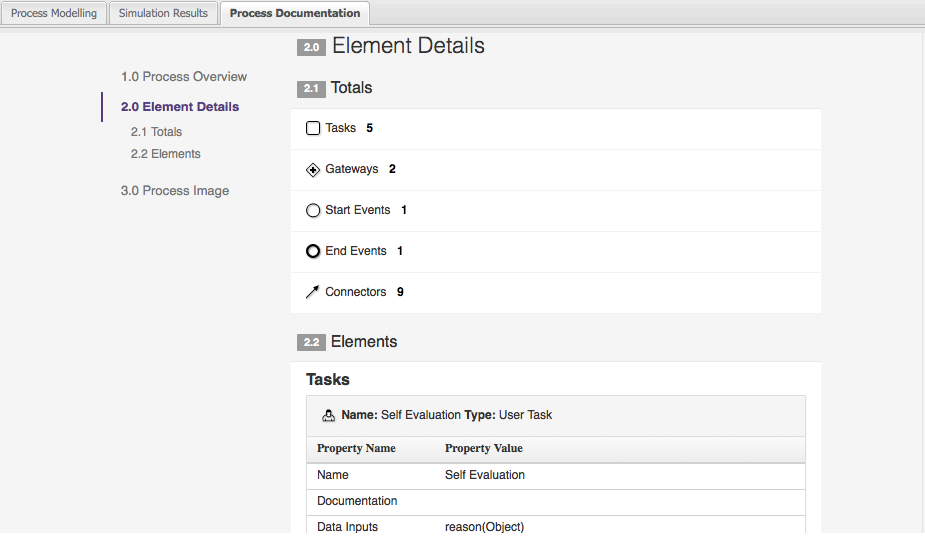

1.4.1. Process Designer

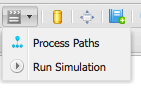

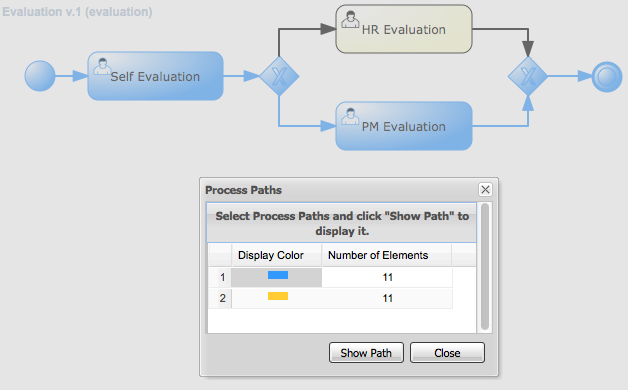

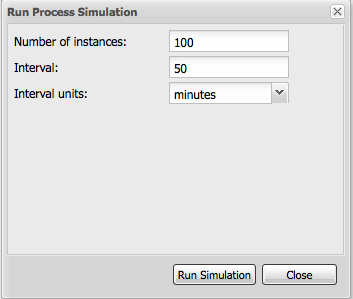

The web-based jBPM Designer allows you to model your business processes in a web-based environment. It is targeted towards business users and offers a graphical editor for viewing and editing your business processes (using drag and drop), similar to the Eclipse plugin. It supports round-tripping between the Eclipse editor and the web-based designer. It also supports simulation of processes.

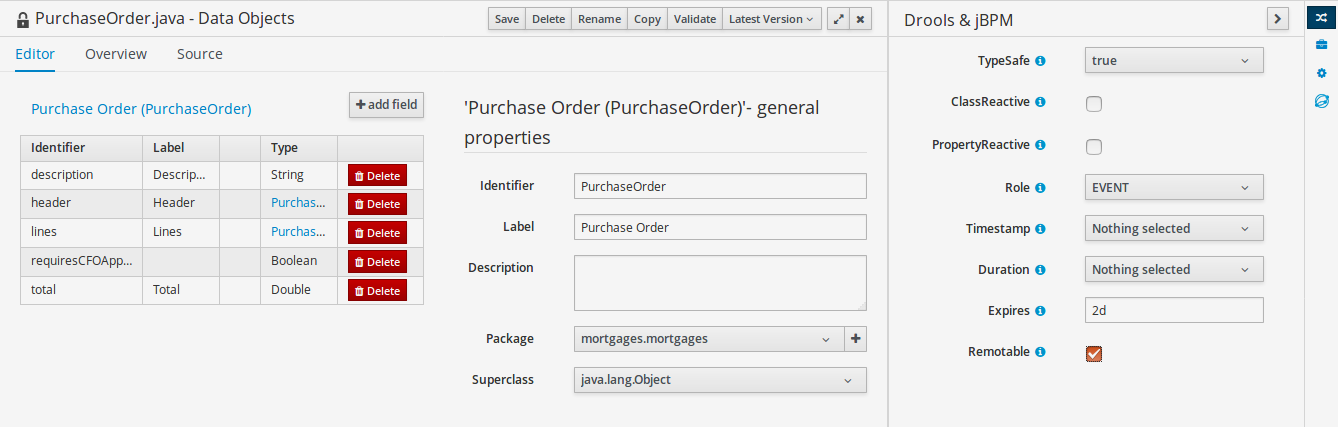

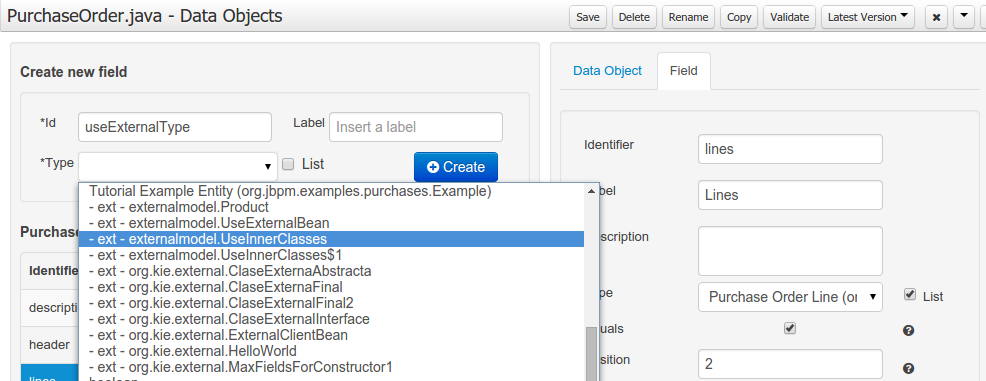

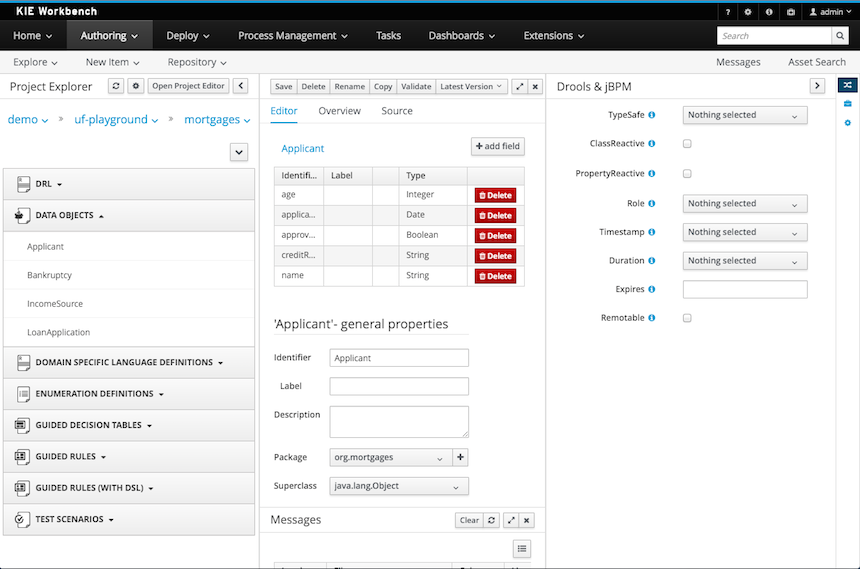

1.4.2. Data Modeler

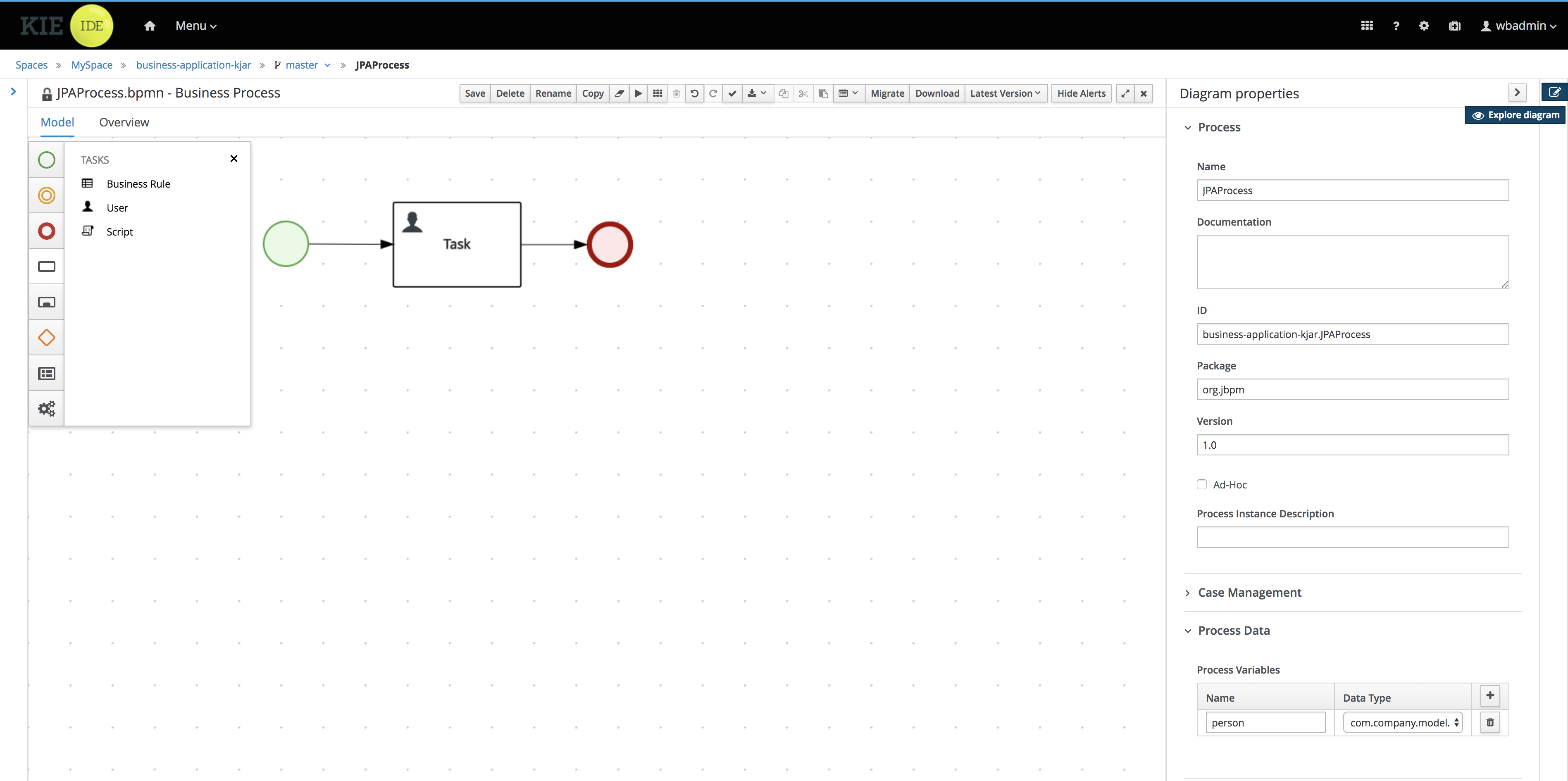

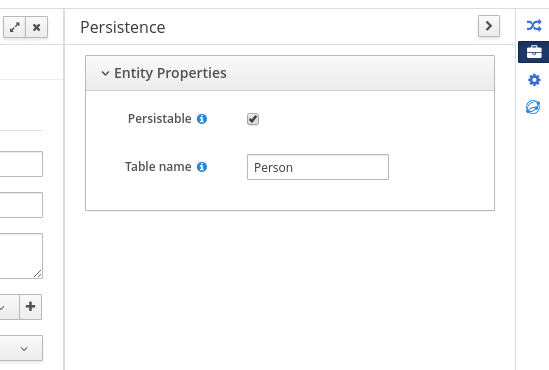

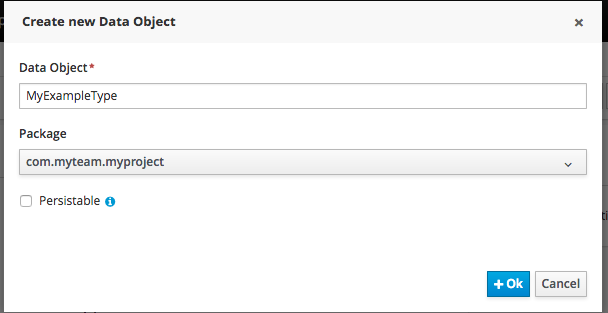

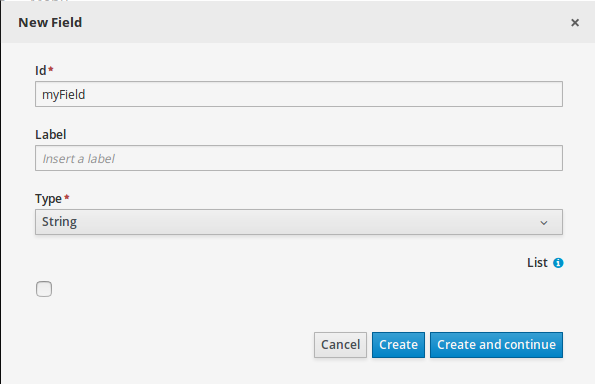

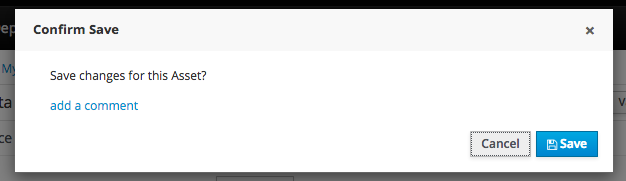

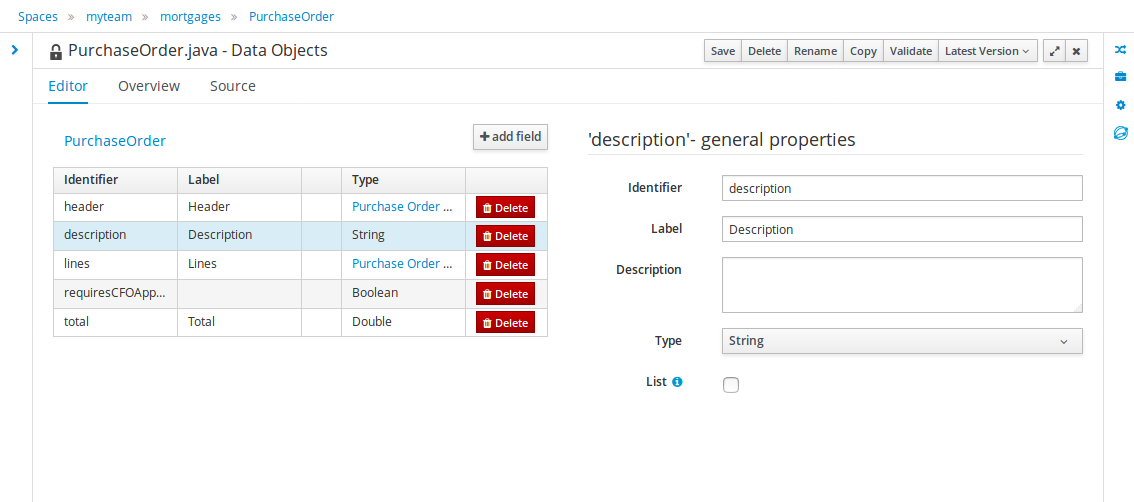

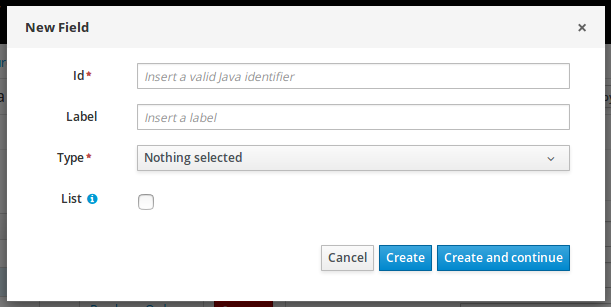

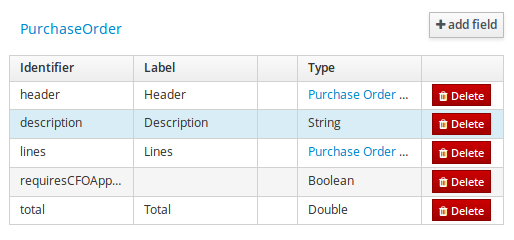

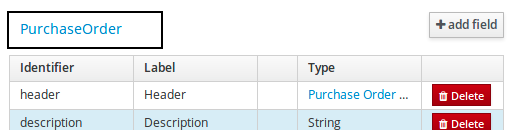

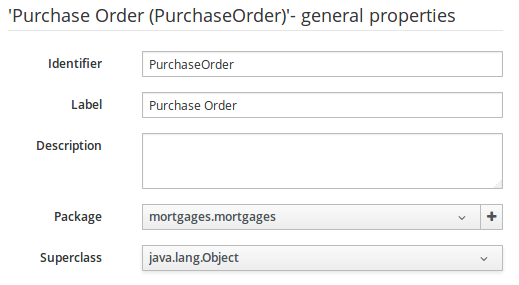

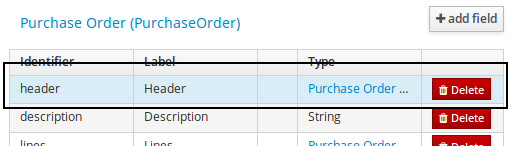

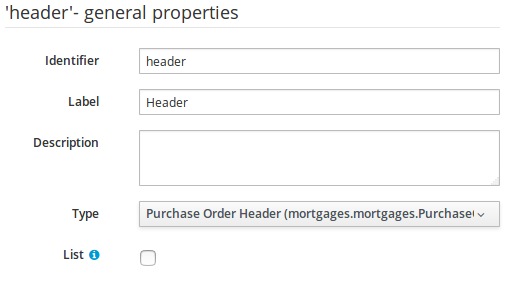

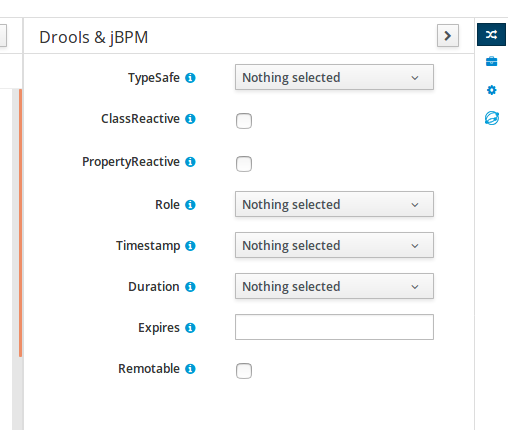

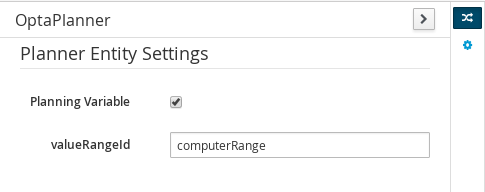

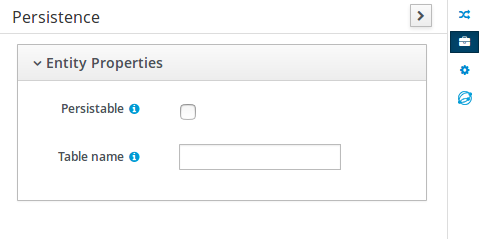

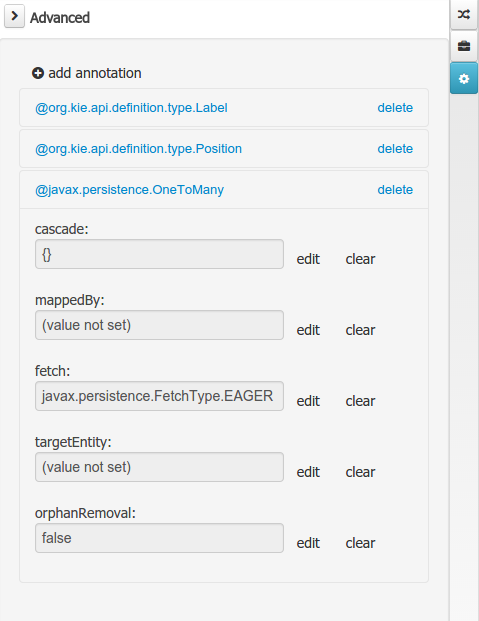

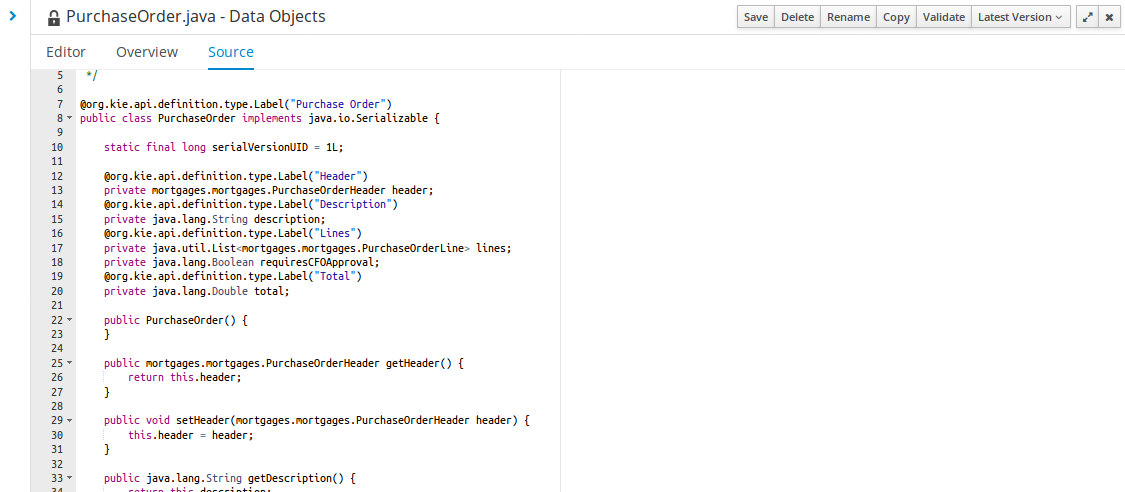

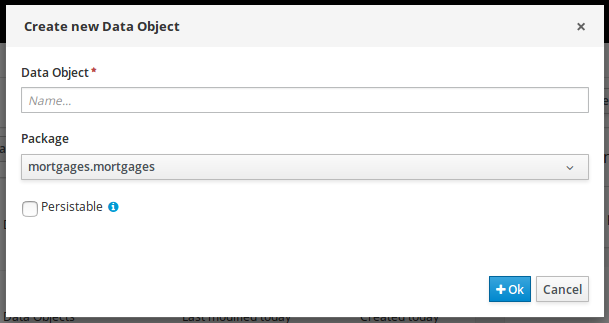

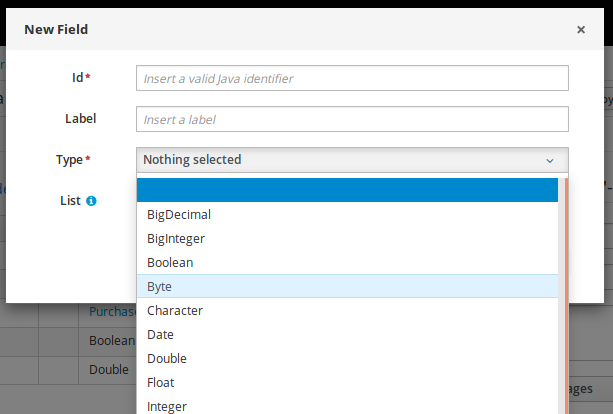

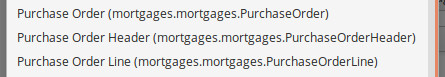

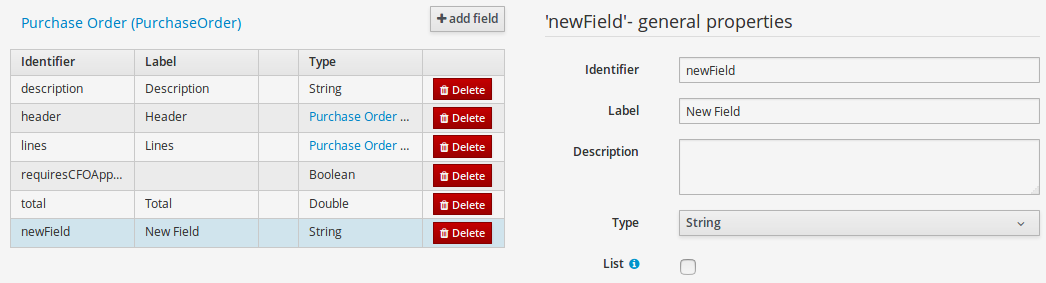

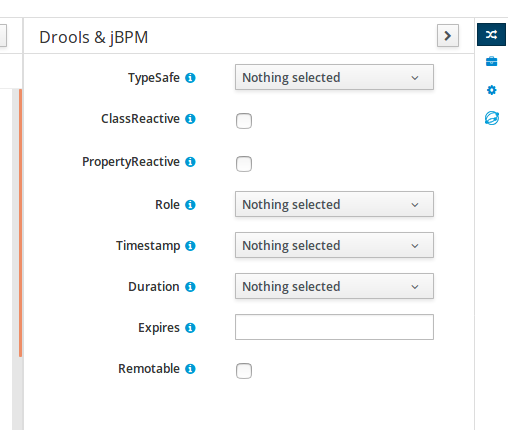

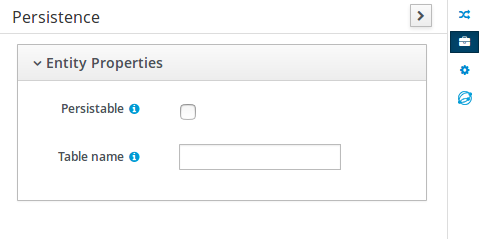

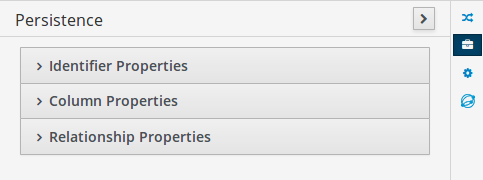

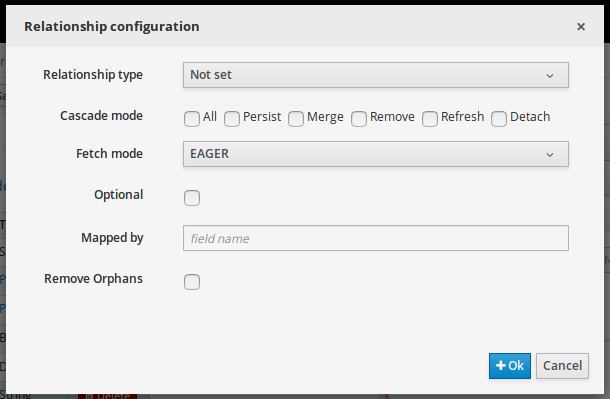

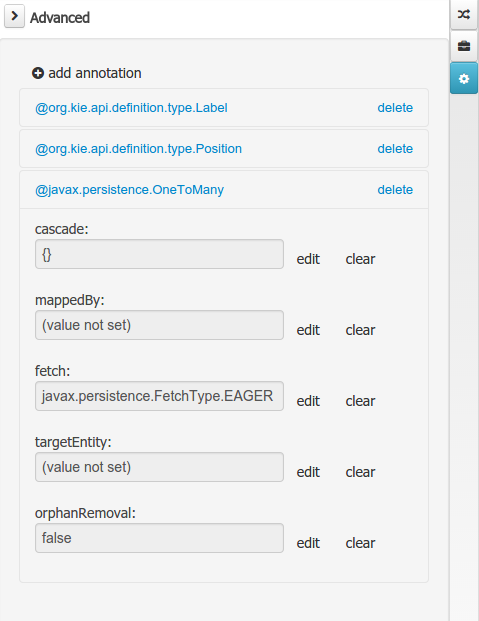

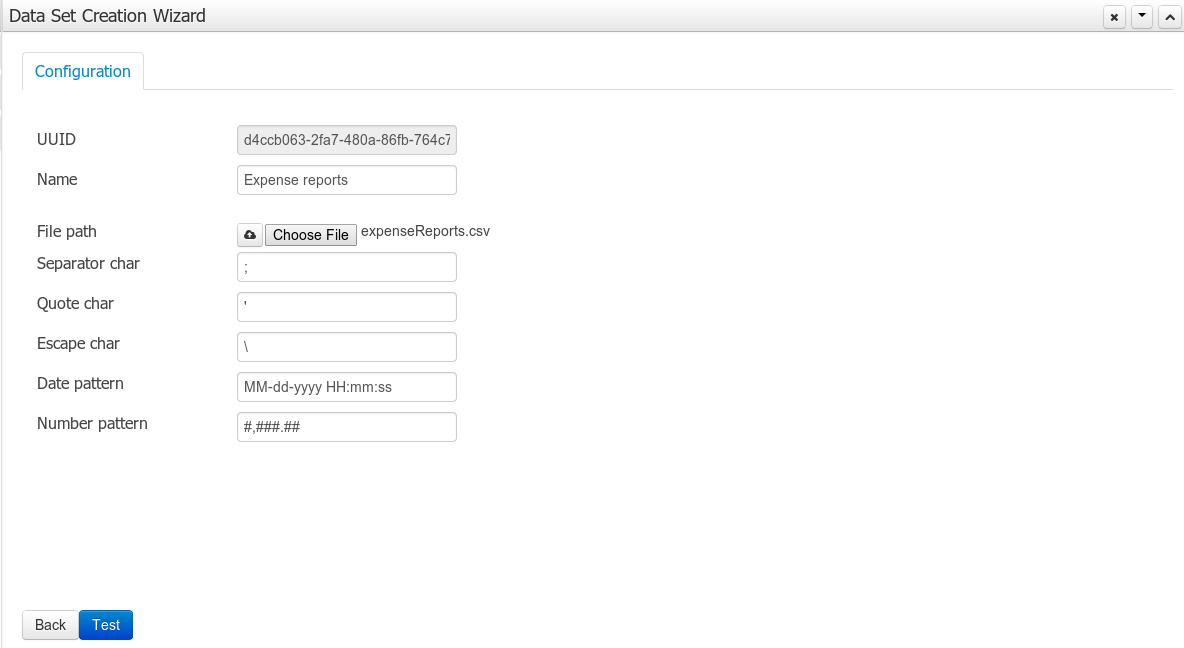

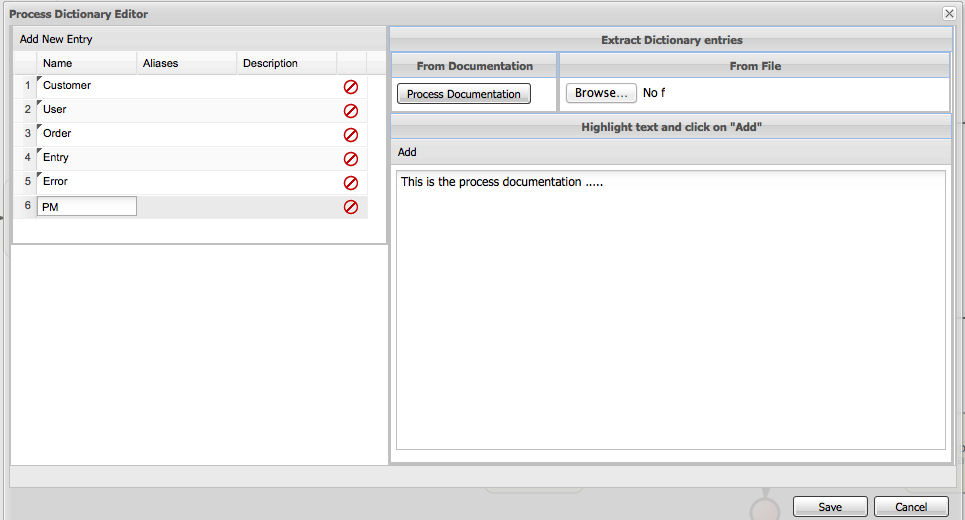

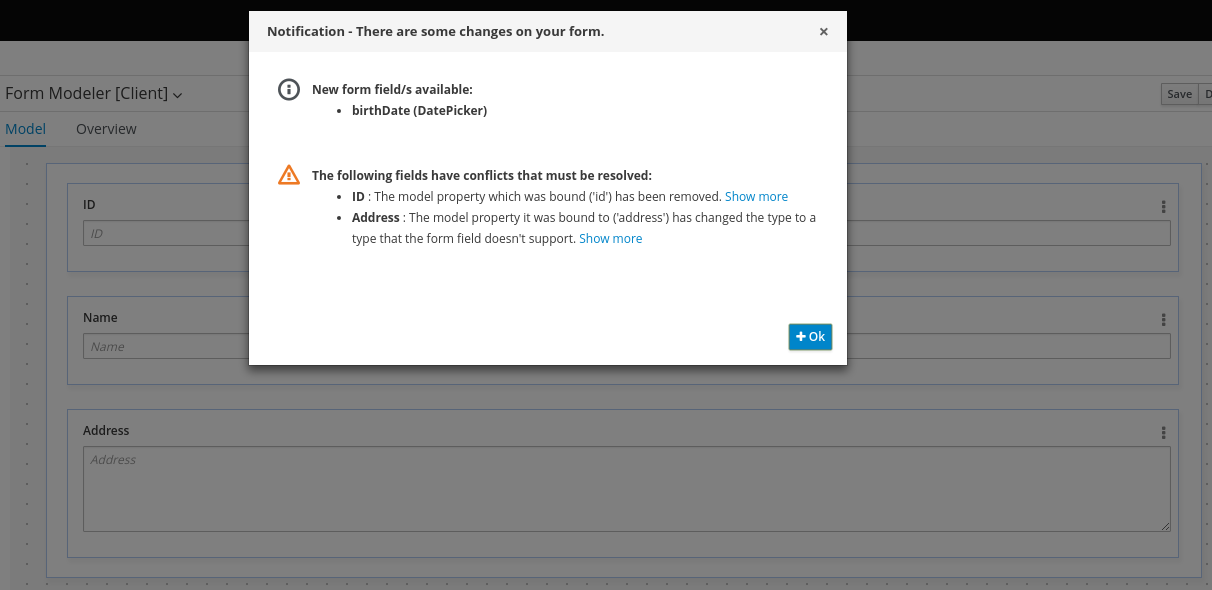

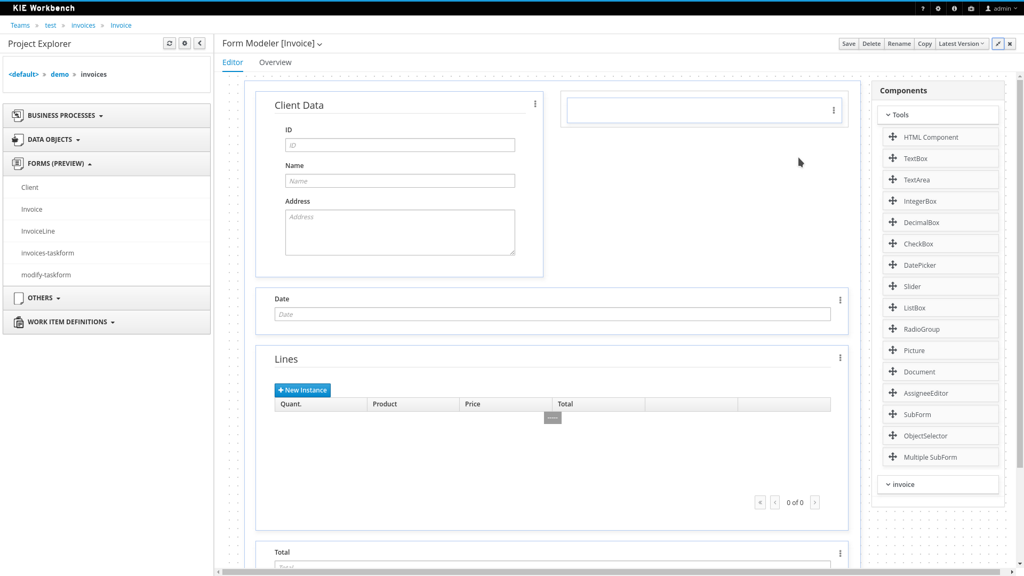

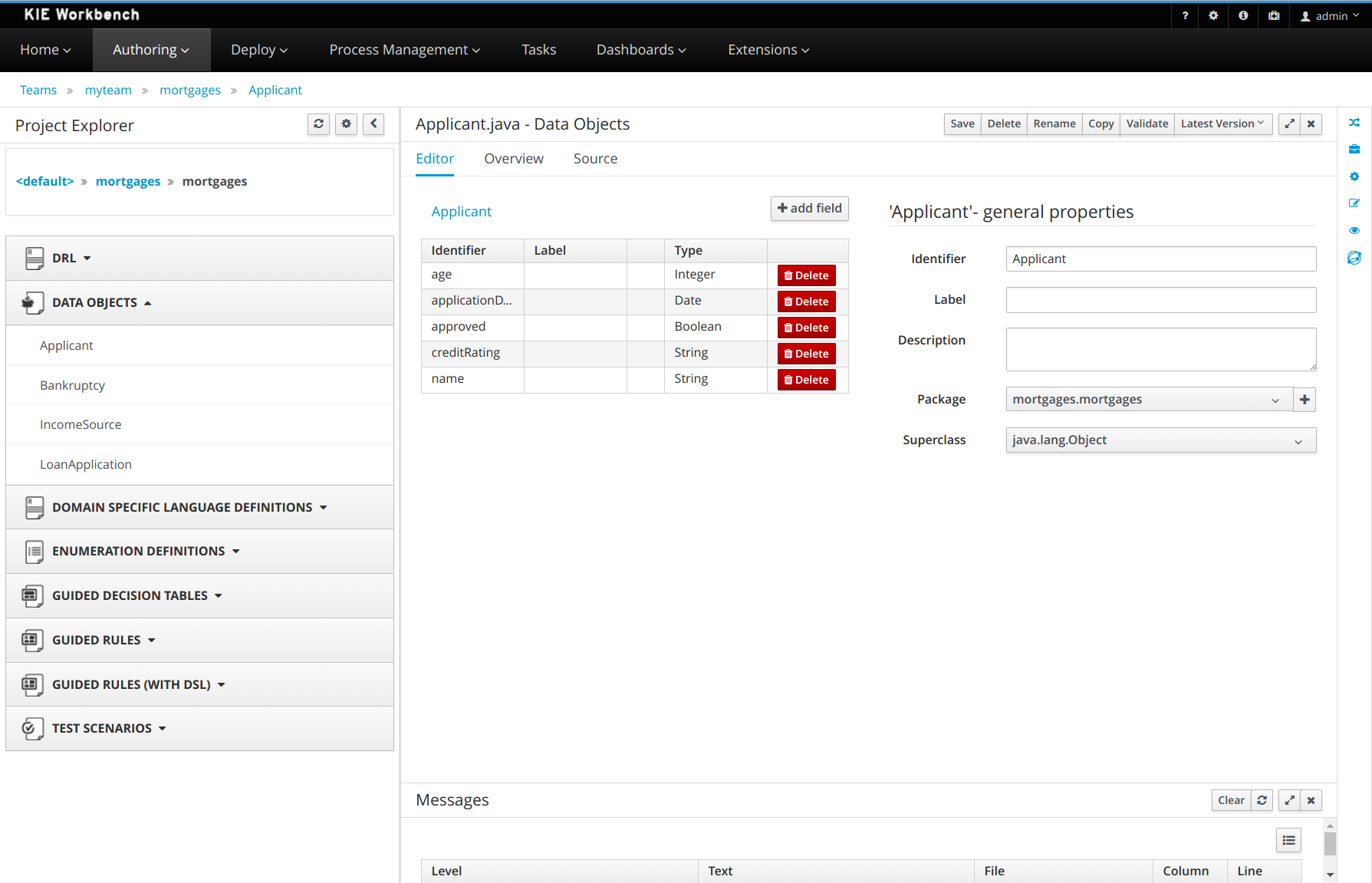

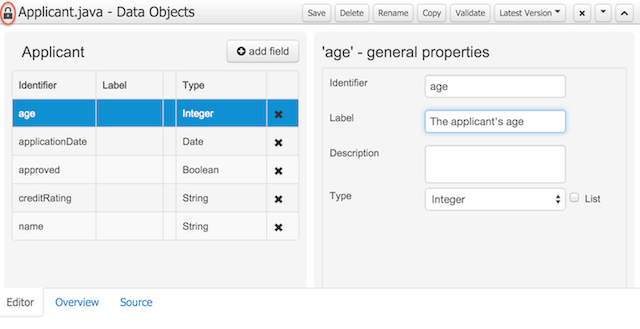

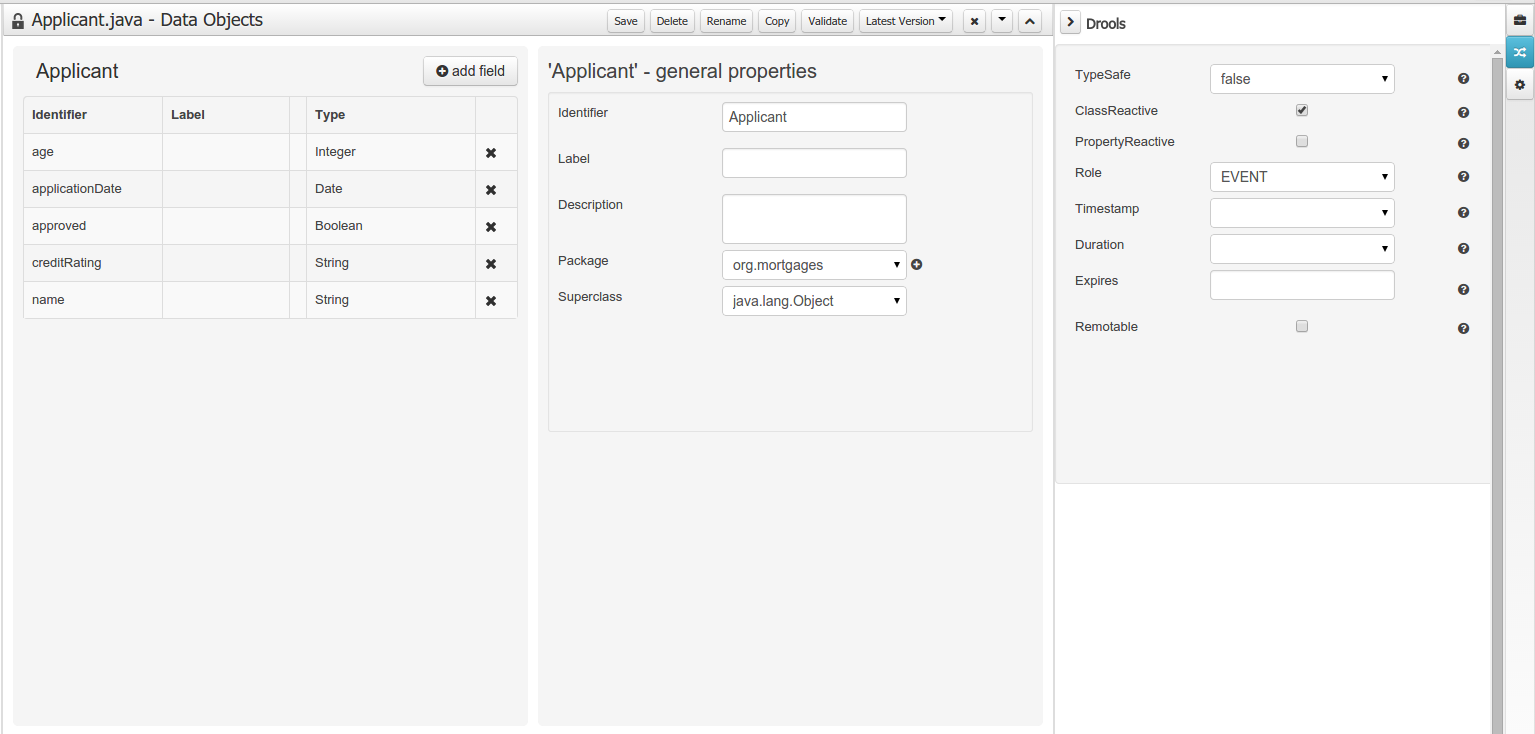

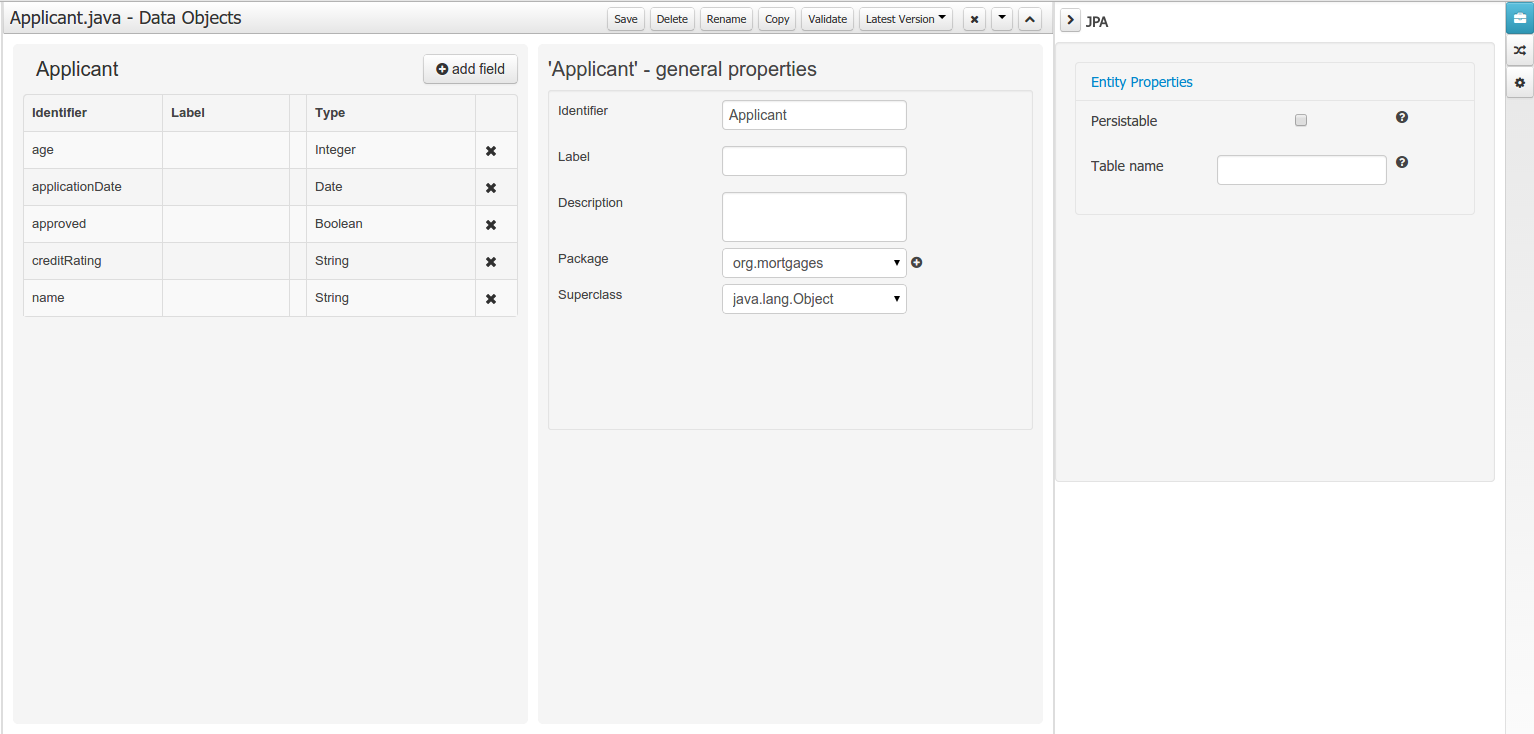

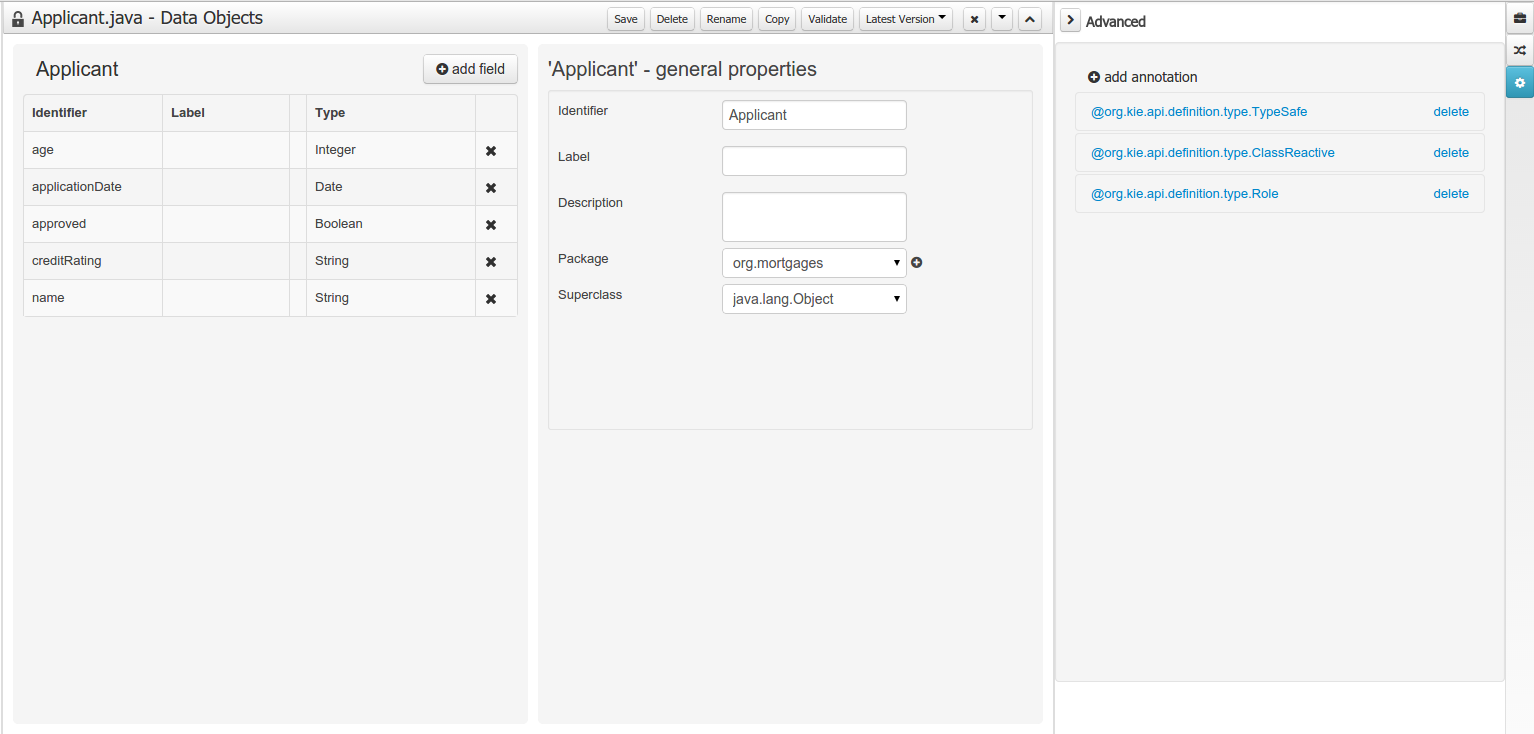

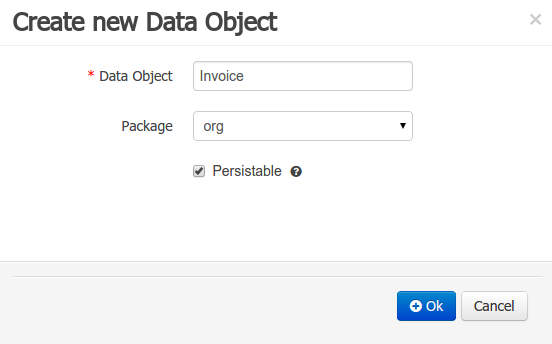

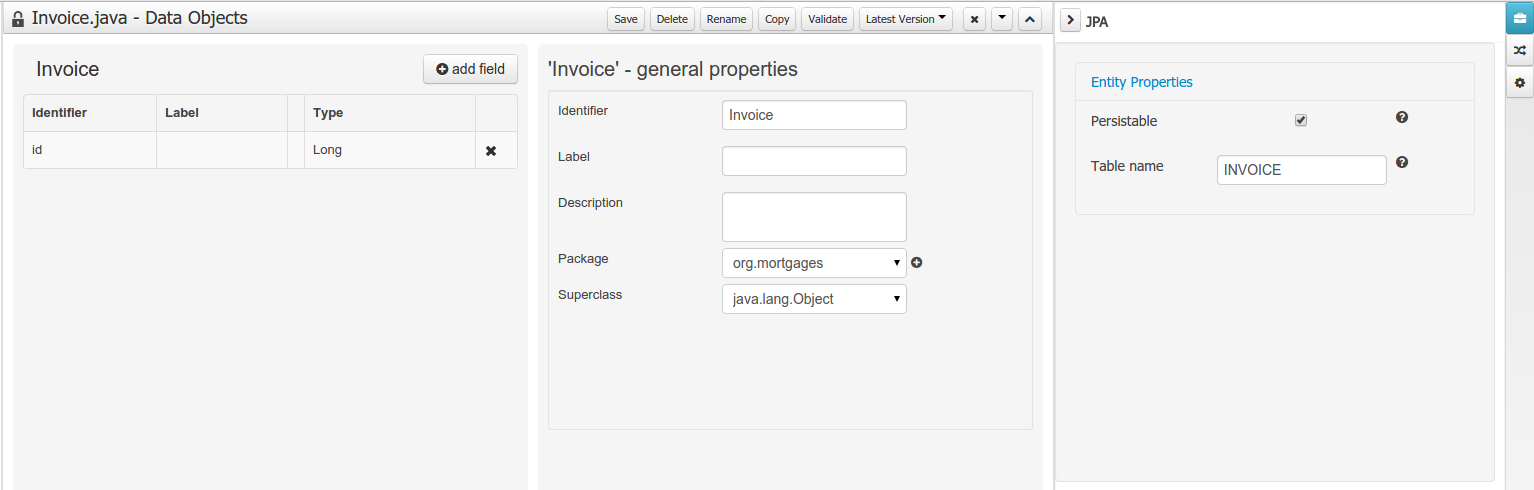

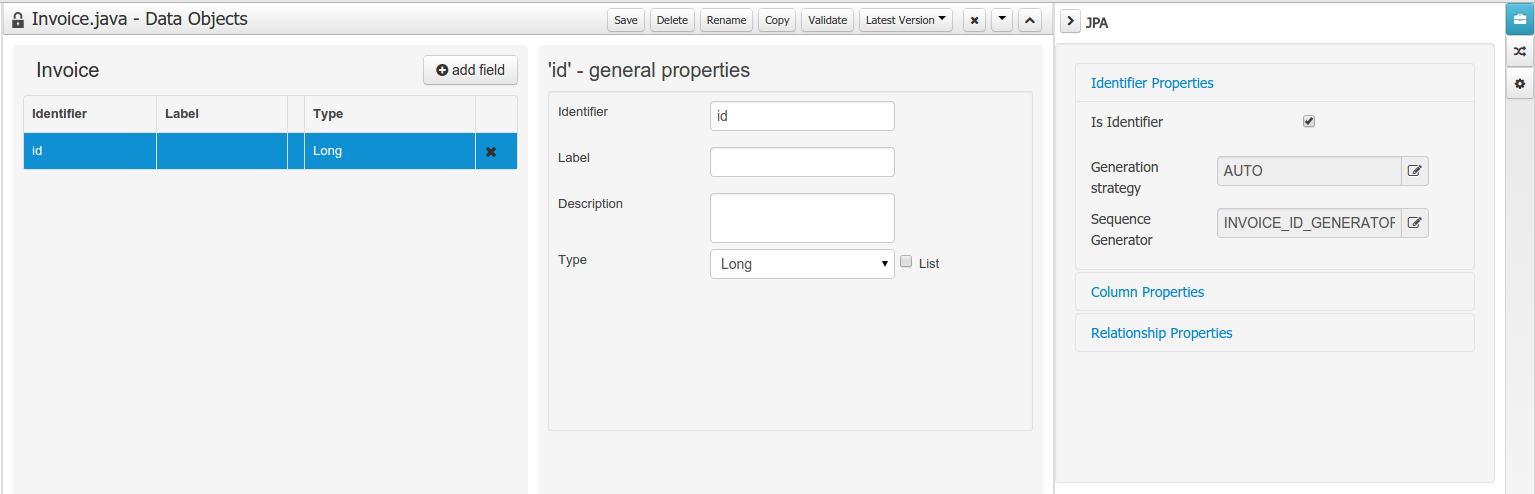

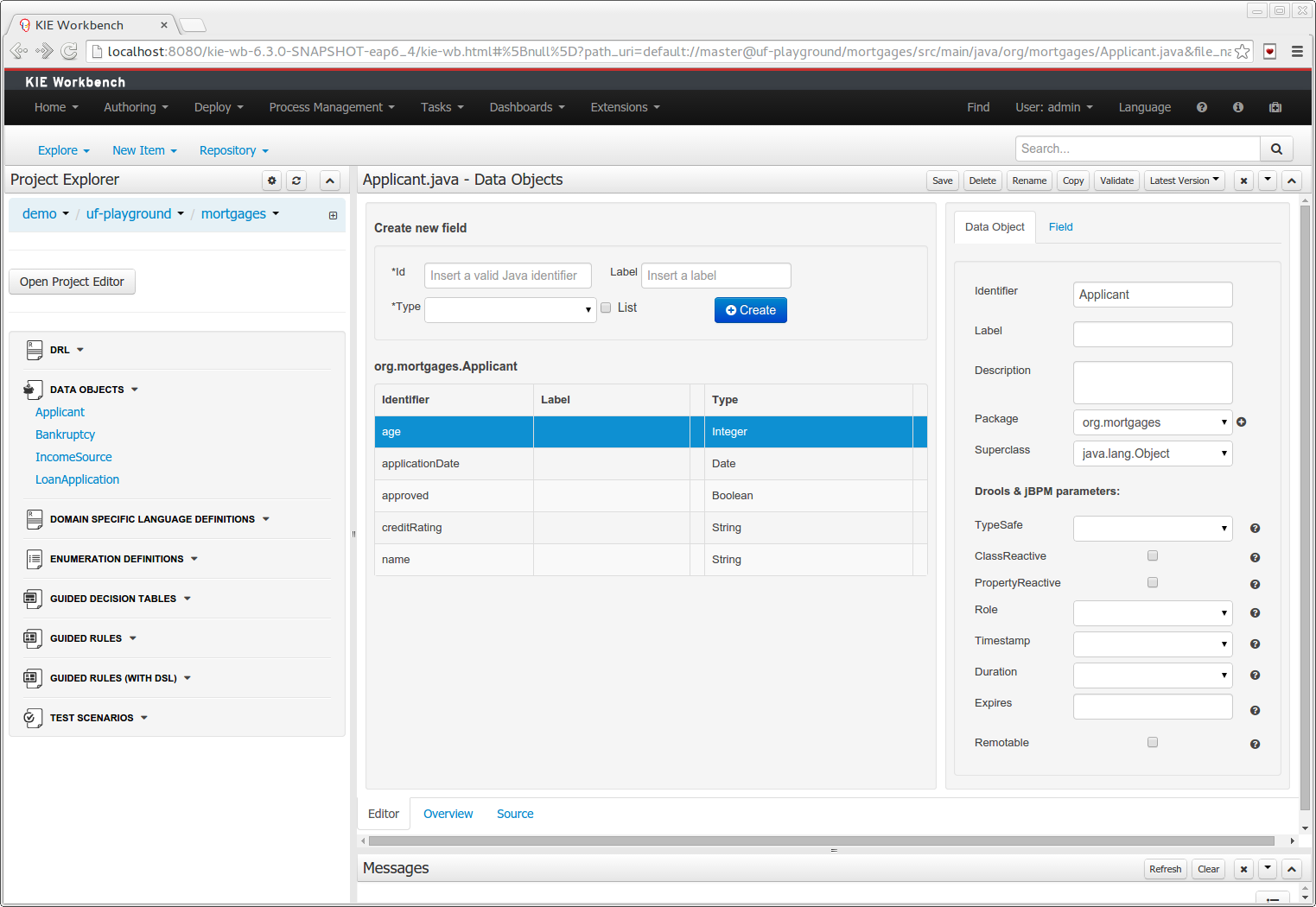

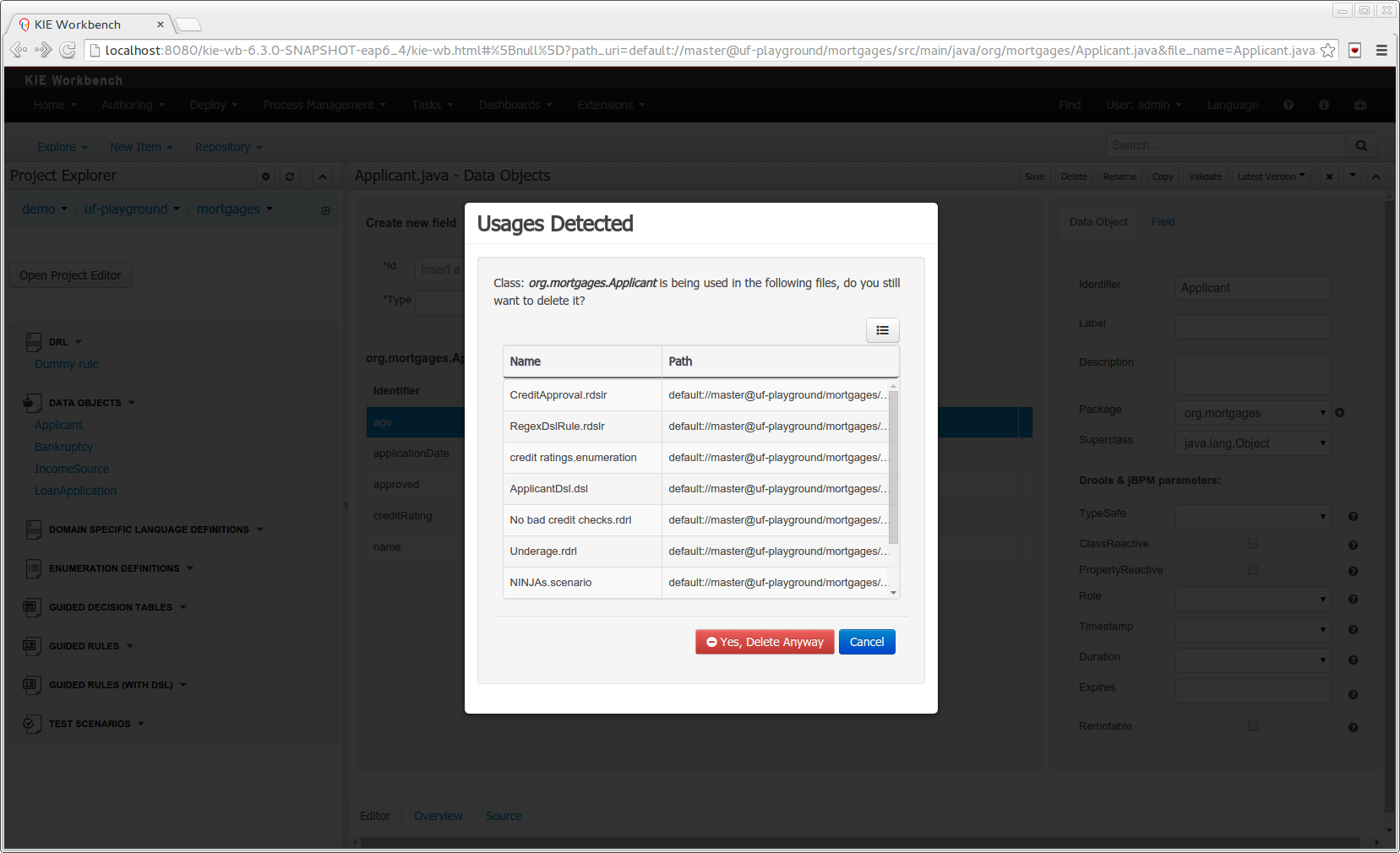

Processes almost always have some kind of data to work with. The data modeler allows non-technical users to view, edit or create these data models.

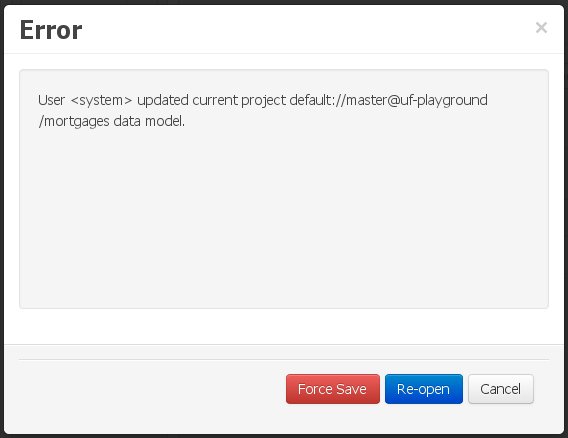

Typically, a business process analyst or data analyst will capture the requirements for a process or application and turn these into a formal set of interrelated data structures. The new Data Modeler tool provides an easy, straightforward and visual aid for building both logical and physical data models, without the need for advanced development skills or explicit coding. The data modeler is transparently integrated into Business Central. Its main goals are to make data models first-class citizens in the process improvement cycle and to allow for full process automation through the integrated use of data structures (and the forms that will be used to interact with them).

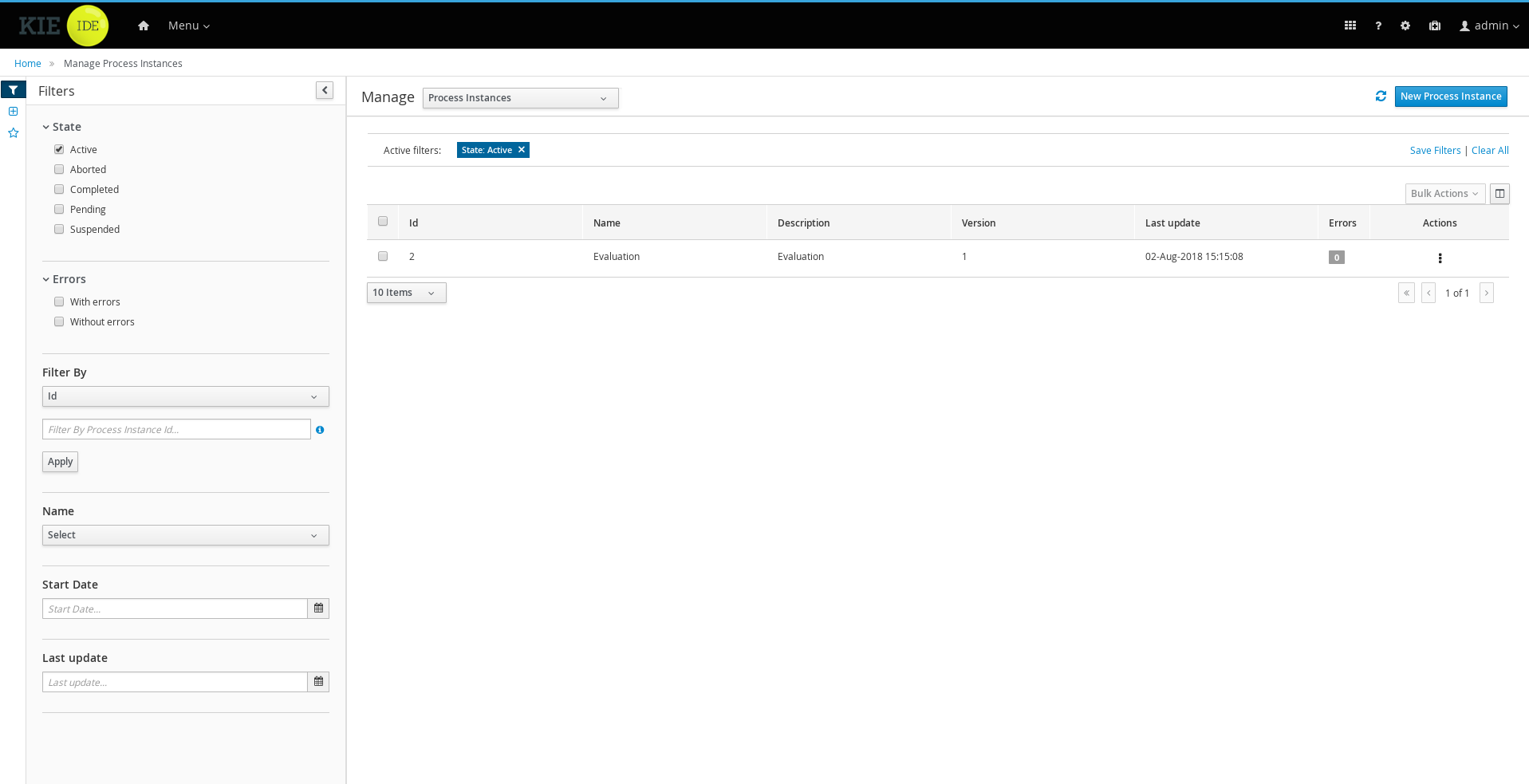

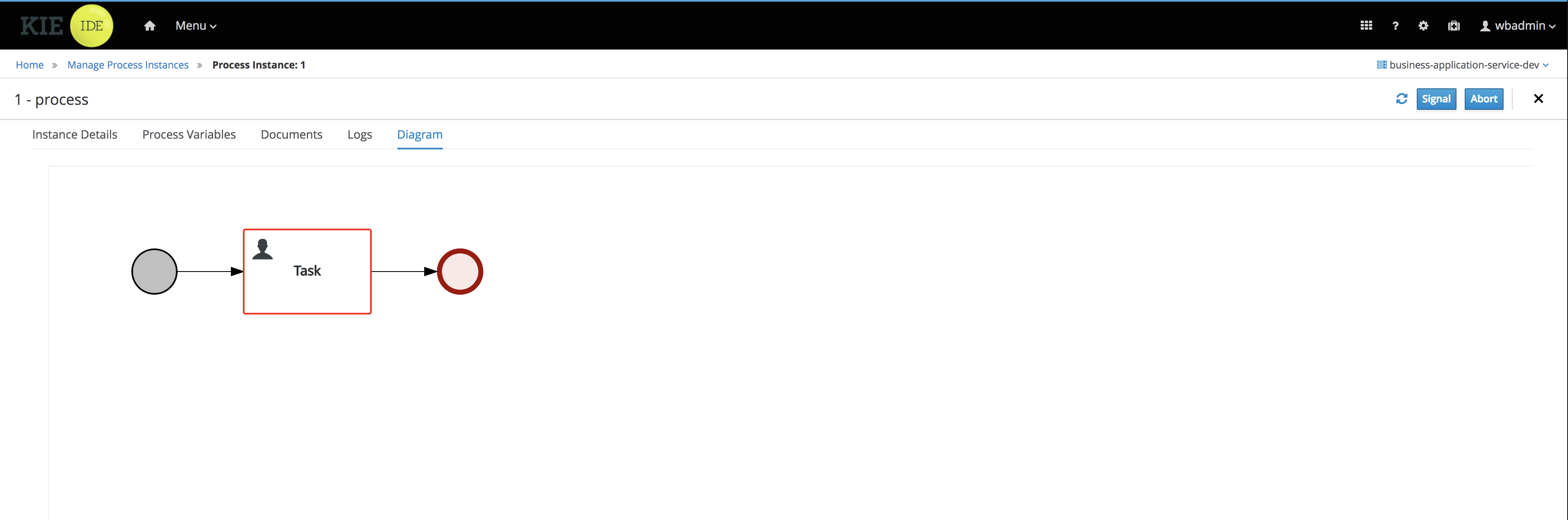

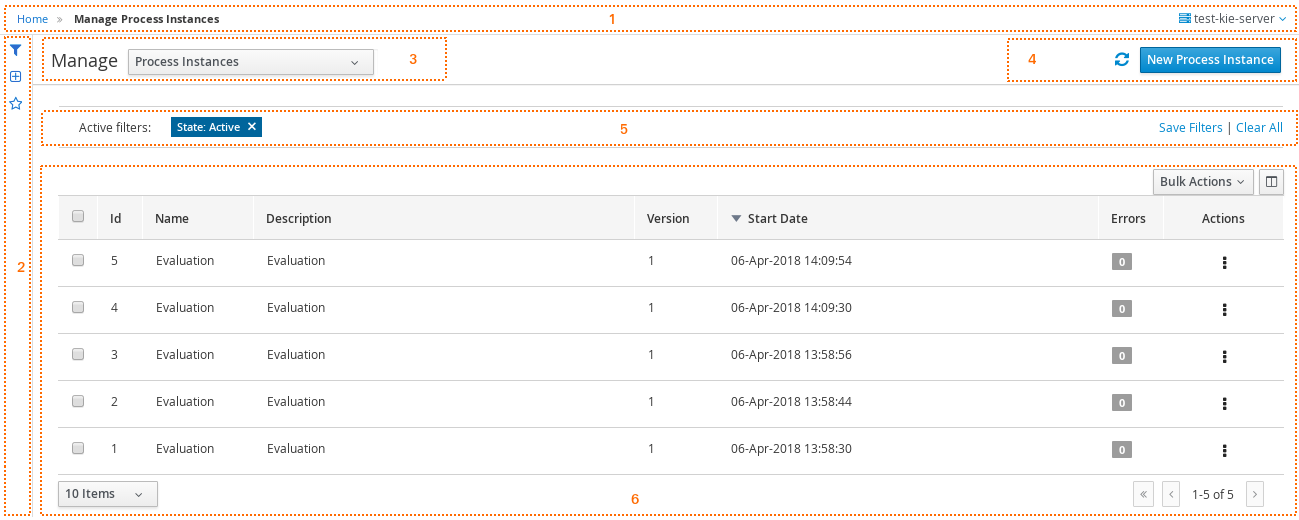

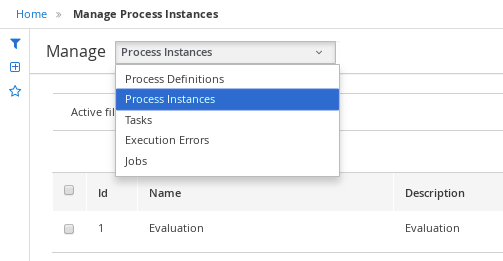

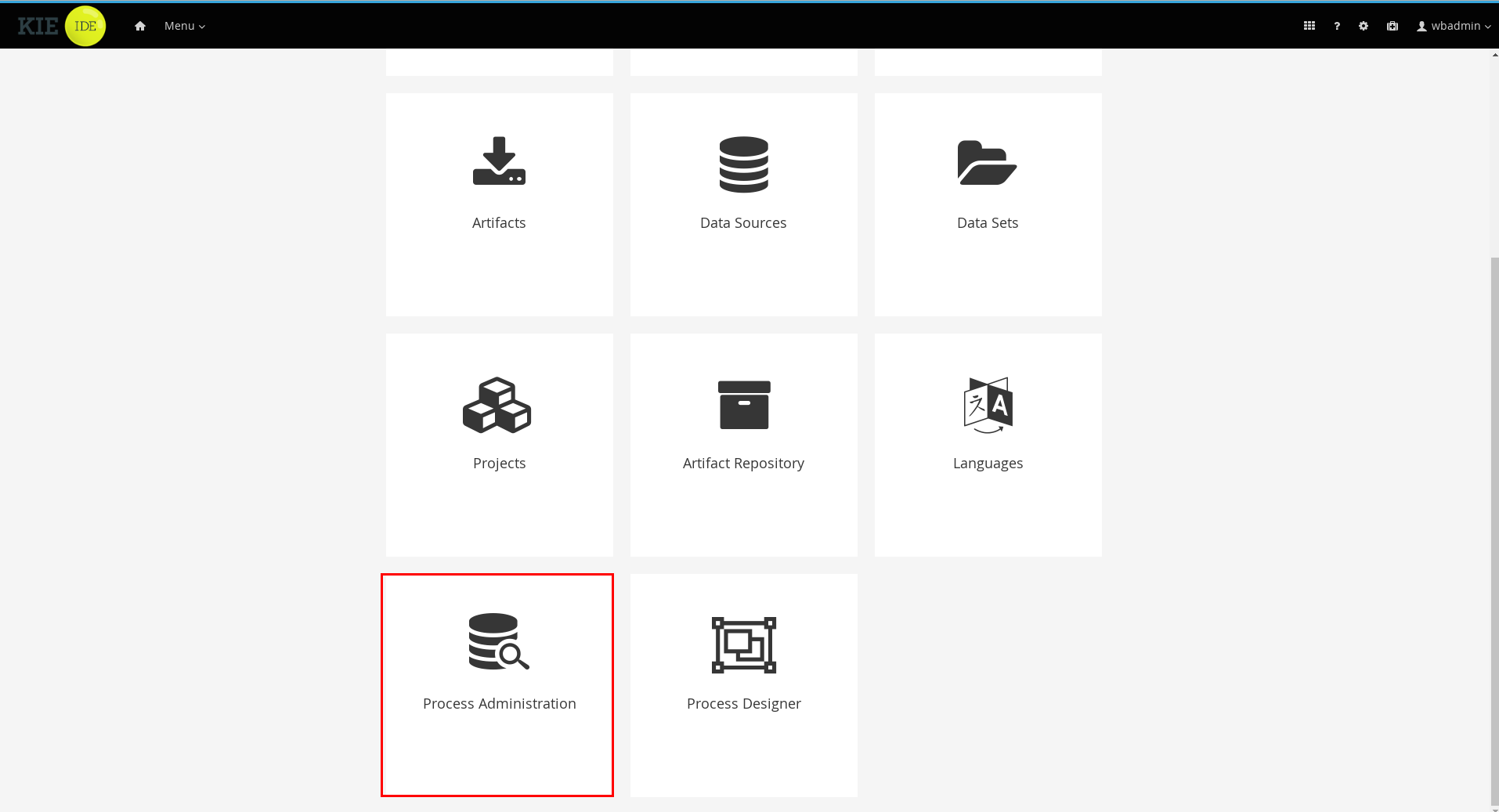

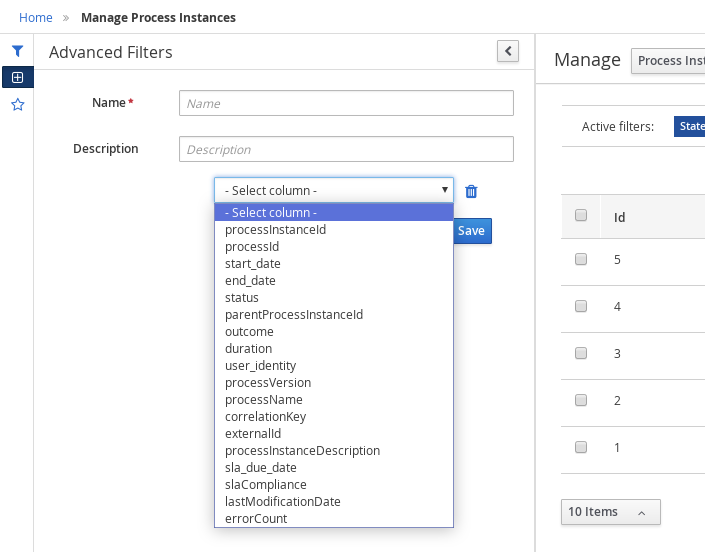

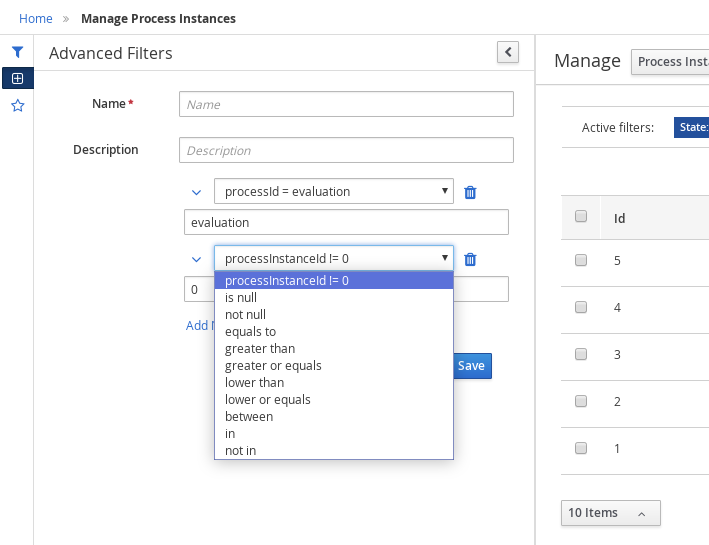

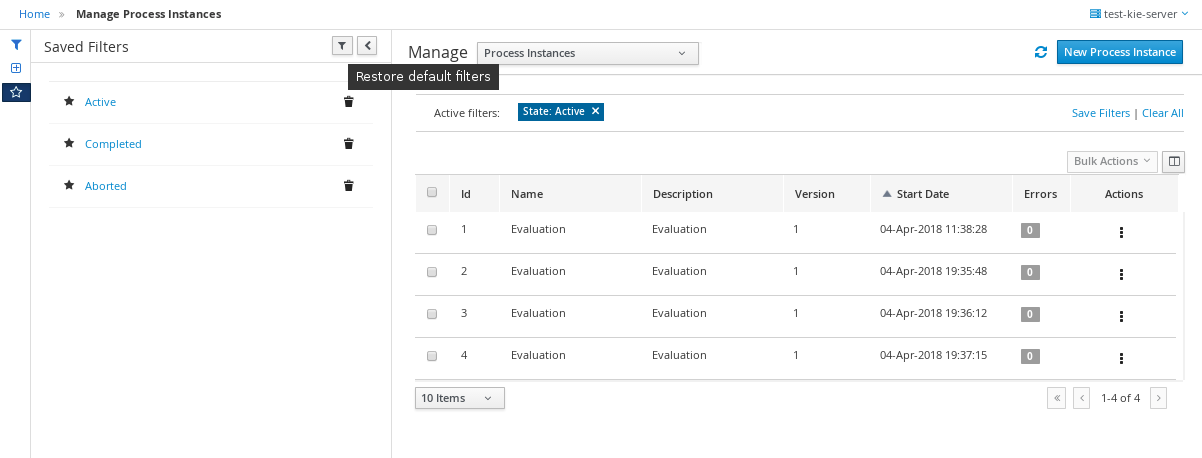

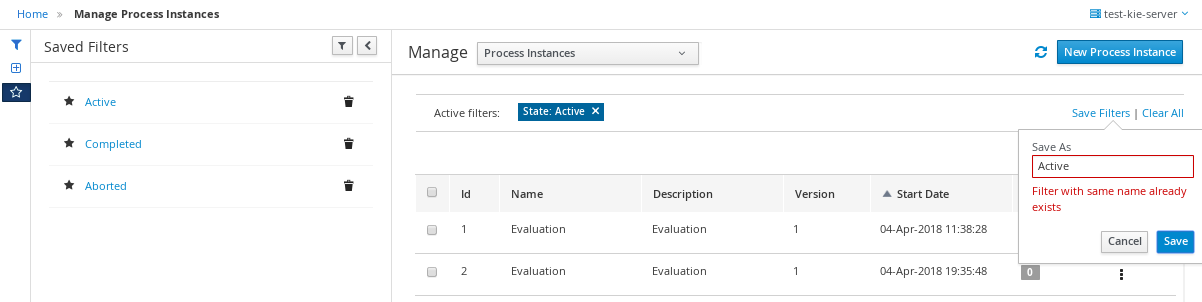

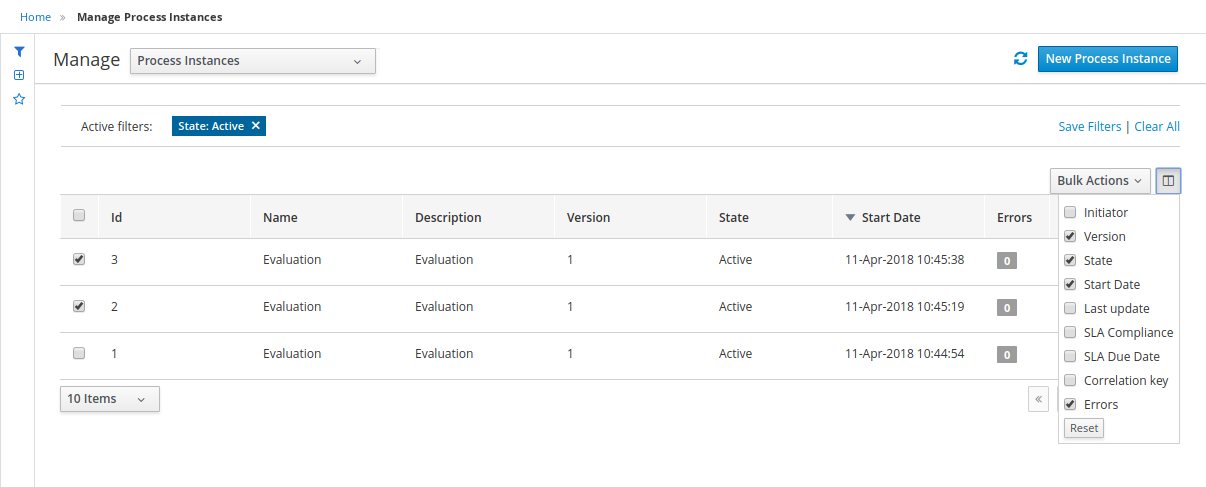

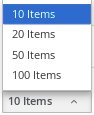

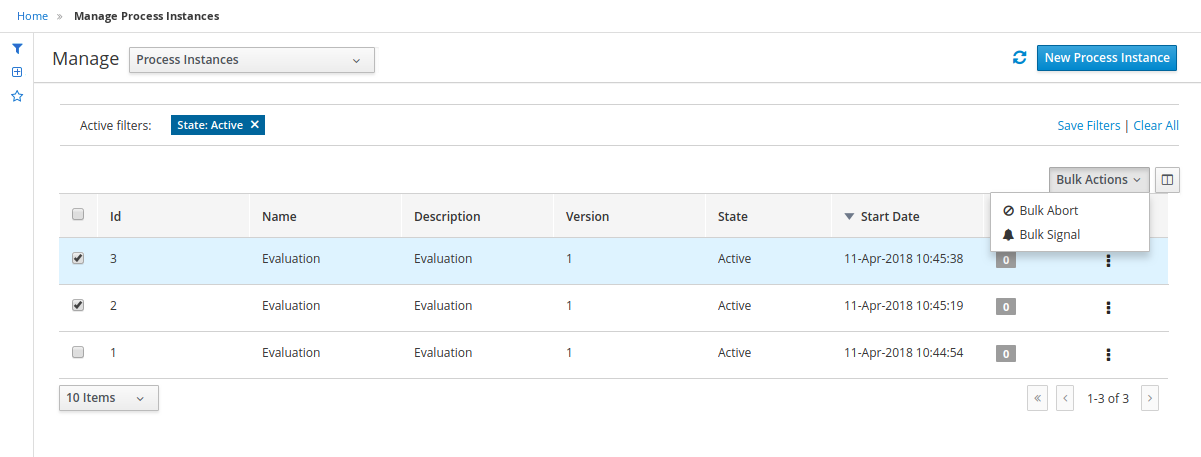

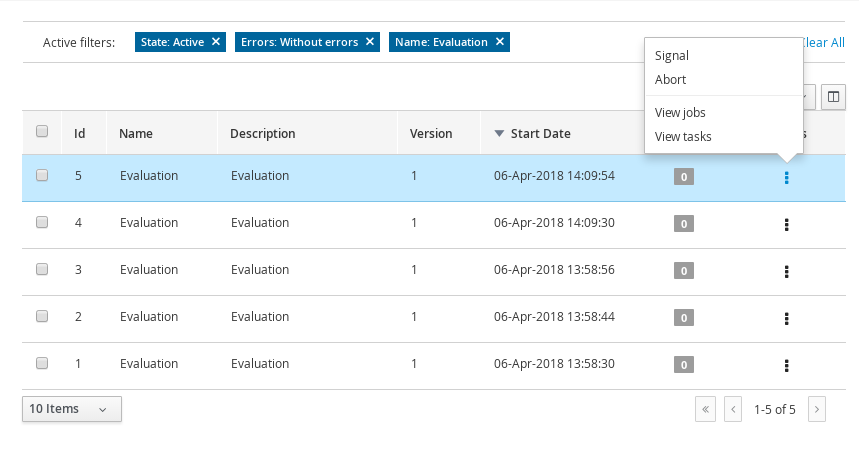

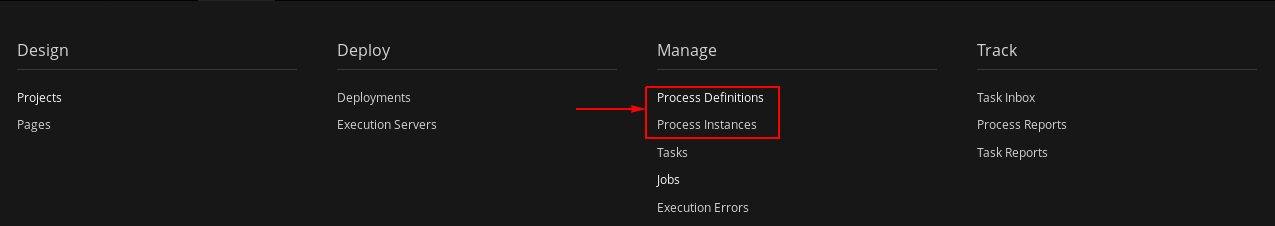

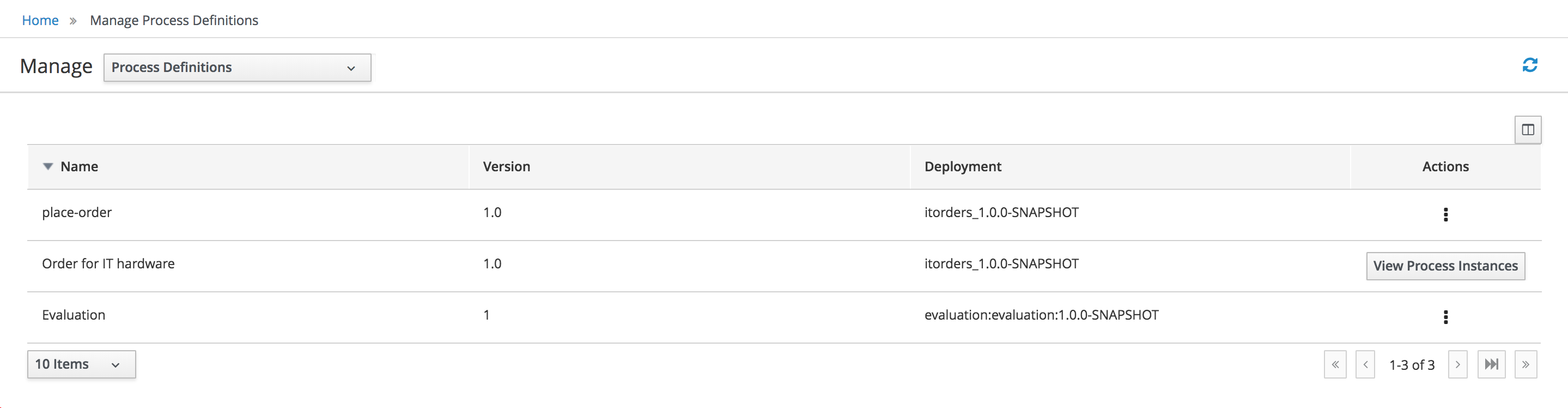

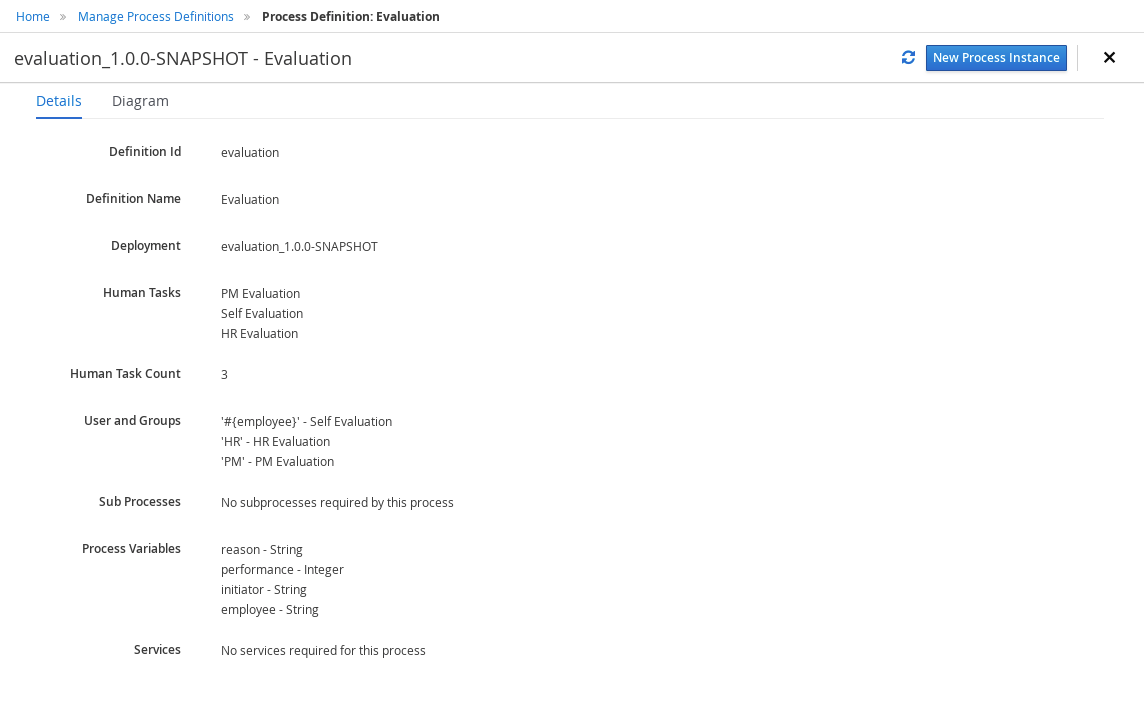

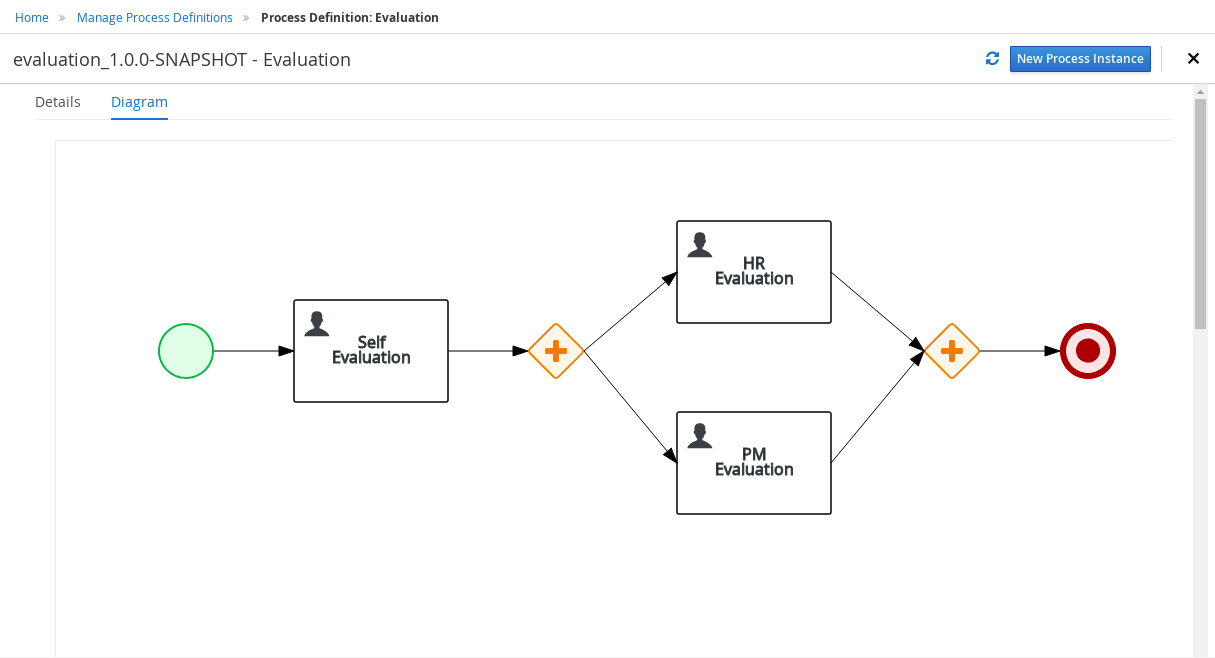

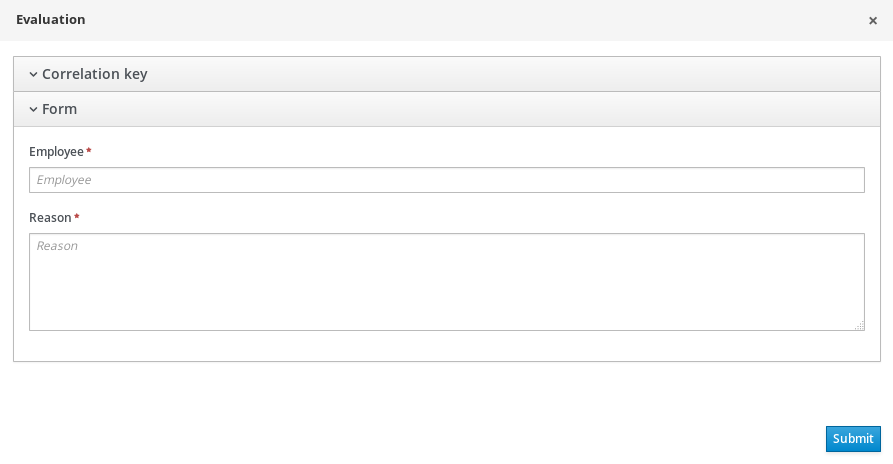

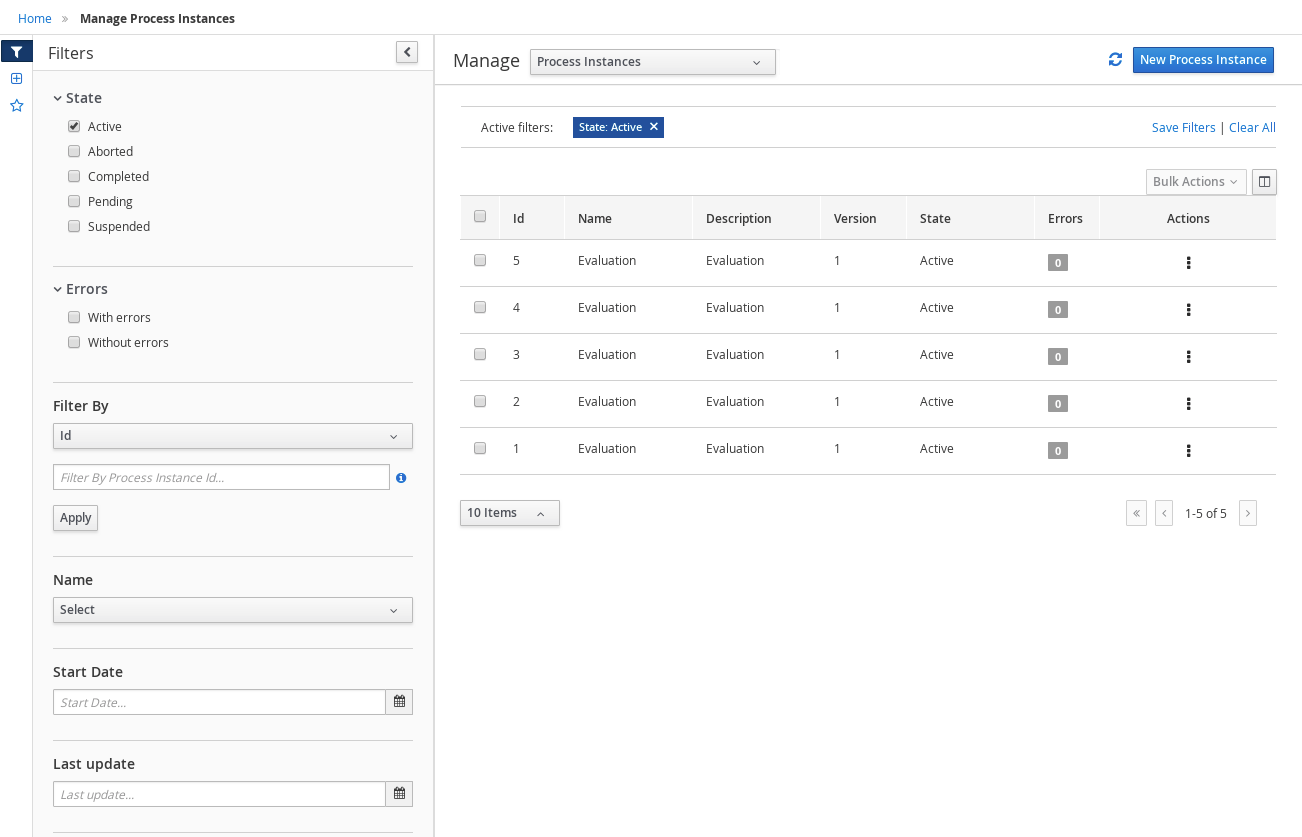

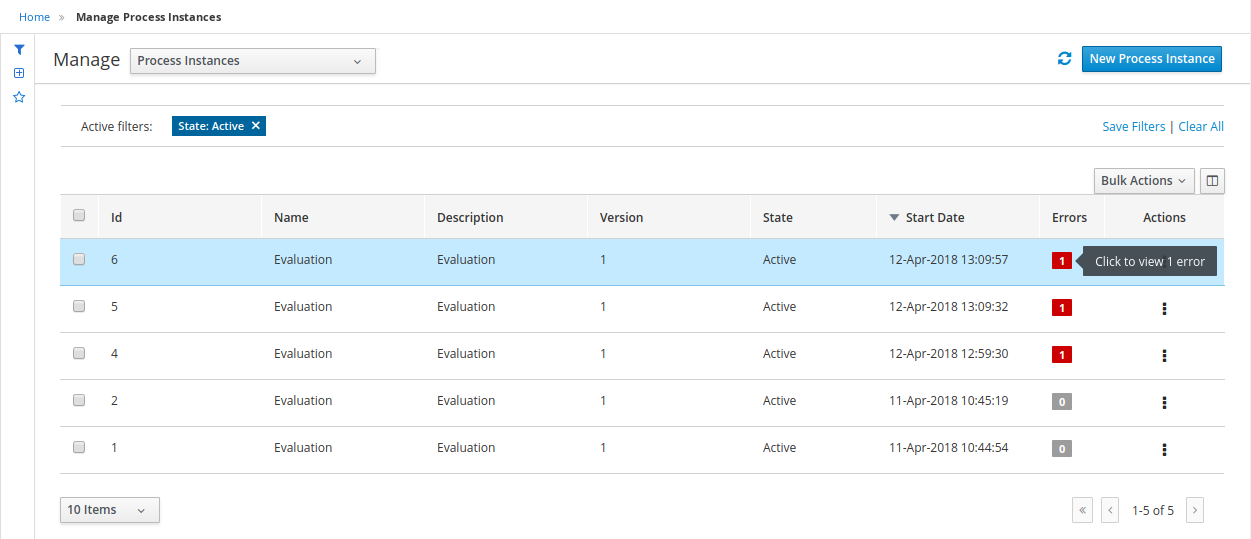

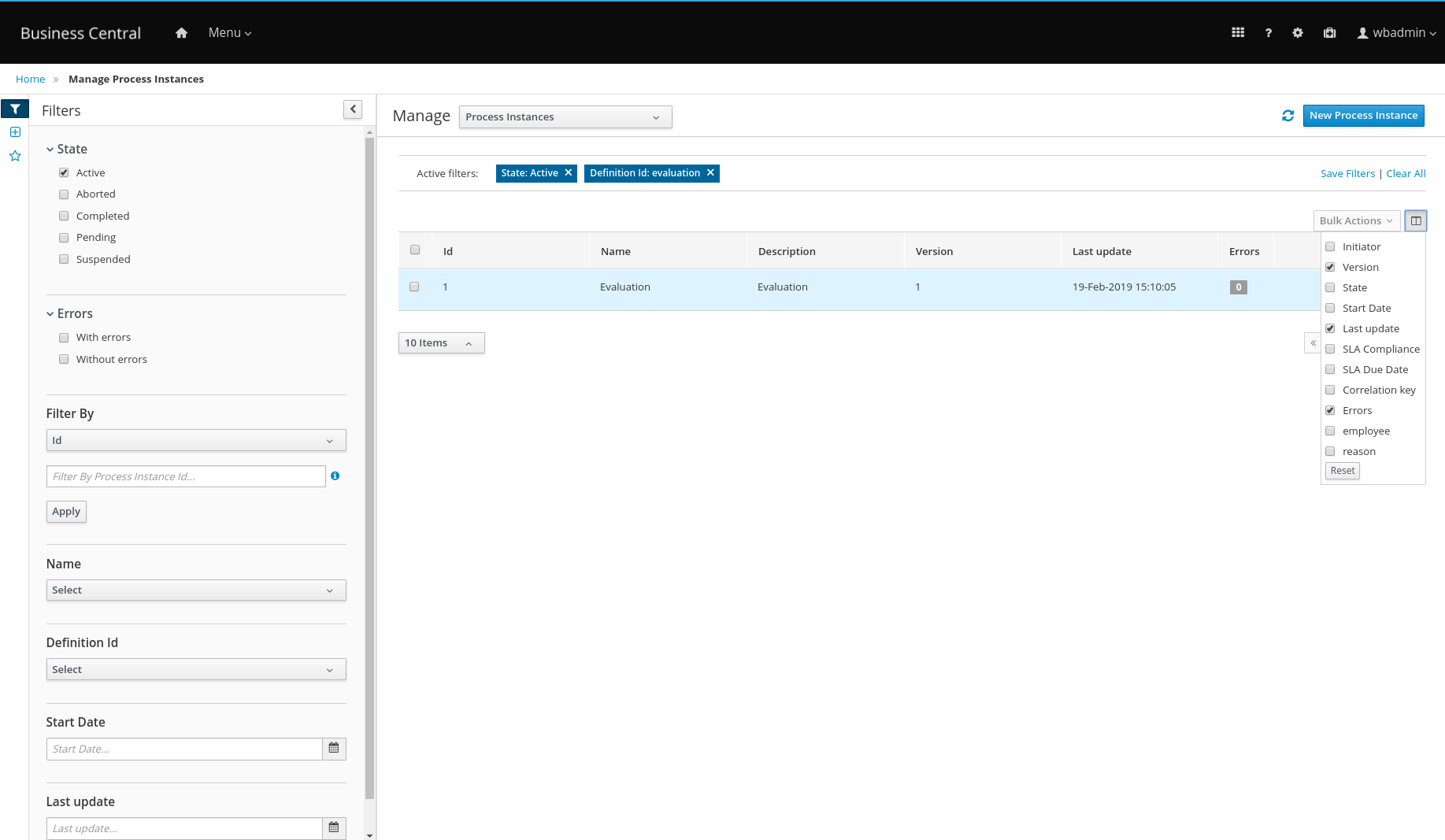

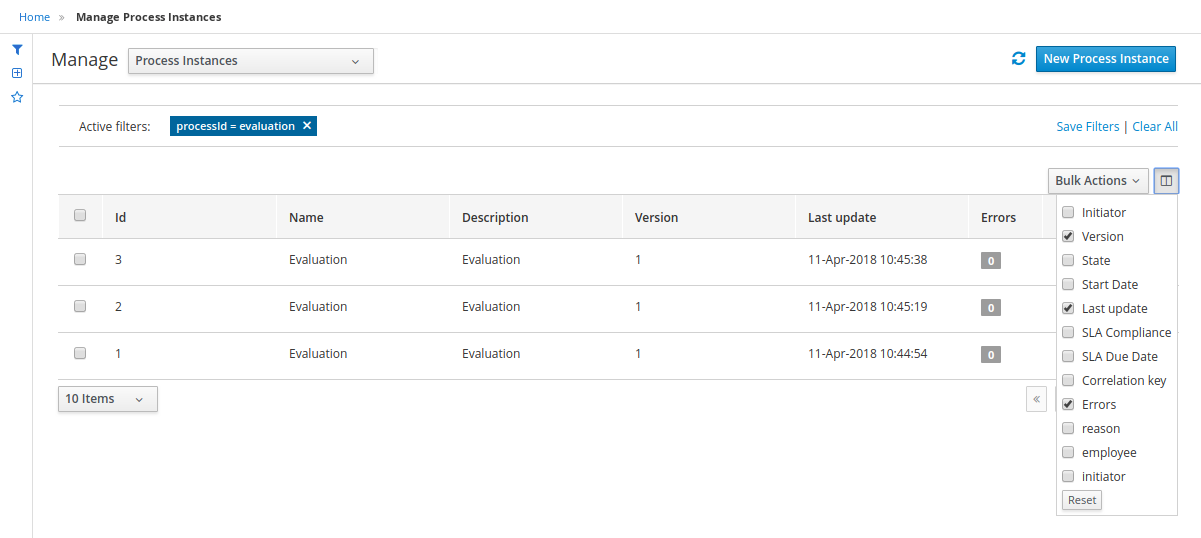

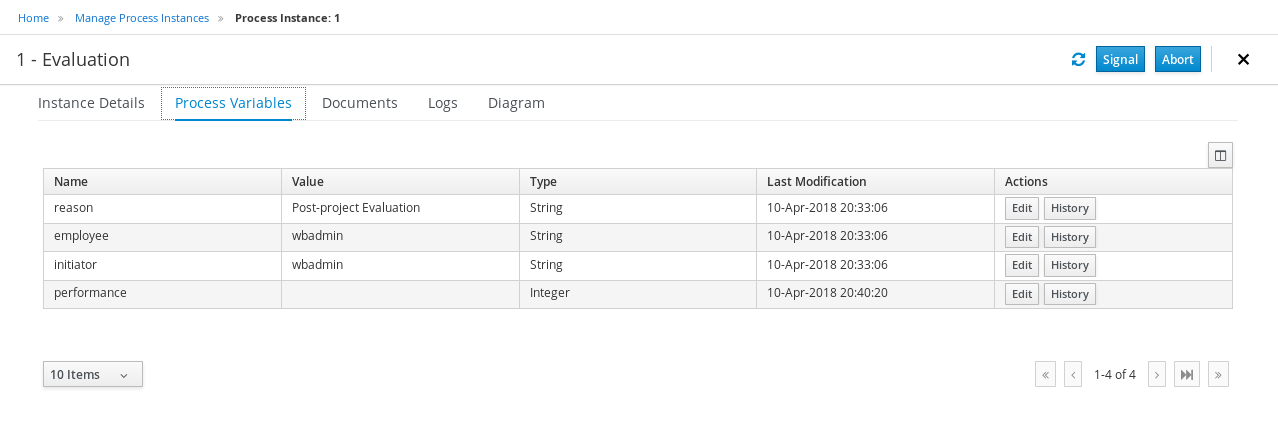

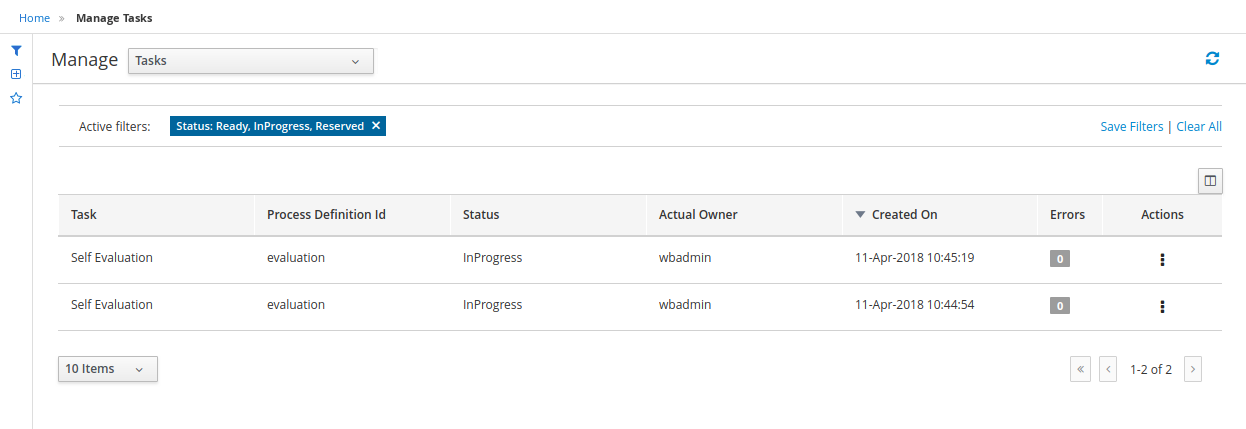

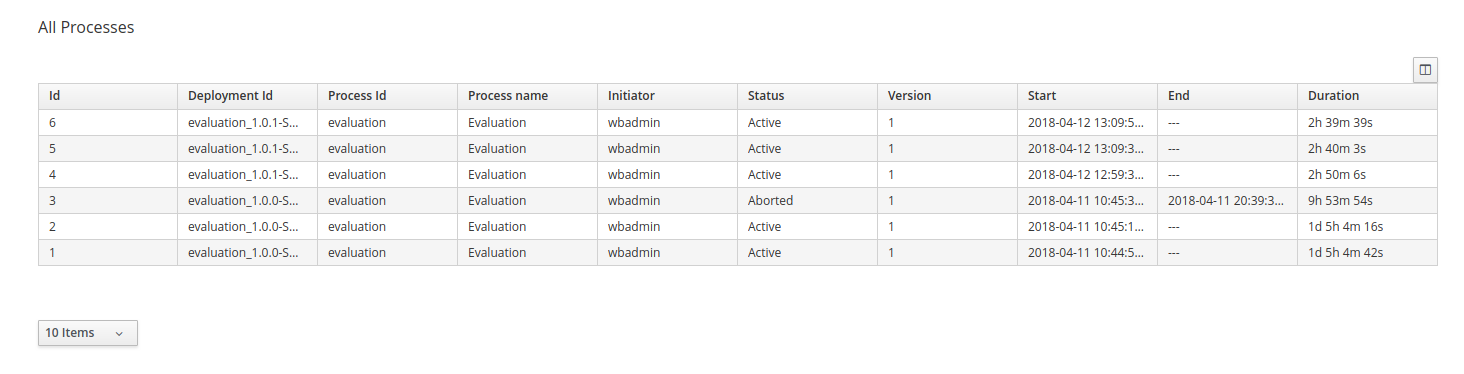

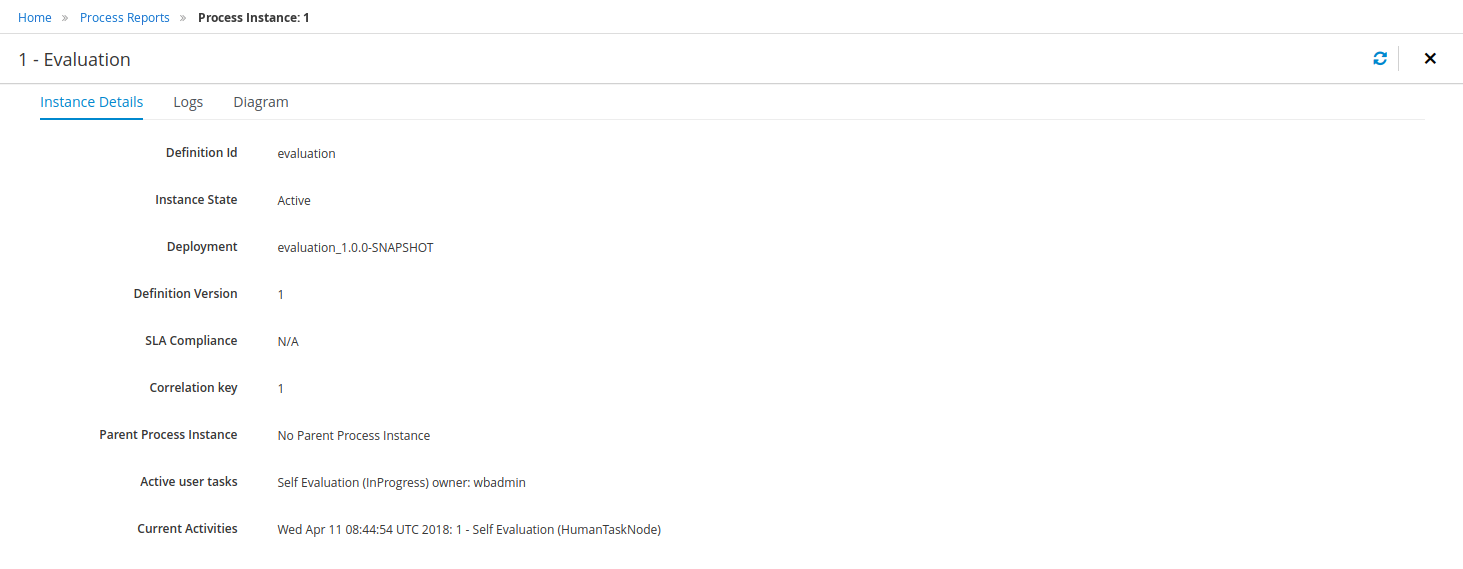

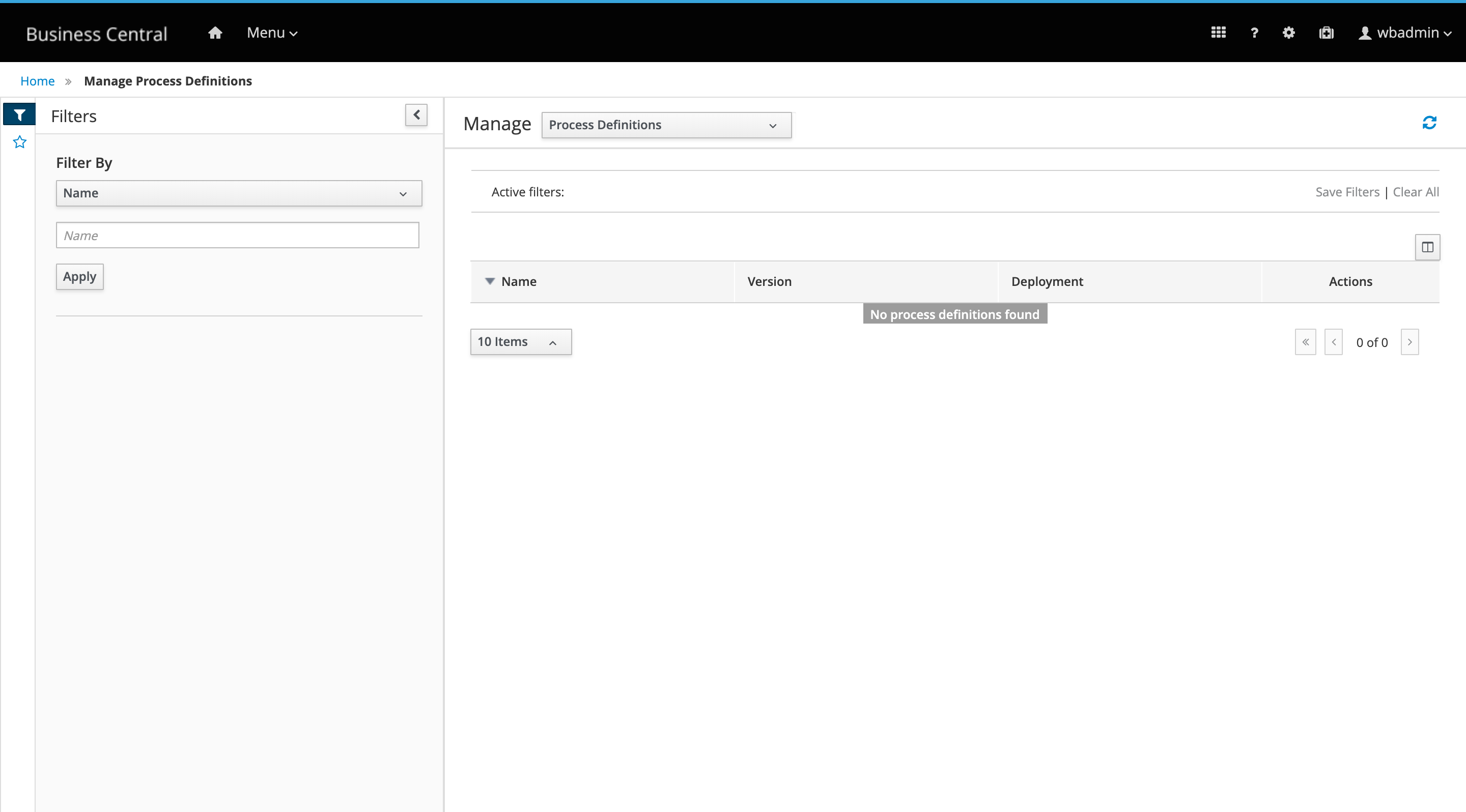

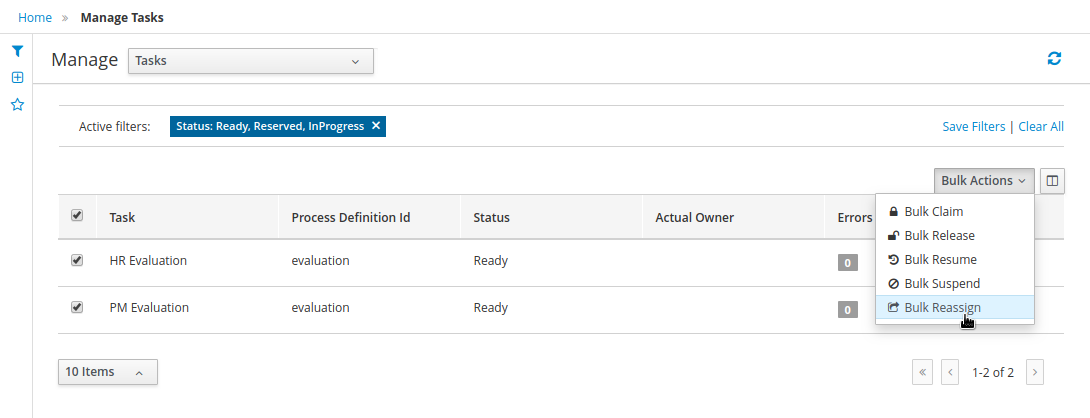

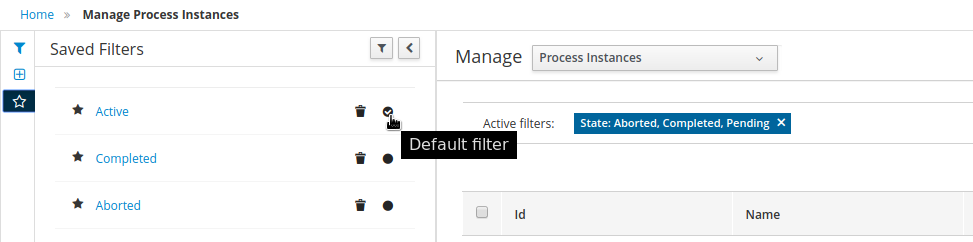

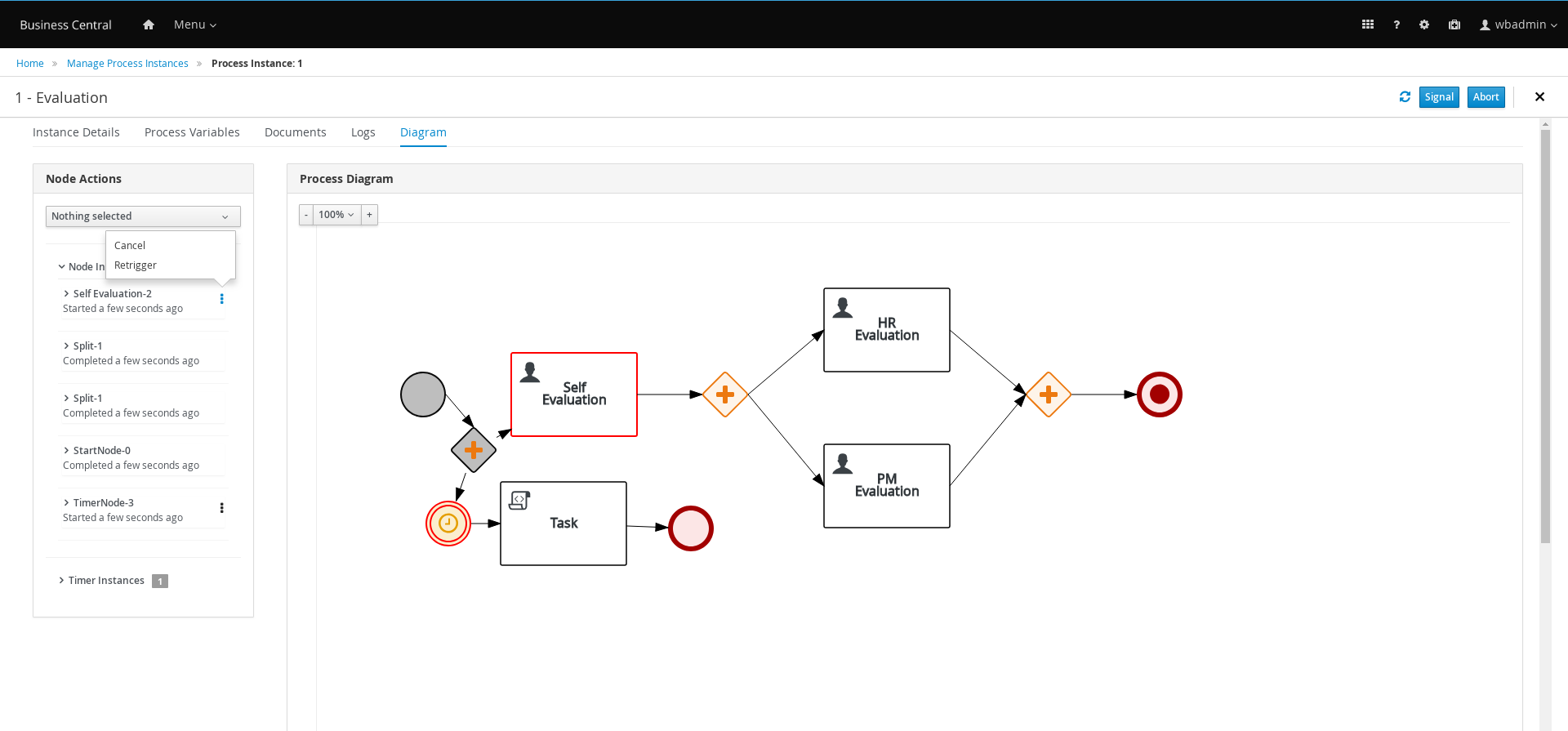

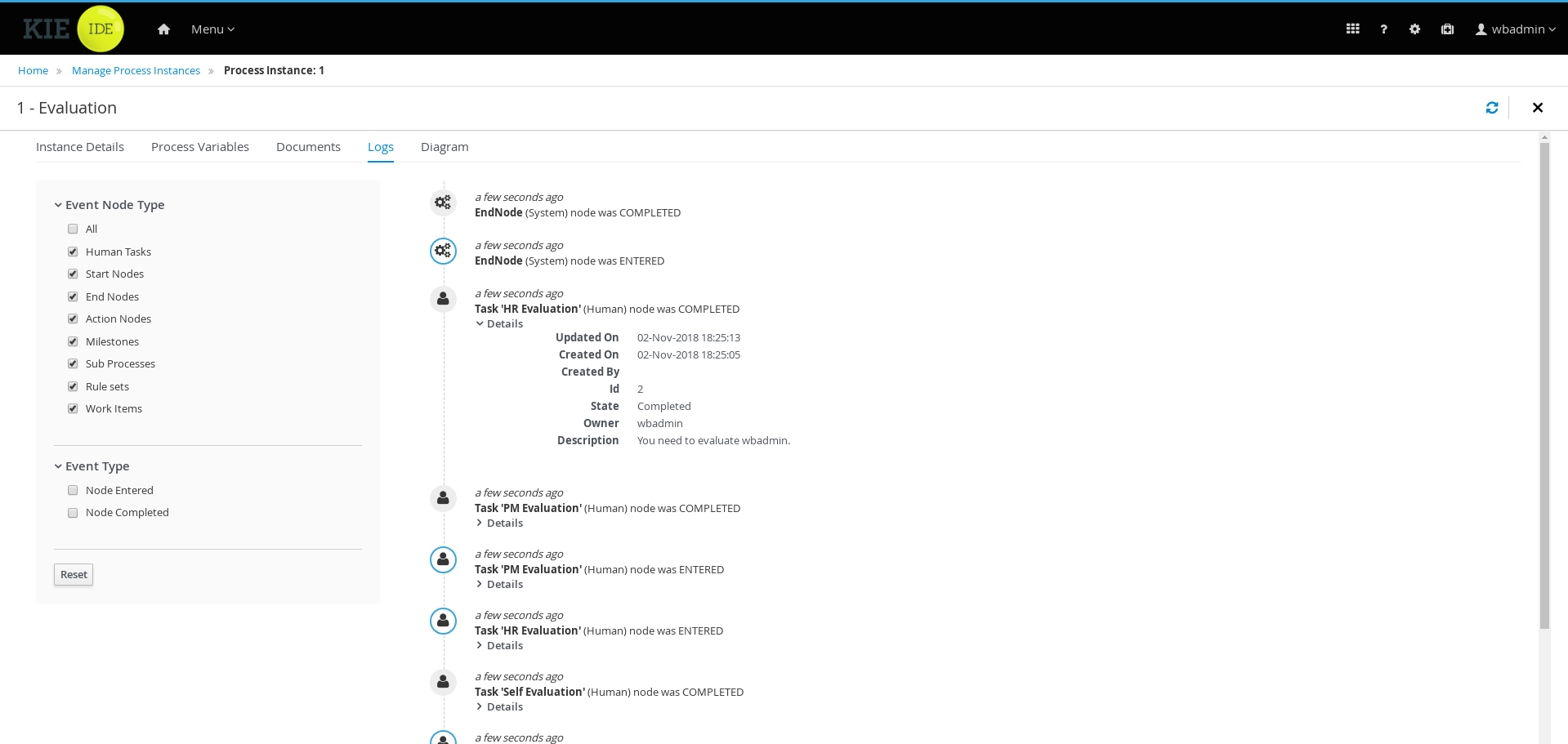

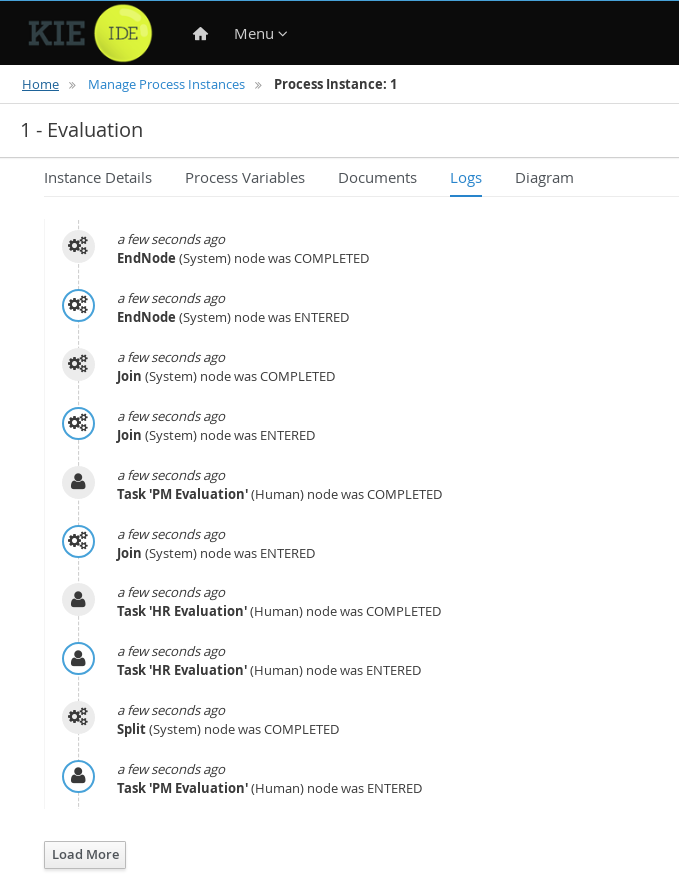

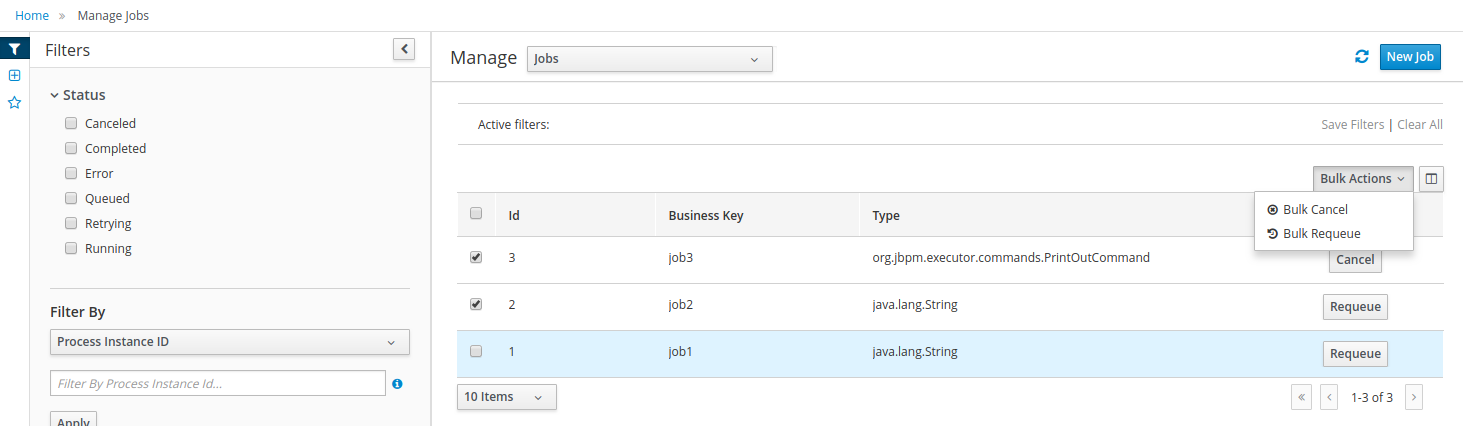

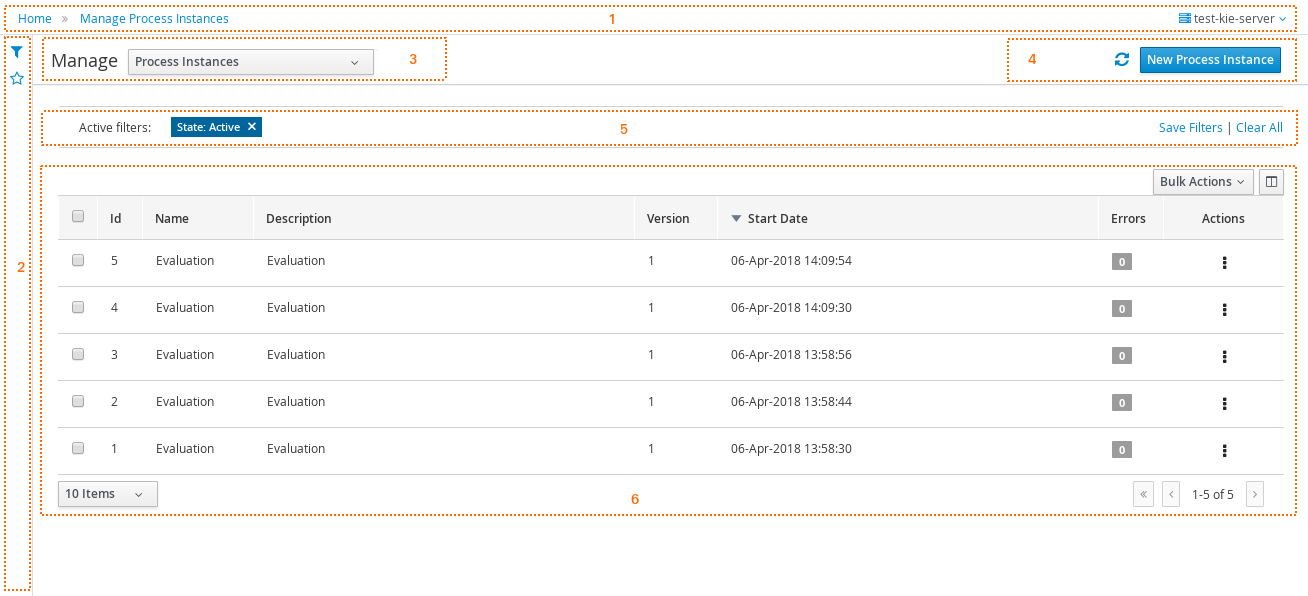

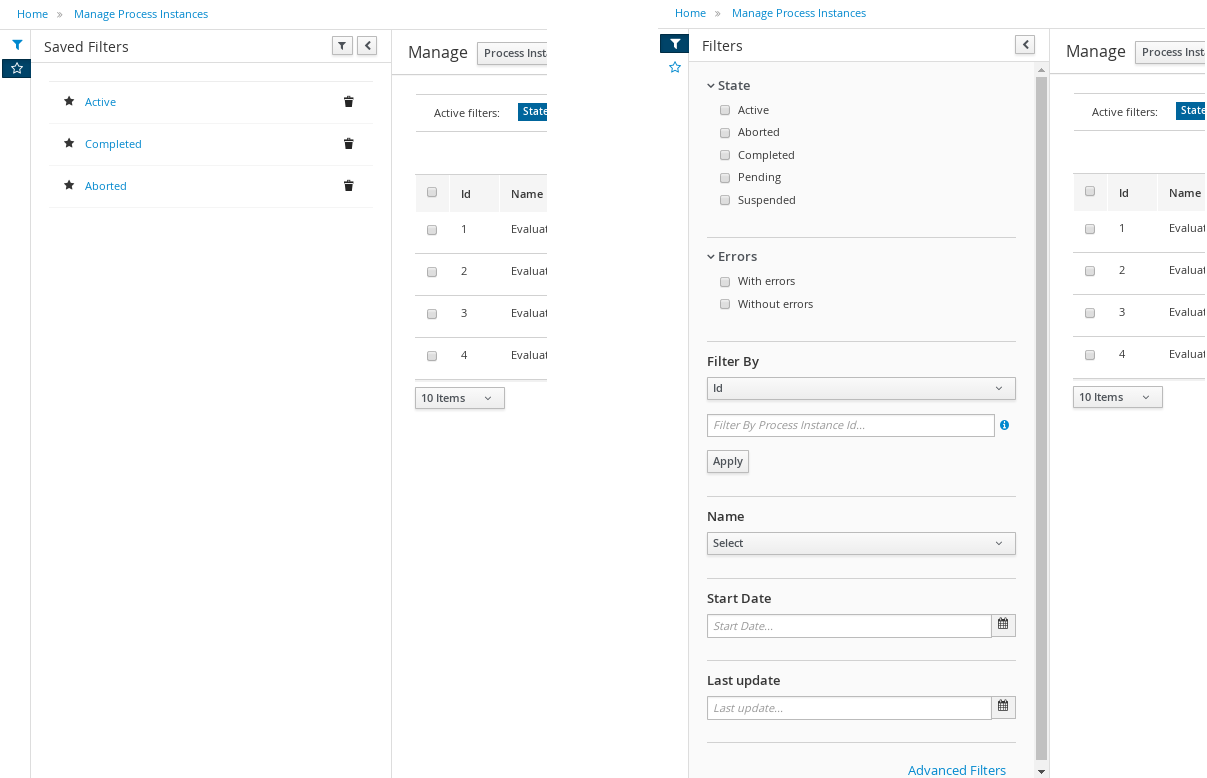

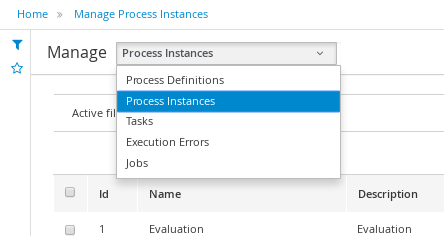

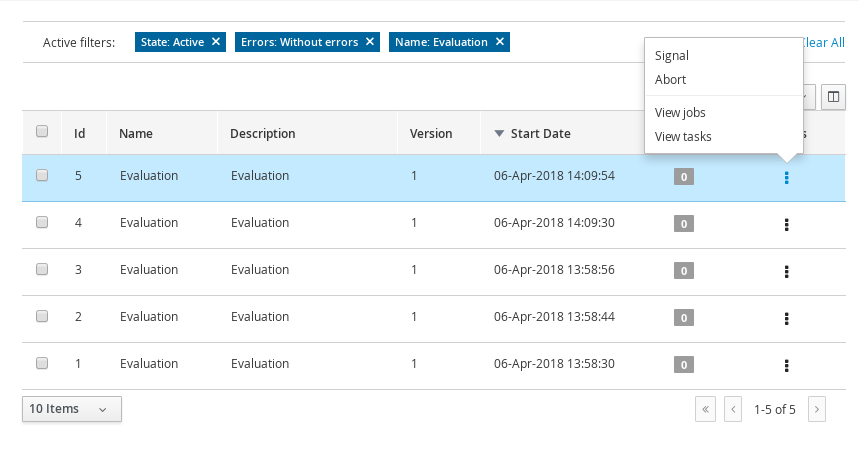

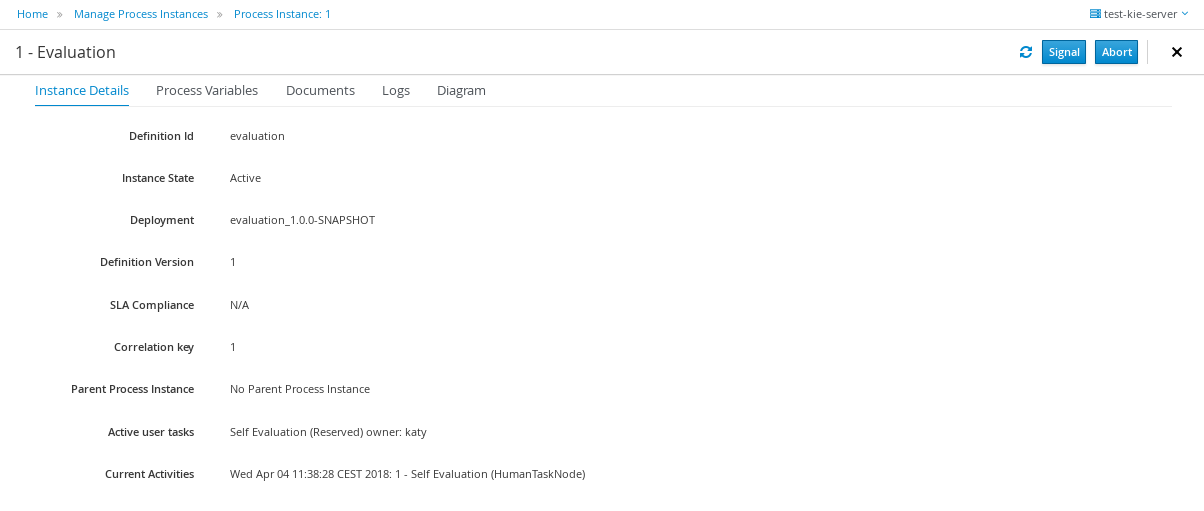

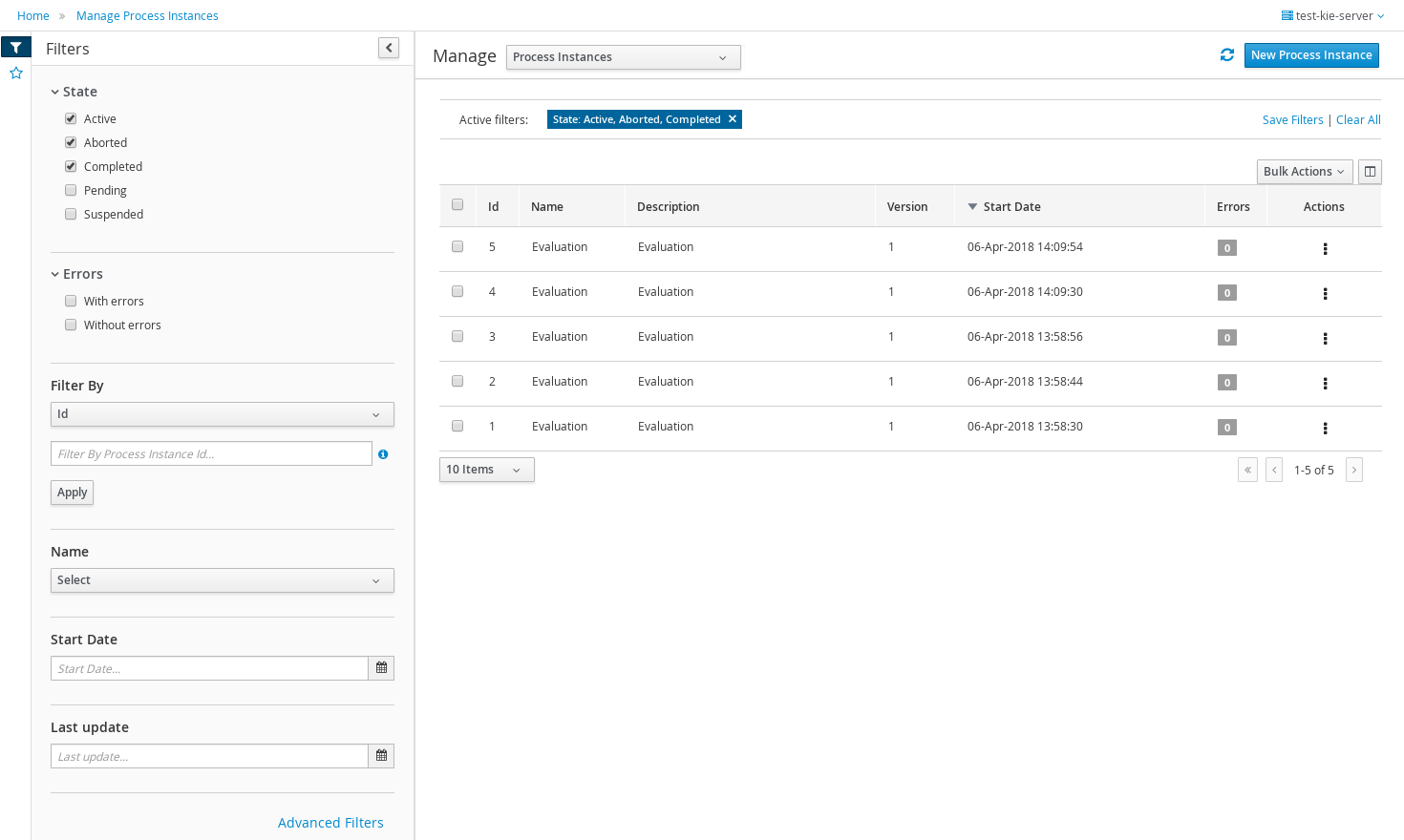

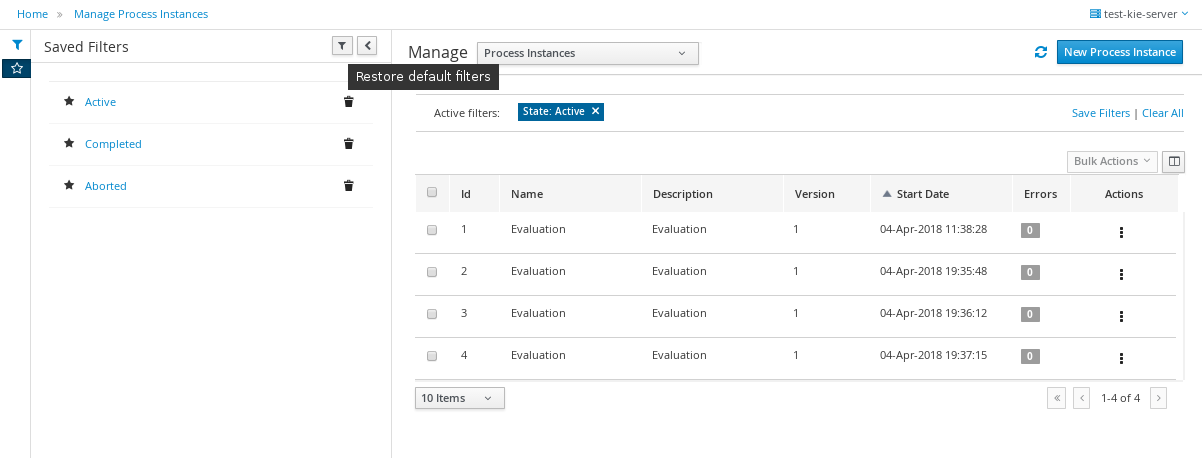

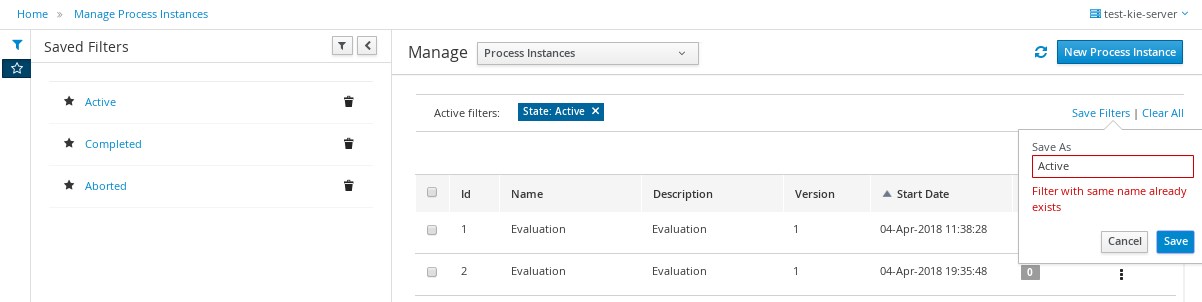

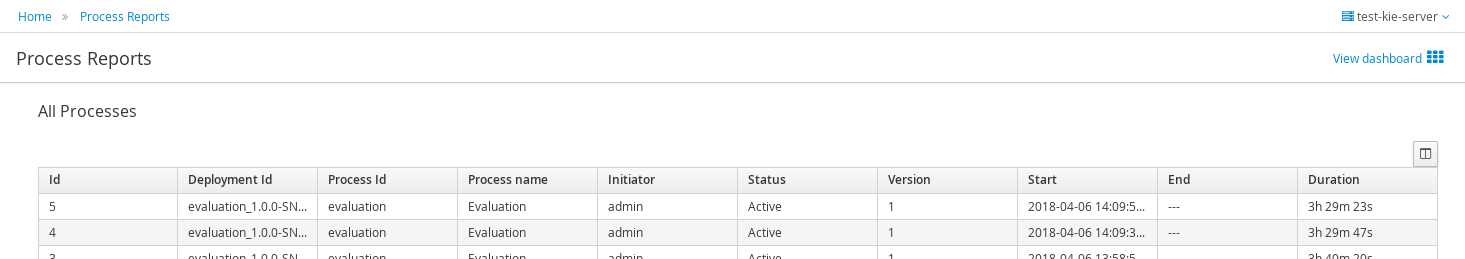

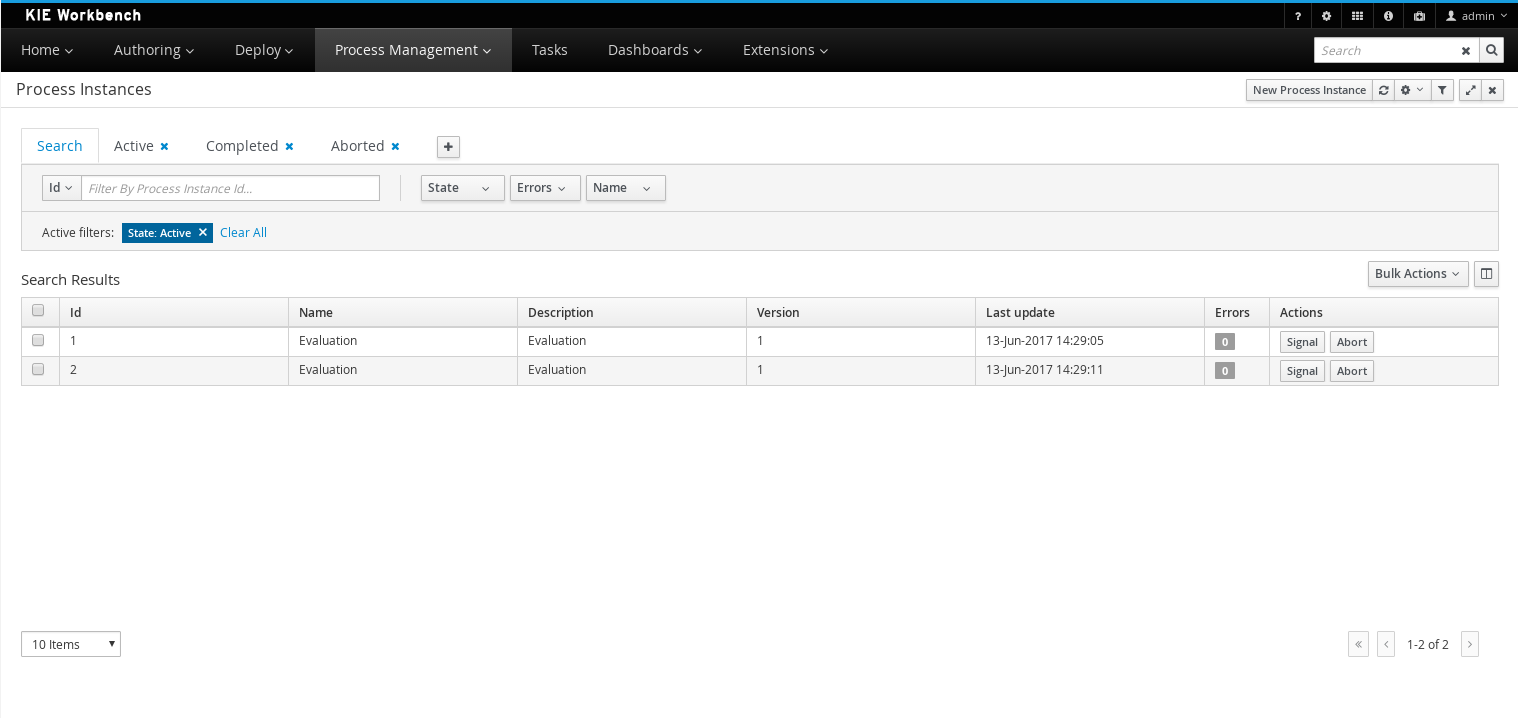

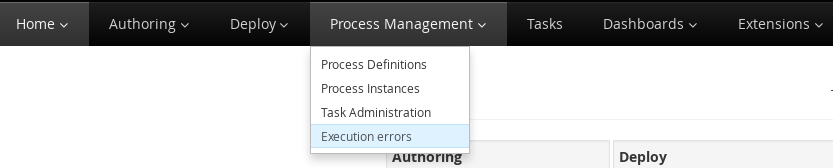

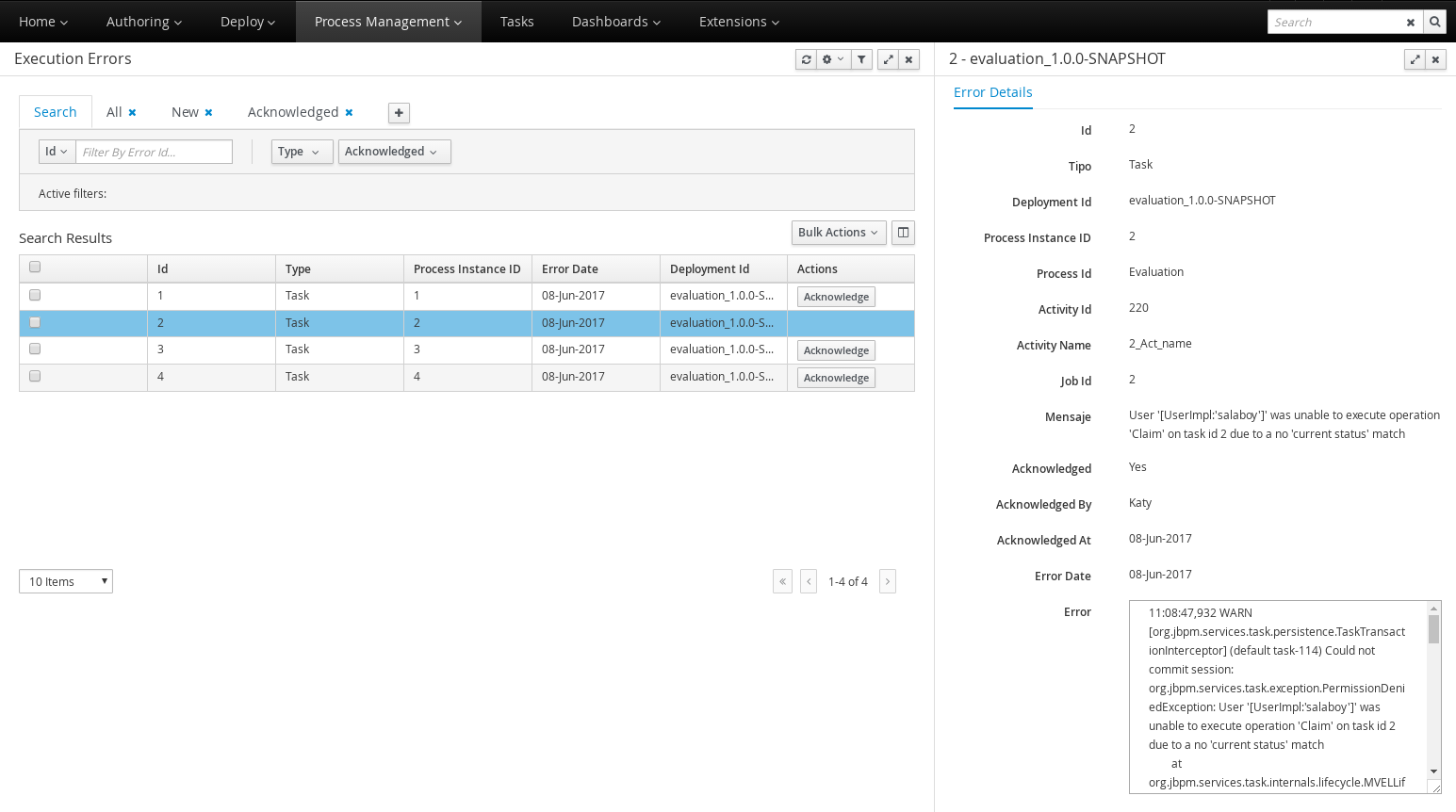

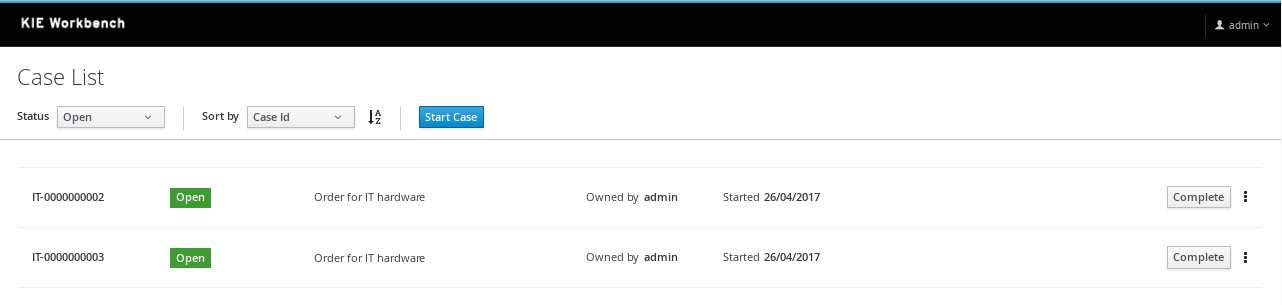

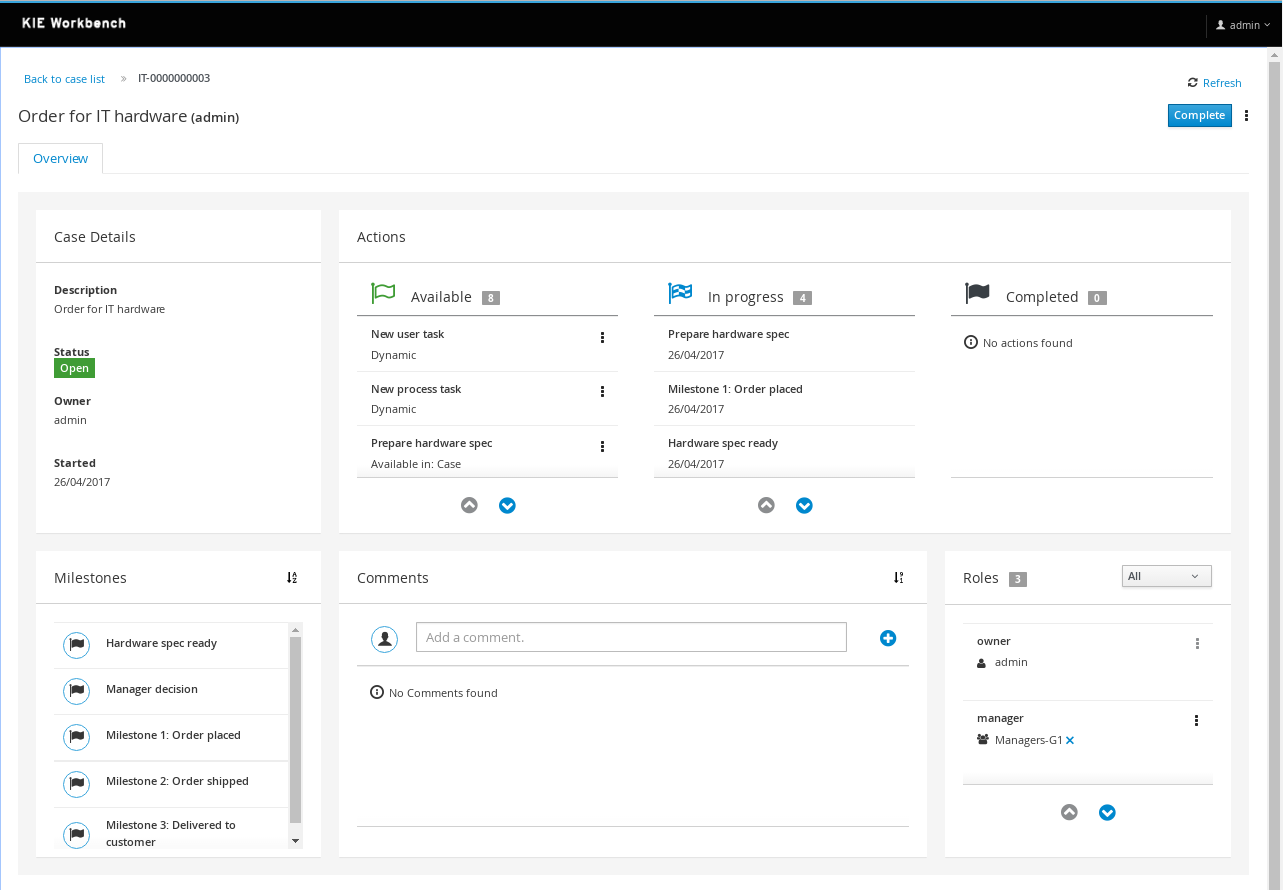

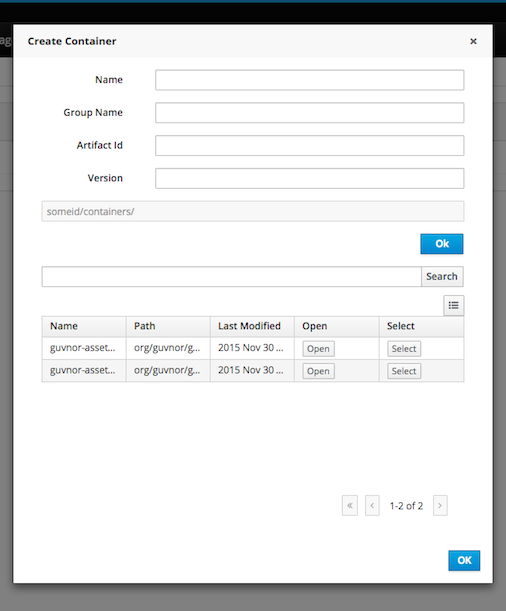

1.4.3. Process Management

Business processes and all their related runtime information can be managed through Business Central. This part is targeted towards process administrators, and its main features include:

-

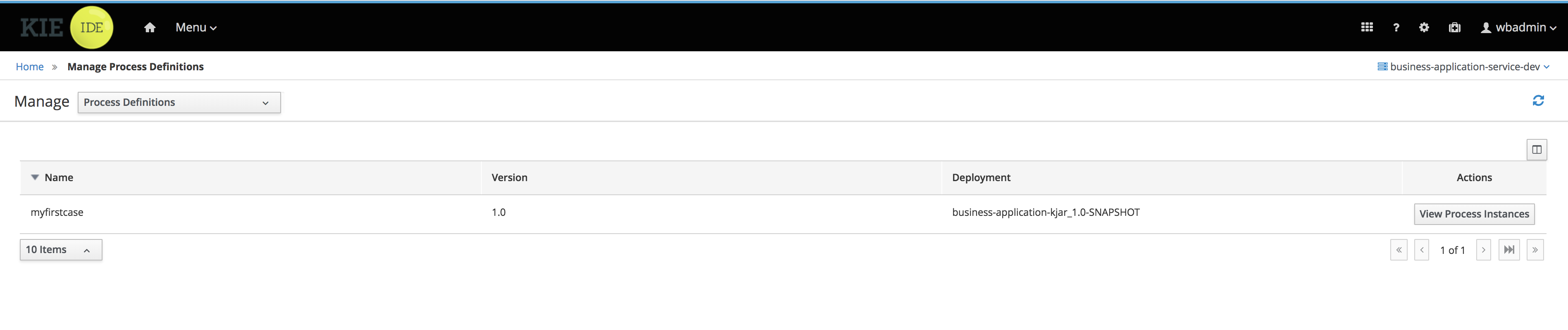

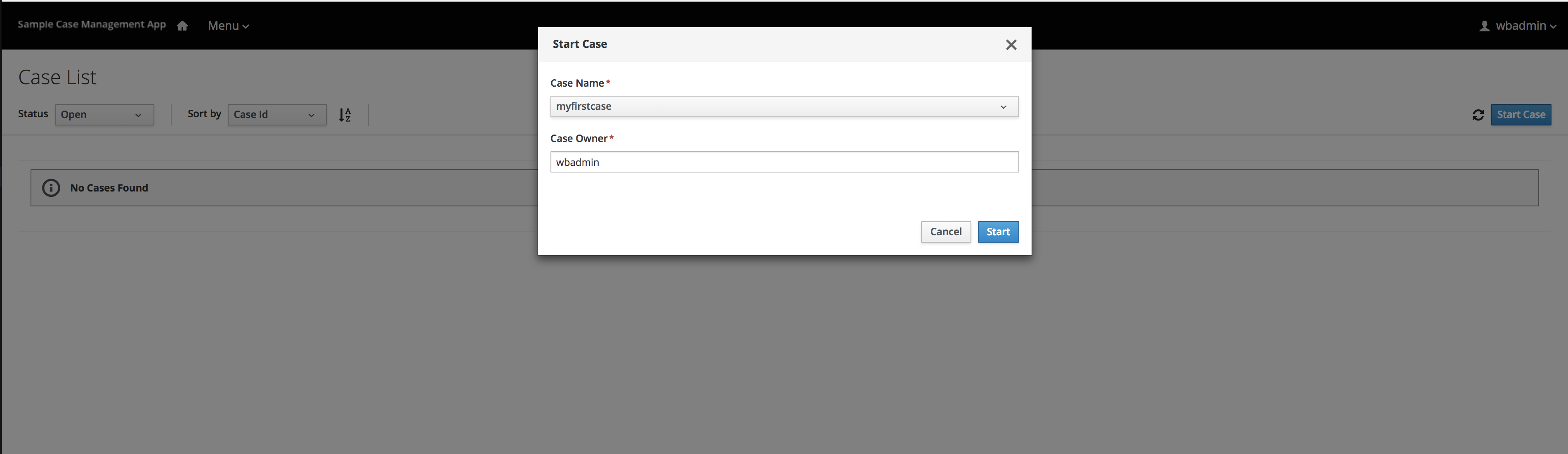

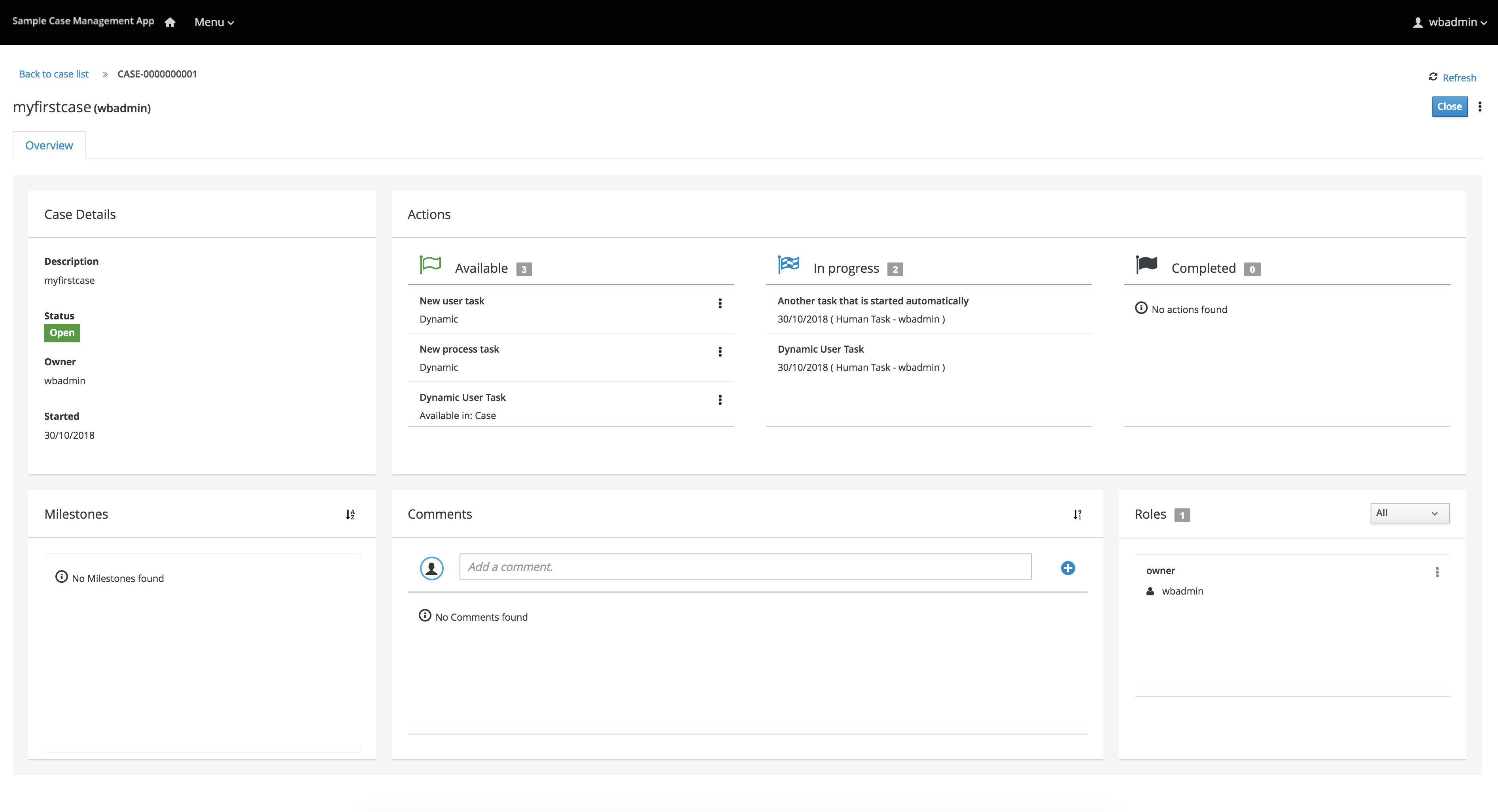

Process definitions management: view the entire list of process currently deployed into a Kie Server and its details.

-

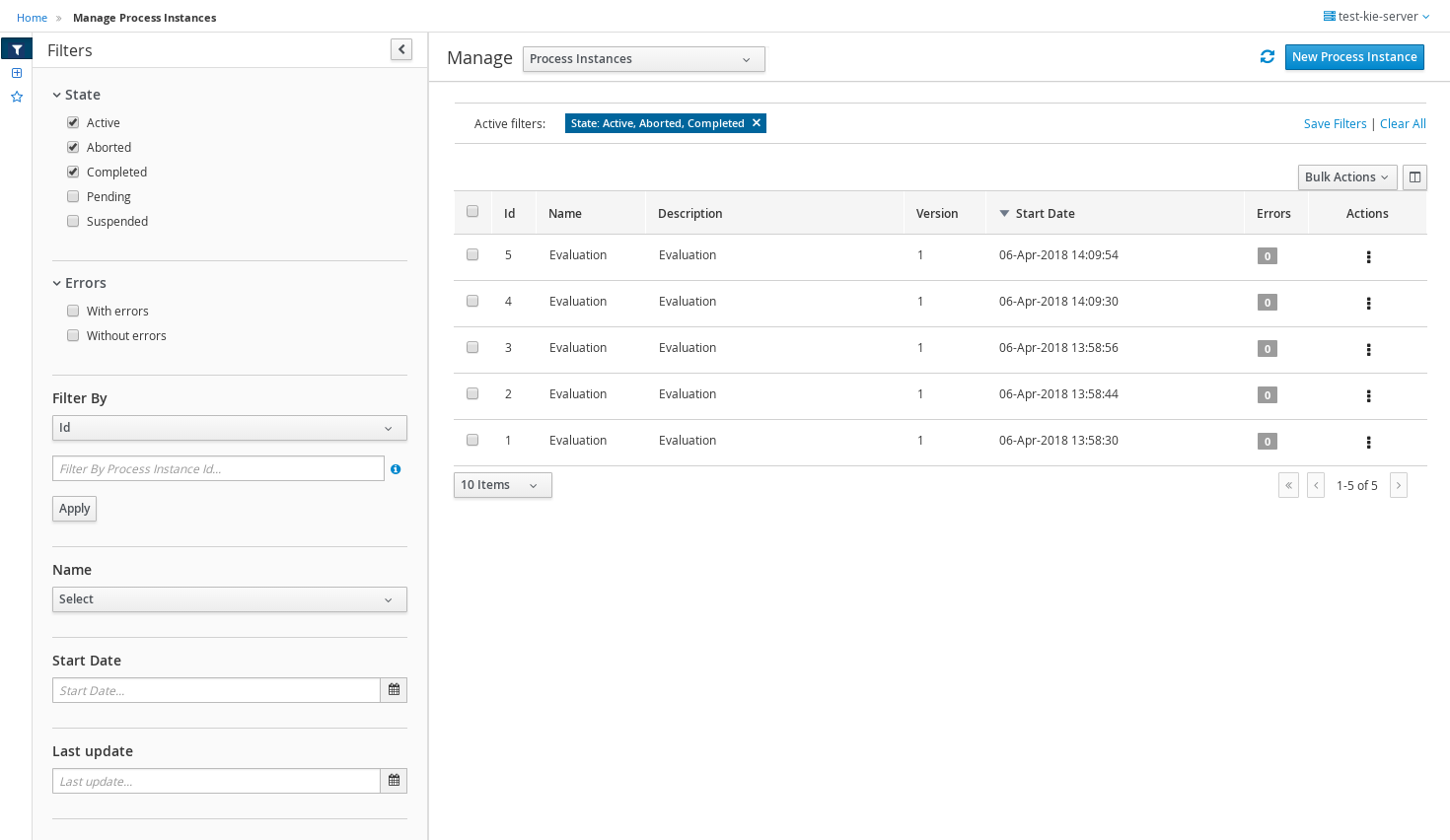

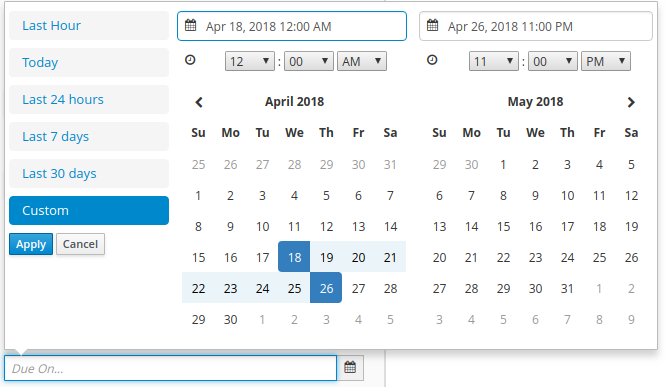

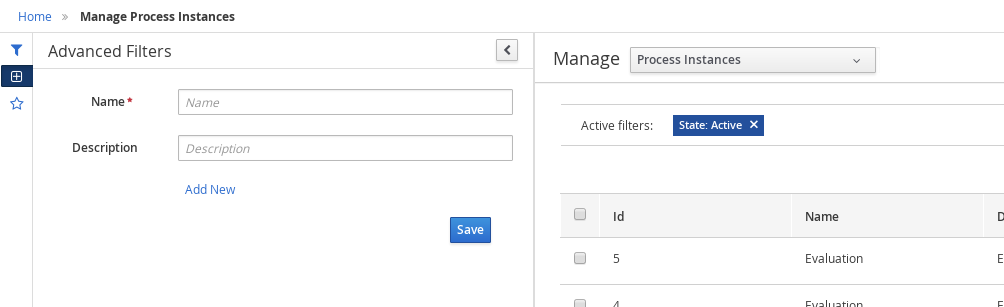

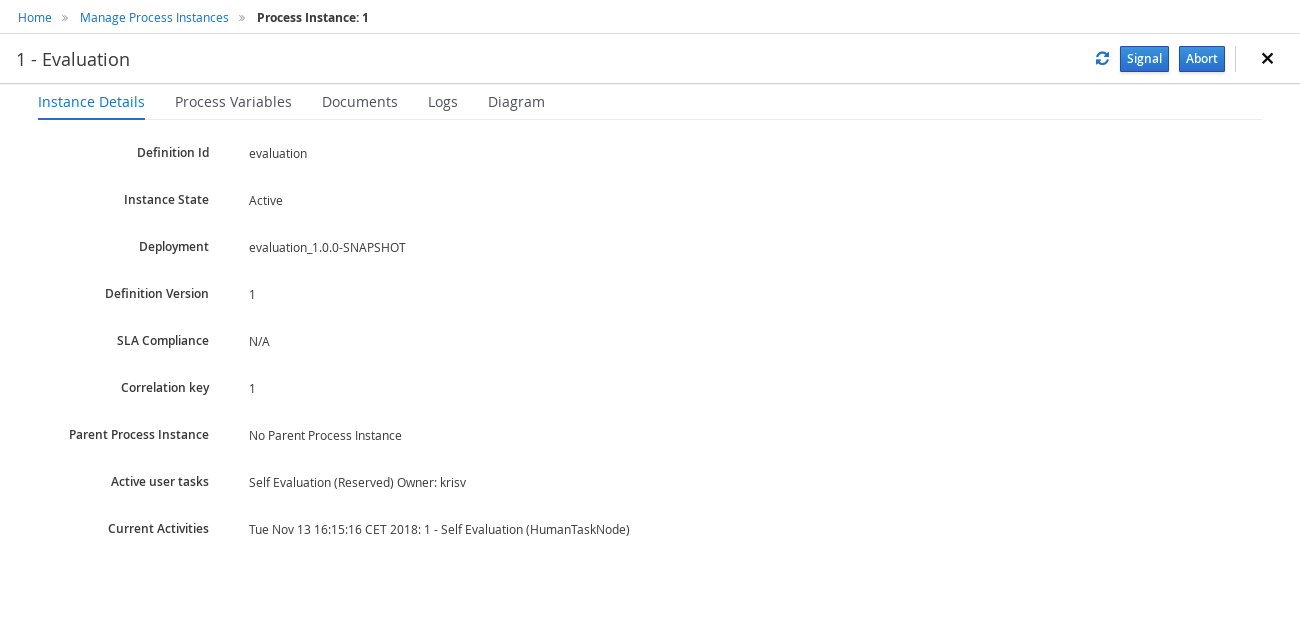

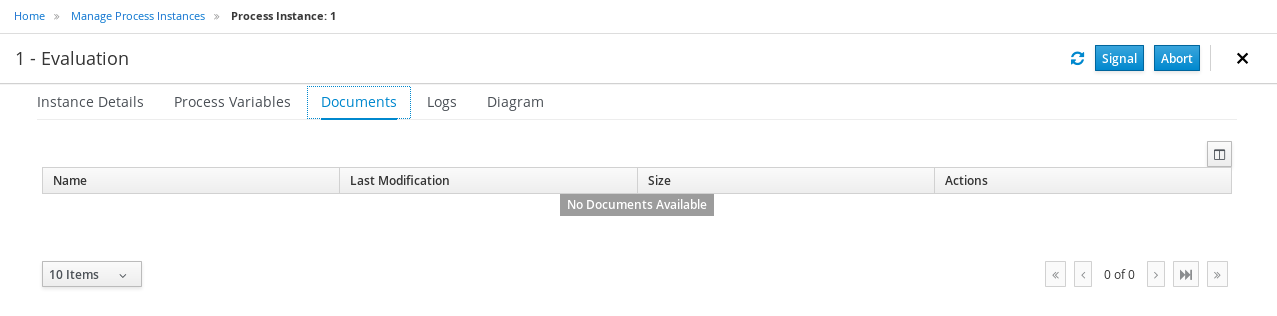

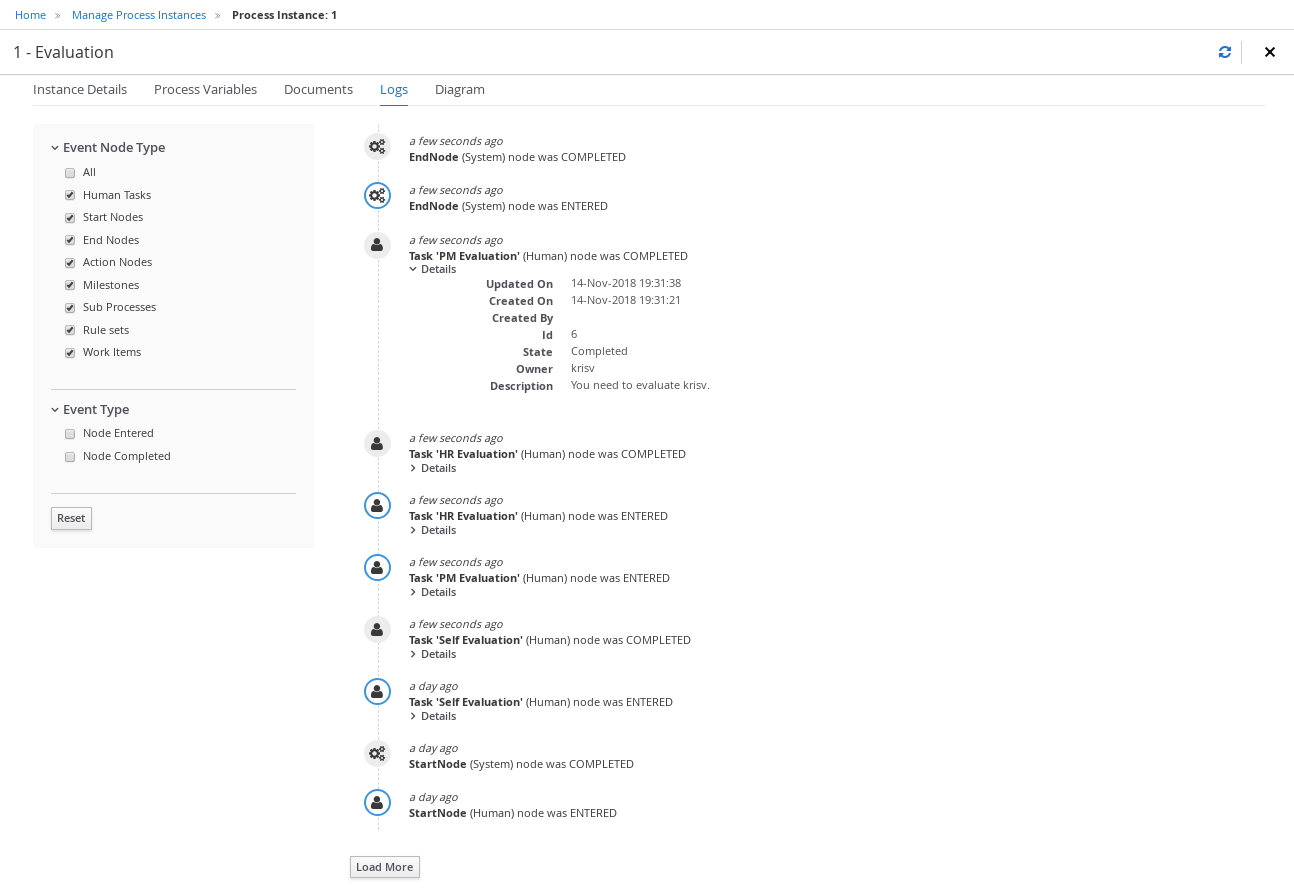

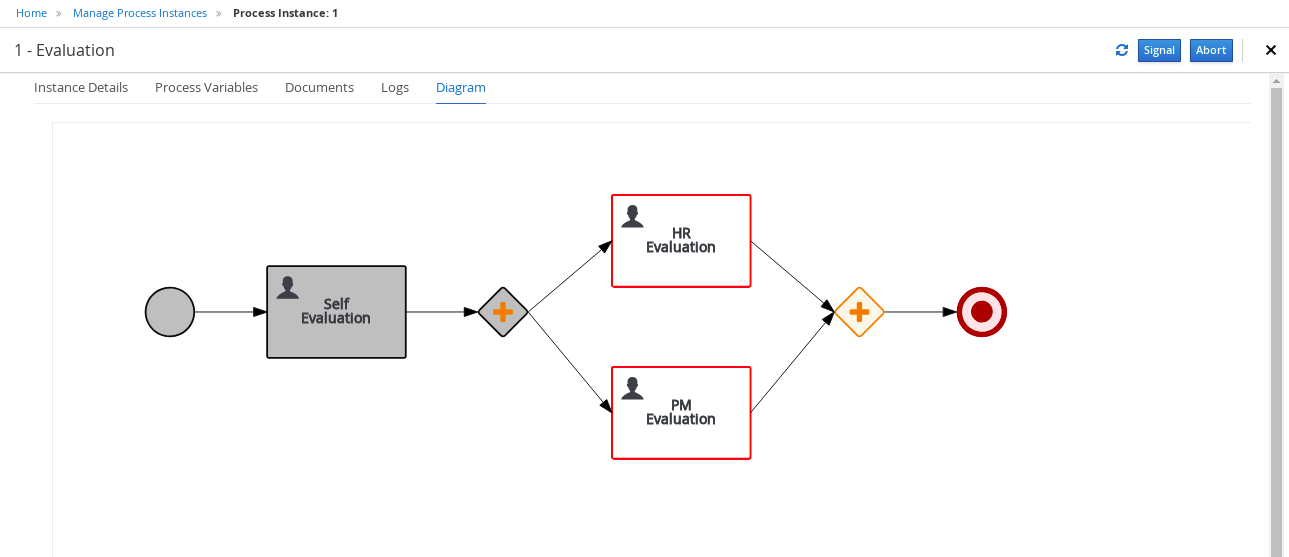

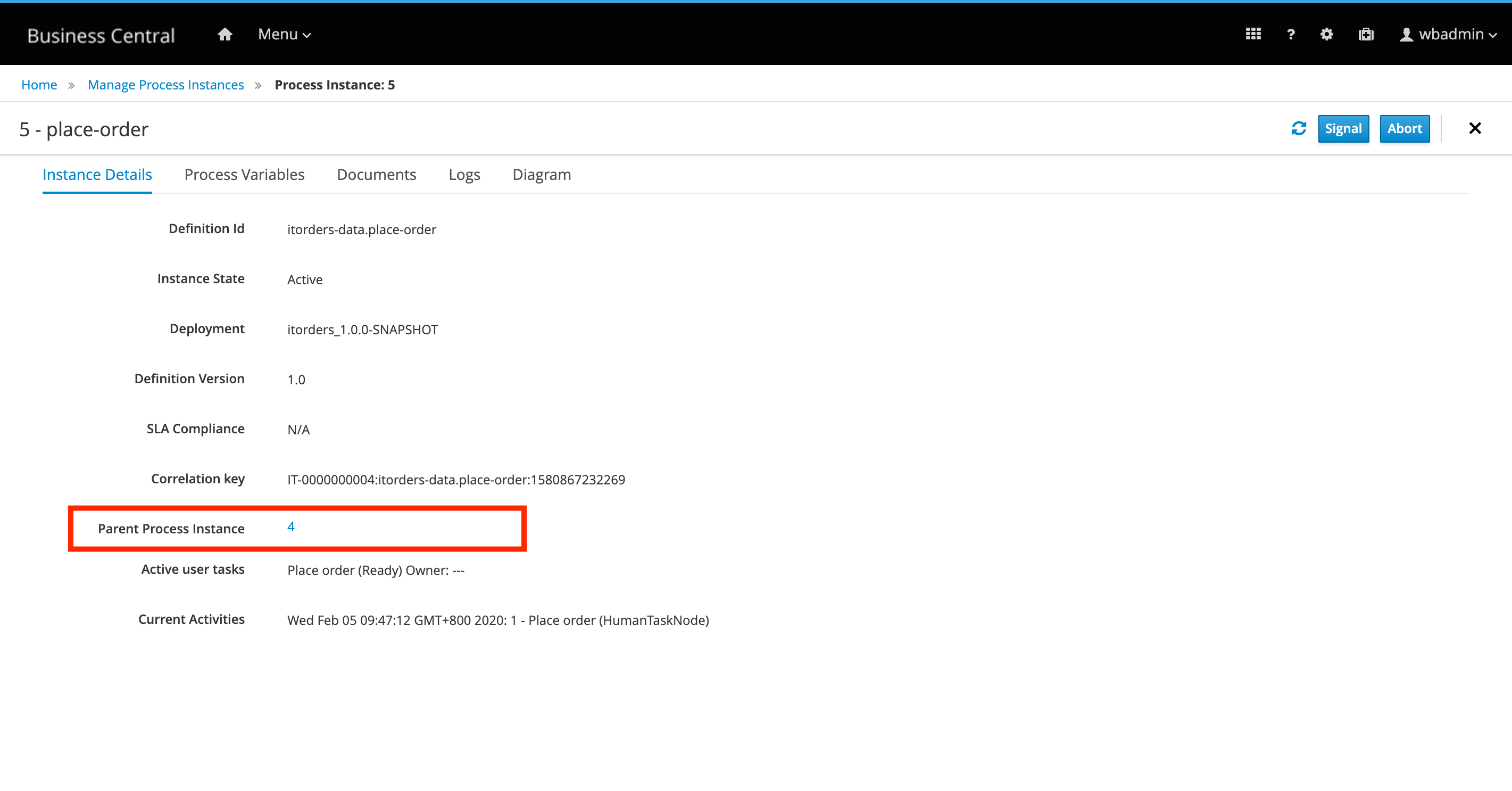

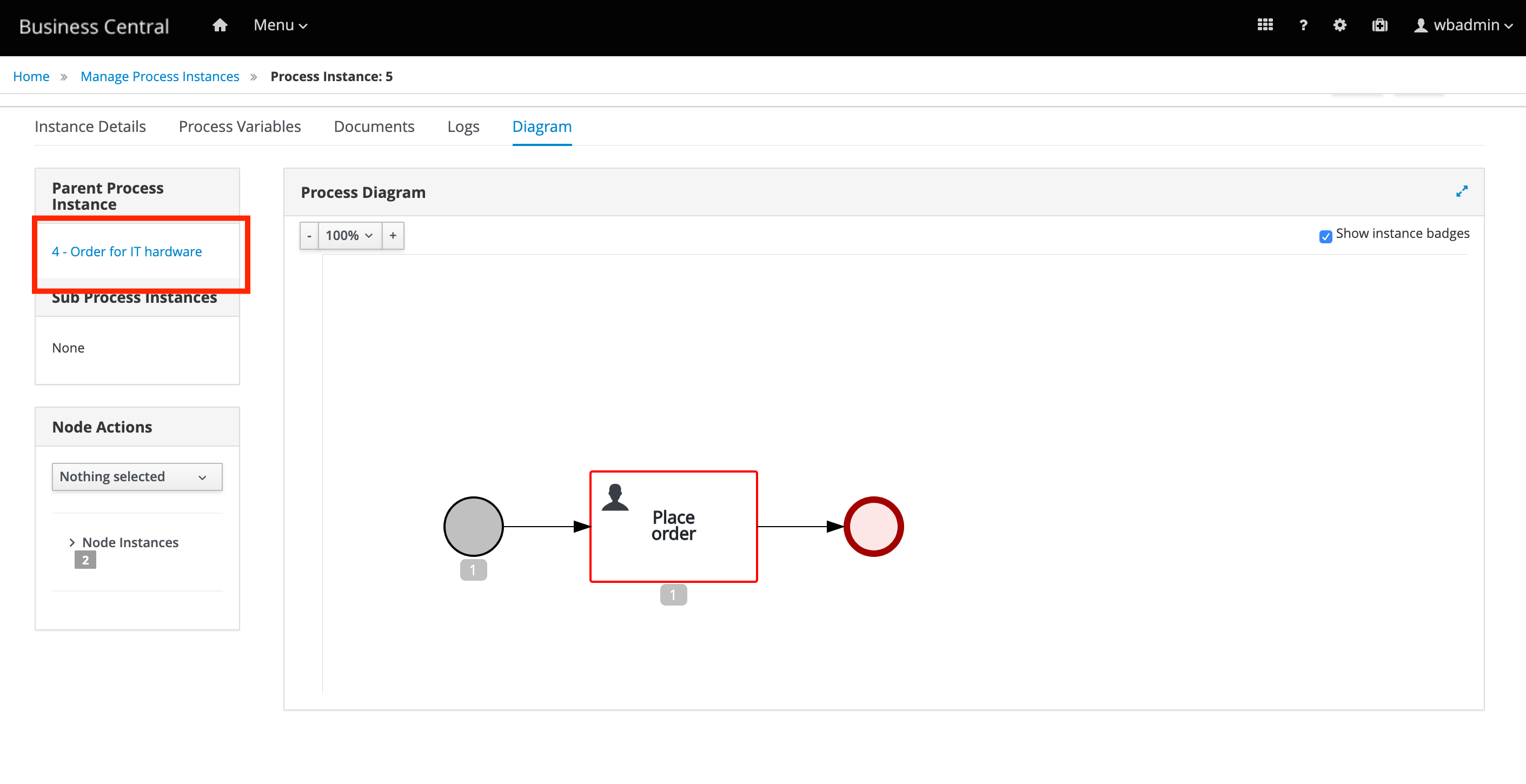

Process instances management: the ability to start new process instances, get a filtered list of process instances, visually inspect the state of a specific process instances.

-

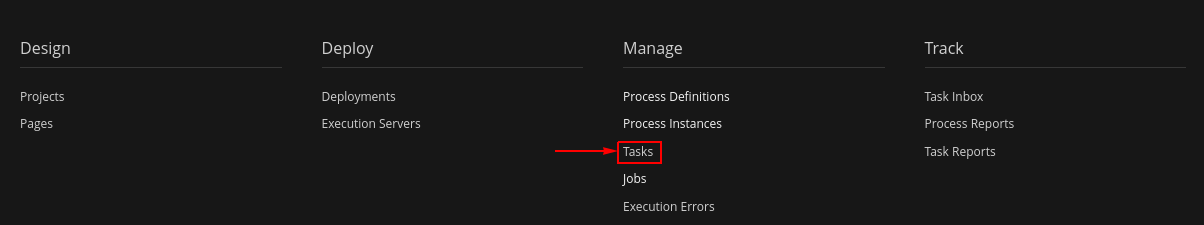

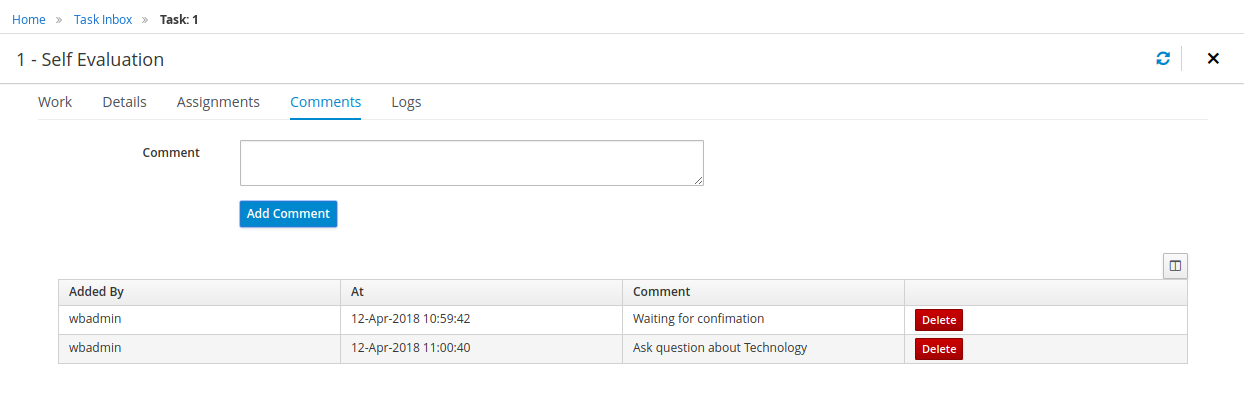

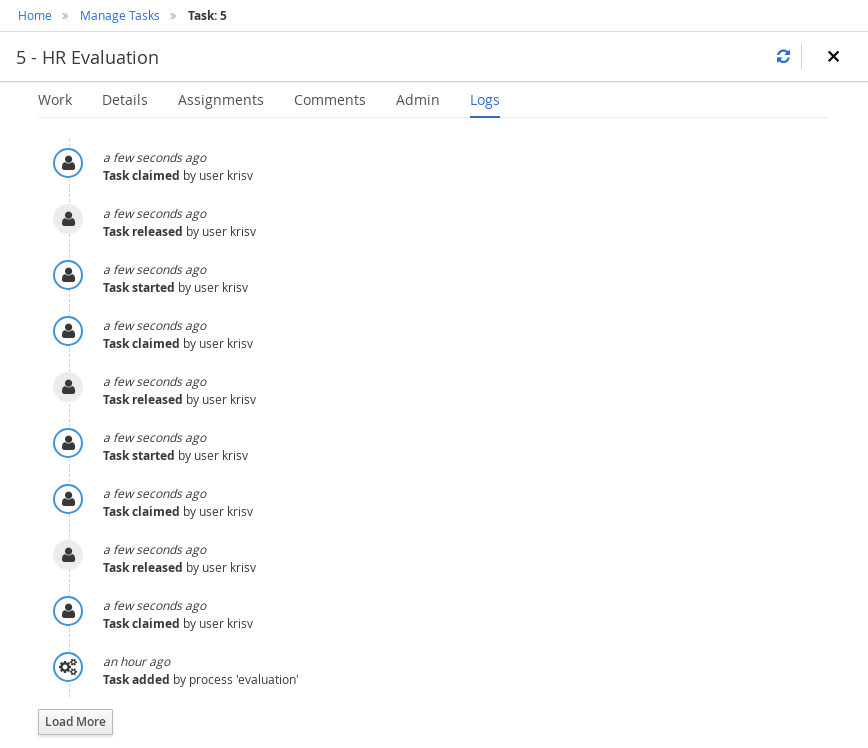

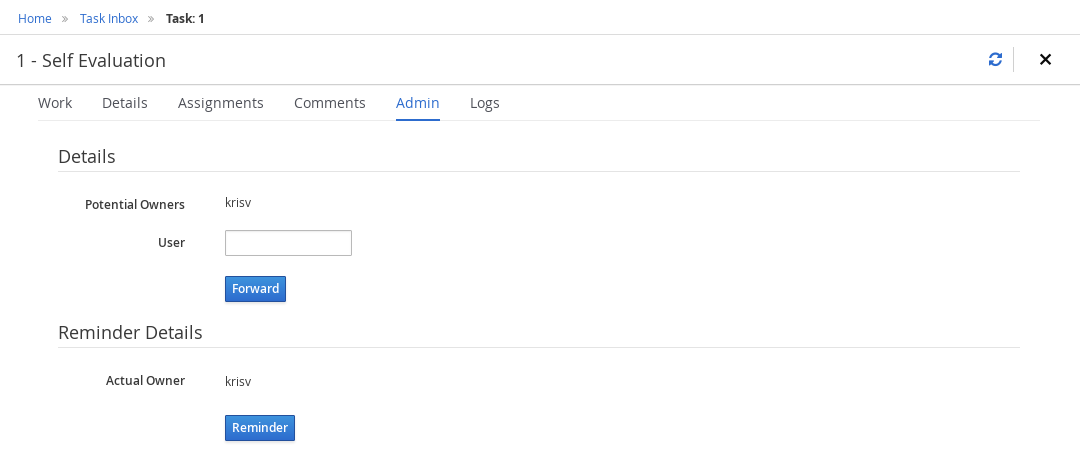

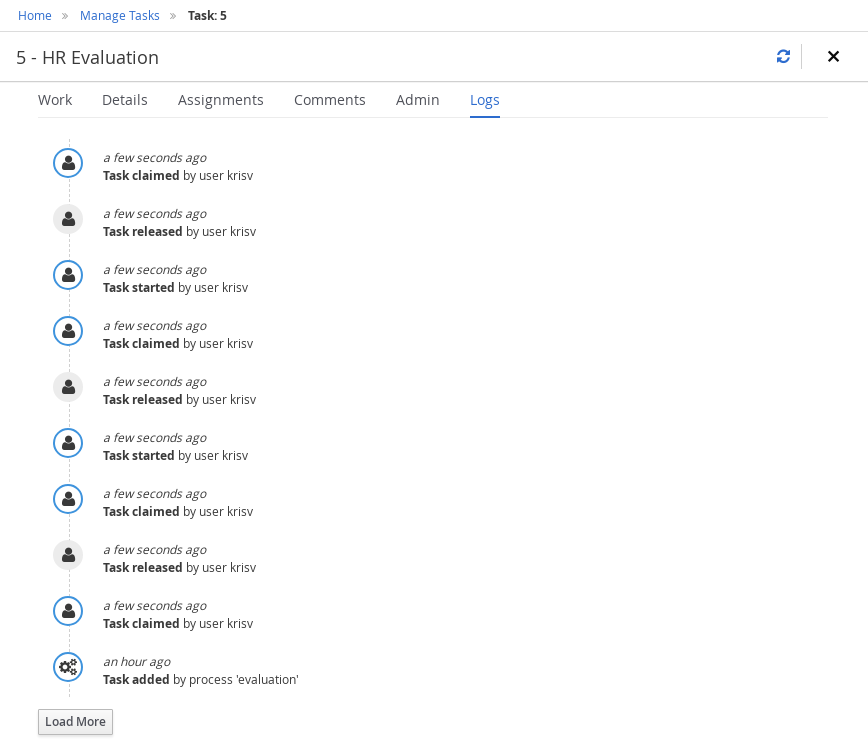

Human tasks management: being able to get a list of all tasks, view details such as current assignees, comments, activity logs as well as send reminders and forward tasks to different users and more.

-

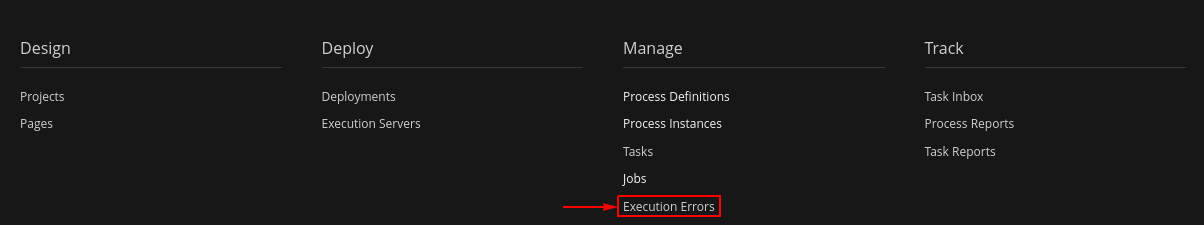

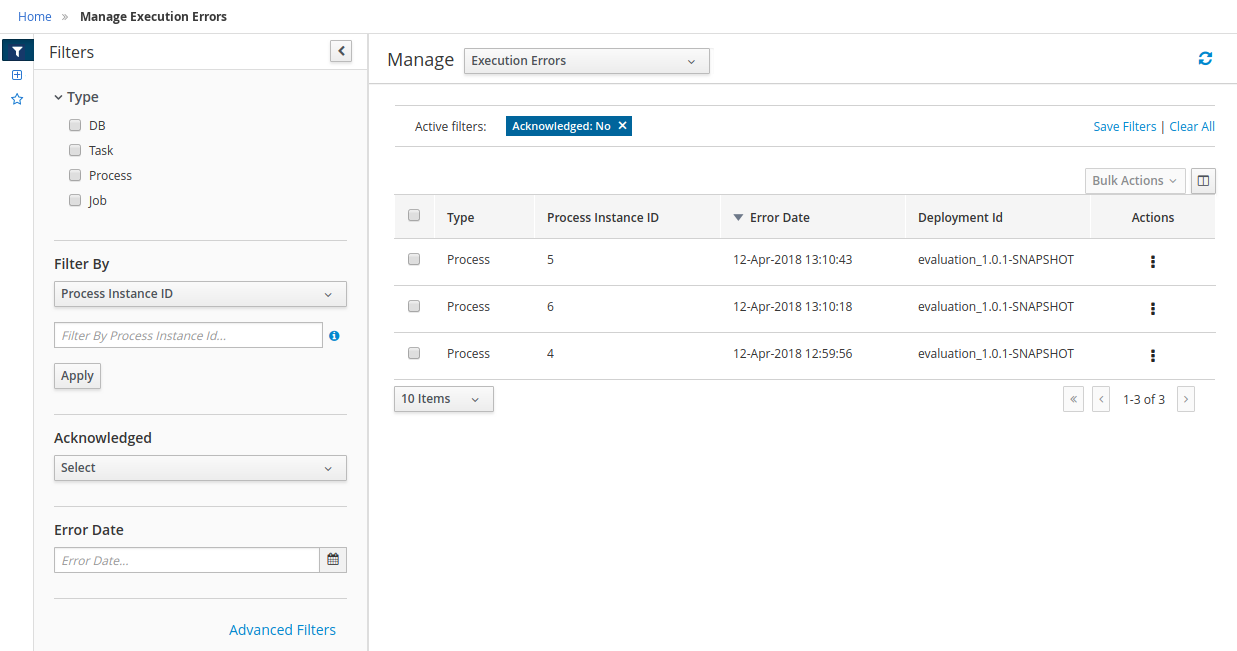

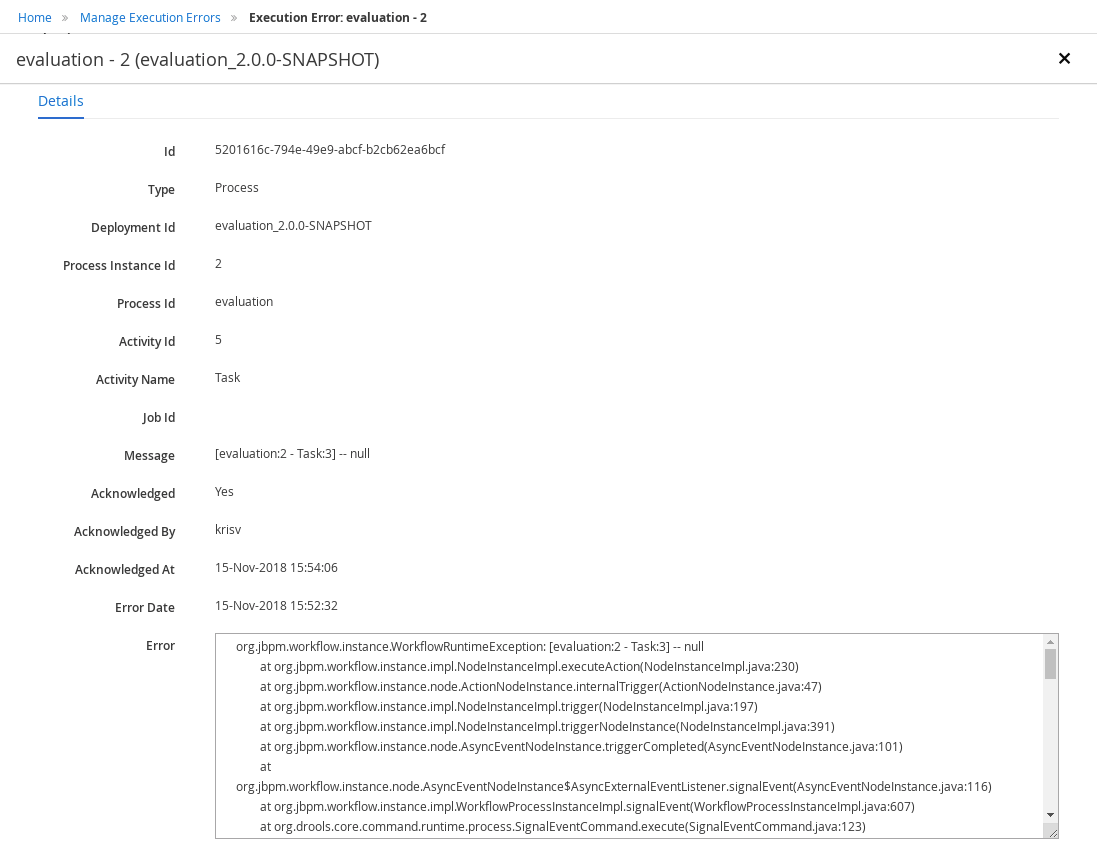

Execution Errors management: allows administrators to view any execution error reported in the Kie Server instance, inspect its details including stacktrace and perform the error acknowledgement.

-

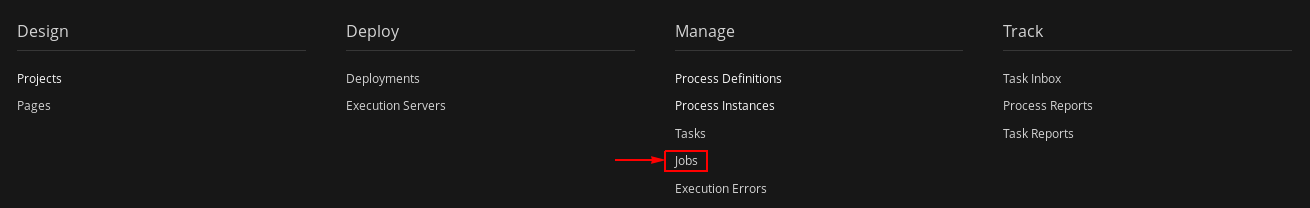

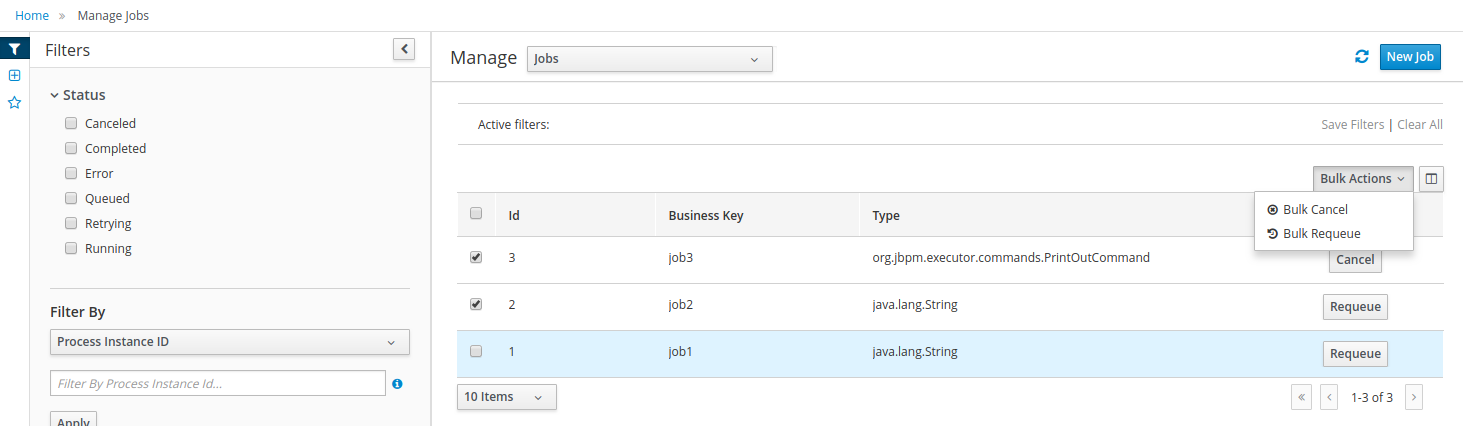

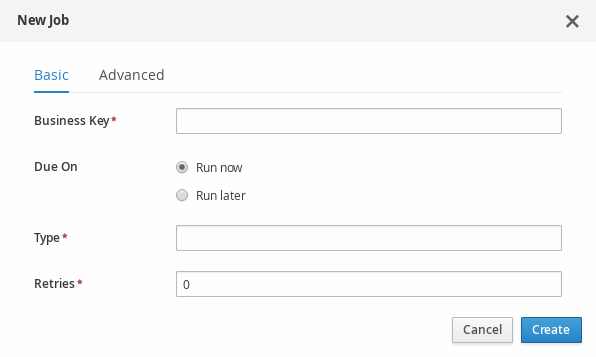

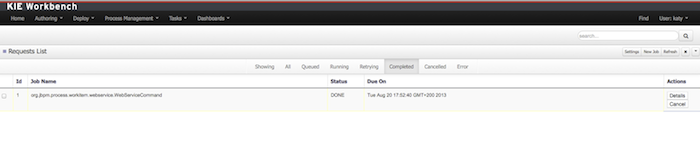

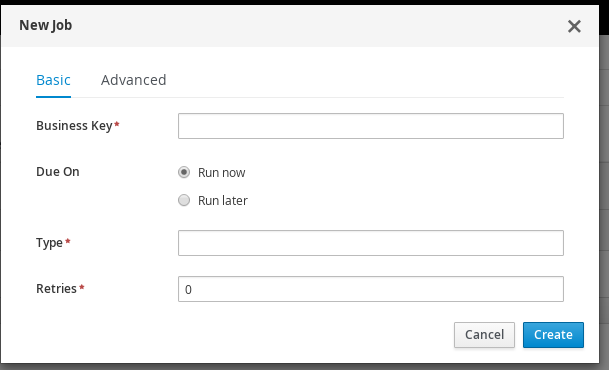

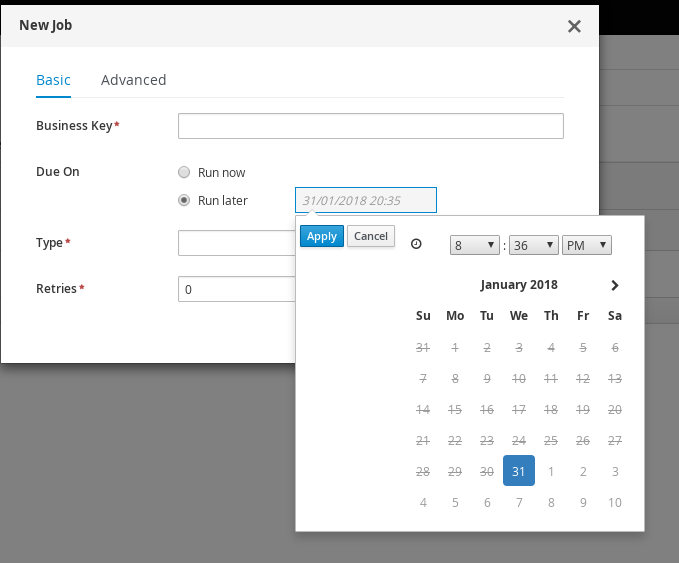

Jobs management: possibility to view currently scheduled and schedule new Jobs to run in the Kie Server instance.

For more details on the entire management section, please read the Process Management chapter.

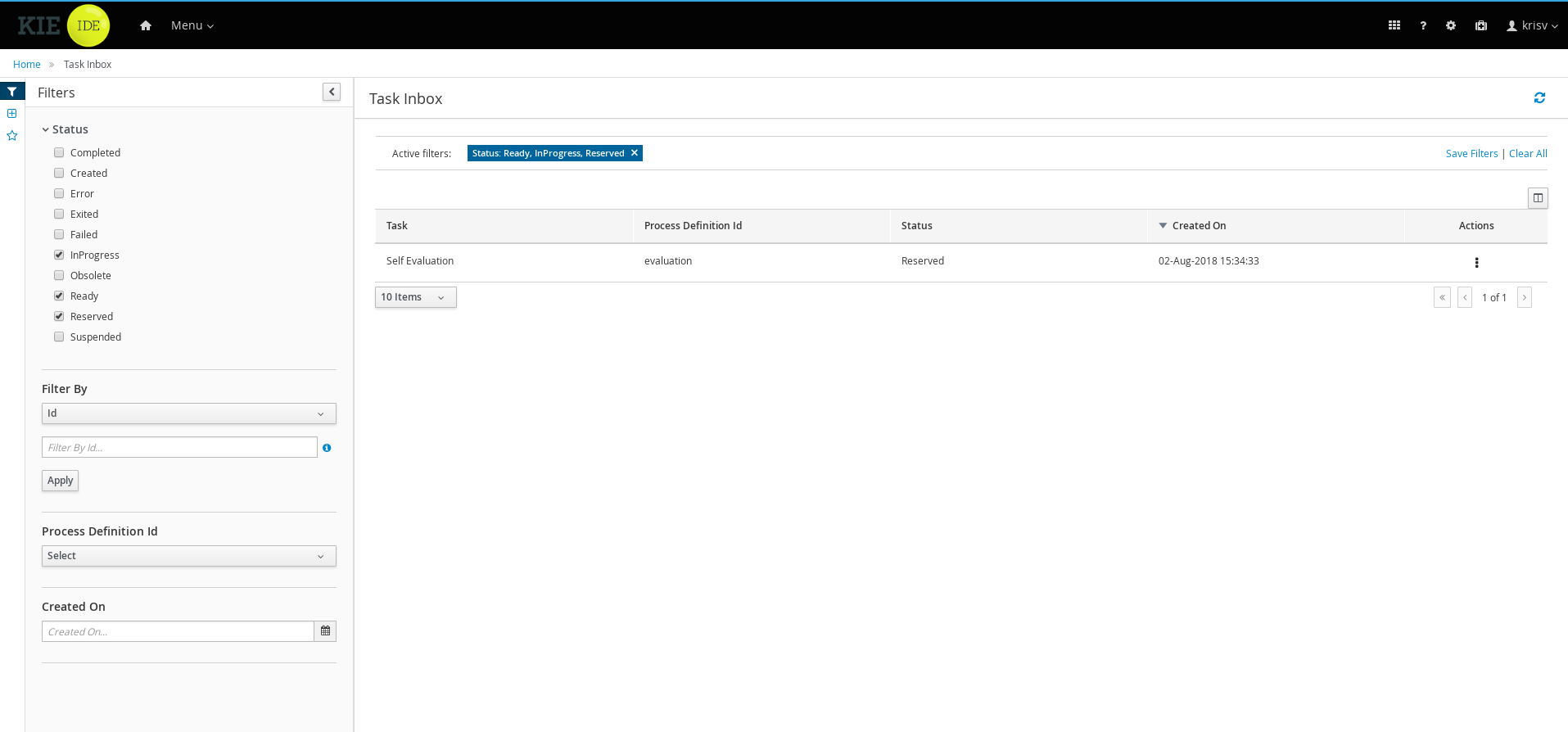

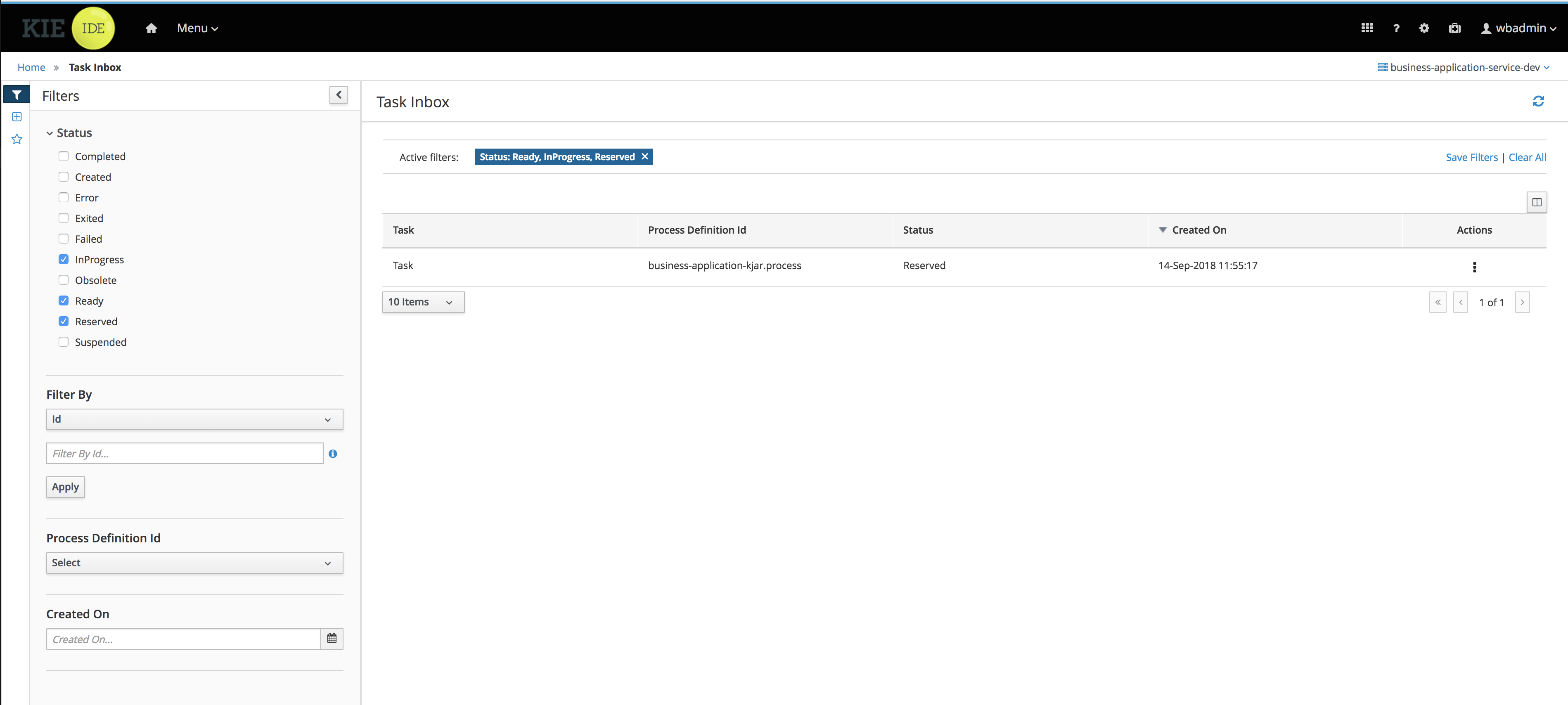

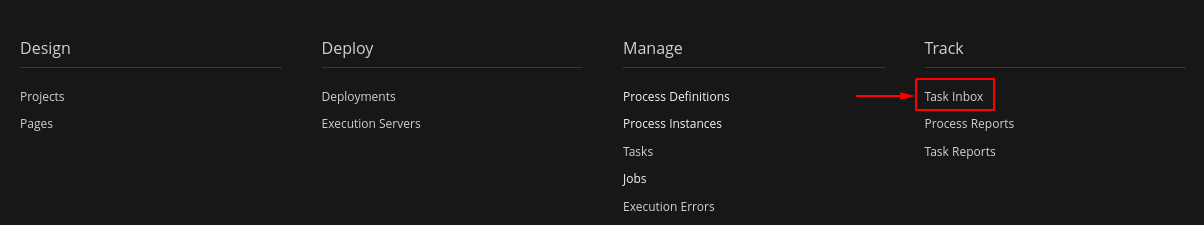

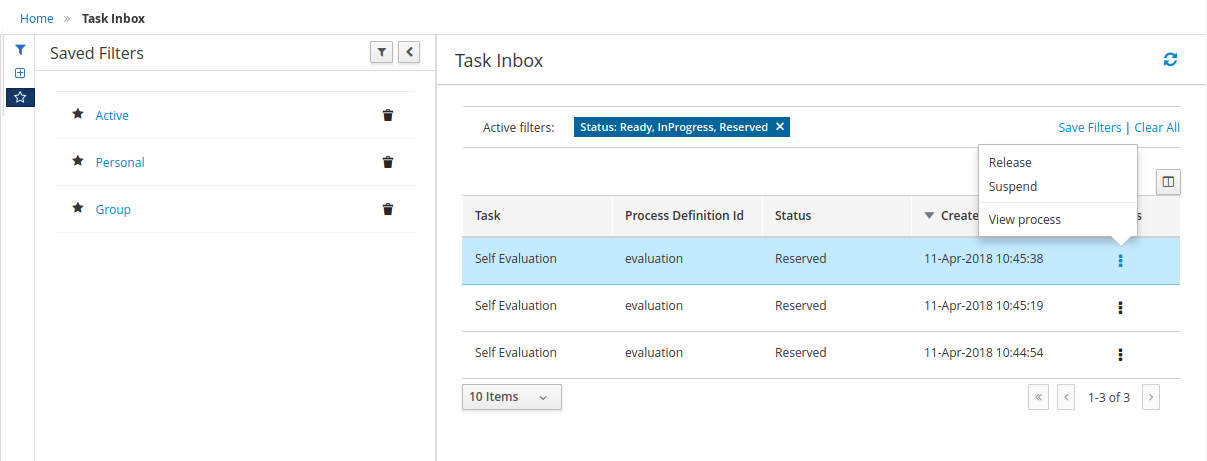

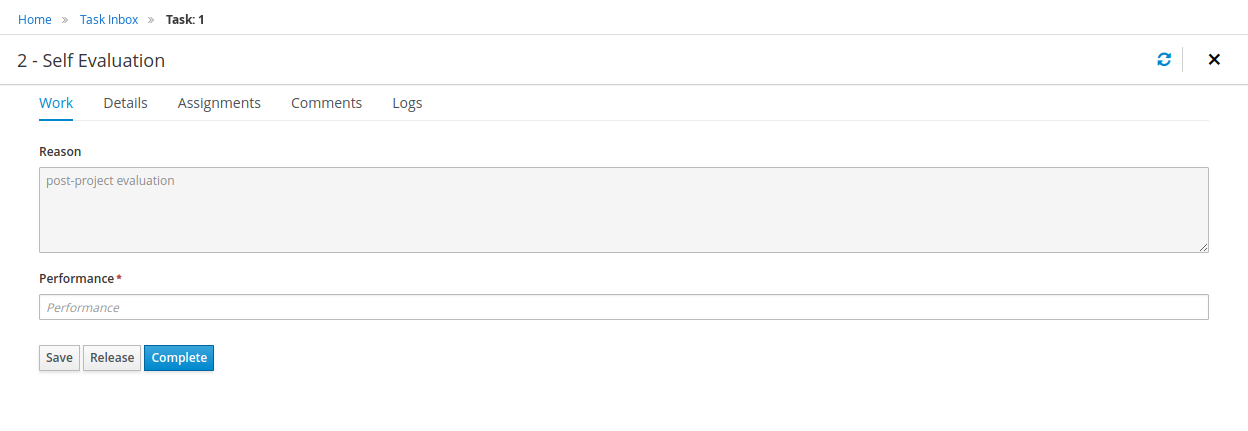

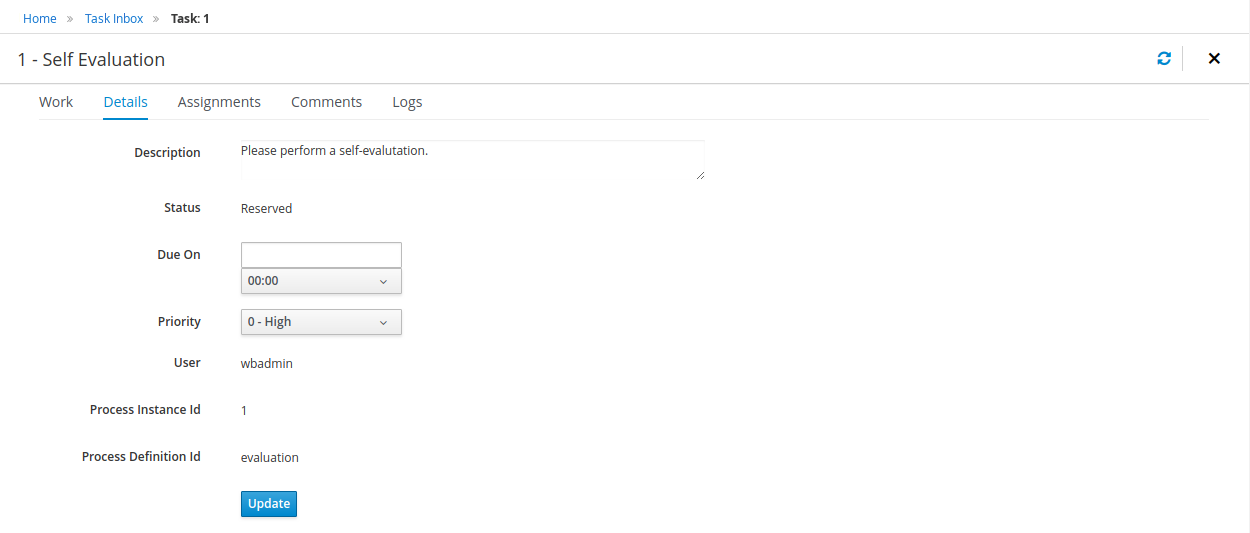

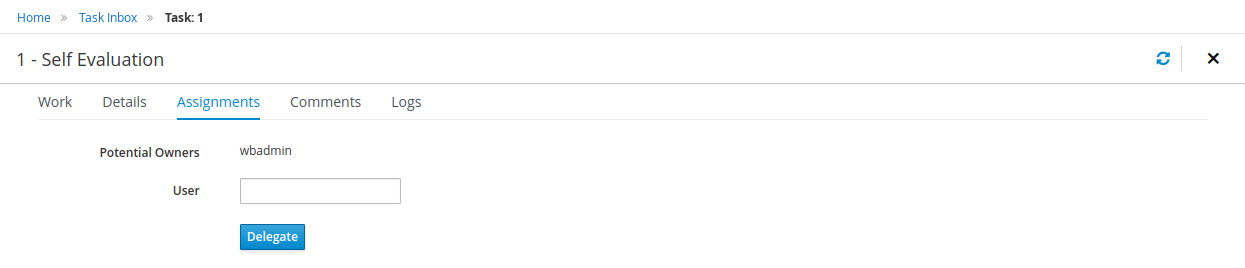

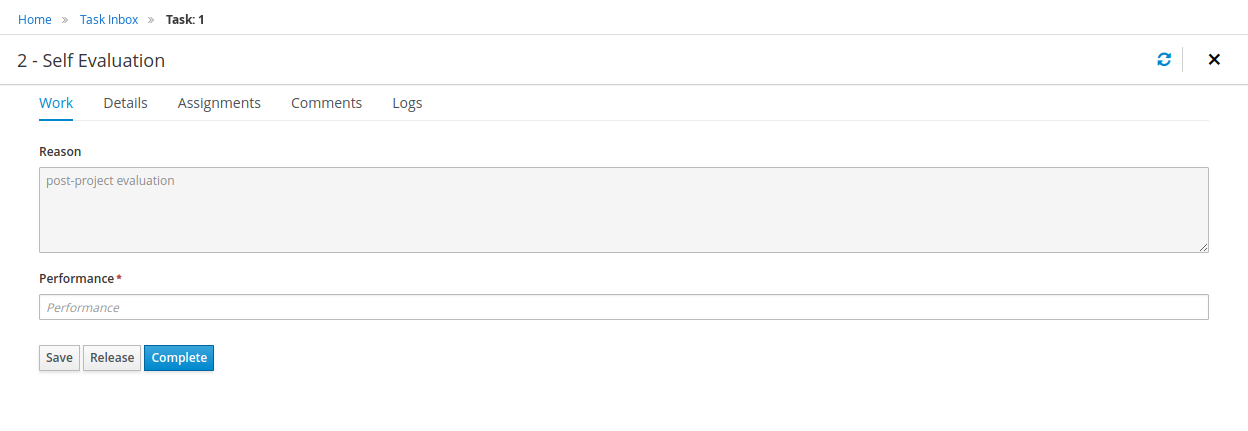

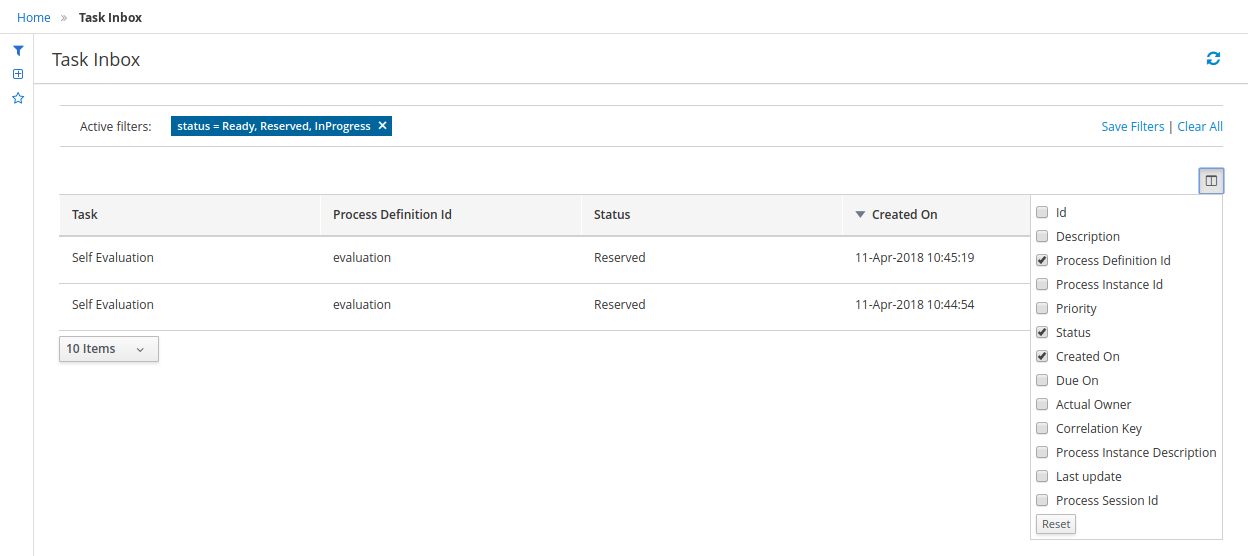

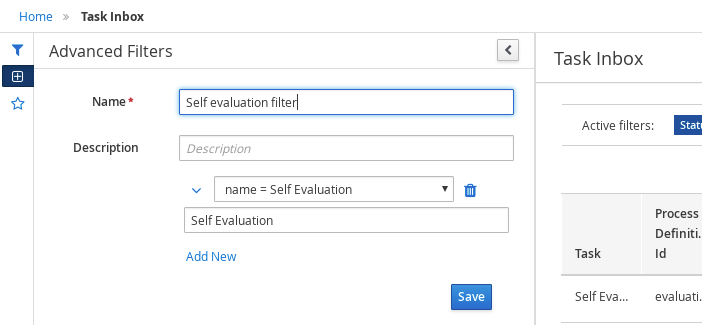

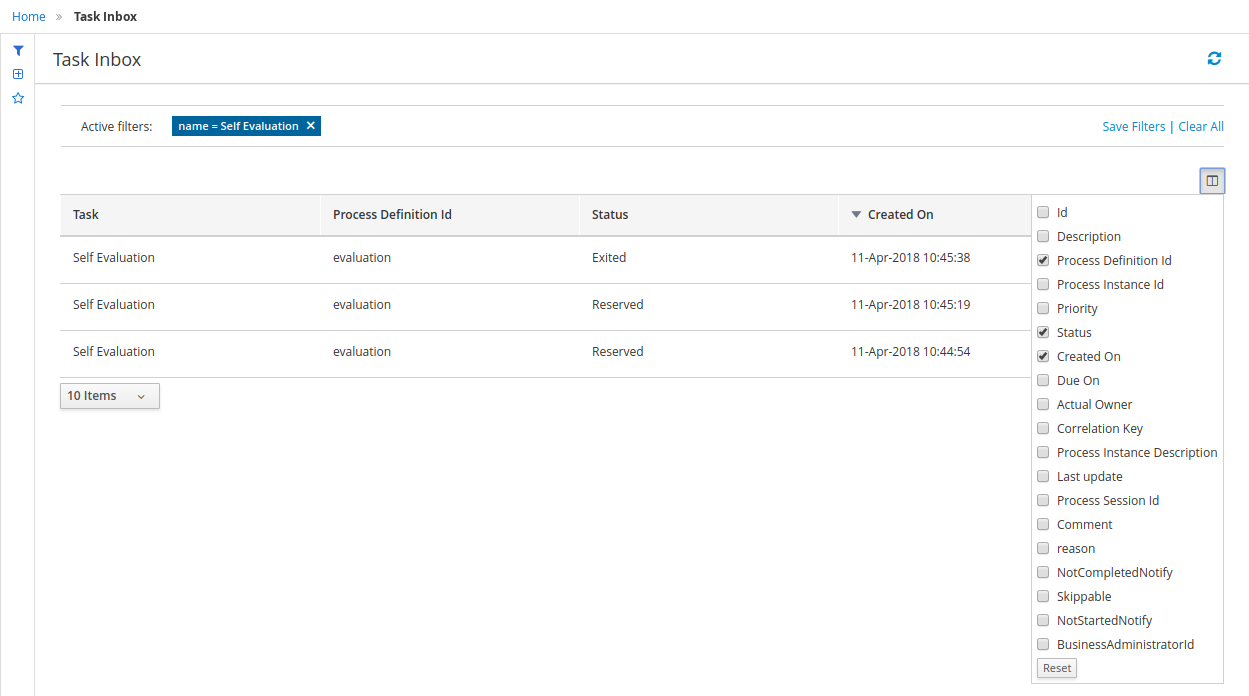

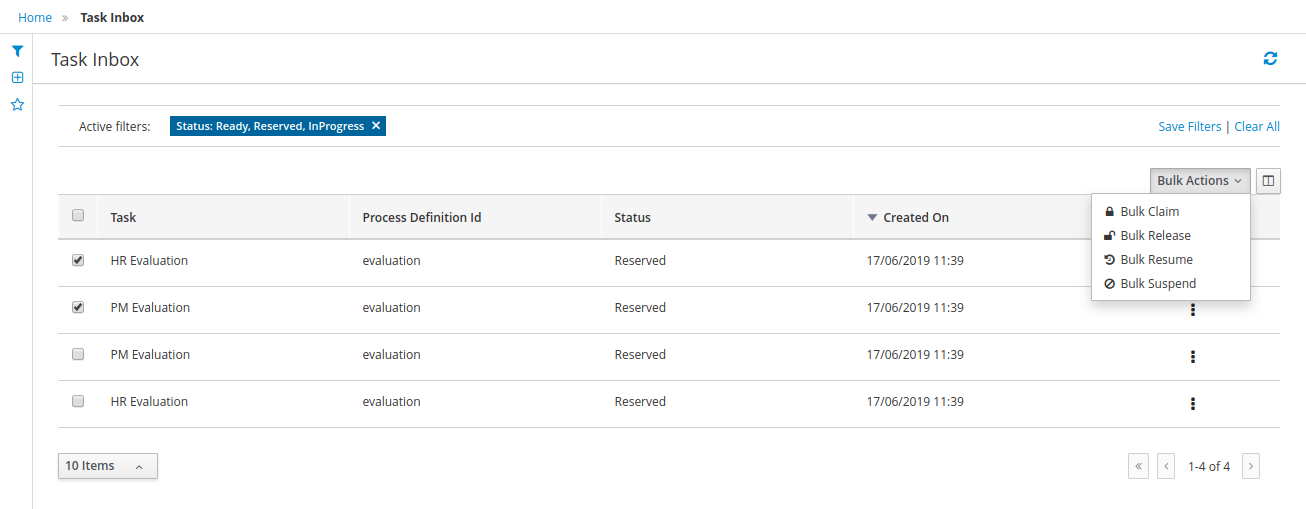

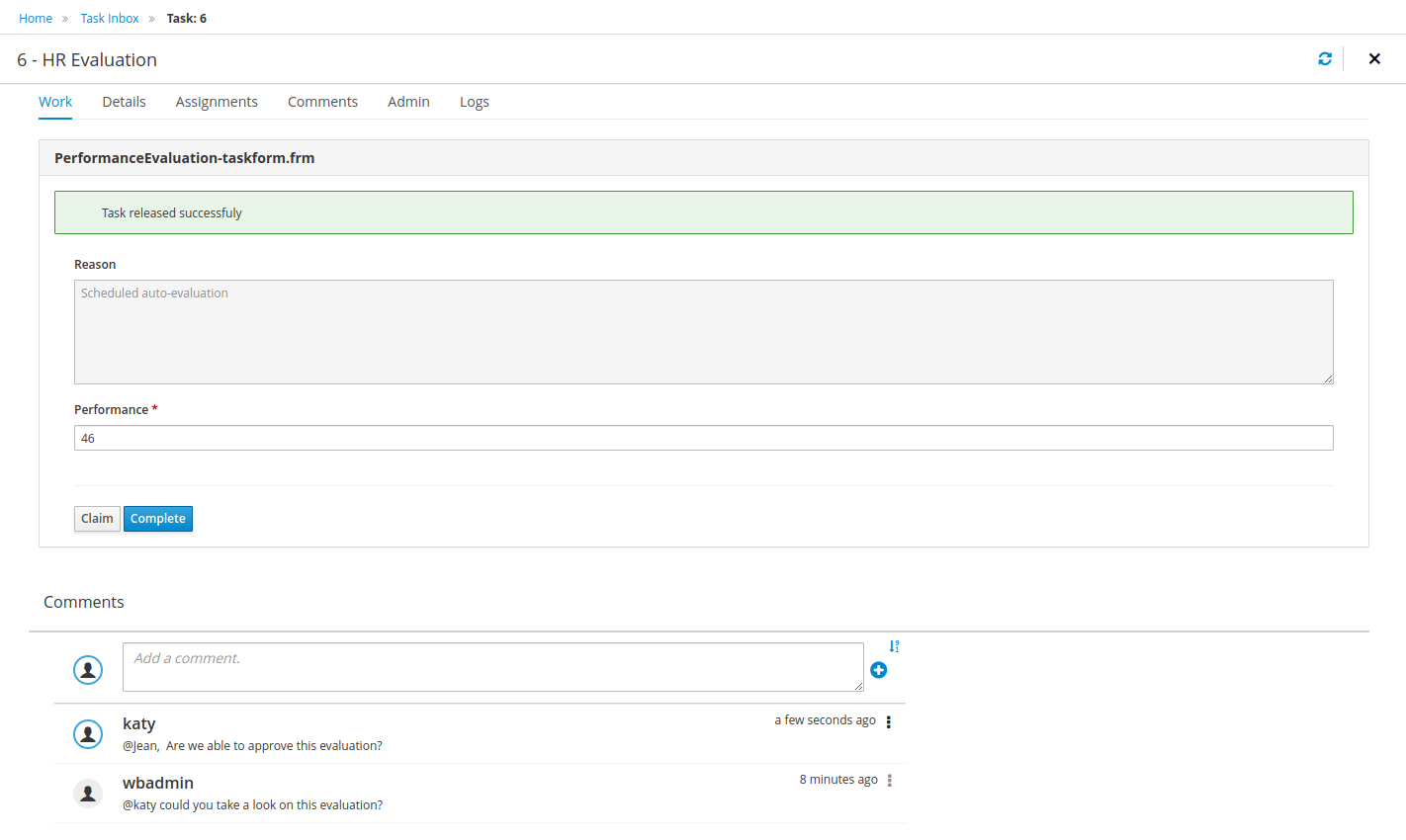

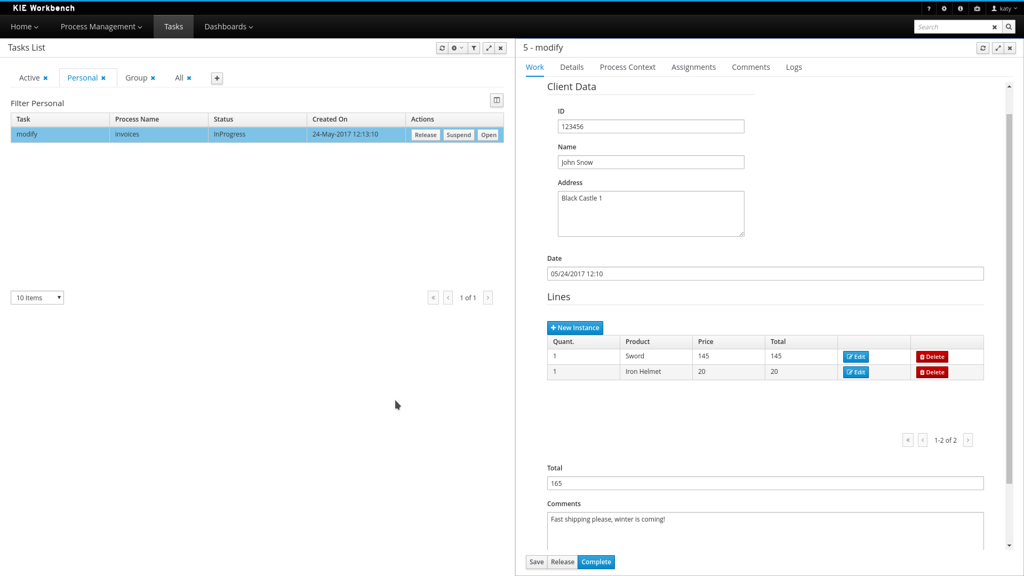

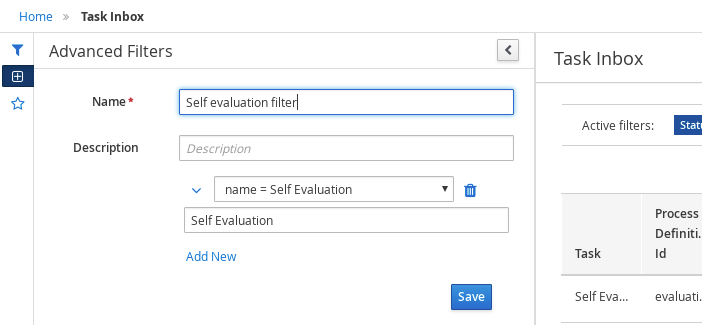

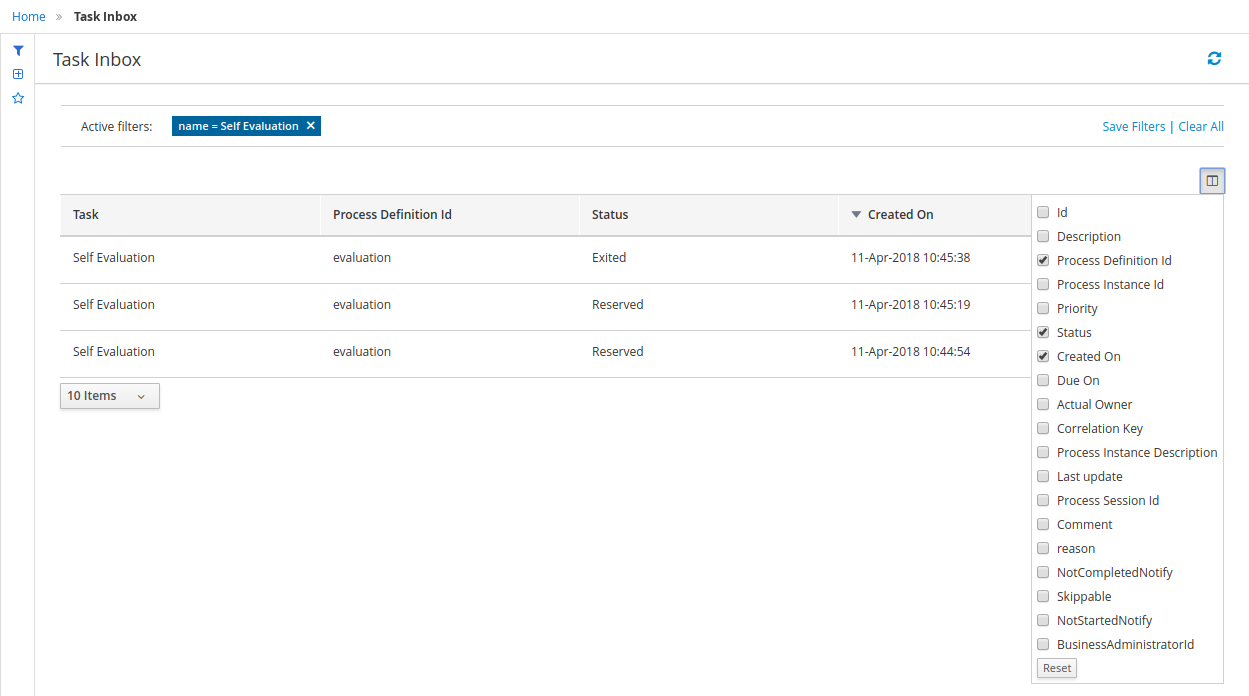

1.4.4. Task Inbox

Human involvement is often part of process execution, for example to review, approve or provide extra information. Business Central provides a Task Inbox section where any user potentially involved with these tasks can manage their workload. There, users can get a list of all tasks, complete tasks using customizable task forms, collaborate using comments, and more.
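Behind the inbox, each task follows a life cycle. The Java below is a hypothetical, simplified sketch of a WS-HumanTask-style life cycle that keeps only the Ready, Reserved, InProgress and Completed states and the claim, start, complete and release operations; it is not the jBPM task API. jBPM's `org.kie.api.task.TaskService` exposes operations with similar names but also handles delegation, forwarding, suspension and more. The `visibleTo` method is an assumption that roughly approximates which tasks a user's inbox would list.

```java
import java.util.Set;

// Hypothetical, simplified WS-HumanTask-style life cycle; NOT the jBPM task API.
final class SimpleTask {
    enum Status { READY, RESERVED, IN_PROGRESS, COMPLETED }

    private final Set<String> potentialOwners;
    private Status status = Status.READY;   // assumes a group task, so it starts as Ready
    private String actualOwner;

    SimpleTask(Set<String> potentialOwners) {
        this.potentialOwners = Set.copyOf(potentialOwners);
    }

    Status status() { return status; }
    String actualOwner() { return actualOwner; }

    // Ready -> Reserved: a potential owner takes the task for themselves.
    void claim(String user) {
        require(status == Status.READY && potentialOwners.contains(user), "claim");
        actualOwner = user;
        status = Status.RESERVED;
    }

    // Reserved -> InProgress: only the actual owner may start work.
    void start(String user) {
        require(status == Status.RESERVED && user.equals(actualOwner), "start");
        status = Status.IN_PROGRESS;
    }

    // InProgress -> Completed.
    void complete(String user) {
        require(status == Status.IN_PROGRESS && user.equals(actualOwner), "complete");
        status = Status.COMPLETED;
    }

    // Reserved or InProgress -> Ready: hand the task back to the group.
    void release(String user) {
        require((status == Status.RESERVED || status == Status.IN_PROGRESS)
                && user.equals(actualOwner), "release");
        actualOwner = null;
        status = Status.READY;
    }

    // Rough inbox rule: ready tasks the user could claim, plus open tasks they own.
    boolean visibleTo(String user) {
        return (status == Status.READY && potentialOwners.contains(user))
                || (user.equals(actualOwner) && status != Status.COMPLETED);
    }

    private void require(boolean allowed, String operation) {
        if (!allowed) {
            throw new IllegalStateException("cannot " + operation + " in status " + status);
        }
    }
}
```

Once one potential owner claims a group task, it disappears from the other owners' inboxes until it is released again.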

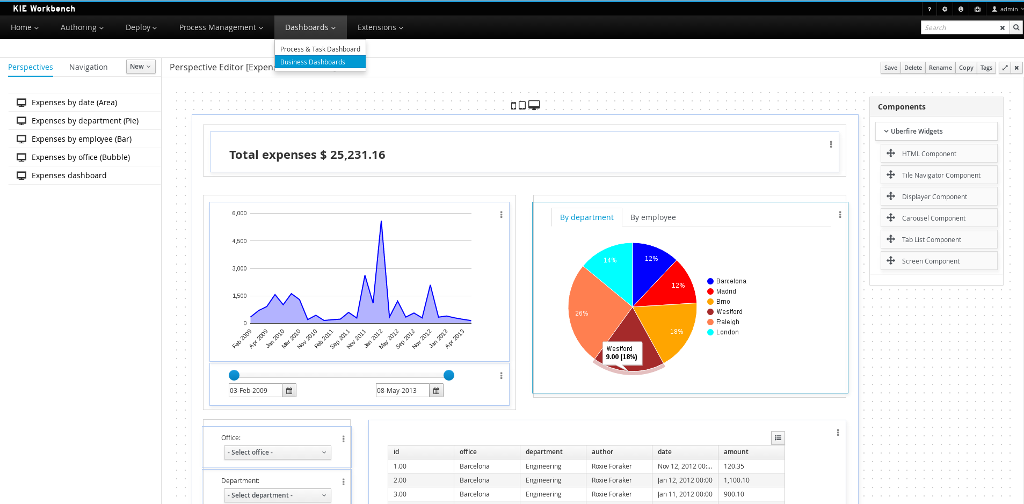

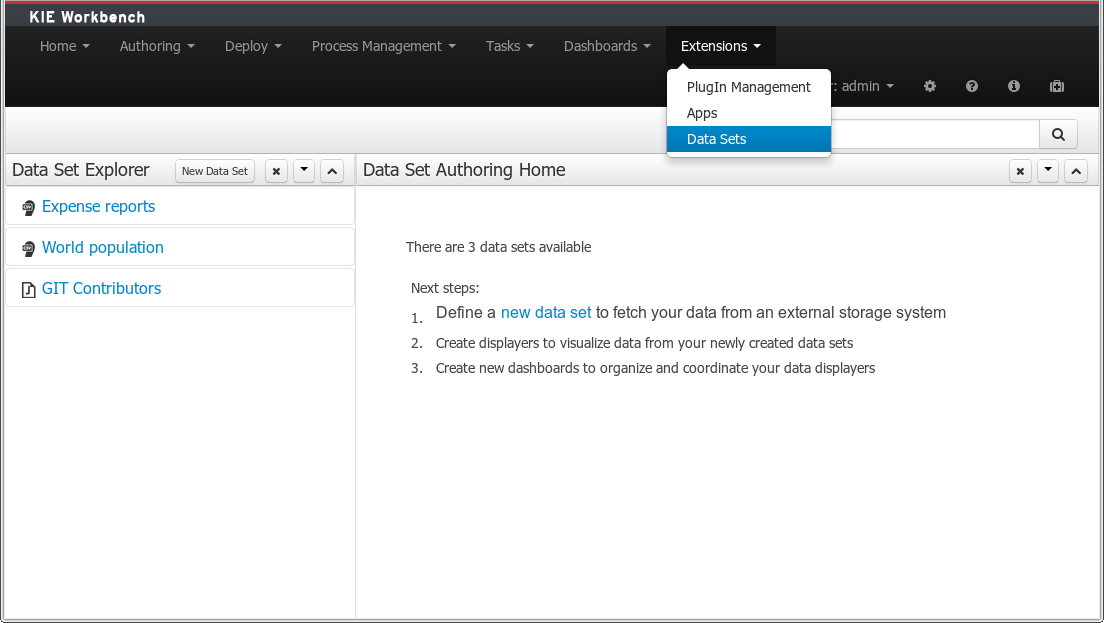

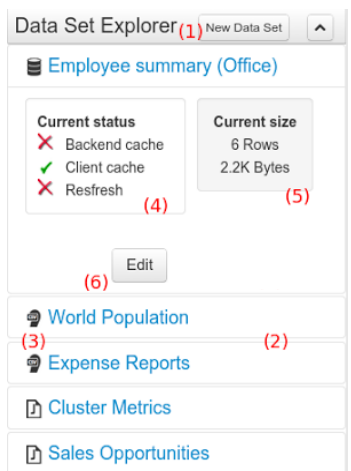

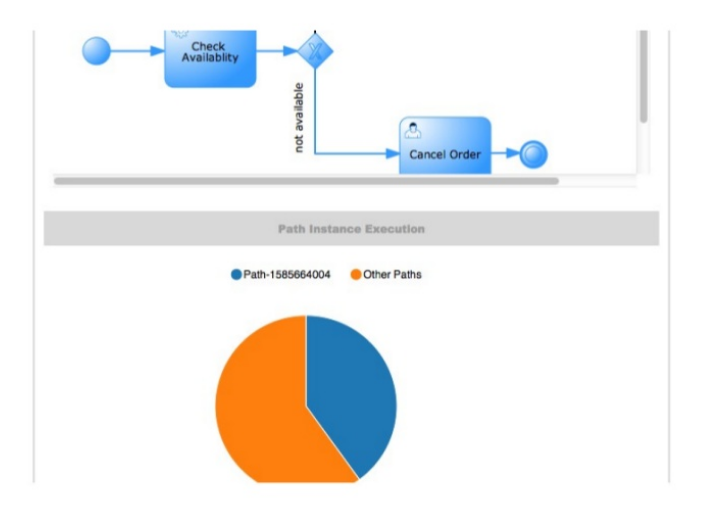

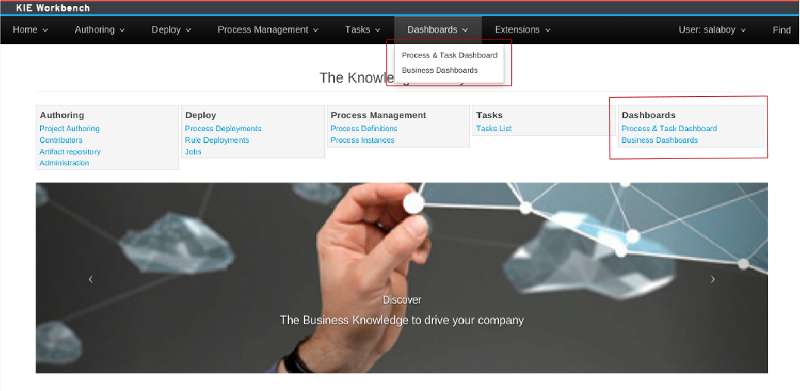

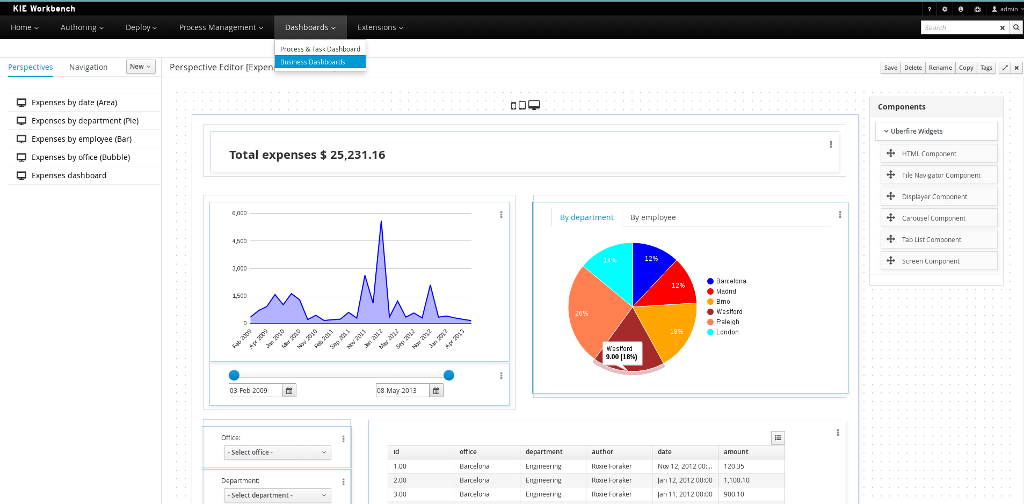

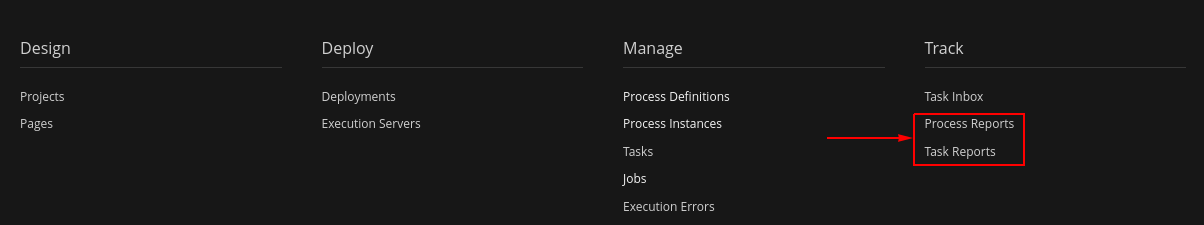

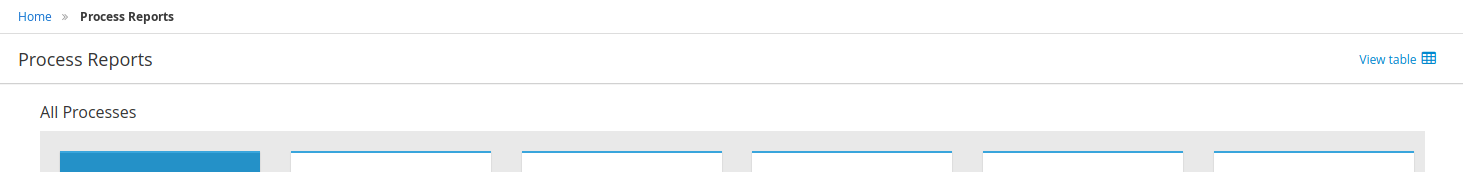

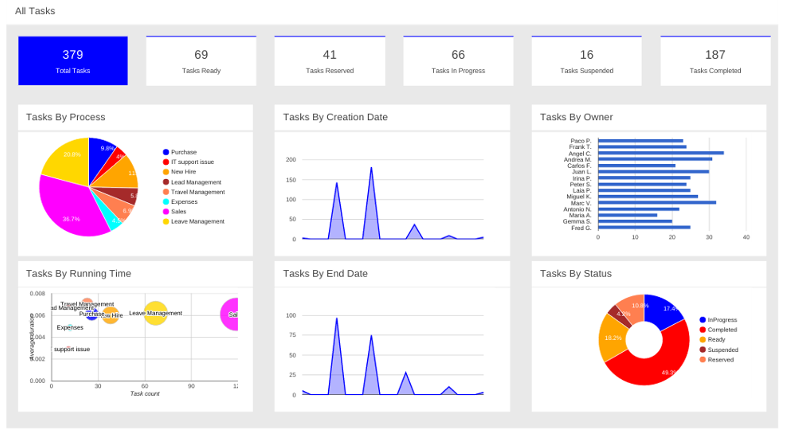

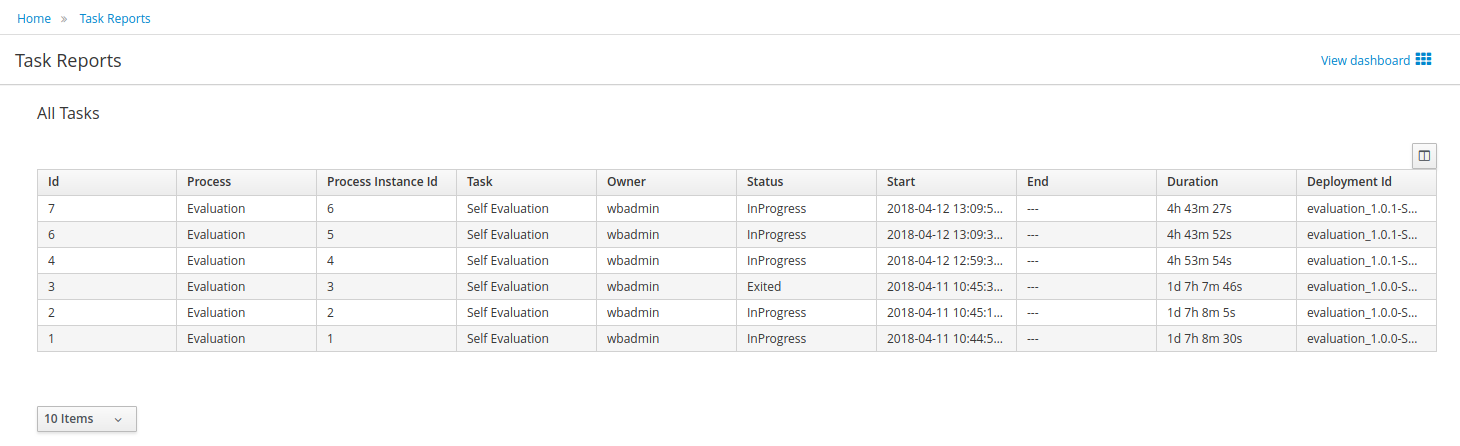

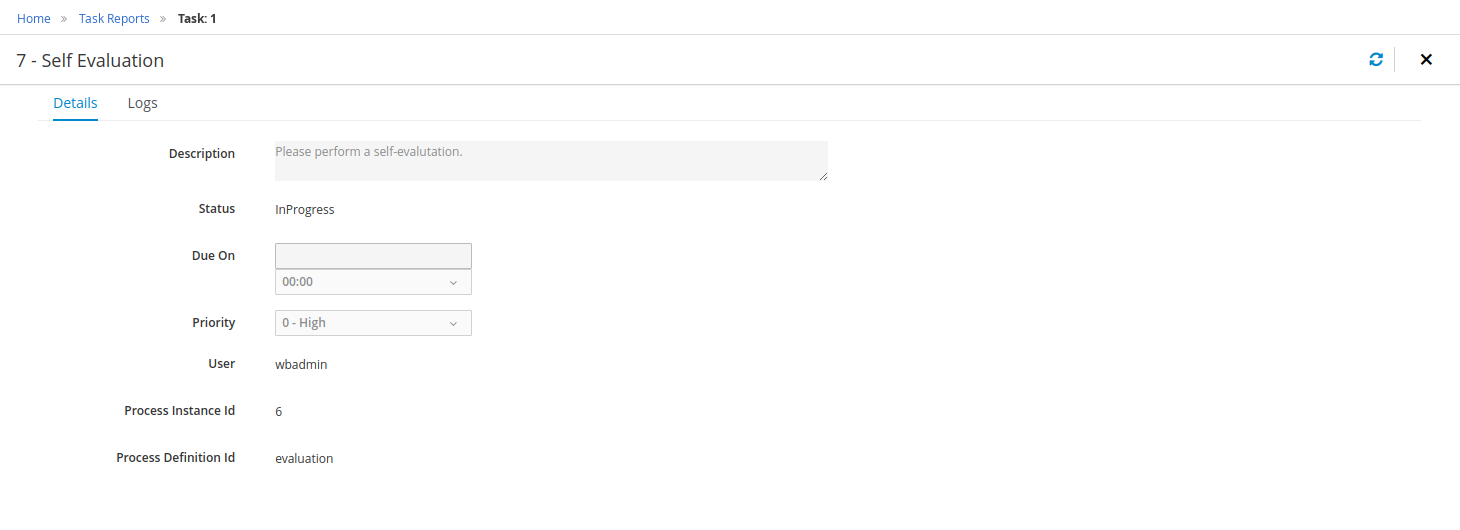

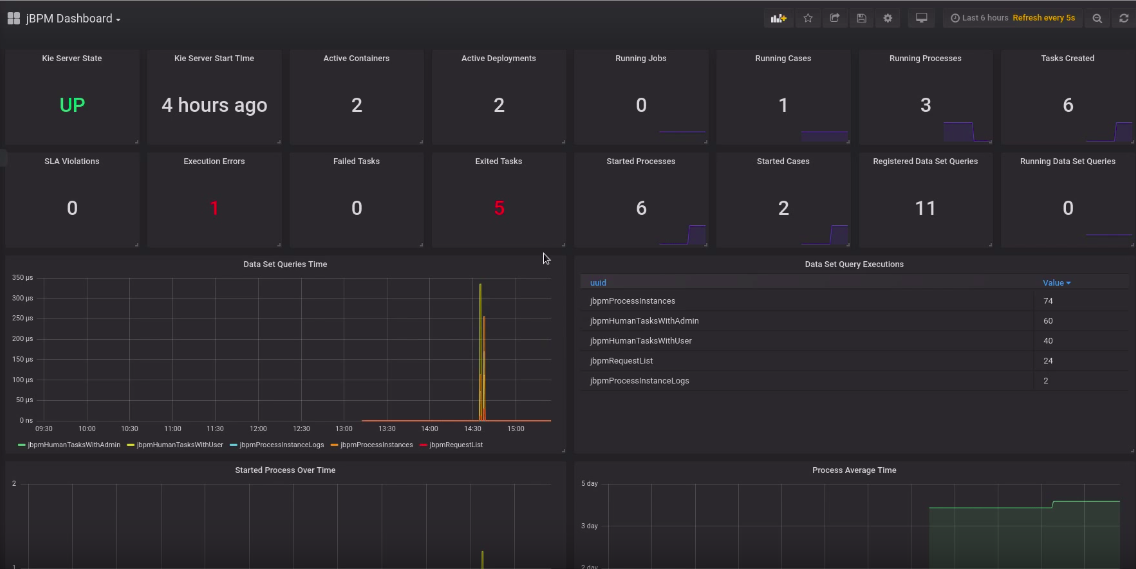

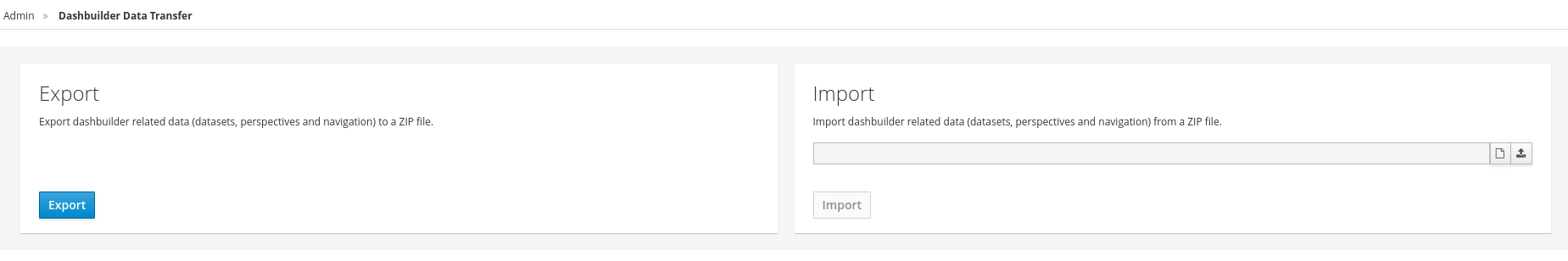

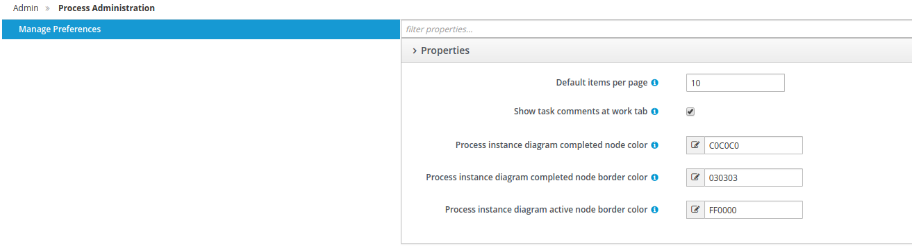

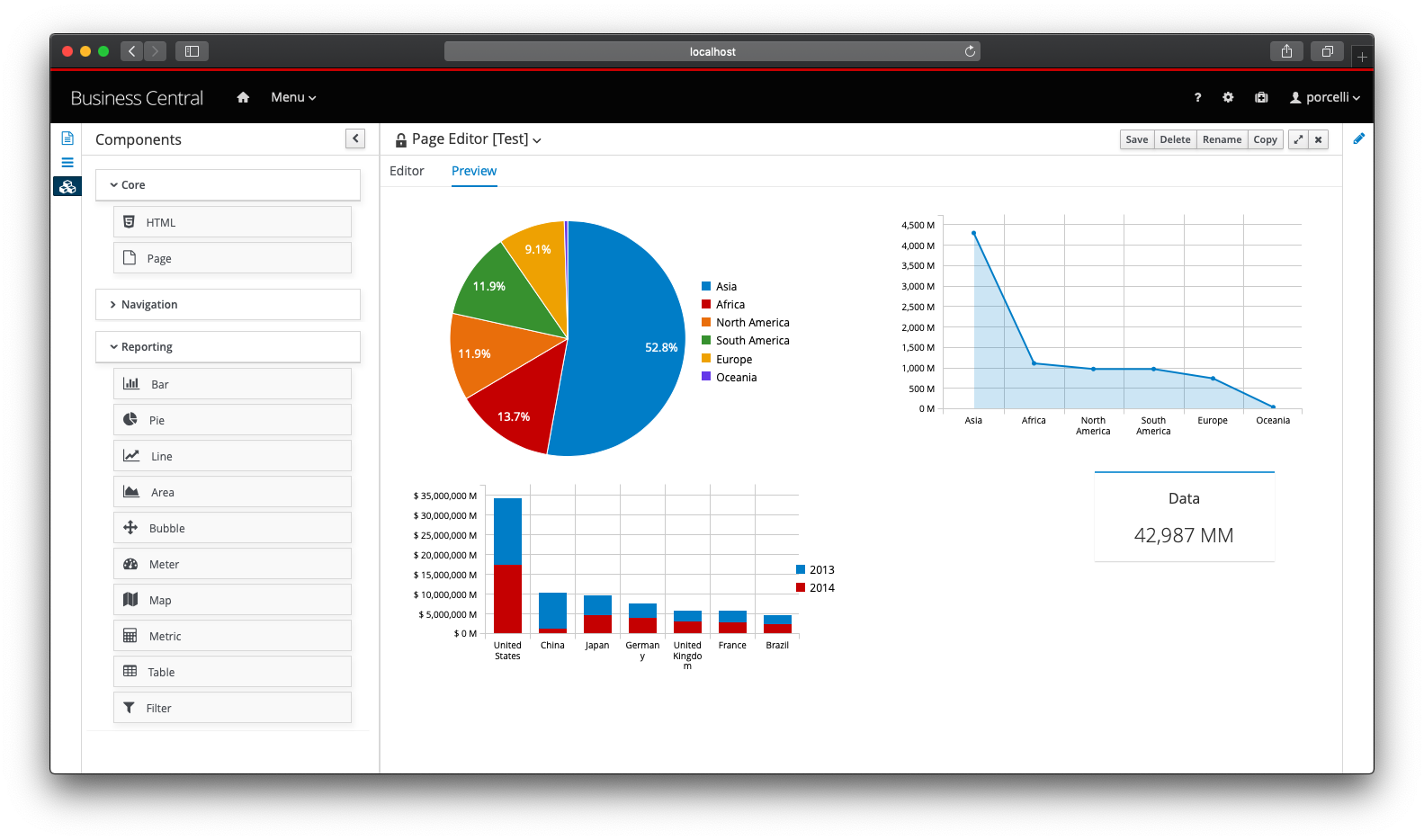

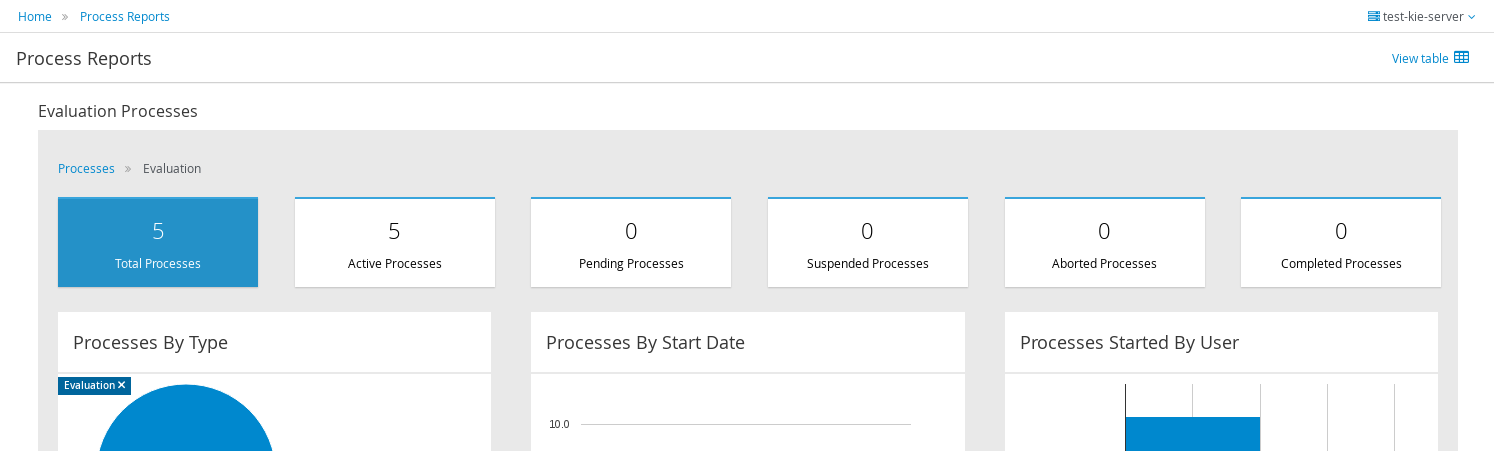

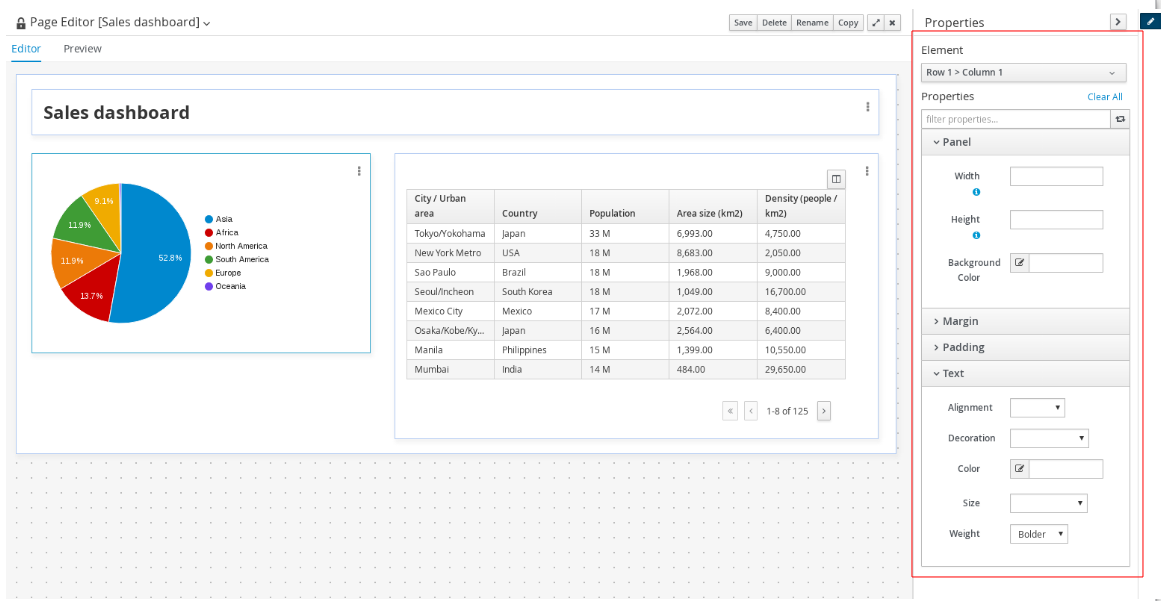

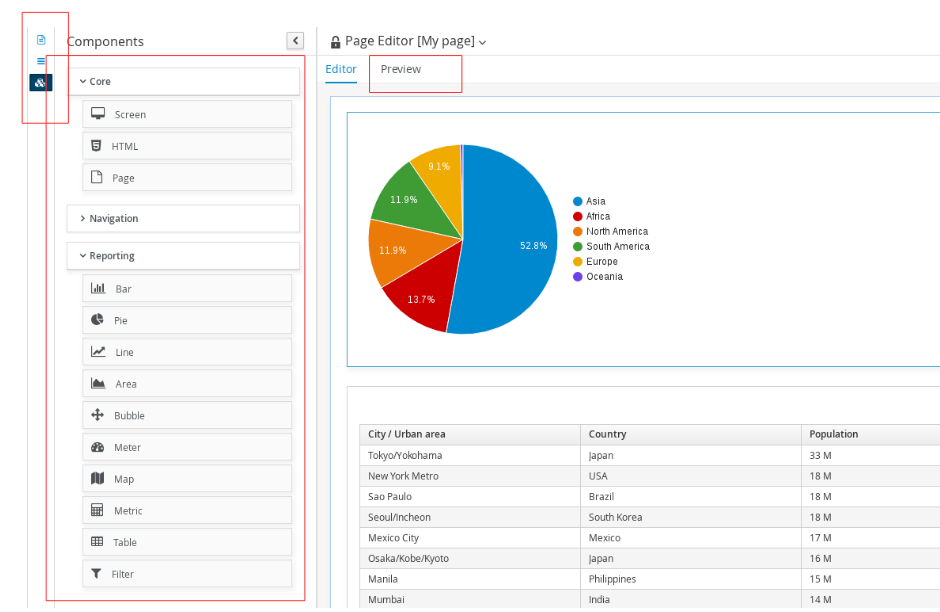

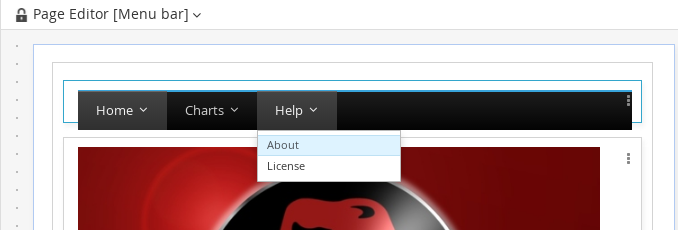

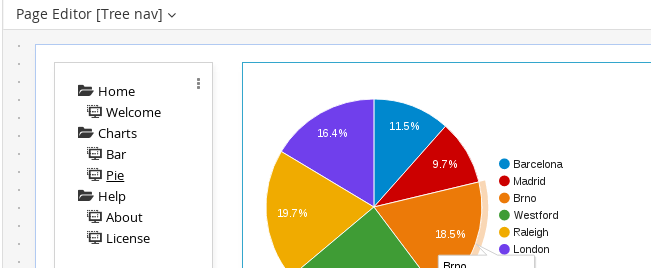

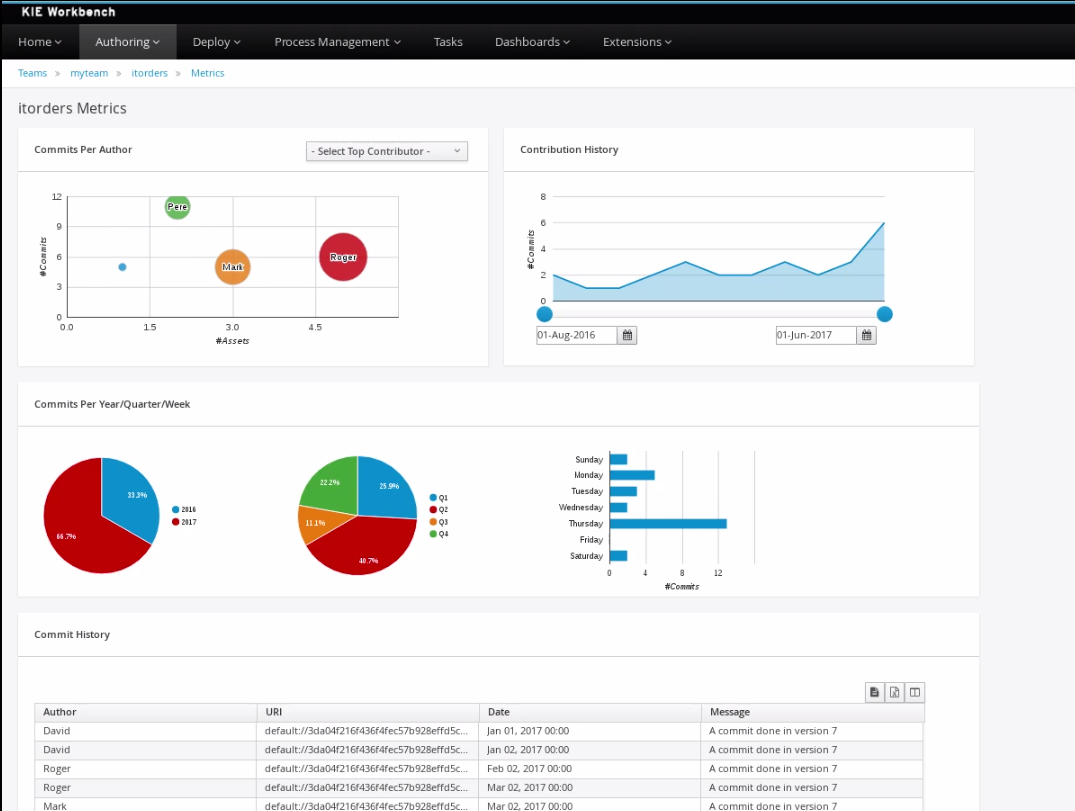

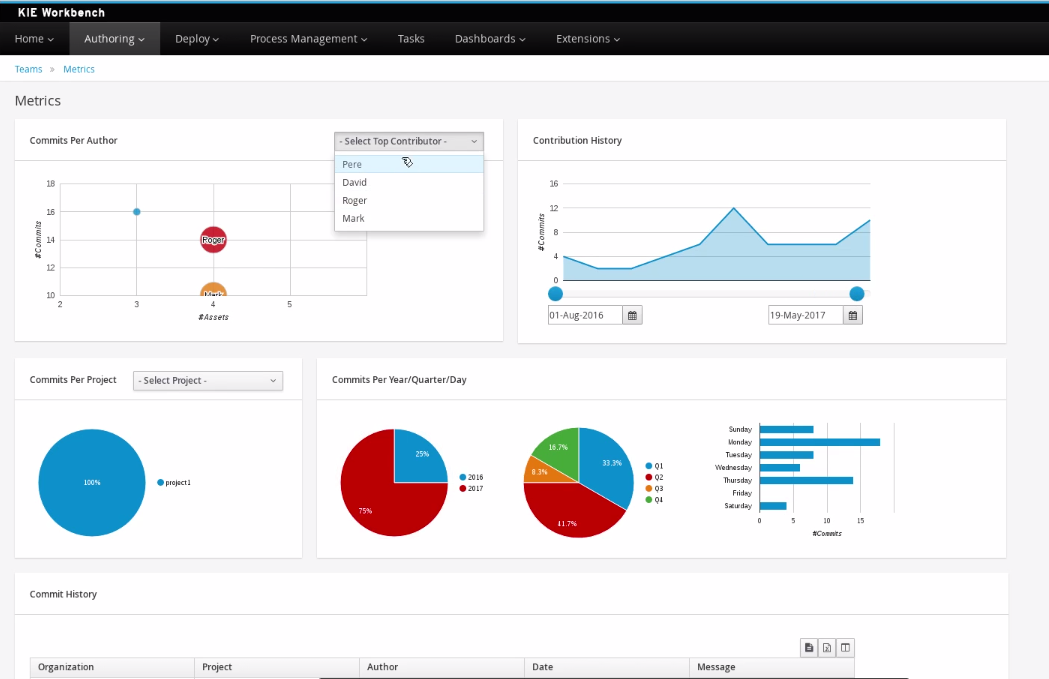

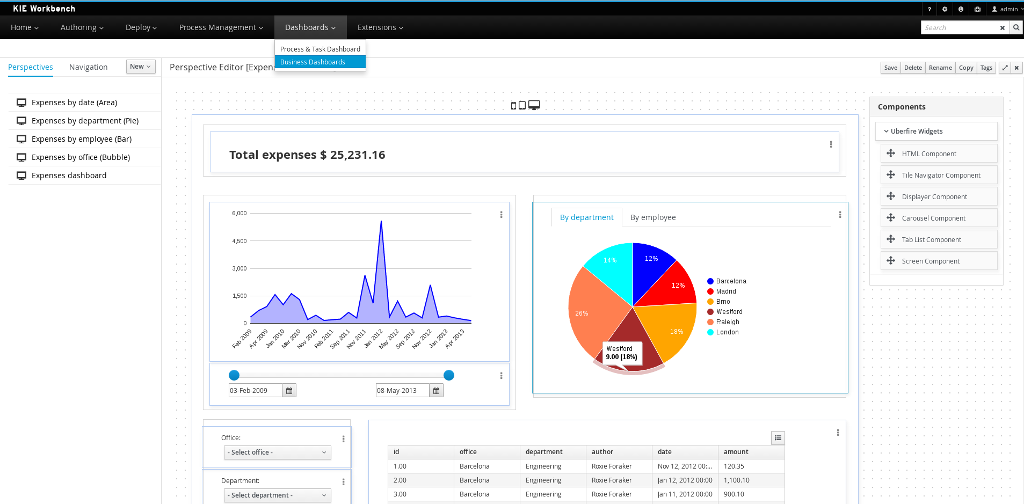

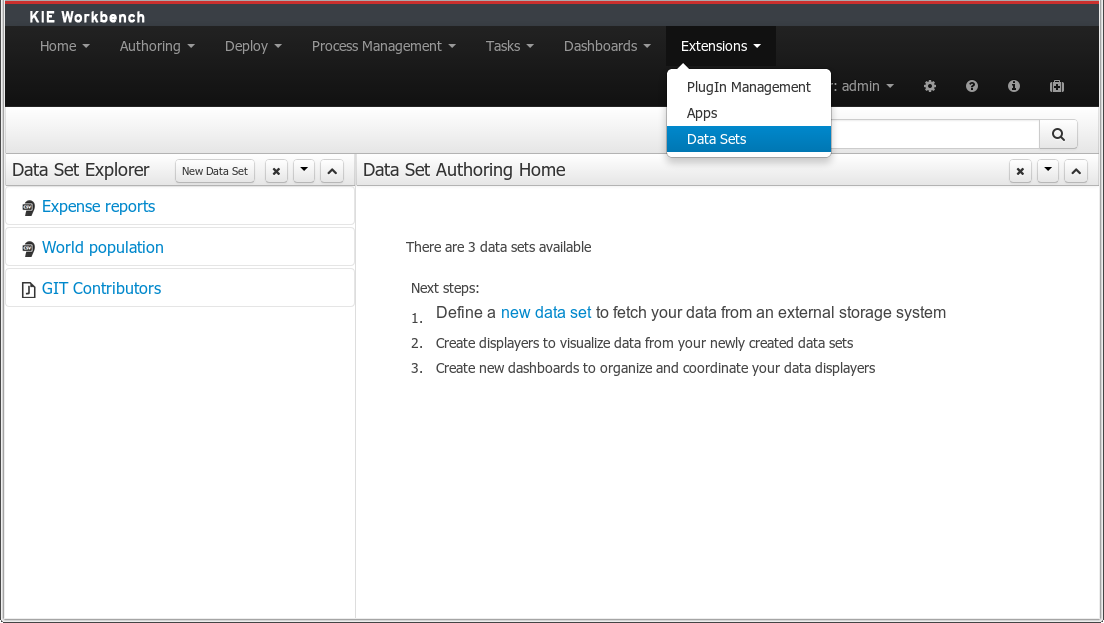

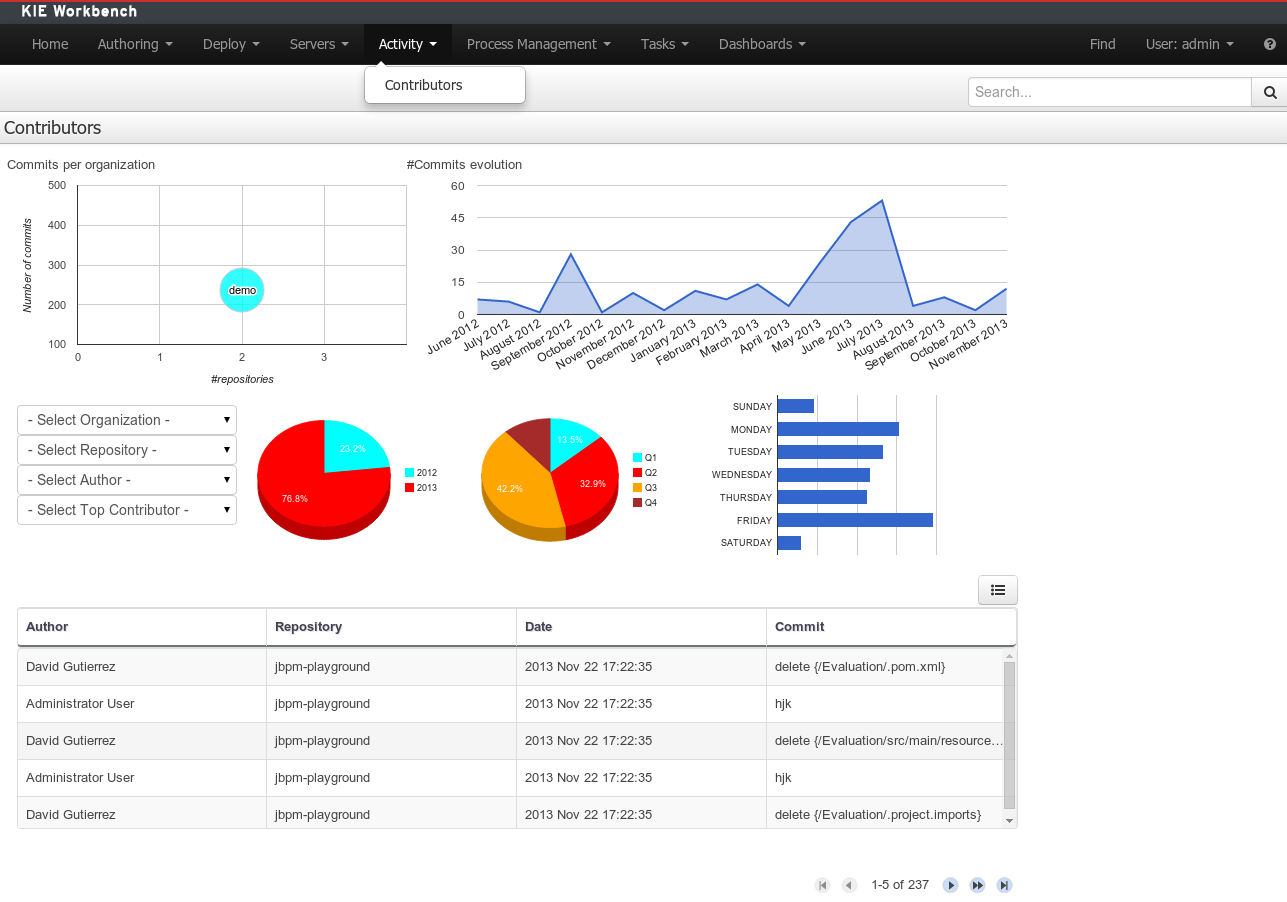

1.4.5. Business Activity Monitoring

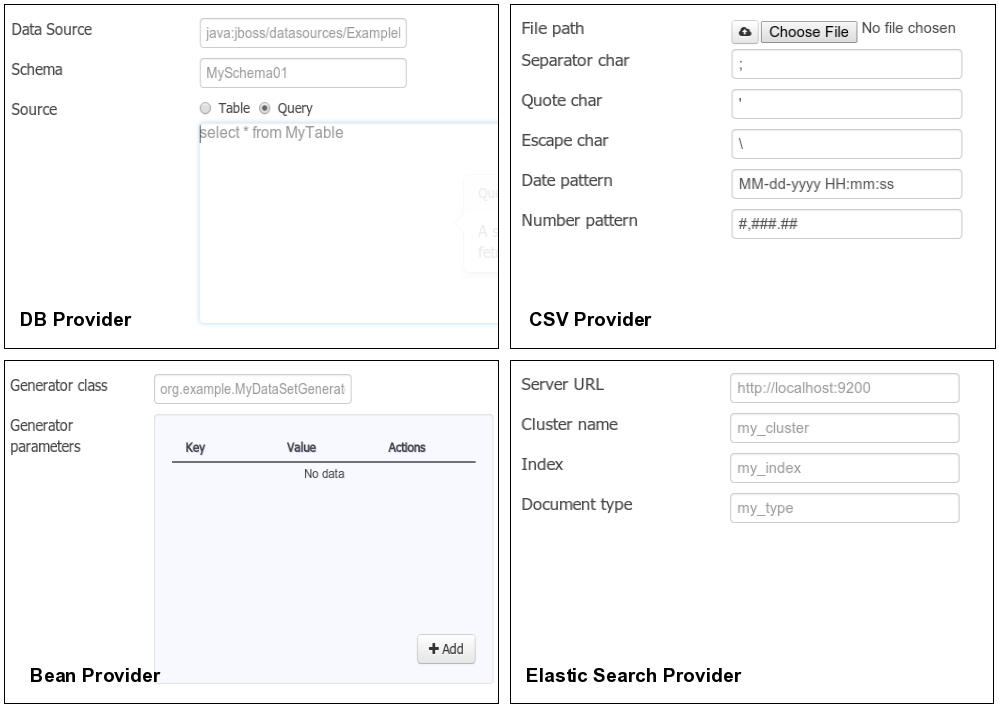

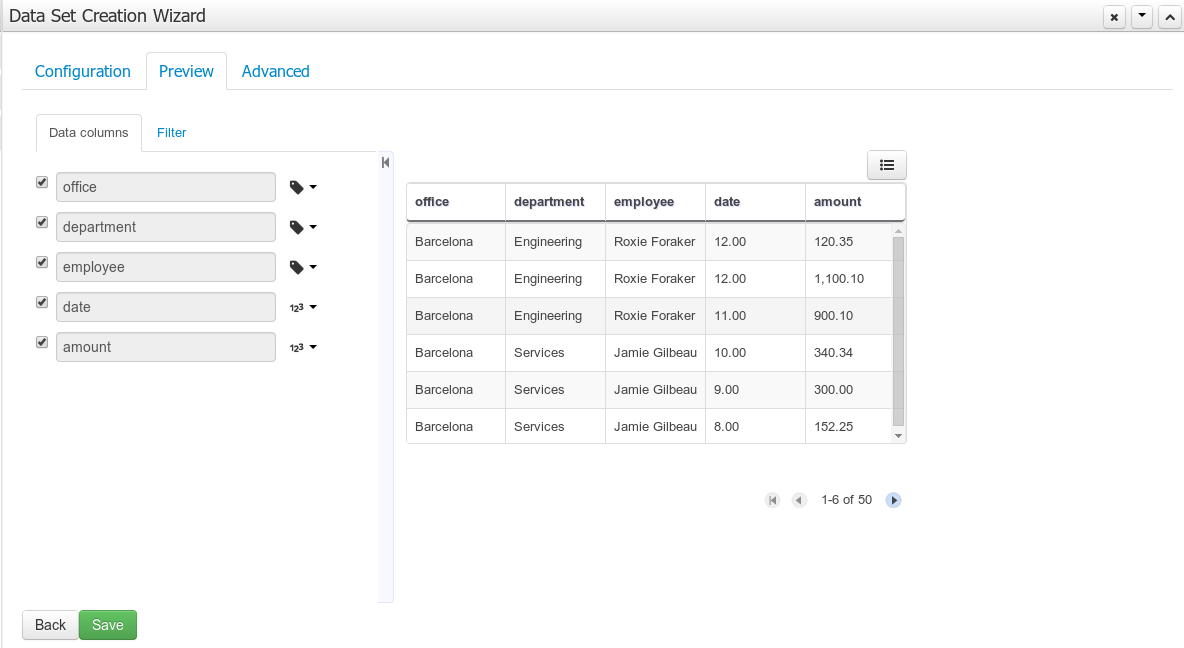

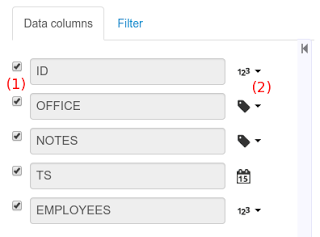

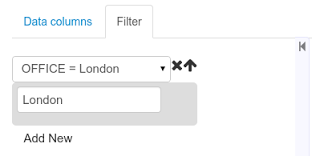

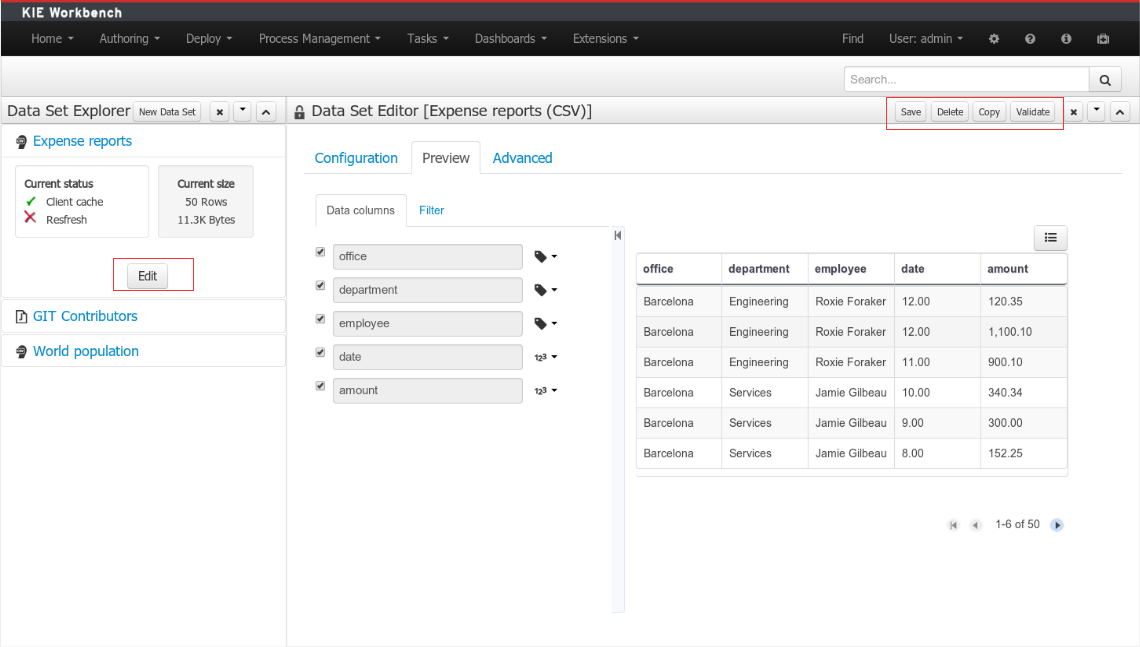

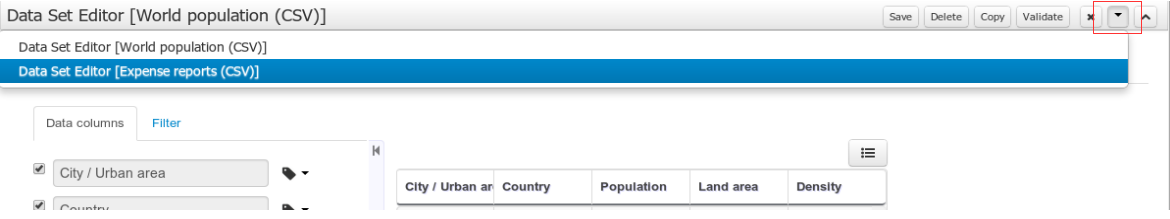

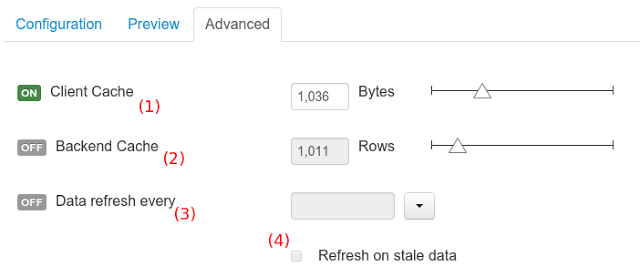

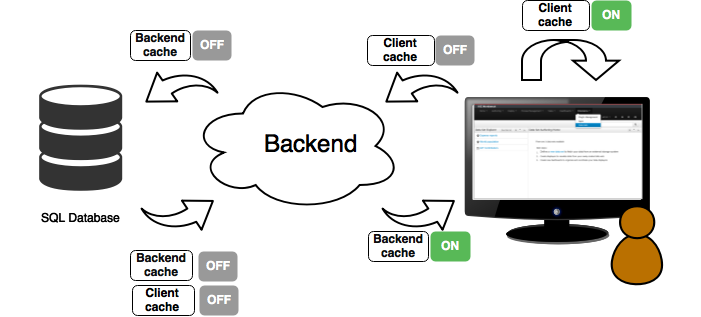

As of version 6.0, jBPM comes with full-featured BAM tooling that allows non-technical users to visually compose business dashboards. This module makes it easy to develop business activity monitoring and reporting solutions on top of jBPM.

Key features:

- Visual configuration of dashboards (Drag’n’drop).
- Graphical representation of KPIs (Key Performance Indicators).
- Configuration of interactive report tables.
- Data export to Excel and CSV format.
- Filtering and search, both in-memory and SQL-based.
- Data extraction from external systems, through different protocols.
- Granular access control for different user profiles.
- Look’n’feel customization tools.
- Pluggable chart library architecture.

Target users:

- Managers / business owners: consumers of dashboards and reports.
- IT / system architects: connectivity and data extraction.
- Analysts / developers: dashboard composition and configuration.

For further information about the BAM capabilities of jBPM, please read the Business Activity Monitoring chapter.

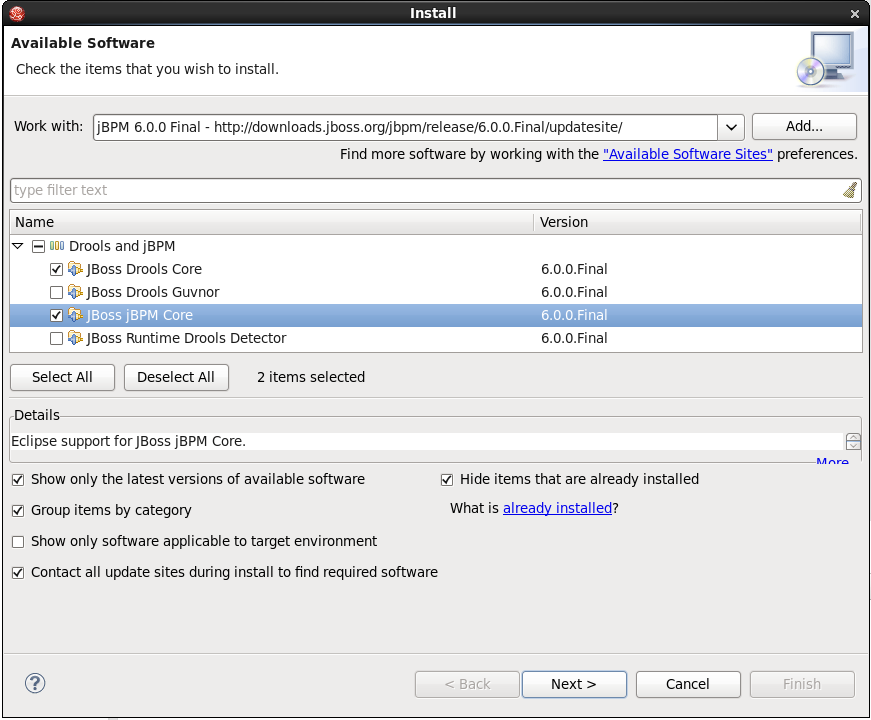

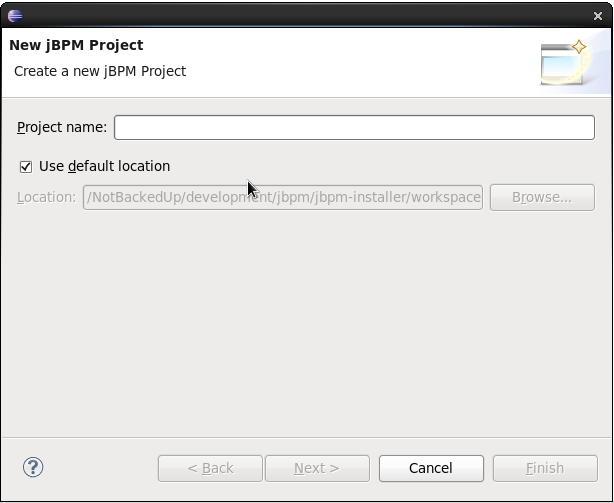

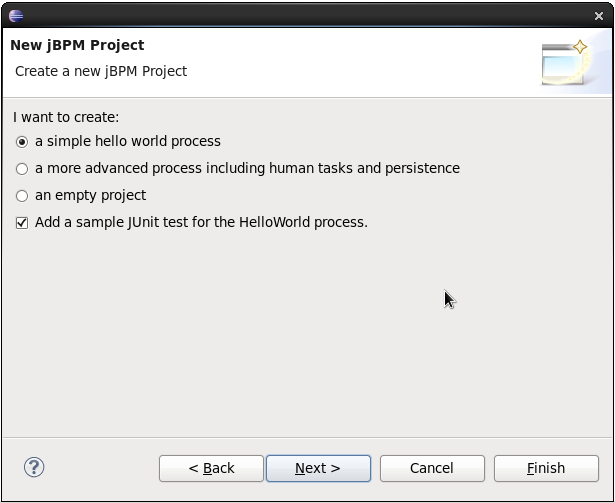

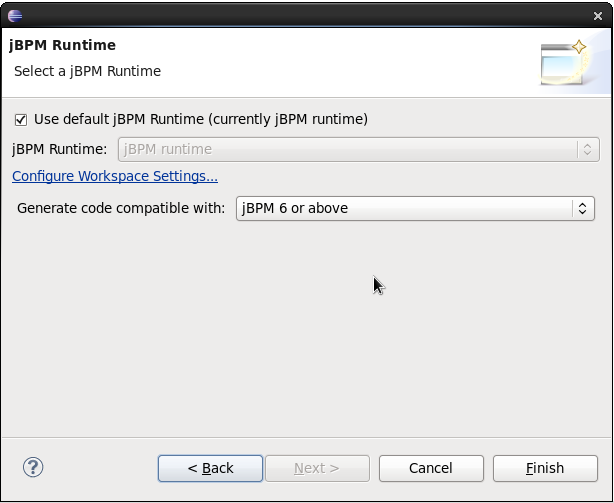

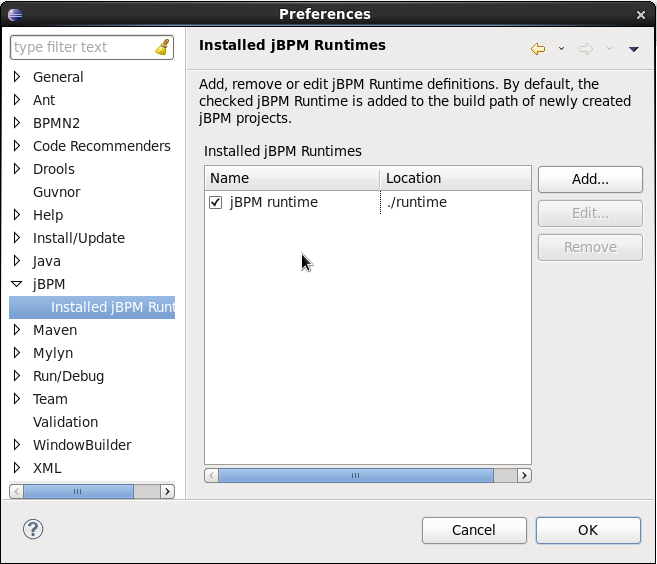

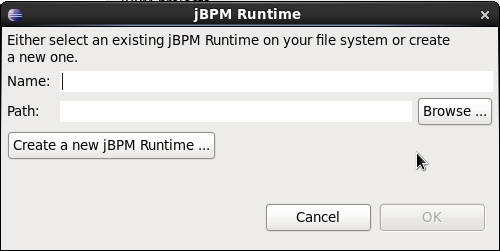

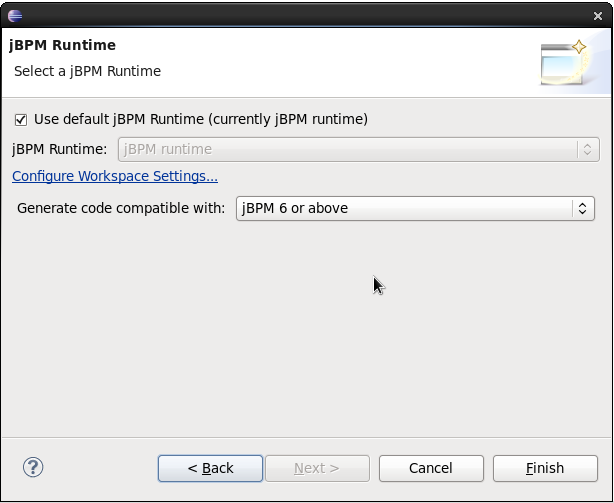

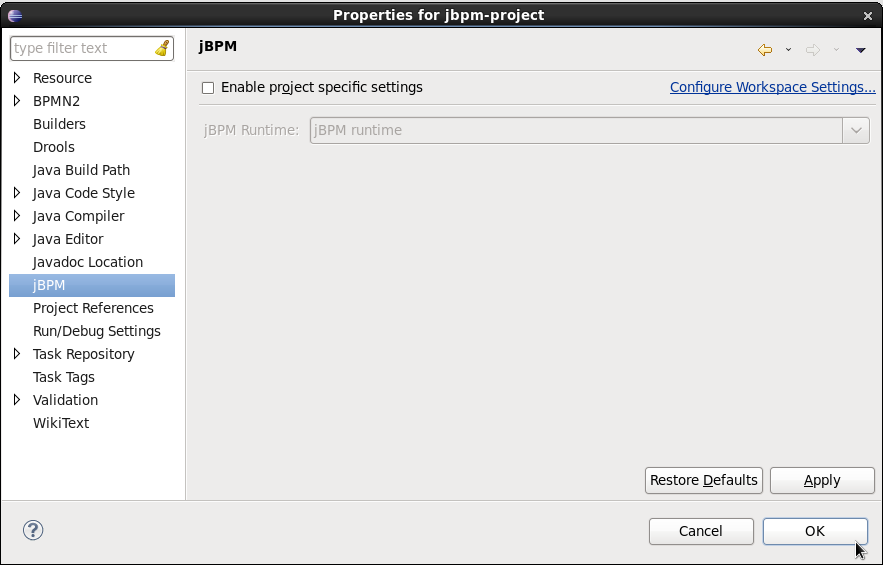

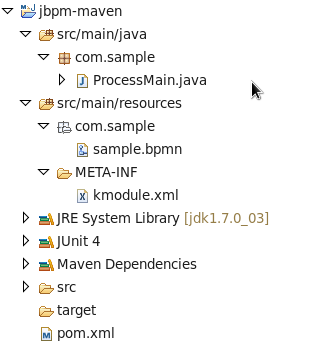

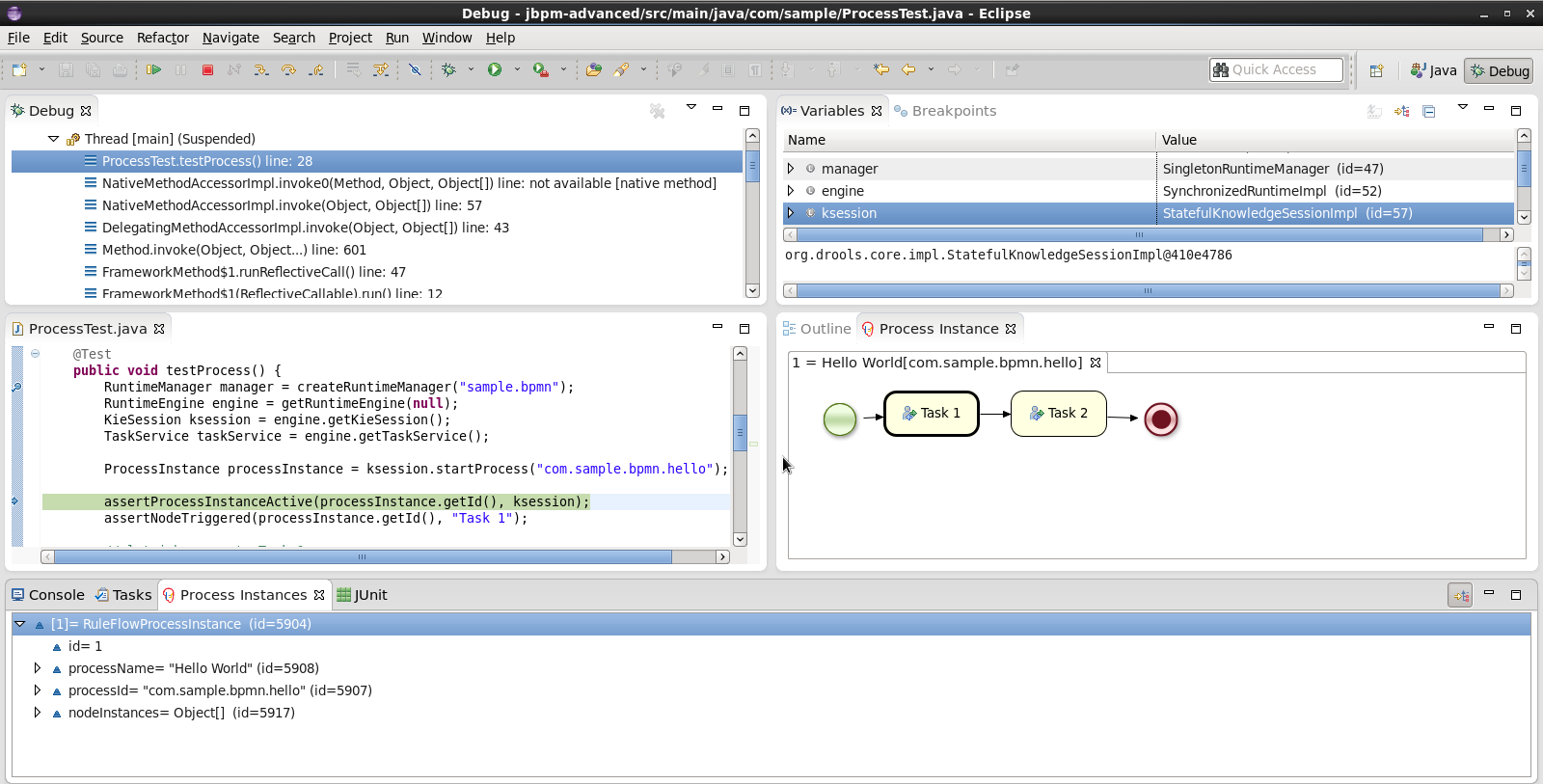

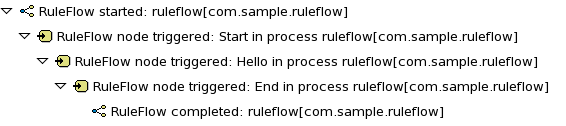

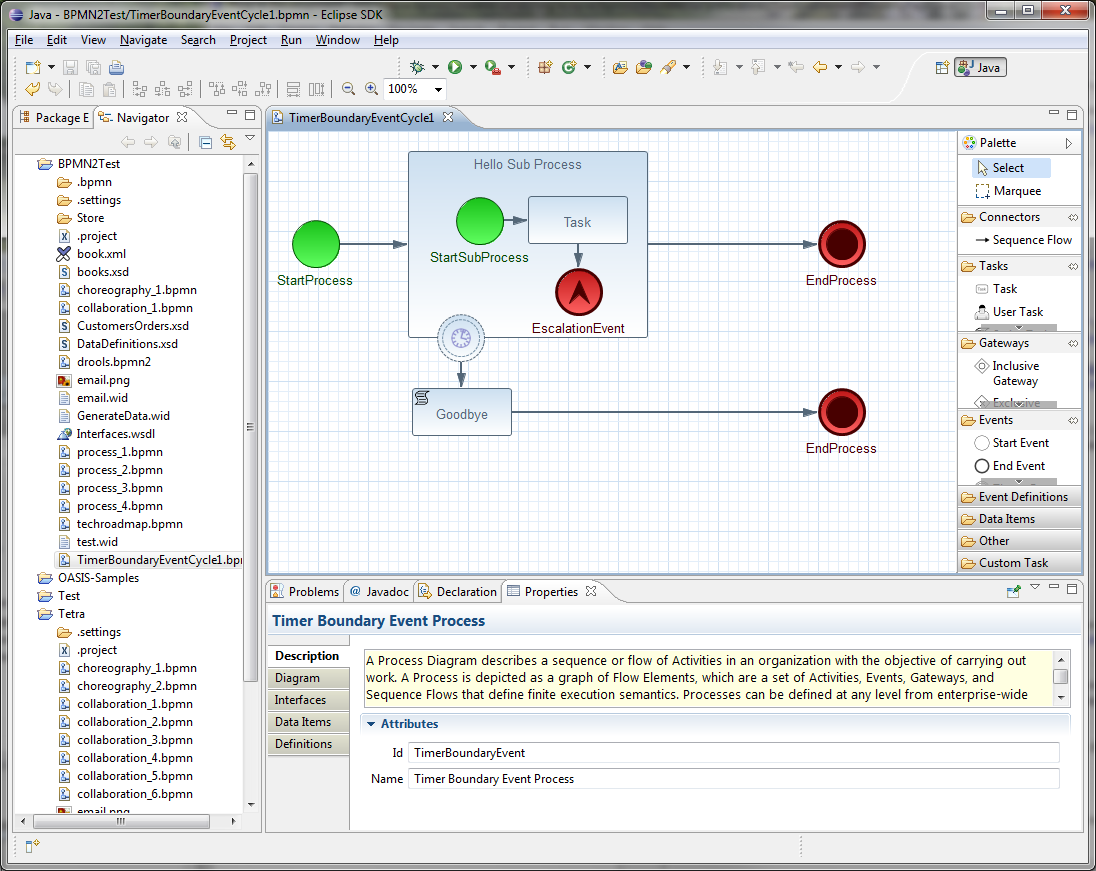

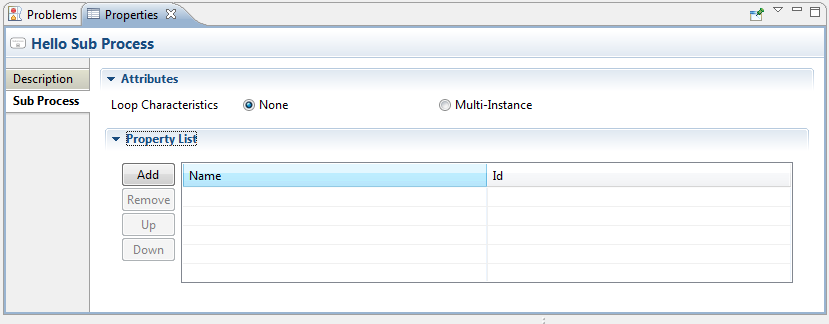

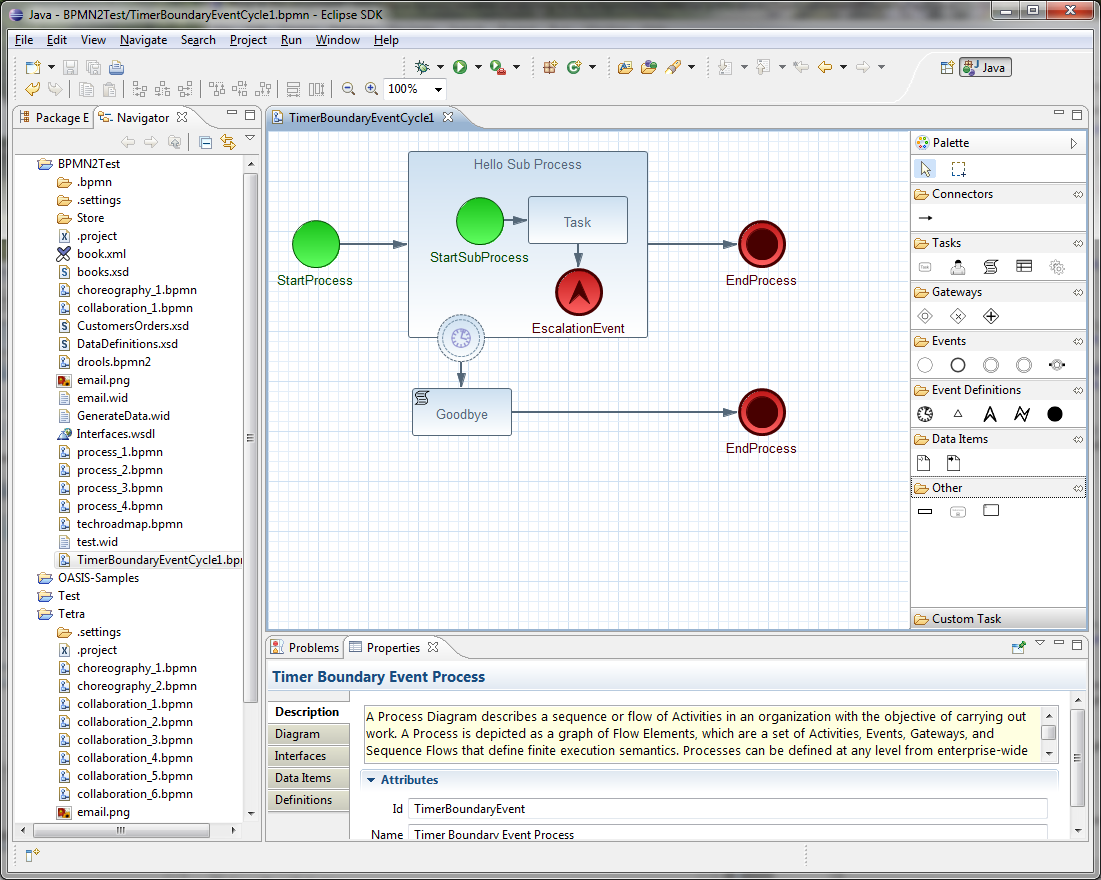

1.5. Eclipse Developer Tools

The Eclipse-based tools are a set of plugins to the Eclipse IDE that allow you to integrate your business processes in your development environment. They are targeted towards developers and include some wizards to get started, a graphical editor for creating your business processes (using drag and drop) and a lot of advanced testing and debugging capabilities.

It includes the following features:

-

Wizard for creating a new jBPM project

-

A graphical editor for BPMN 2.0 processes

-

The ability to plug in your own domain-specific nodes

-

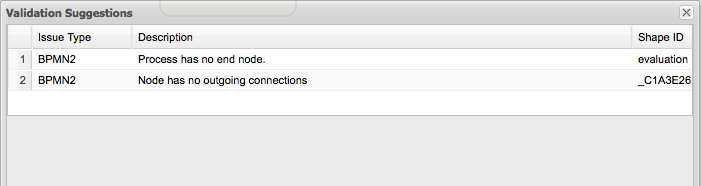

Validation

-

Runtime support (so you can select which version of jBPM you would like to use)

-

Graphical debugging to see all running process instances of a selected session, to visualize the current state of one specific process instance, etc.

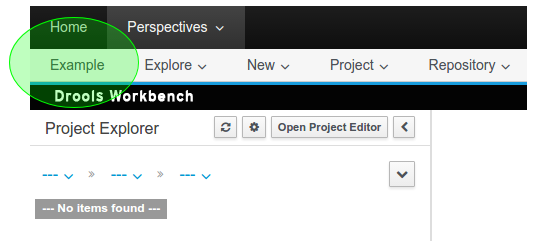

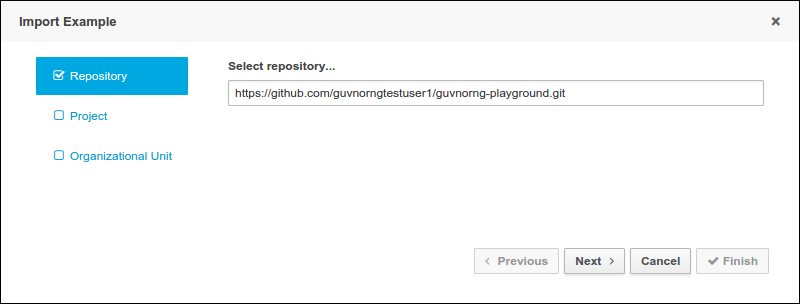

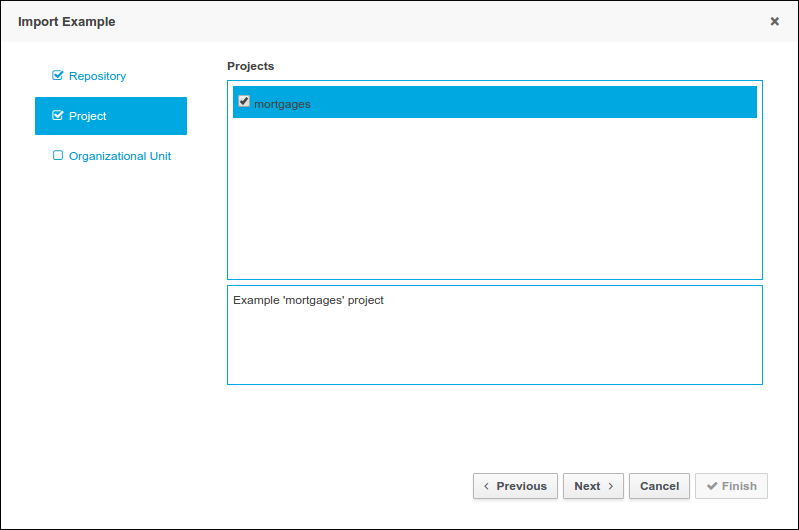

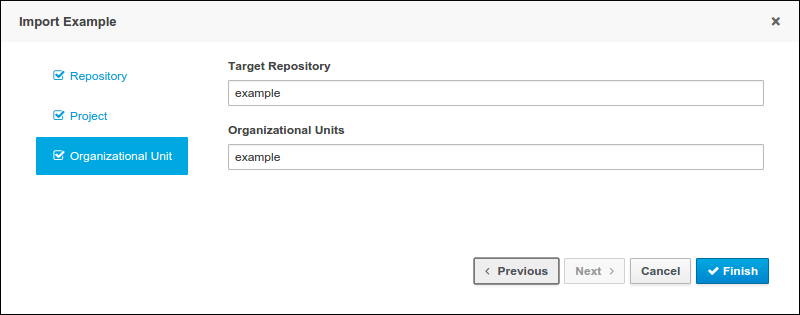

2. Getting Started

We recommend taking a look at our Getting Started page as a starting point for getting a full environment up and running with all the components you need in order to design, deploy, run and monitor a process. Alternatively, you can take a quick tutorial, available in the Installer chapter, that guides you through most of the components. It teaches you how to download and use the installer to create a demo setup, including most of the components, and uses a simple example to guide you through the most important features. Screencasts are available to help you out as well.

If you would like to read more first, the following chapters focus on the core jBPM engine (API, BPMN 2.0, etc.). Further chapters then describe the other components and more complex topics like domain-specific processes, flexible processes, etc. After reading the core chapters, you should be able to jump to other chapters that you might find interesting.

You can also start playing around with some examples that are offered in a separate download. Check out the Examples chapter to see how to start playing with these.

After reading through these chapters, you should be ready to start creating your own processes and integrating the jBPM engine with your application. These processes can be based on the installer setup or created from scratch.

2.1. Downloads

The latest releases can be downloaded from jBPM.org. Just pick the artifact you want:

- server: single zip distribution with jBPM server (including WildFly, Business Central, jBPM case management showcase and service repository)
- bin: all the jBPM binaries (JARs) and their transitive dependencies
- src: the sources of the core components
- docs: the documentation
- examples: some jBPM examples, which can be imported into Eclipse
- installer: the jBPM Installer, which downloads and installs a demo setup of jBPM
- installer-full: the full jBPM Installer, which downloads and installs a demo setup of jBPM and already contains a number of prepackaged dependencies (so they don’t need to be downloaded separately)

Older releases are archived at http://downloads.jboss.org/jbpm/release/.

Alternatively, you can use one of the many Docker images available in the Download section.

2.2. Community

Here are some useful links for the jBPM community:

- jBPM Setup and jBPM Usage user forums and mailing lists
- A JIRA bug tracking system for bugs, feature requests and roadmap

Please feel free to join us in our IRC channel at chat.freenode.net#jbpm. This is where most of the real-time discussion about the project takes place and where you can find most of the developers most of the time. Don’t have an IRC client installed? Simply go to http://webchat.freenode.net/, input your desired nickname, and specify #jbpm. Then click login to join the fun.

2.3. Sources

2.3.1. License

The jBPM code itself is licensed under the Apache License v2.0.

Some other components we integrate with have their own license:

- The new Eclipse BPMN2 plugin is licensed under the Eclipse Public License (EPL) v1.0.
- The legacy web-based designer is based on Oryx/Wapama and is licensed under the MIT License.
- The Drools project is licensed under the Apache License v2.0.

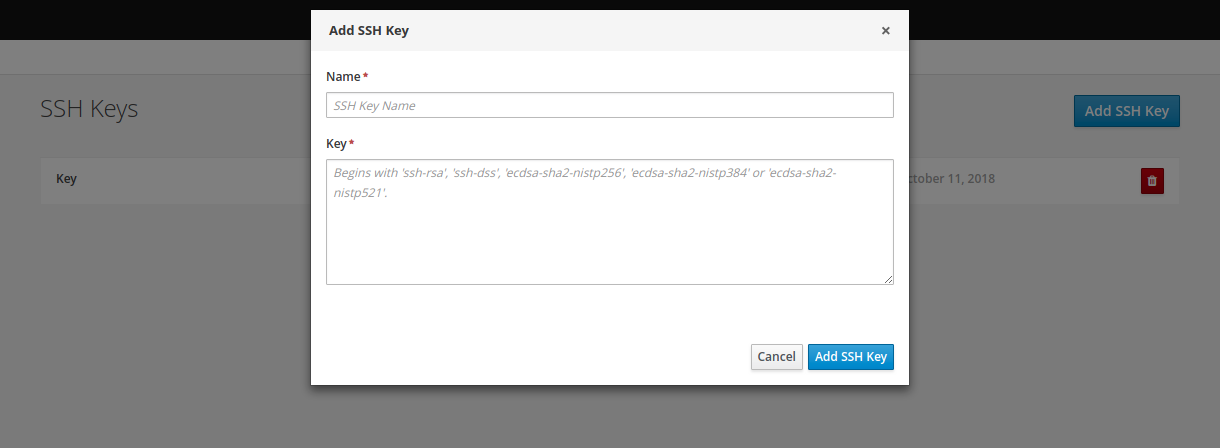

2.3.2. Source code

jBPM now uses Git for its source code version control system. The sources of the jBPM project can be found here (including all releases starting from jBPM 5.0-CR1):

The source of some of the other components can be found here:

2.3.3. Building from source

If you’re interested in building the source code, contributing, releasing, etc., make sure to read this README.

2.4. Getting Involved

We are often asked "How do I get involved?". Luckily the answer is simple: just write some code and submit it :) There are no hoops you have to jump through or secret handshakes. We have a very minimal "overhead" that we do request to allow for scalable project development. Below we provide a general overview of the tools and "workflow" we request, along with some general advice.

If you contribute some good work, don’t forget to blog about it :)

2.4.1. Sign up to jboss.org

Signing up to jboss.org will give you access to the JBoss wiki, forums and JIRA. Go to https://www.jboss.org/ and click "Register".

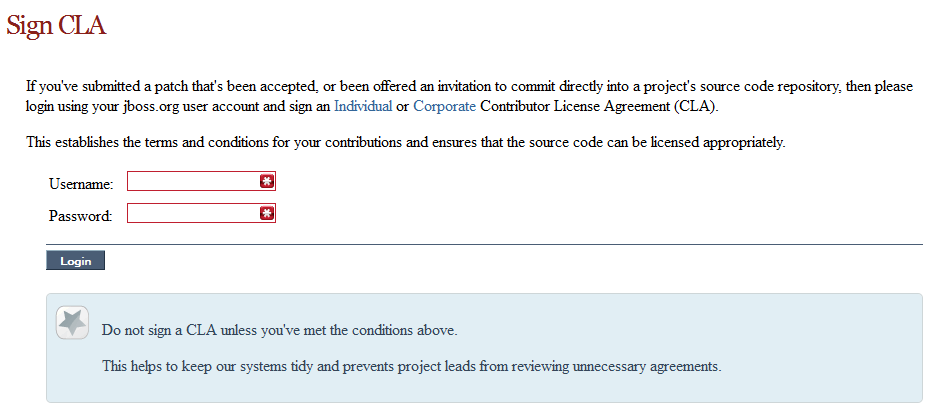

2.4.2. Sign the Contributor Agreement

The only form you need to sign is the contributor agreement, which is fully automated via the web. As the image below says: "This establishes the terms and conditions for your contributions and ensures that source code can be licensed appropriately."

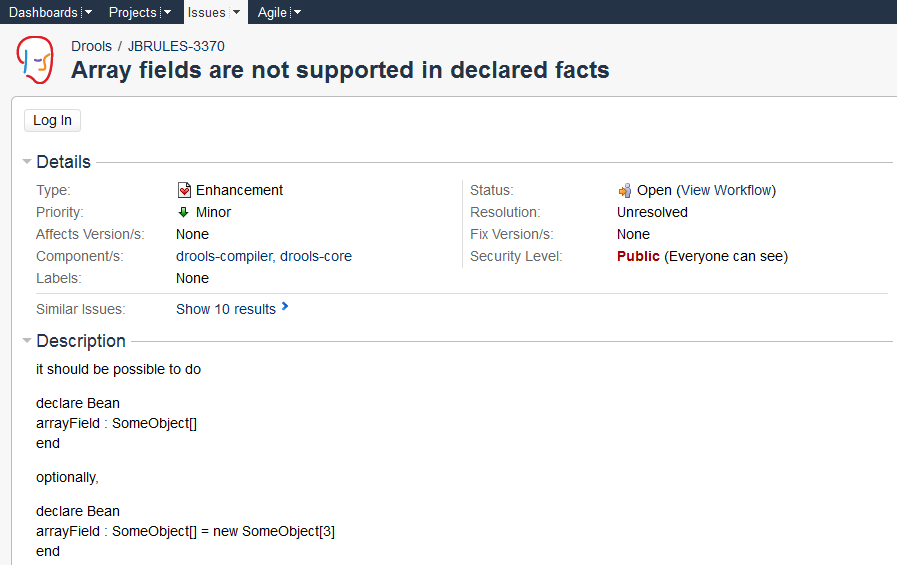

2.4.3. Submitting issues via JIRA

To be able to interact with the core development team, you will need to use JIRA, the issue tracker. This ensures that all requests are logged and allocated to a release schedule, and that all discussions are captured in one place. Bug reports, bug fixes, feature requests and feature submissions should all go here. General questions should be asked on the mailing lists.

Minor code submissions, like formatting or documentation fixes, do not need an associated JIRA issue.

2.4.4. Fork GitHub

With the contributor agreement signed and your requests submitted to JIRA, you should now be ready to code :) Create a GitHub account and fork any of the Drools, jBPM or Guvnor repositories. The fork will create a copy in your own GitHub space, which you can work on at your own pace. If you make a mistake, don’t worry: blow it away and fork again. Note that each GitHub repository provides you with a clone (checkout) URL; GitHub will provide you with URLs specific to your fork.

2.4.5. Writing Tests

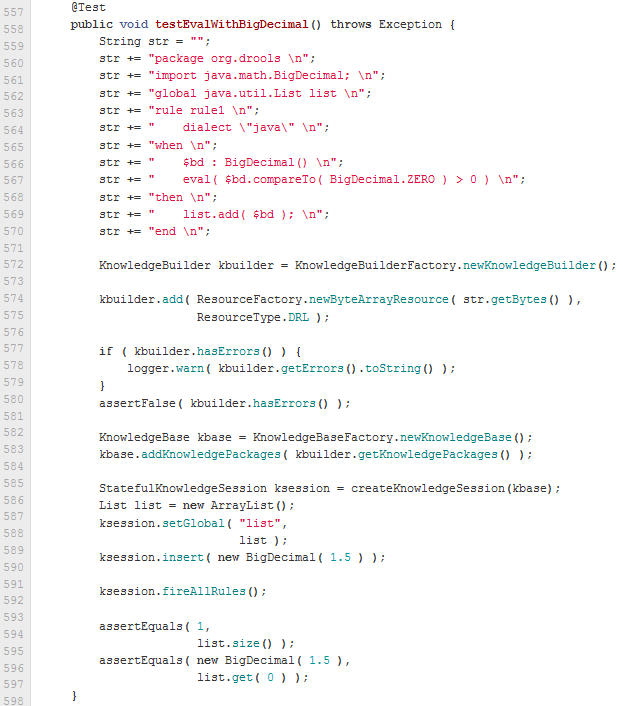

When writing tests, try to keep them minimal and self-contained. We prefer to keep the DRL fragments within the test, as it makes for quicker reviewing. If there is a large number of rules, using a String is not practical; in that case, by all means place them in separate DRL files to be loaded from the classpath. If your tests need to use a model, please try to use those that already exist for other unit tests, such as Person, Cheese or Order. If no classes exist that have the fields you need, try to update the fields of existing classes before adding a new class.

There is a vast number of tests to look over for ideas; MiscTest is a good place to start.

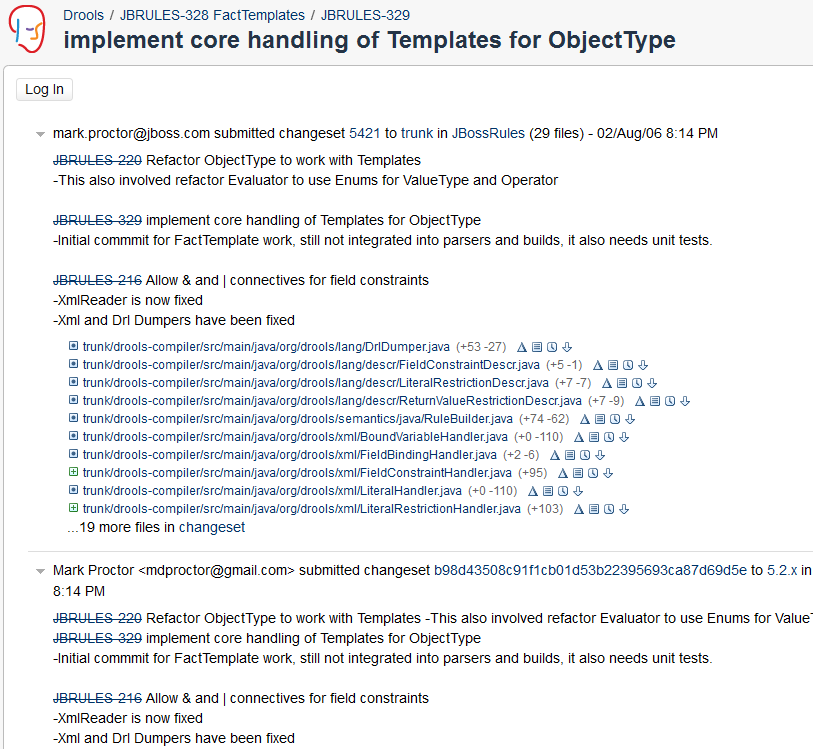

2.4.6. Commit with Correct Conventions

When you commit, make sure you use the correct conventions. The commit must start with the JIRA issue id, such as DROOLS-1946. This ensures the commits are cross-referenced via JIRA, so we can see all commits for a given issue in the same place. After the id, the title of the issue should come next. Then start a new line, indented with a dash, to provide additional information related to this commit. Use an additional new line and dash for each separate point you wish to make. You may add additional JIRA cross-references to the same commit, if appropriate. In general, try to avoid combining unrelated issues in the same commit.
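The commit convention can be checked mechanically. This Java sketch assumes the issue id and the title share the first line, separated by a space, and that each further point sits on its own line starting with optional indentation and `- `; blank lines are tolerated. `CommitMessageCheck` is a hypothetical helper, not part of the project's tooling, and the sample messages below are invented.

```java
import java.util.regex.Pattern;

// Hypothetical checker for the commit convention described above.
final class CommitMessageCheck {
    // JIRA key such as DROOLS-1946, a space, then the issue title.
    private static final Pattern SUBJECT = Pattern.compile("^[A-Z][A-Z0-9]+-\\d+ \\S.*$");
    // Each additional point: optional indentation, a dash, a space, then text.
    private static final Pattern DETAIL = Pattern.compile("^\\s*- \\S.*$");

    private CommitMessageCheck() { }

    static boolean isValid(String message) {
        String[] lines = message.split("\\R"); // split on any line break
        if (!SUBJECT.matcher(lines[0]).matches()) {
            return false;
        }
        for (int i = 1; i < lines.length; i++) {
            String line = lines[i];
            if (!line.isBlank() && !DETAIL.matcher(line).matches()) {
                return false;
            }
        }
        return true;
    }
}
```

A check like this could run in a local Git `commit-msg` hook, so a malformed message is caught before it reaches a pull request.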

Don’t forget to rebase your local fork from the original master and then push your commits back to your fork.

2.4.7. Submit Pull Requests

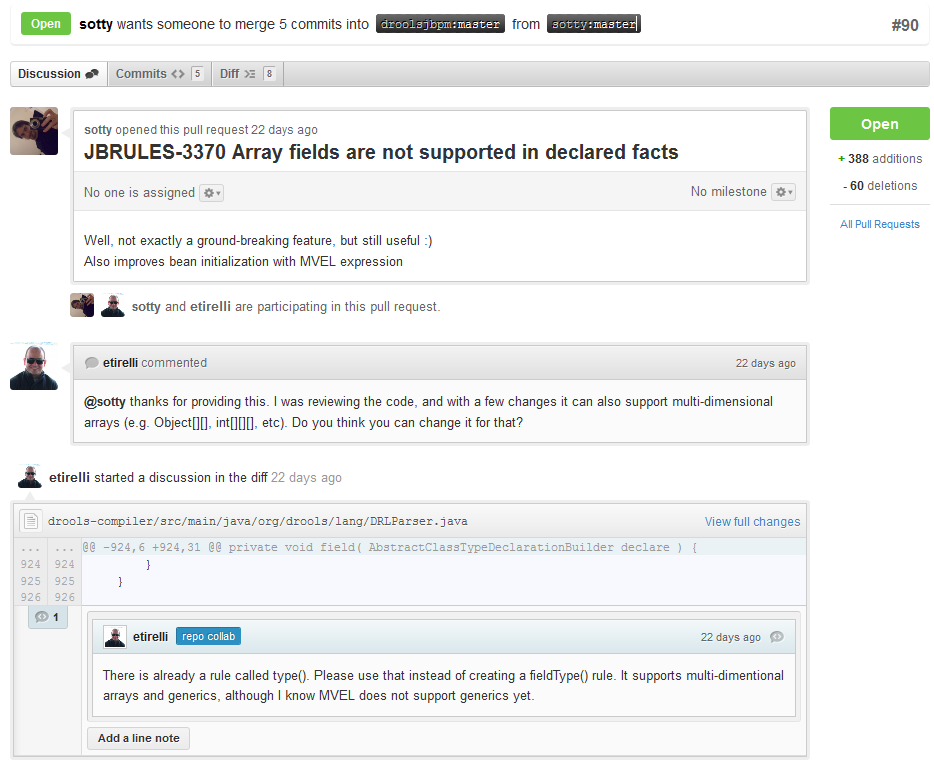

With your code rebased from the original master and pushed to your personal GitHub area, you can now submit your work as a pull request. If you look at the top of the page in GitHub for your work area, there will be a "Pull Request" button. Selecting this will then provide a GUI to automate the submission of your pull request.

The pull request then goes into a queue for everyone to see and comment on. Below you can see a typical pull request. Pull requests allow for discussion, and they show all associated commits and the diffs for each commit. The discussions typically involve code reviews, which provide helpful suggestions for improvements and allow us to leave inline comments on specific parts of the code. Don’t be disheartened if we don’t merge straight away; it can often take several revisions before we accept a pull request. Luckily, GitHub makes it trivial to go back to your code, make some more commits and then update your pull request to your latest and greatest.

It can take time for us to get round to responding to pull requests, so please be patient. Submitted tests that come with a fix will generally be applied quite quickly, whereas tests on their own will often wait until we have time to pair them with a fix. Don’t forget to rebase and resubmit your request from time to time; otherwise it will accumulate merge conflicts, and core developers will generally ignore those.

2.5. What to do if I encounter problems or have questions?

You can always contact the jBPM community for assistance.

IRC: #jbpm at chat.freenode.net

jBPM Setup Google Group - Installation, configuration, setup and administration discussions for Business Central, Eclipse, runtime environments and general enterprise architectures.

jBPM Usage Google Group - Authoring, executing and managing processes with jBPM. Any questions regarding the use of jBPM. General API help and best practices in building BPM systems.

Visit our website for more options on how to get help.

Legacy jBPM User Forum - serves as an archive; post new questions to one of the Google Groups above

3. Business applications

3.1. Overview

A business application can be defined as an automated solution, built with selected frameworks and capabilities, that implements business functions and/or solves business problems. Capabilities can be (among others):

- persistence
- messaging
- transactions
- business processes, business rules
- planning solutions

A business application is more of a logical grouping of individual services that represent certain business capabilities. Usually they are deployed separately and can also be versioned individually. The overall goal is that the complete business application allows a particular domain to achieve its business goals, e.g. order management, accommodation management, etc.

Key characteristics of jBPM business applications:

-

Built on any runtime; the most popular options are SpringBoot, WildFly and Thorntail

-

Deployable to the cloud with a single command, on OpenShift, Kubernetes or Docker

-

UI agnostic: no UI framework is enforced, so users can make their own choice

-

Configurable database profiles, allowing a smooth transition from one database to another with a single parameter or switch

-

Generated, which makes it easy for developers to get started without the initial failures that are usually related to configuration

-

Multiple project types:

-

data model project - shared data model between business assets and service

-

business assets (kjar) project - easily importable into Business Central

-

service project - the actual service with various capabilities

-

Configuration for:

-

Maven repository - settings.xml

-

database profiles

-

deployment setup: local, Docker and OpenShift

The service project is the deployable one, and in most cases it includes the business assets and data model projects. The data model project represents the common data structures shared between the service implementation and the business assets. This enables proper encapsulation and promotes reuse, while discouraging shortcuts that turn data model classes into something more than they are, such as putting too much implementation into the data model.

Business applications you build are not restricted to having only one project of each type. To build the solution you need, your business application can:

-

Have multiple data model projects - each service project can expose its own public data model

-

Have multiple business assets (kjar) projects - in case there is a business need for it

-

Have multiple service projects - to split services into smaller components for better manageability

-

Have UI modules - either per service (embedded in the service project) or a federated one (separate project for UI only)

-

Service projects can communicate with each other either directly or via business processes

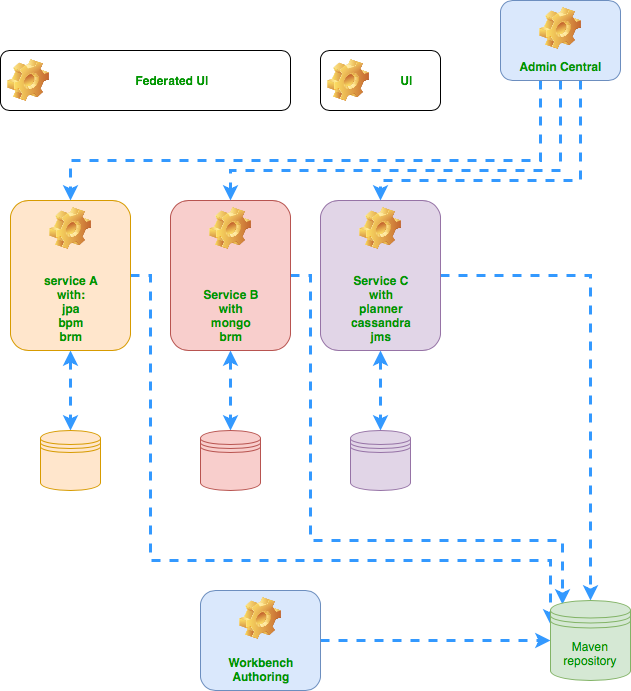

The following diagram represents a sample business application.

3.2. Create your business application

A business application can be created in multiple ways, depending on the project types you need.

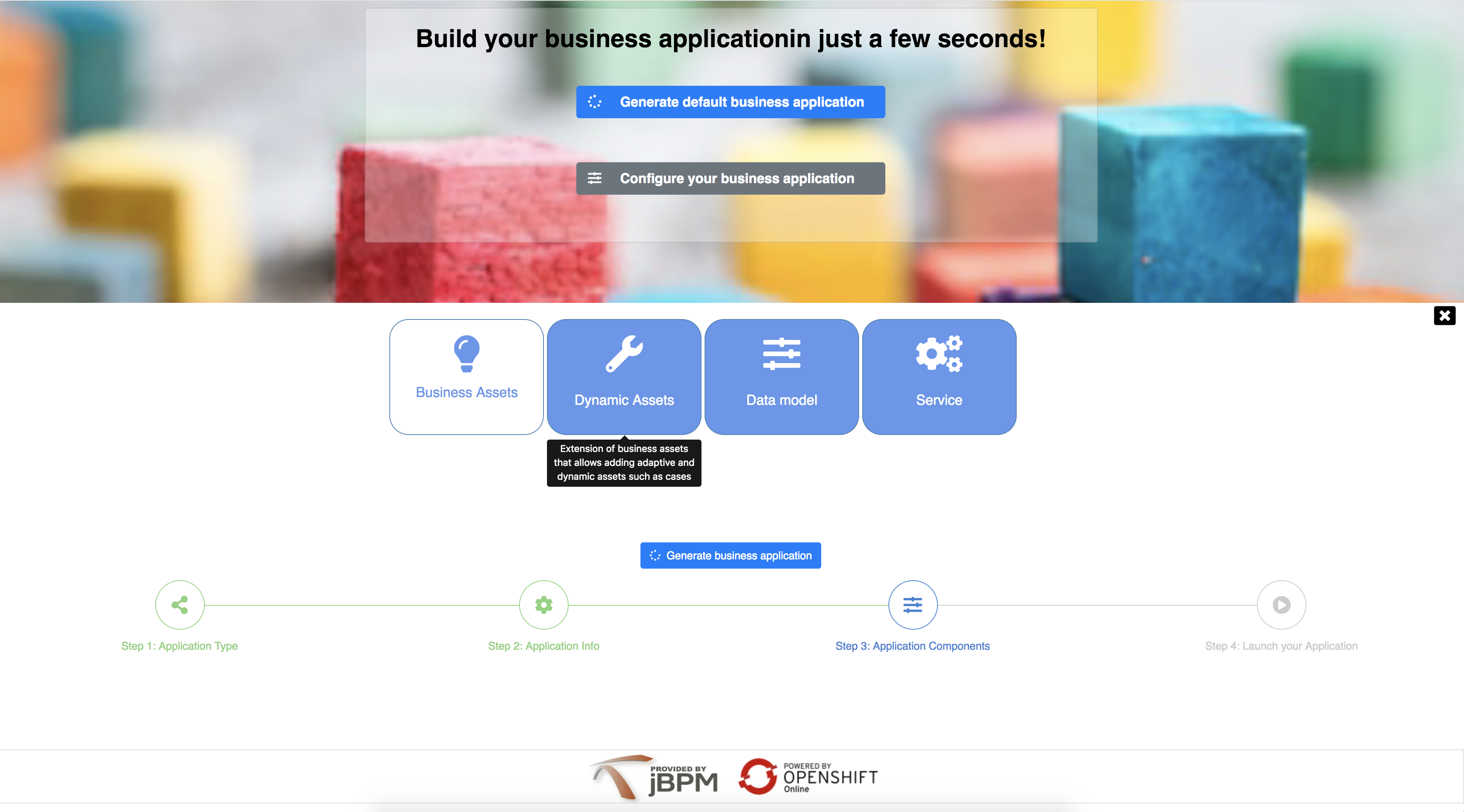

3.2.1. Generate business application

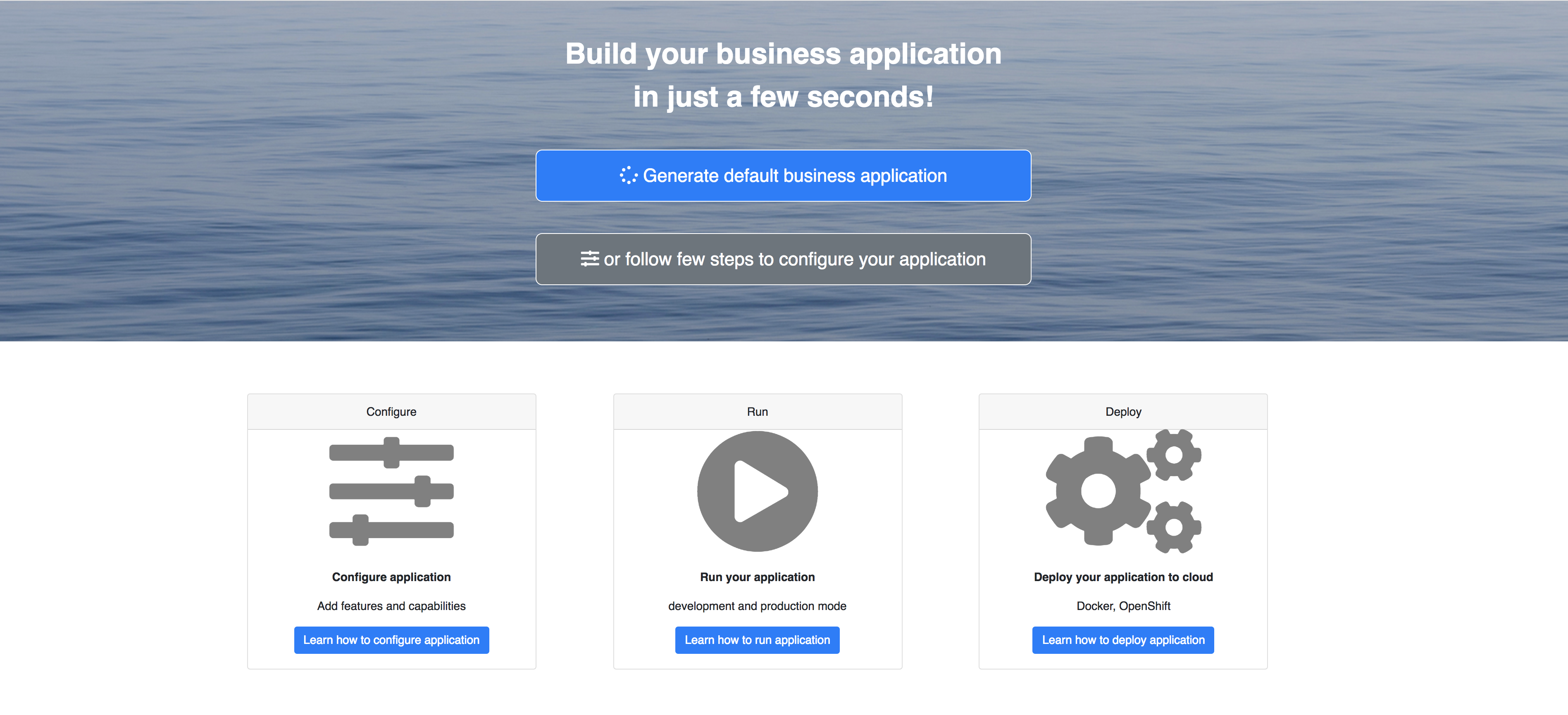

The fastest, and recommended, way to generate your business application is the jBPM online service: start.jbpm.org

With the online service you can:

-

generate your business app using a default (most commonly used) configuration

-

configure your business application to include specific features that you need

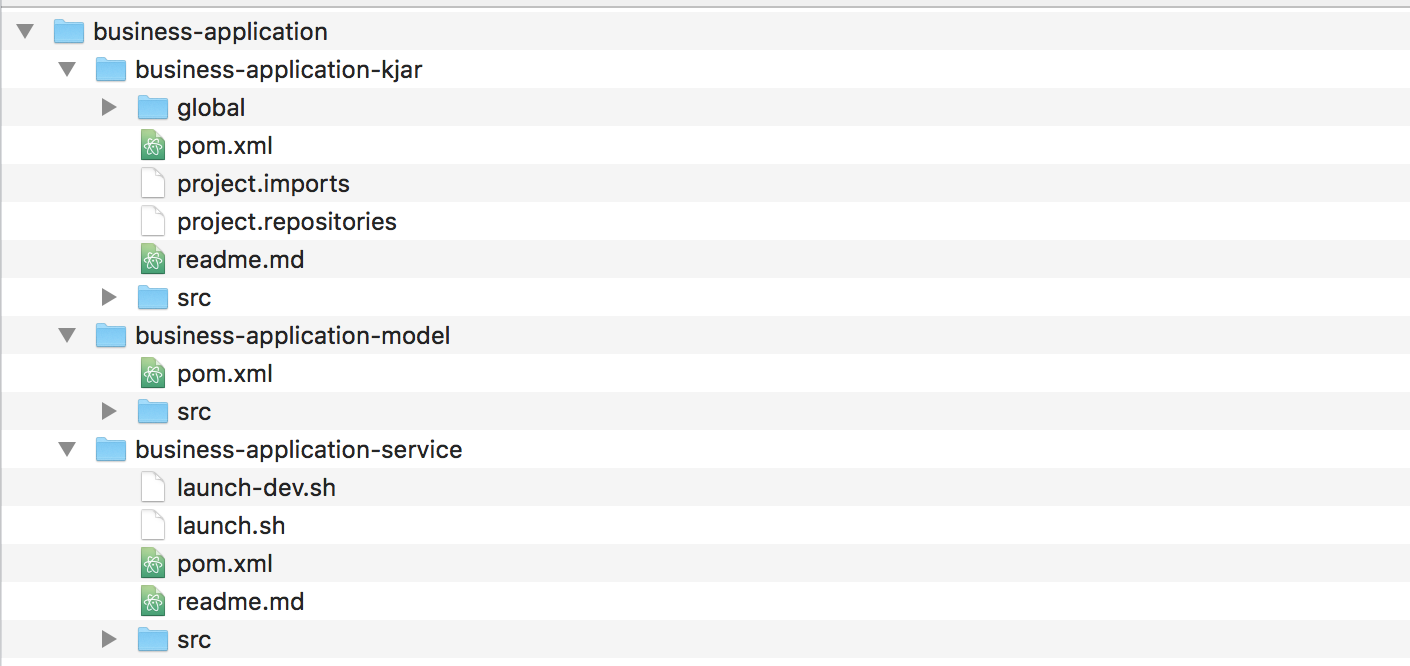

The generated application is delivered as a zip archive with the following structure.

The following sections review the individual options you can choose from.

3.2.1.1. Capabilities

Capabilities essentially define the features that your business application will be equipped with. Available options are:

-

Business automation covers features for process management, case management, decision management and optimisation. By default, all of these are configured in the service project of your business application, although you can turn them off via configuration.

-

Decision management covers mainly decision and rules related features (backed by the Drools project)

-

Business optimisation covers planning problems and solutions related features (backed by the OptaPlanner project)

3.2.1.2. Application information

General information about the application:

-

name - the name used for the generated projects

-

package - a valid Java package name that is created in the projects and used as the group ID of the Maven projects

-

version - the version of jBPM/KIE to be used for the service project

3.2.1.3. Project types

Selection of project types to be included in the business application:

-

data model - a basic Maven jar project that holds the data structures

-

business assets - kjar project that can be easily imported into Business Central for development

-

service - service project that will include chosen capabilities with all bits configured

3.2.2. Manually create business application

If you can't use the jBPM online service to generate the application, you can create the individual projects manually. jBPM provides Maven archetypes that can be used to generate the application; in fact, the jBPM online service uses these archetypes behind the scenes.

Business assets project archetype

org.kie:kie-kjar-archetype:7.33.0.Final

Service project archetype

org.kie:kie-service-spring-boot-archetype:7.33.0.Final

Data model archetype

org.apache.maven.archetypes:maven-archetype-quickstart:1.3

The following commands generate all three types of projects:

mvn archetype:generate -B -DarchetypeGroupId=org.kie -DarchetypeArtifactId=kie-model-archetype -DarchetypeVersion=7.33.0.Final -DgroupId=com.company -DartifactId=test-model -Dversion=1.0-SNAPSHOT -Dpackage=com.company.model

mvn archetype:generate -B -DarchetypeGroupId=org.kie -DarchetypeArtifactId=kie-kjar-archetype -DarchetypeVersion=7.33.0.Final -DgroupId=com.company -DartifactId=test-kjar -Dversion=1.0-SNAPSHOT -Dpackage=com.company

mvn archetype:generate -B -DarchetypeGroupId=org.kie -DarchetypeArtifactId=kie-service-spring-boot-archetype -DarchetypeVersion=7.33.0.Final -DgroupId=com.company -DartifactId=test-service -Dversion=1.0-SNAPSHOT -Dpackage=com.company.service -DappType=bpm

When you generate the projects from the archetypes in the same directory, you should end up with exactly the same structure as generated by the jBPM online service.

3.3. Run your business application

Once your business application is created, the next step is to actually run it.

3.3.1. Launch application

By default, a business application has a single runnable project: the service project. The service project comes with two scripts, each available for both Linux and Windows:

-

launch.sh/launch.bat

-

launch-dev.sh/launch-dev.bat

The main difference between these two scripts is the target execution mode:

-

launch.sh/bat starts the application in standalone mode, without additional requirements.

-

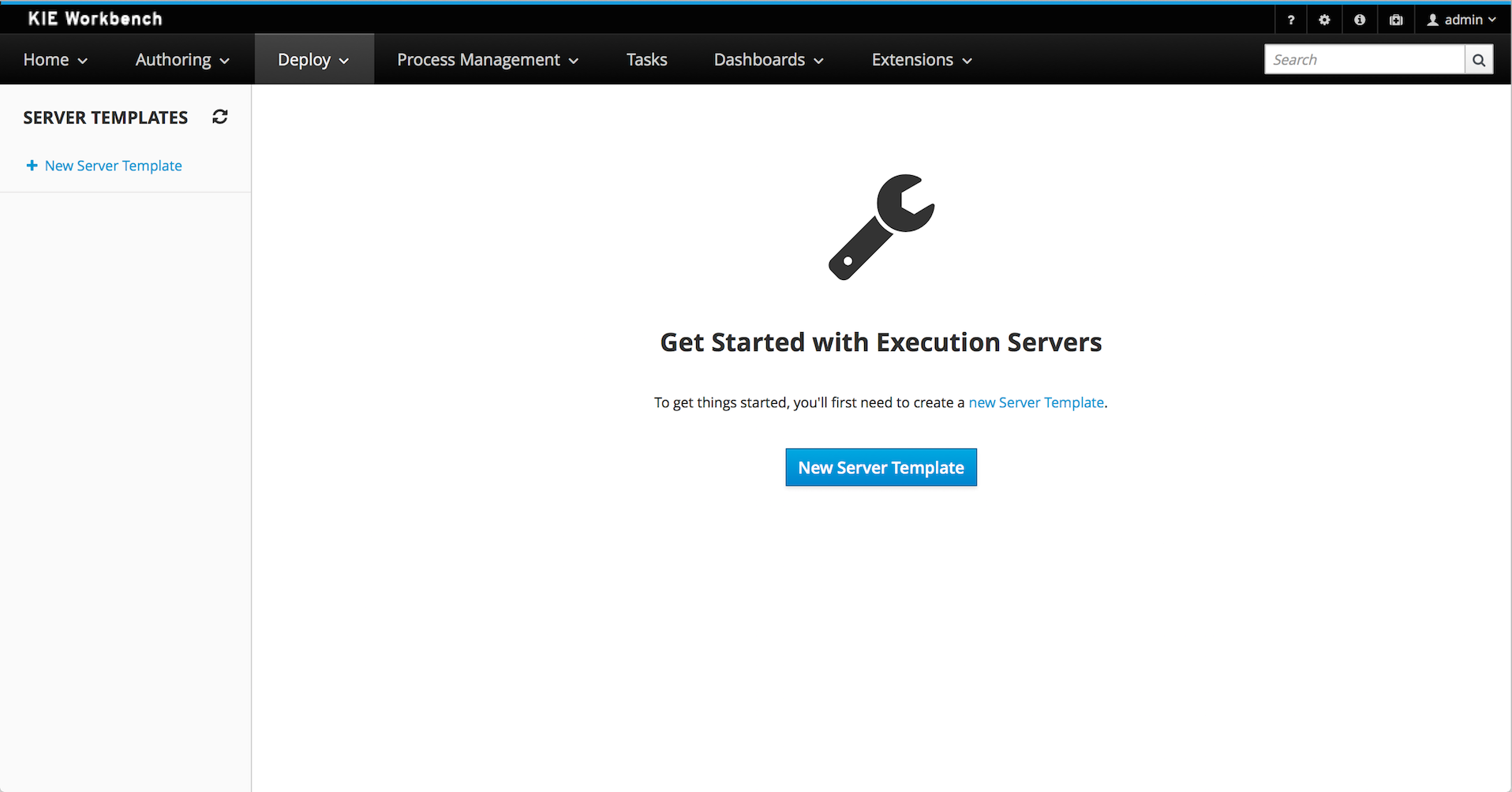

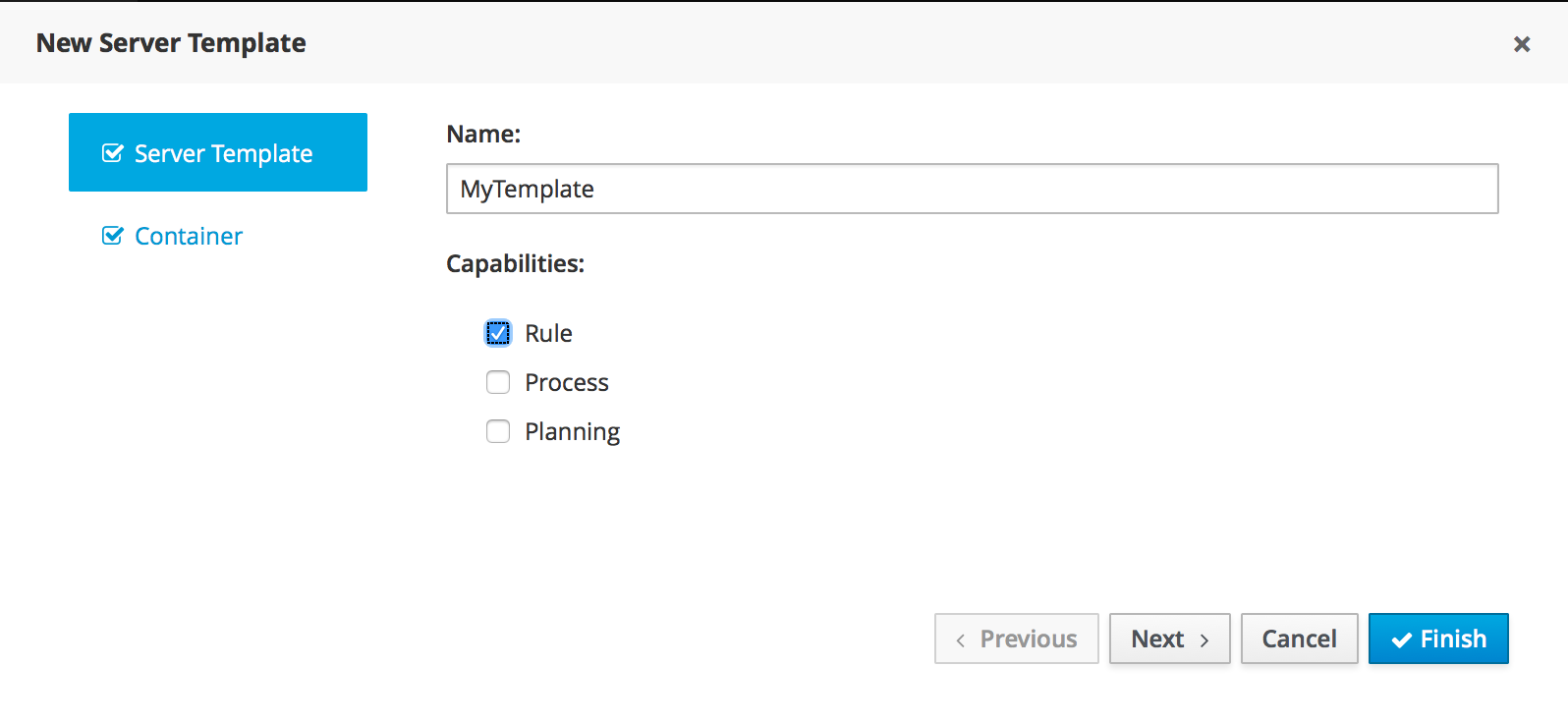

launch-dev.sh/bat starts the application in development mode (in other words, managed mode), which requires Business Central to be available as the jBPM controller.

Development mode is meant to allow people to work on the business assets projects and dynamically deploy changes to the business application without the need to restart it. At the same time it provides a complete monitoring environment over business automation capabilities (process instances, tasks, jobs, etc).

To launch your application, go into the service project ({your business application name}-service) and run:

./launch.sh clean install for Linux/Unix

./launch.bat clean install for Windows

The clean install part of the command tells Maven how to build. The script then builds the projects in the following order:

-

Data model

-

Business assets

-

Service

The first time, this might take a while because all dependencies of the project are downloaded. At the end of the build the application starts, and after a few seconds you should see output similar to the following:

INFO o.k.s.s.a.KieServerAutoConfiguration : KieServer (id business-application-service (name business-application-service)) started initialization process

INFO o.k.server.services.impl.KieServerImpl : Server Default Extension has been successfully registered as server extension

INFO o.k.server.services.impl.KieServerImpl : Drools KIE Server extension has been successfully registered as server extension

INFO o.k.server.services.impl.KieServerImpl : DMN KIE Server extension has been successfully registered as server extension

INFO o.k.s.api.marshalling.MarshallerFactory : Marshaller extensions init

INFO o.k.server.services.impl.KieServerImpl : jBPM KIE Server extension has been successfully registered as server extension

INFO o.k.server.services.impl.KieServerImpl : Case-Mgmt KIE Server extension has been successfully registered as server extension

INFO o.k.server.services.impl.KieServerImpl : jBPM-UI KIE Server extension has been successfully registered as server extension

INFO o.k.s.s.impl.policy.PolicyManager : Registered KeepLatestContainerOnlyPolicy{interval=0 ms} policy under name KeepLatestOnly

INFO o.k.s.s.impl.policy.PolicyManager : Policy manager started successfully, activated policies are []

INFO o.k.server.services.impl.KieServerImpl : Selected startup strategy ControllerBasedStartupStrategy - deploys kie containers given by controller ignoring locally defined

INFO o.k.s.services.impl.ContainerManager : About to install containers '[]' on kie server 'KieServer{id='business-application-service'name='business-application-service'version='7.9.0.Final'location='http://localhost:8090/rest/server'}'

INFO o.k.server.services.impl.KieServerImpl : KieServer business-application-service is ready to receive requests

INFO o.k.s.s.a.KieServerAutoConfiguration : KieServer (id business-application-service) started successfully

INFO o.s.j.e.a.AnnotationMBeanExporter : Registering beans for JMX exposure on startup

INFO s.b.c.e.t.TomcatEmbeddedServletContainer : Tomcat started on port(s): 8090 (http)

INFO c.c.b.service.Application : Started Application in 14.534 seconds (JVM running for 15.193)

You should now be able to access your business application at http://localhost:8090/

3.3.2. Launch application in development mode

| Development mode requires Business Central to be available, by default at http://localhost:8080/jbpm-console. The easiest way to get it up and running is to use the jBPM single distribution, which can be downloaded at jbpm.org. See the Getting Started guide to familiarize yourself with Business Central. |

Make sure you have Business Central up and running before launching your business application in development mode.

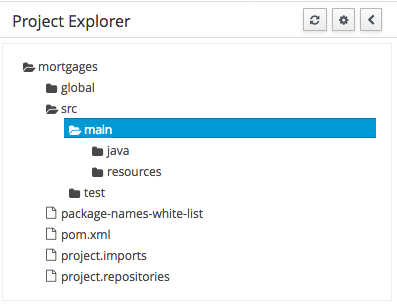

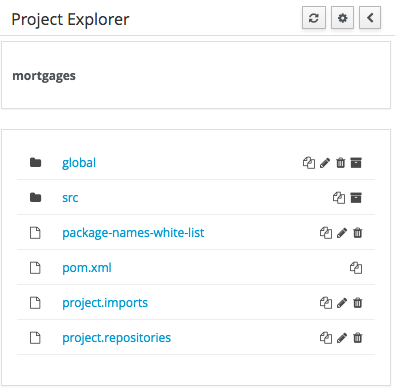

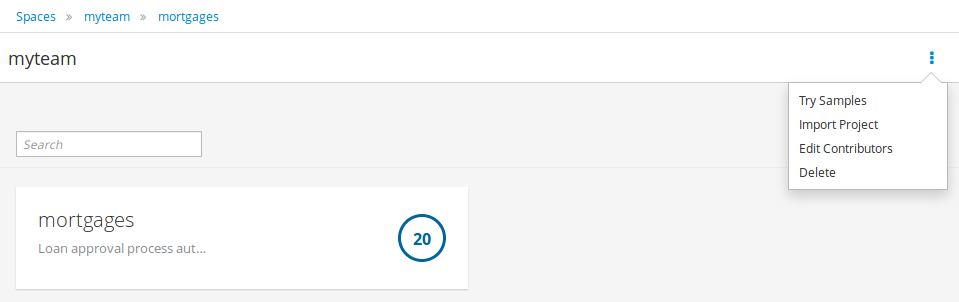

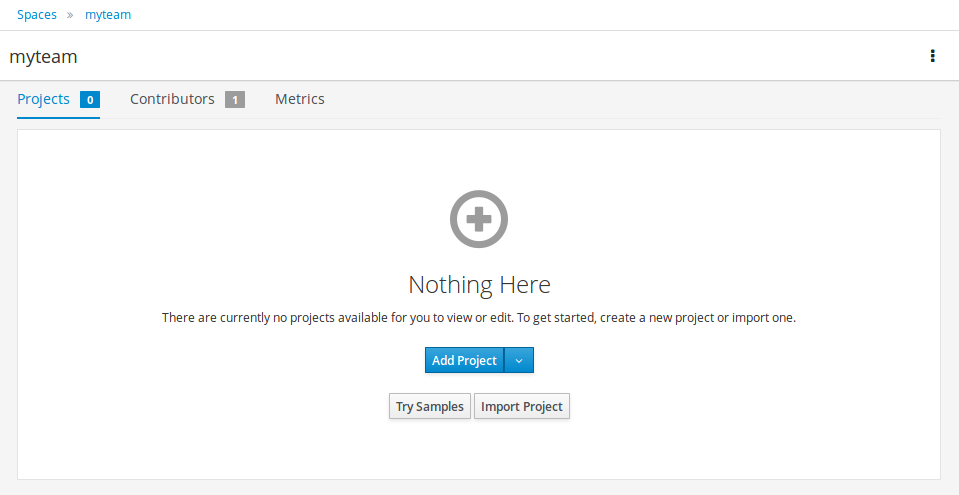

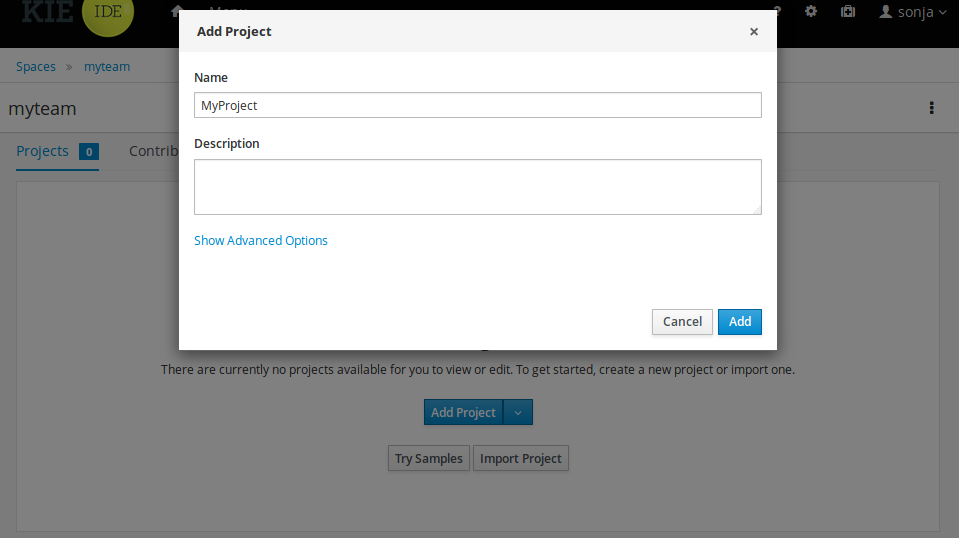

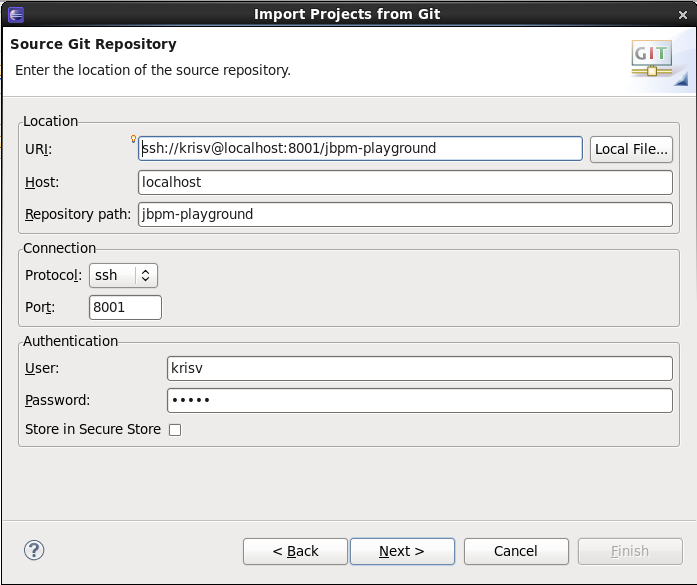

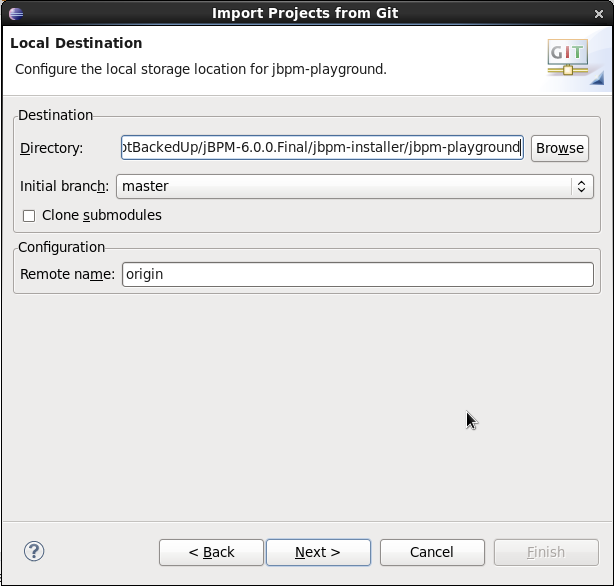

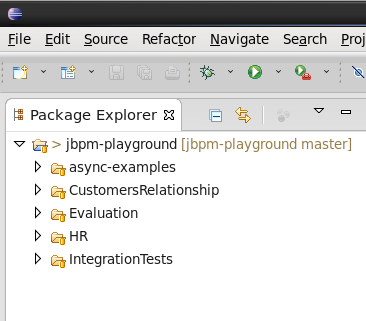

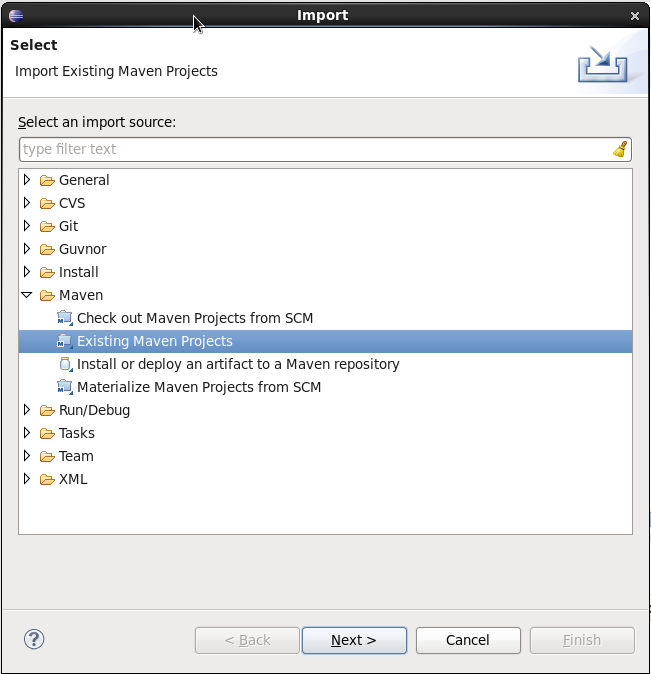

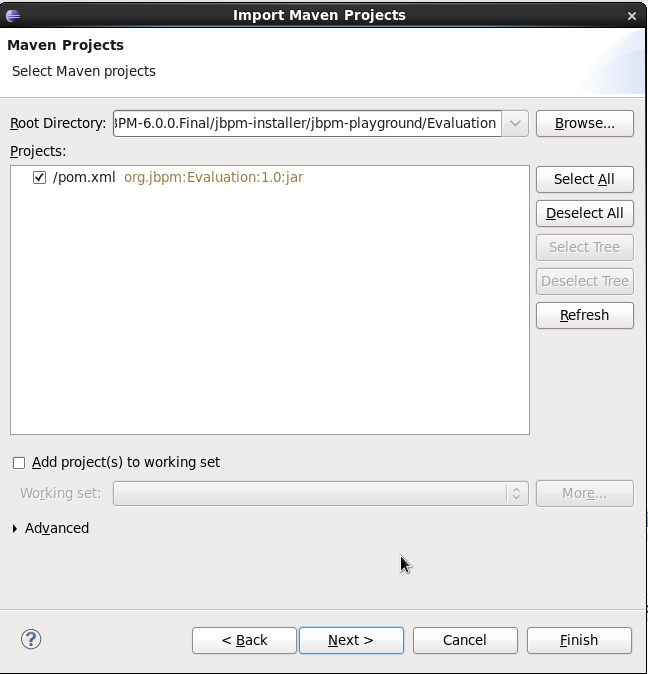

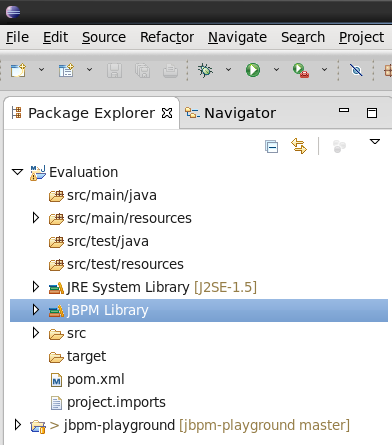

3.3.3. Import your business assets project into Business Central

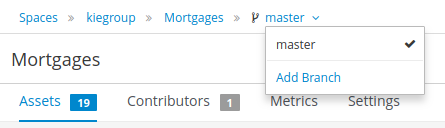

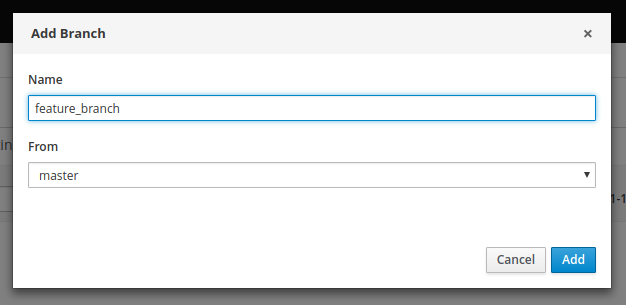

The business assets project you just created can be imported into Business Central as long as it is a valid Git repository. To make it one:

-

Go into the business assets project: {your business application name}-kjar

-

Execute git init

-

Execute git add -A

-

Execute git commit -m "Initial project structure"

-

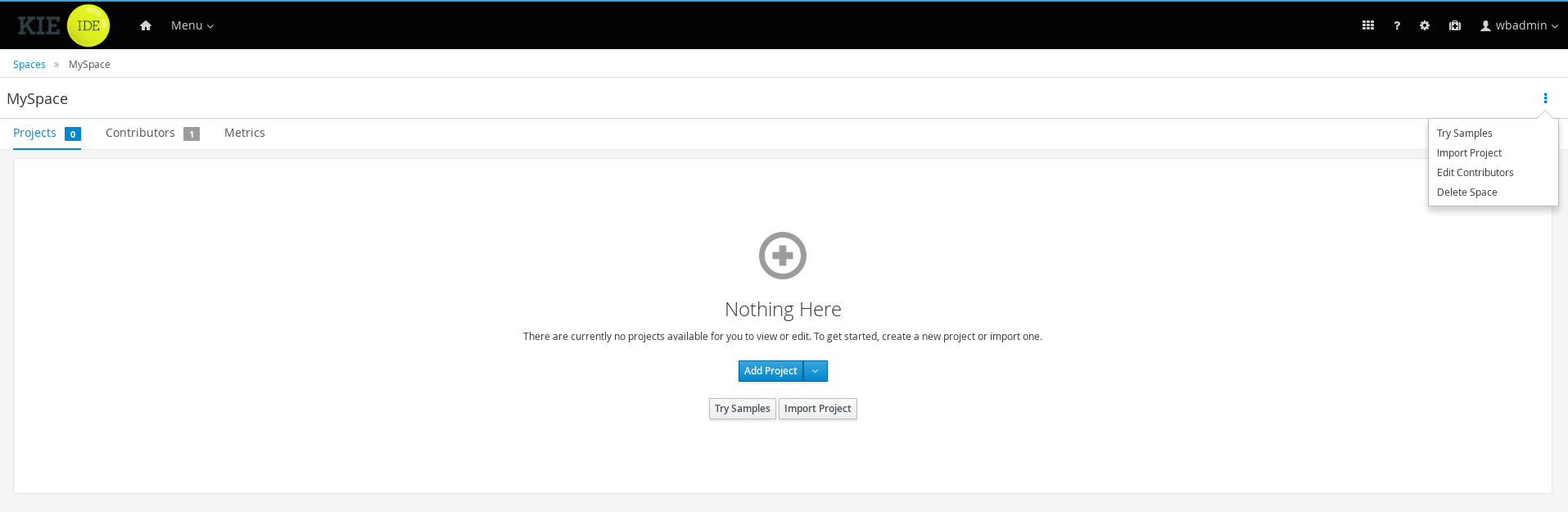

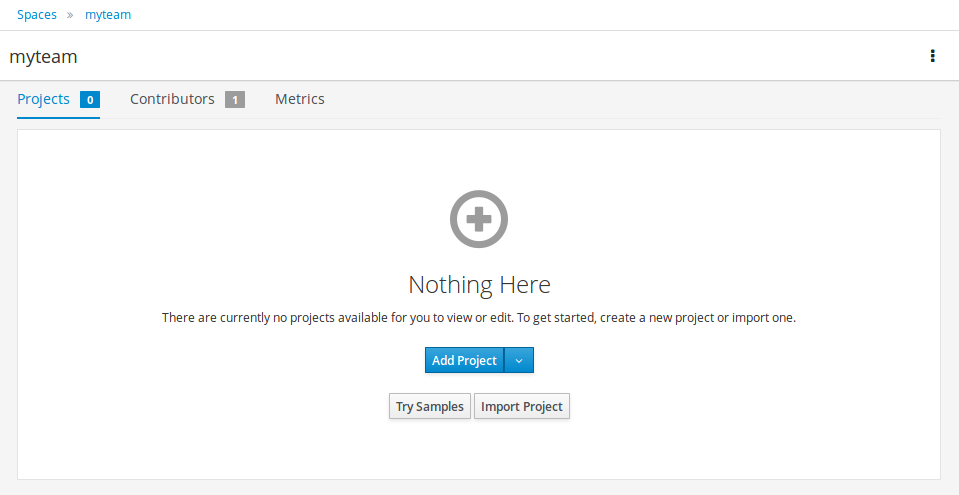

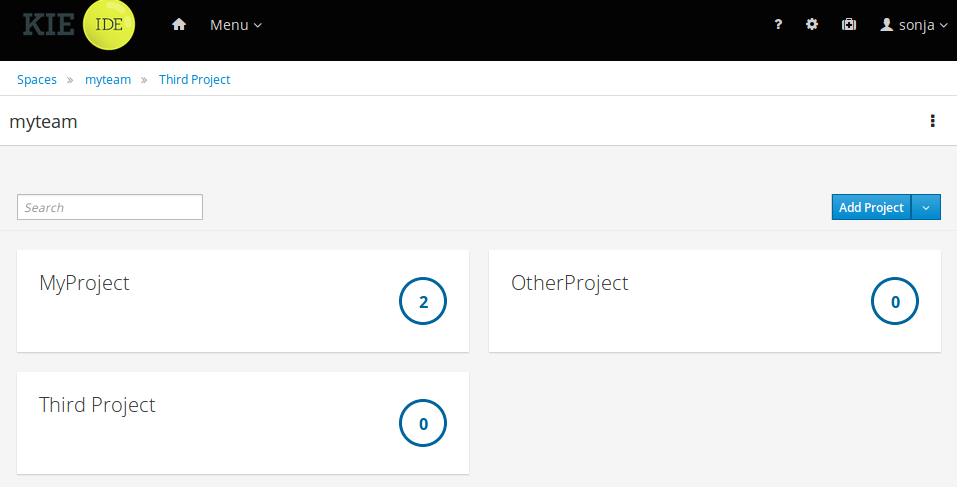

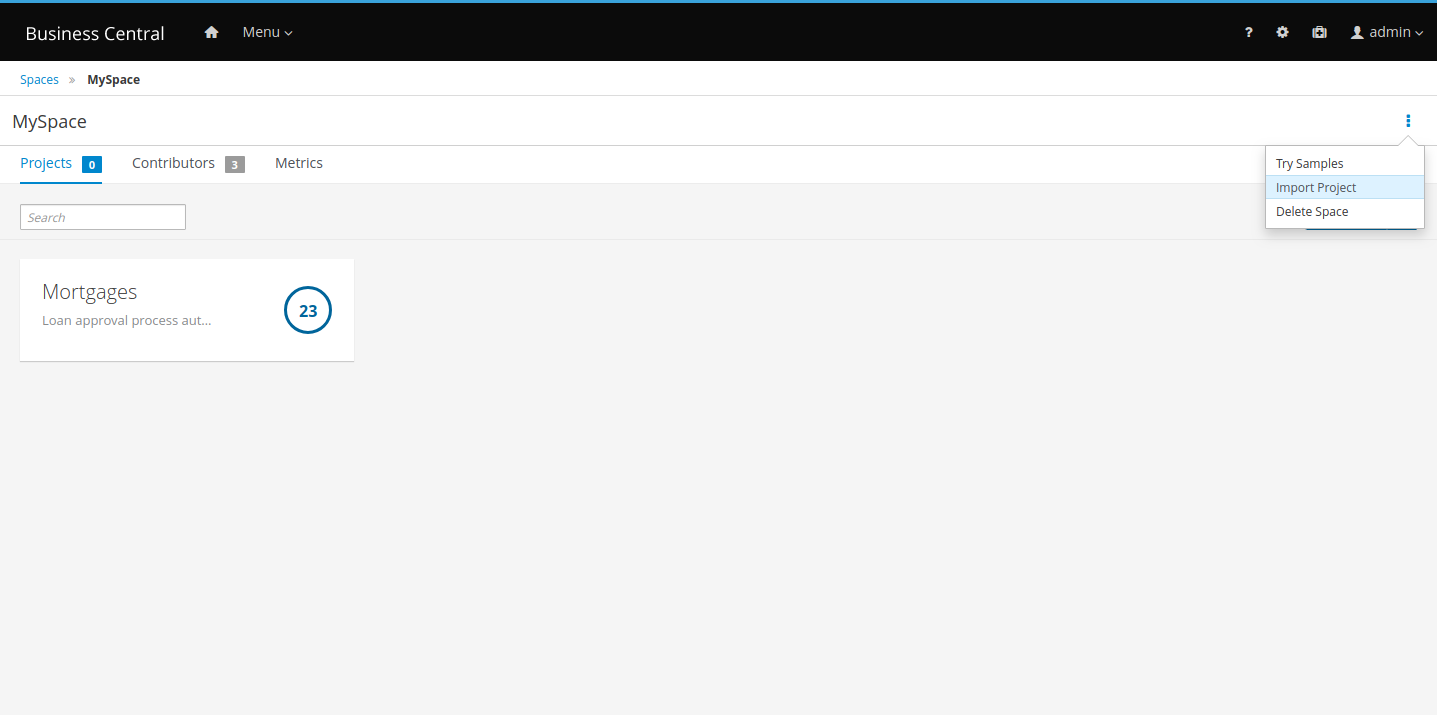

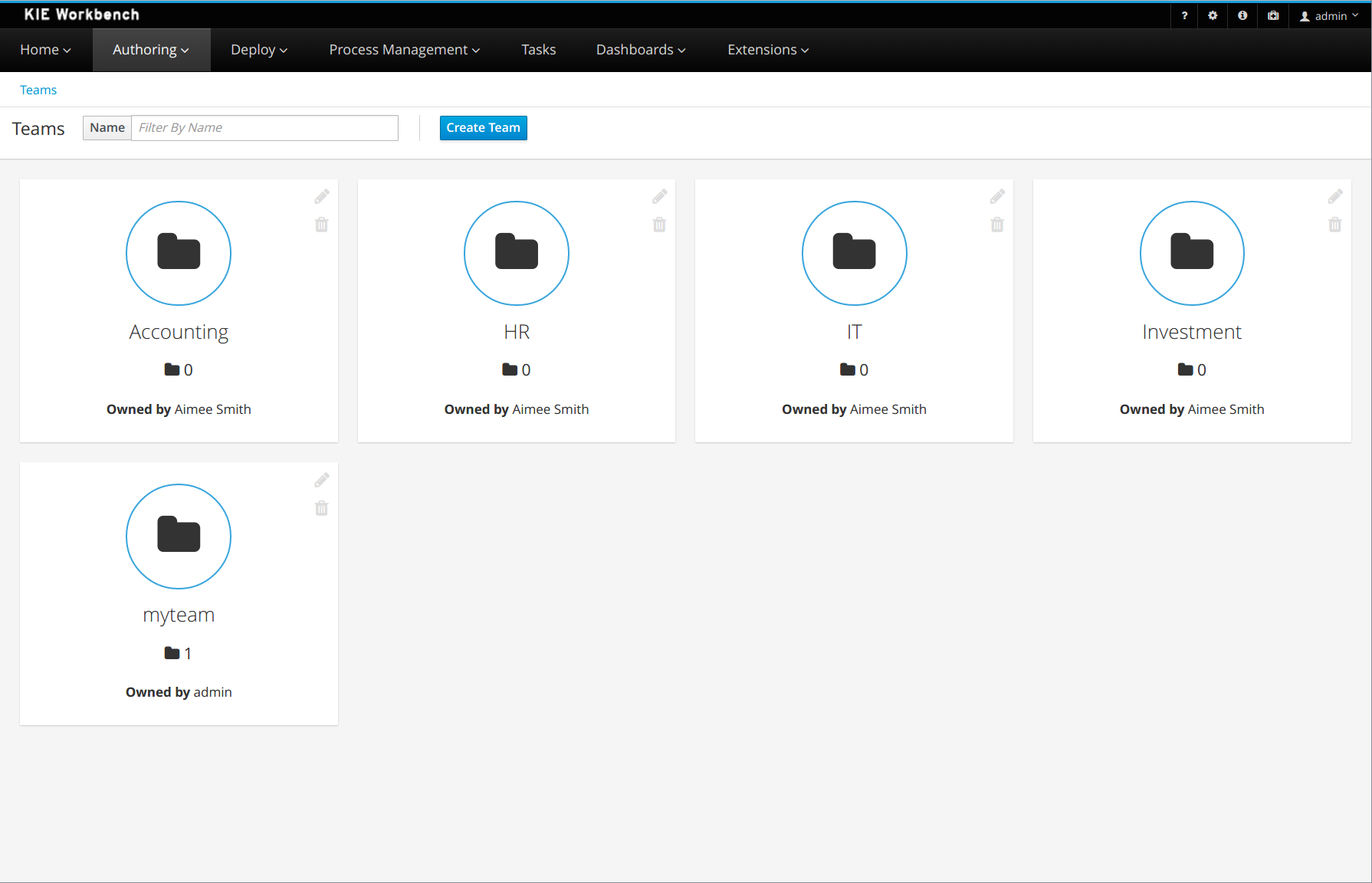

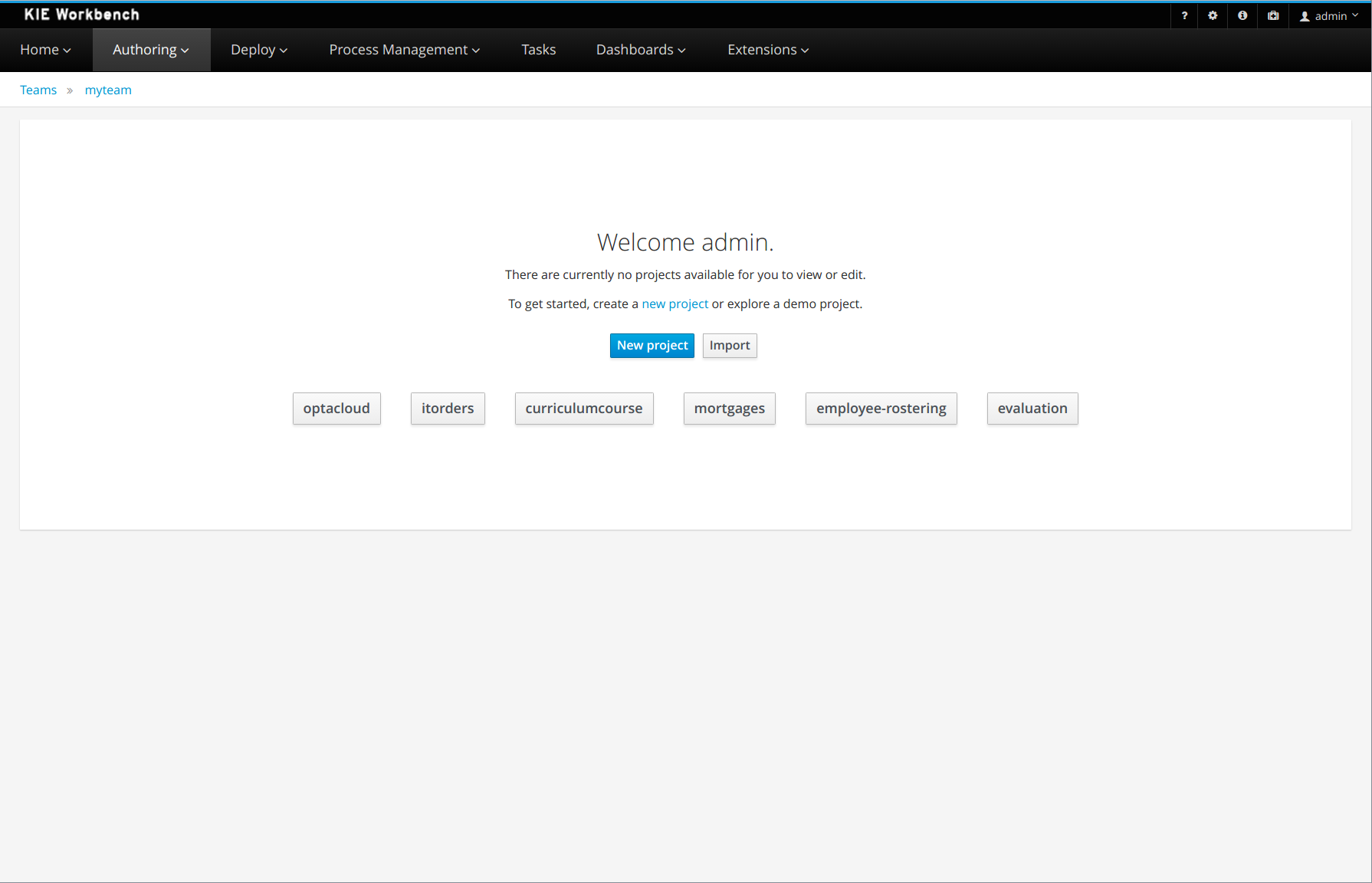

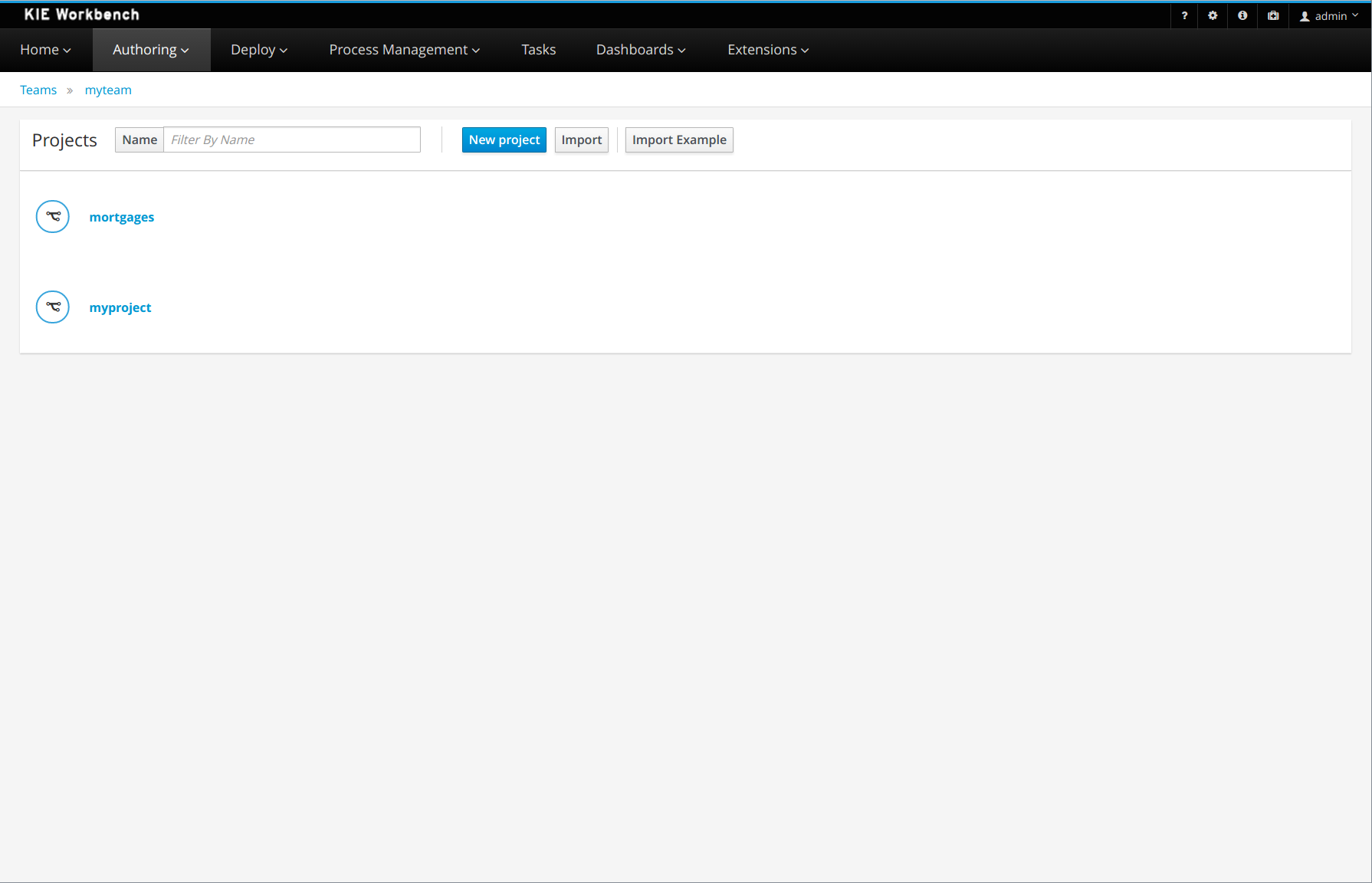

Log in to Business Central and go to Projects

-

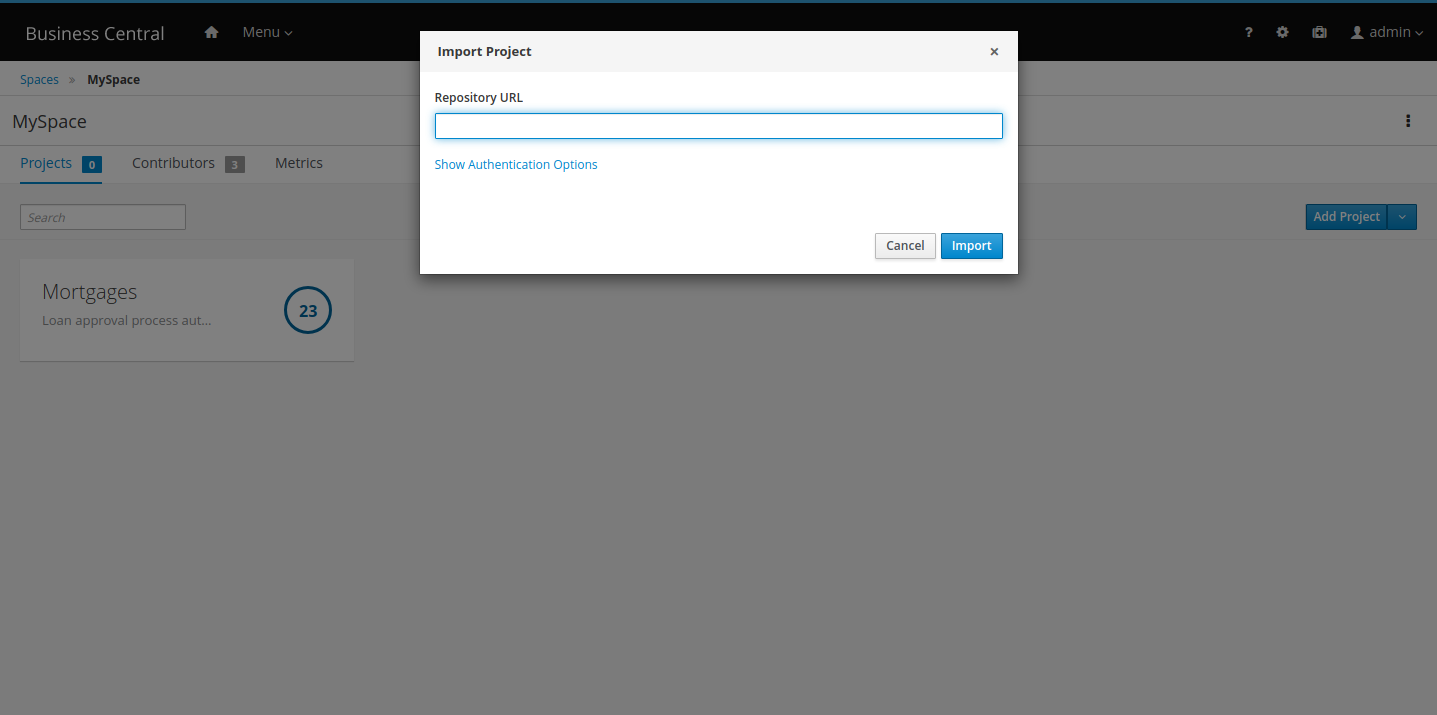

Select Import Project and enter the following URL:

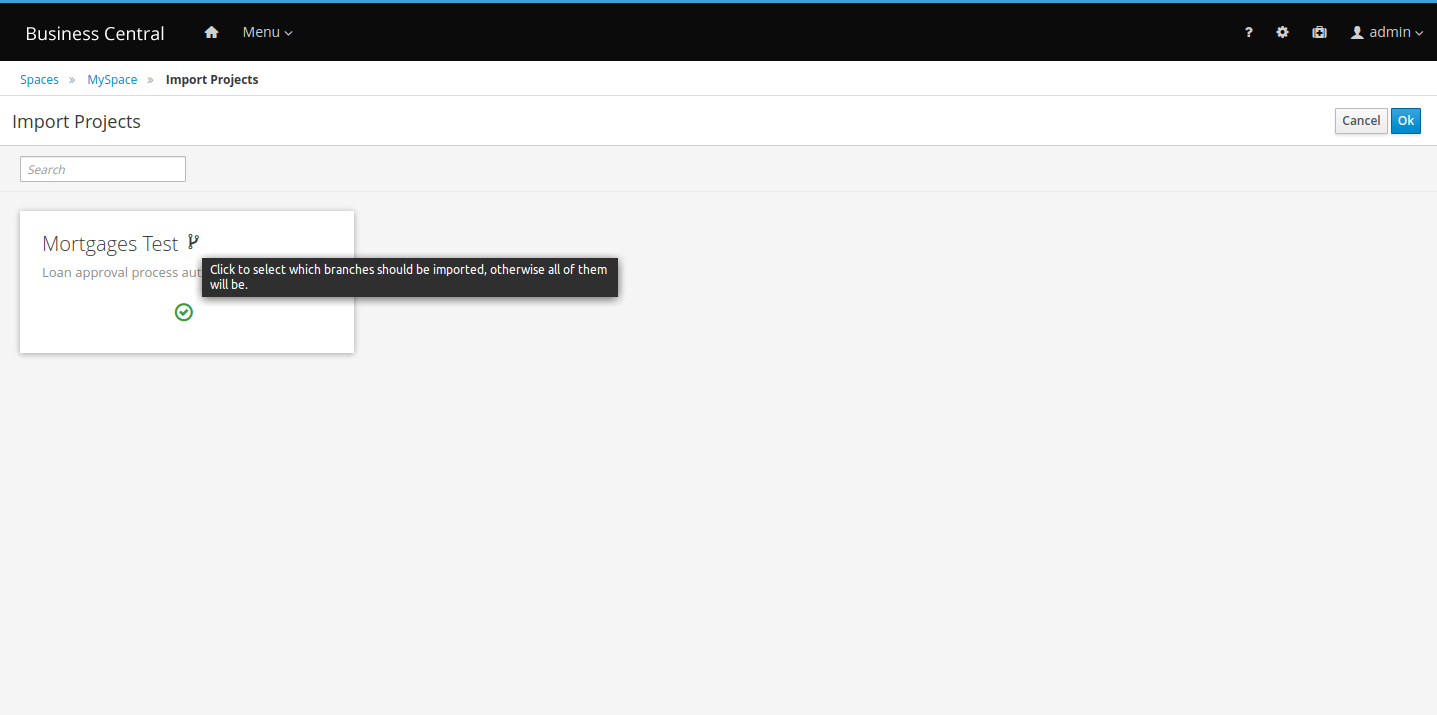

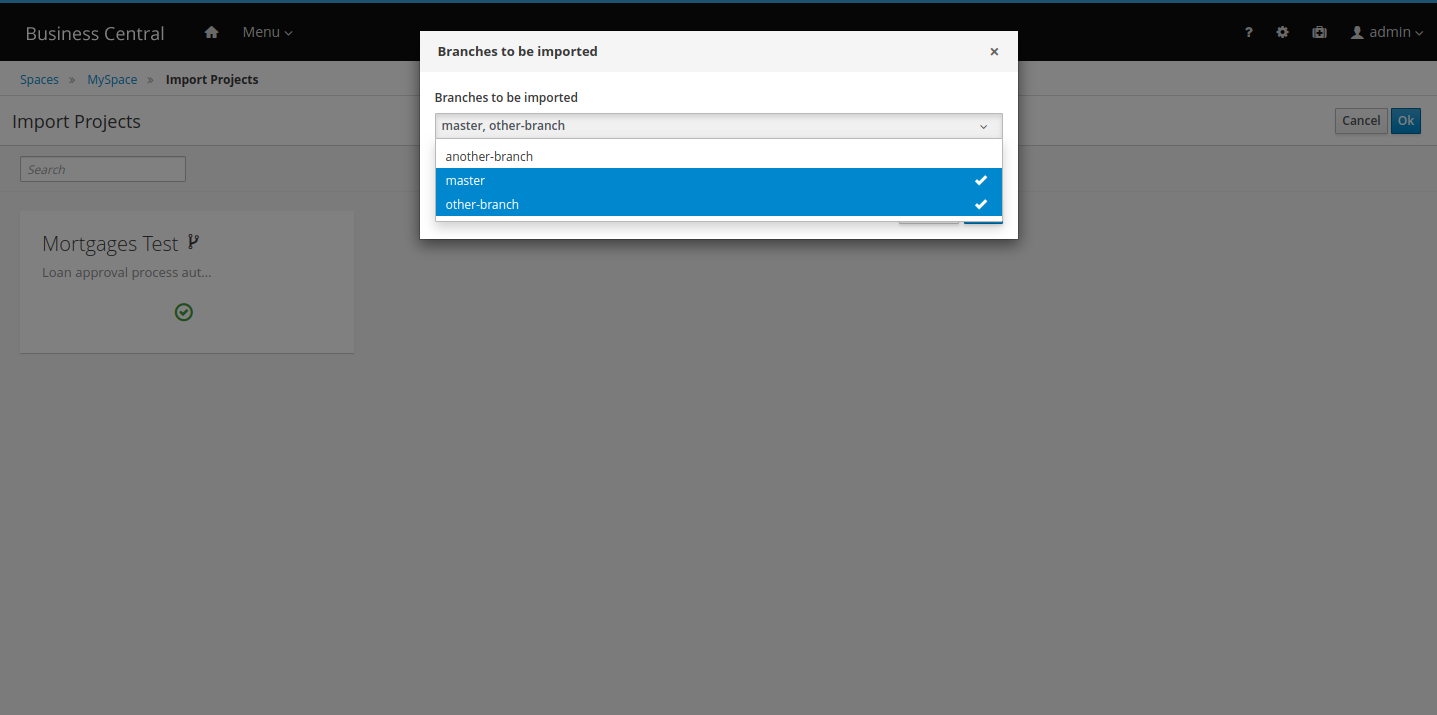

file:///{path to your business application}/{your business application name}-kjar

-

Click Import and confirm the project to be imported.

3.3.3.1. Work on your business assets

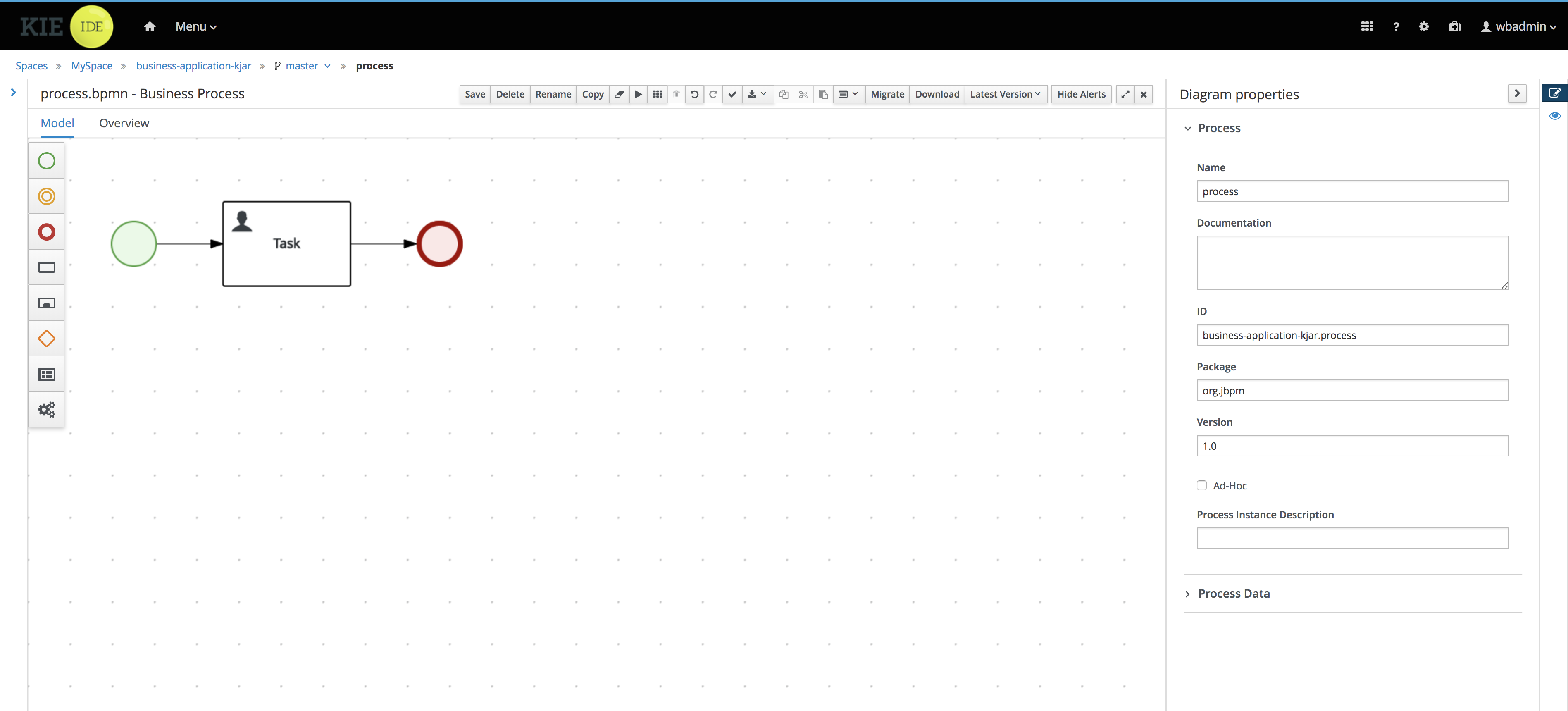

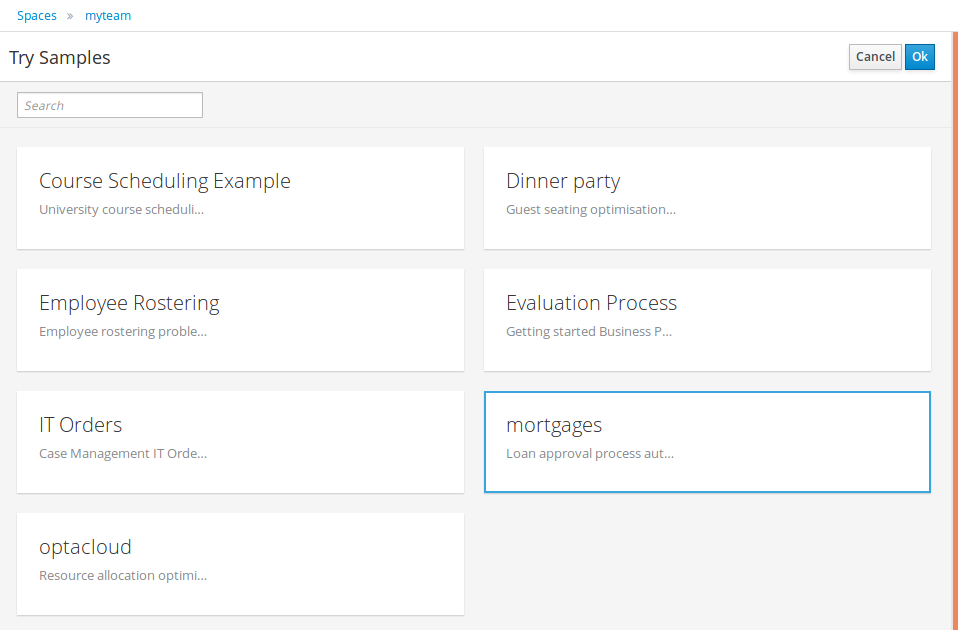

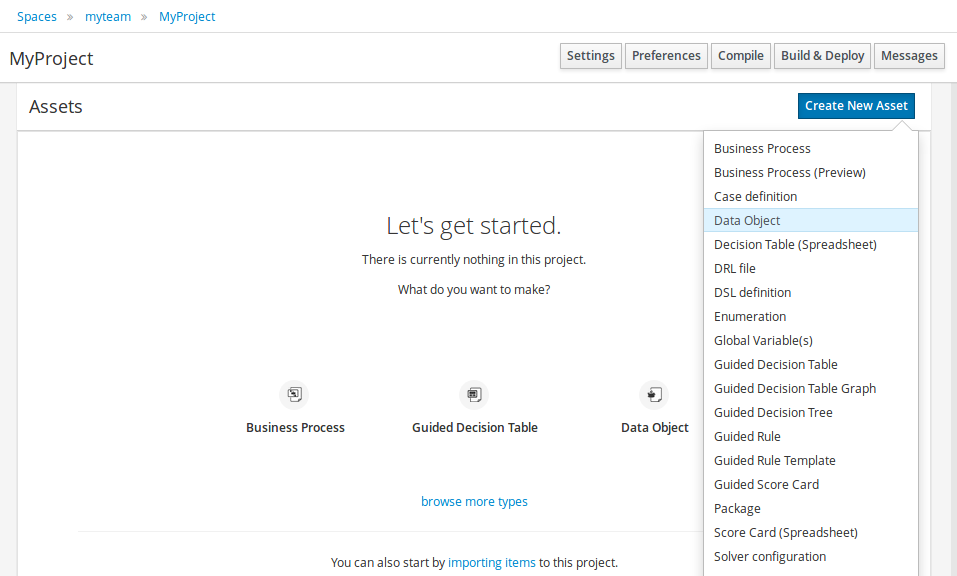

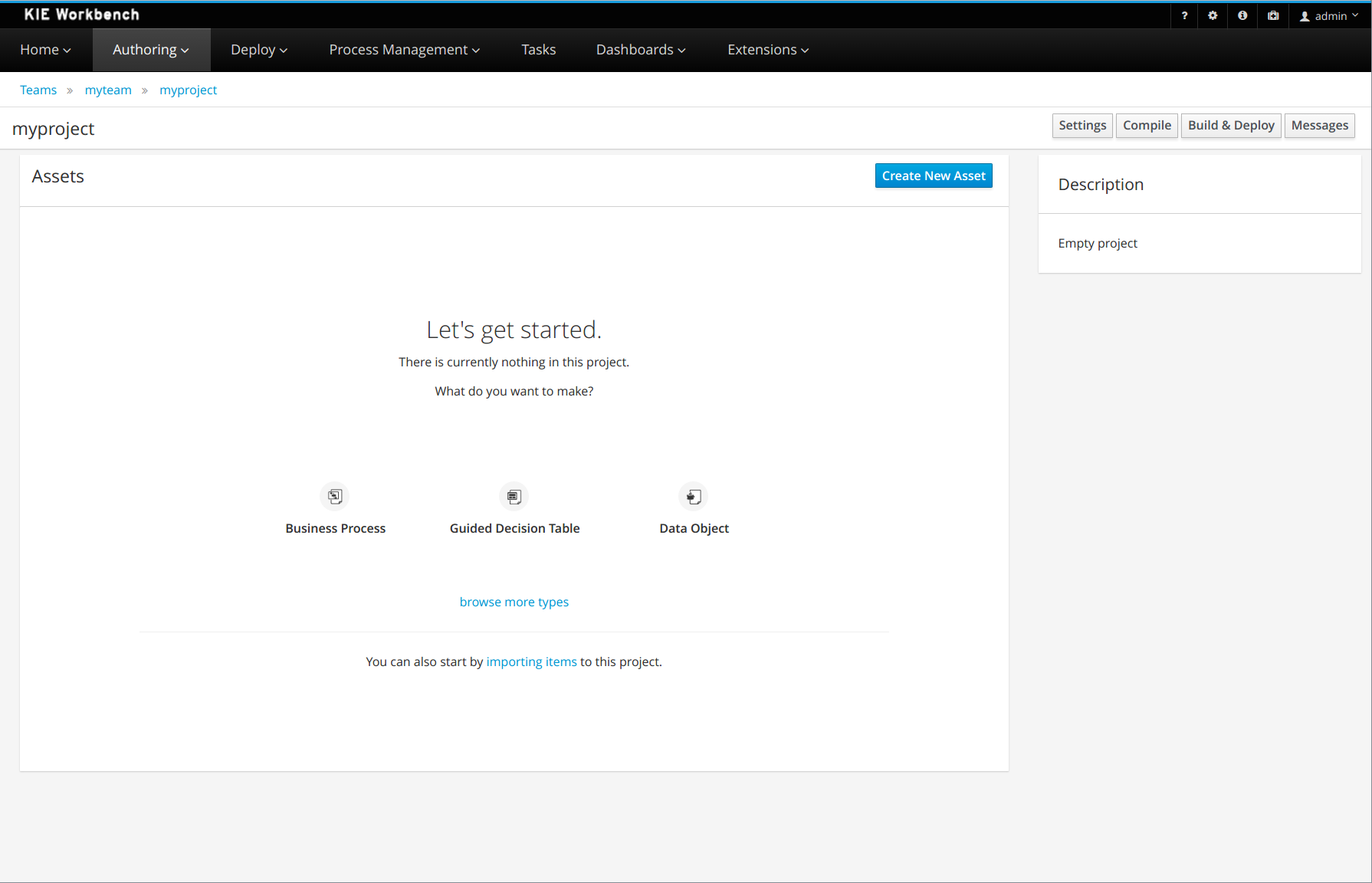

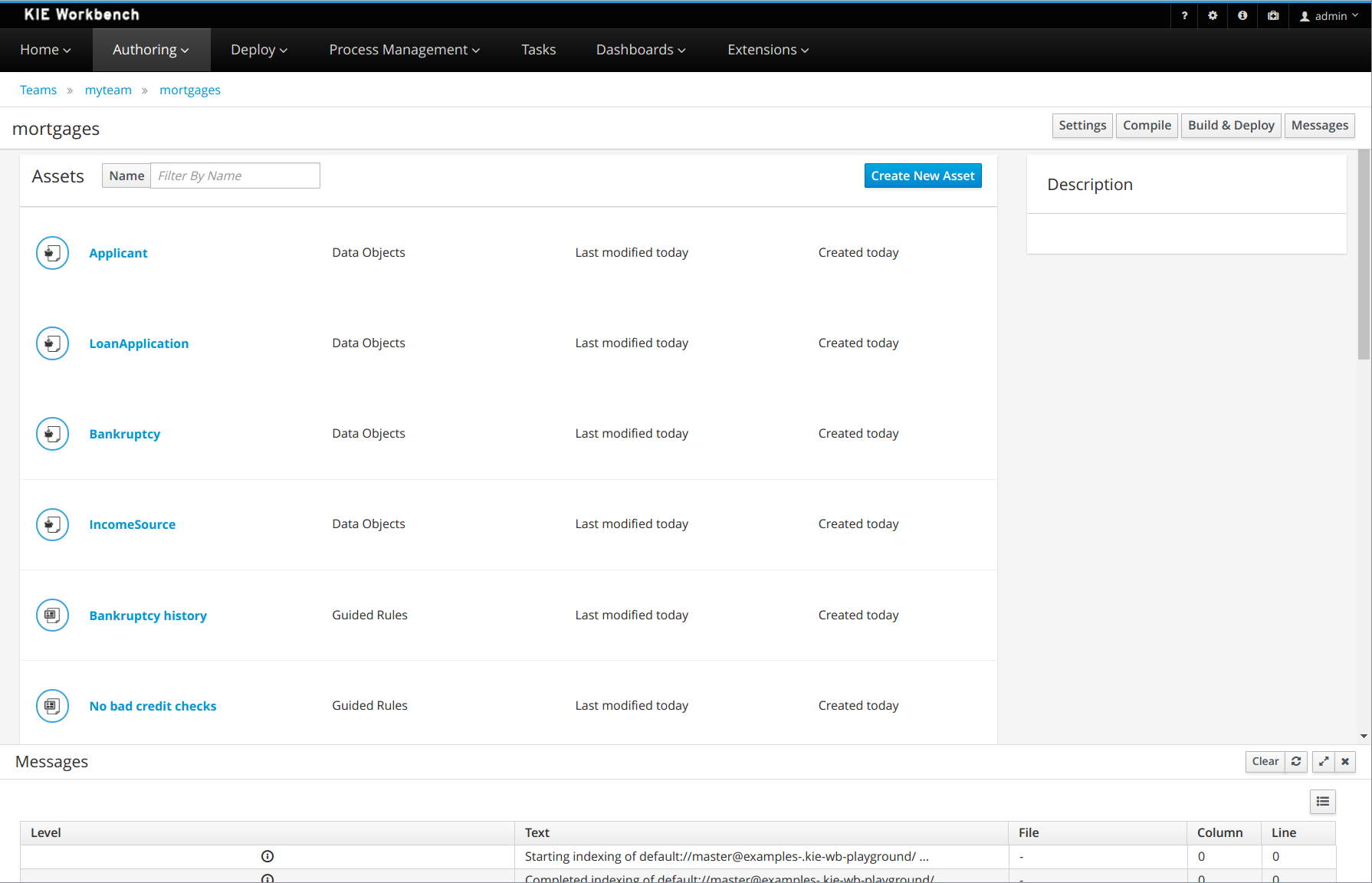

Once the business assets project is imported into Business Central, you can start working on it. Go to the project and add assets such as business processes, rules and decision tables.

3.3.3.2. Launch business application in development mode

To launch your application, go into the service project ({your business application name}-service) and run:

./launch-dev.sh clean install for Linux/Unix

./launch-dev.bat clean install for Windows

After the build, the first line printed should be:

Launching the application in development mode - requires connection to controller (Business Central)

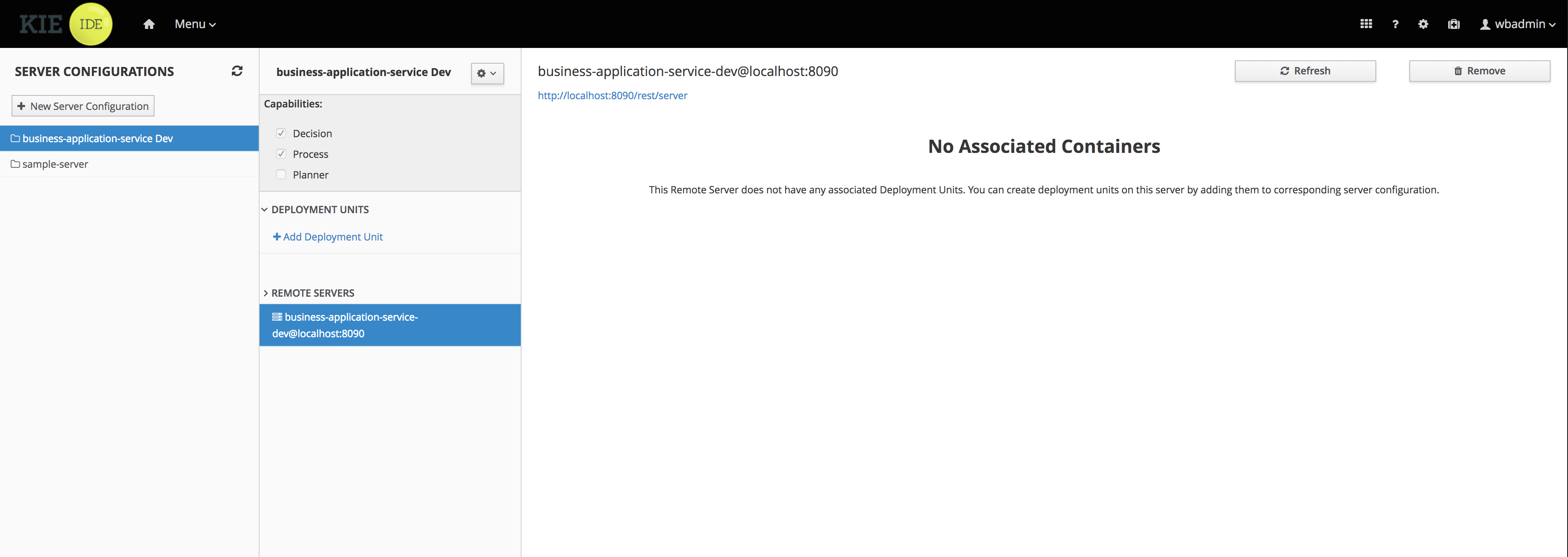

As with standalone mode, after a couple of seconds you should be able to access your business application at http://localhost:8090/

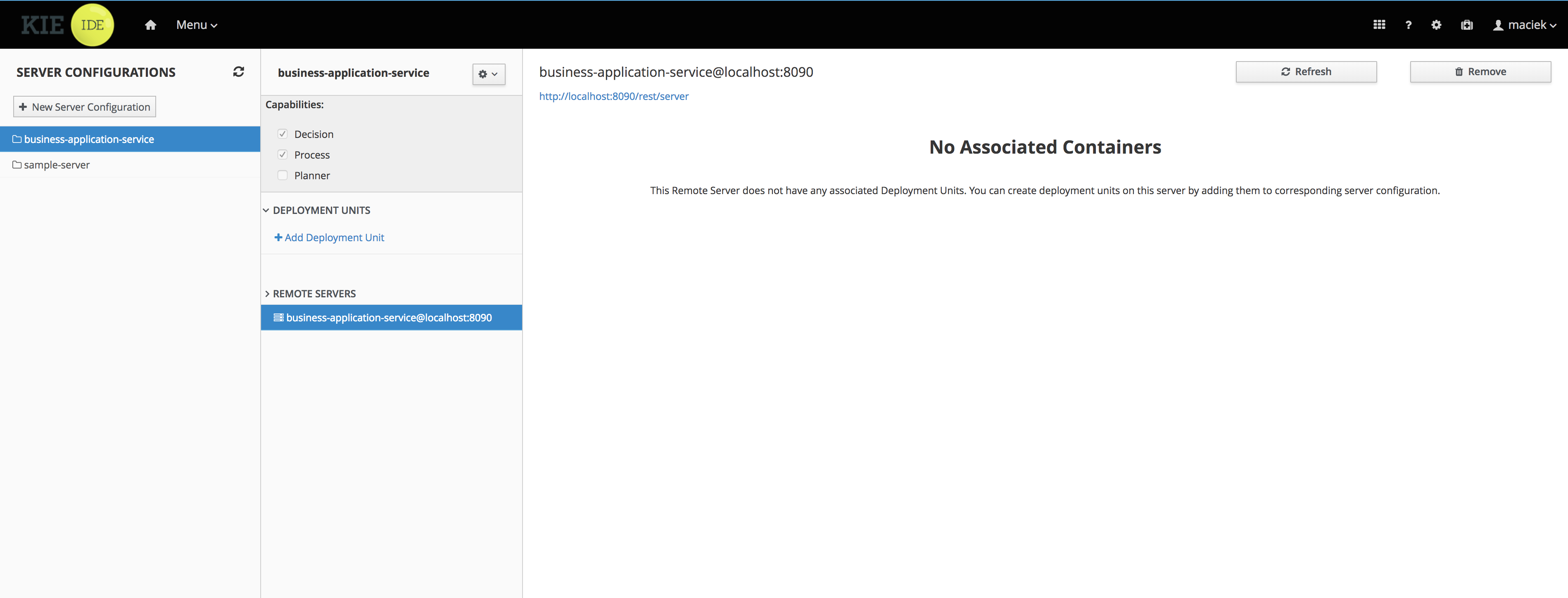

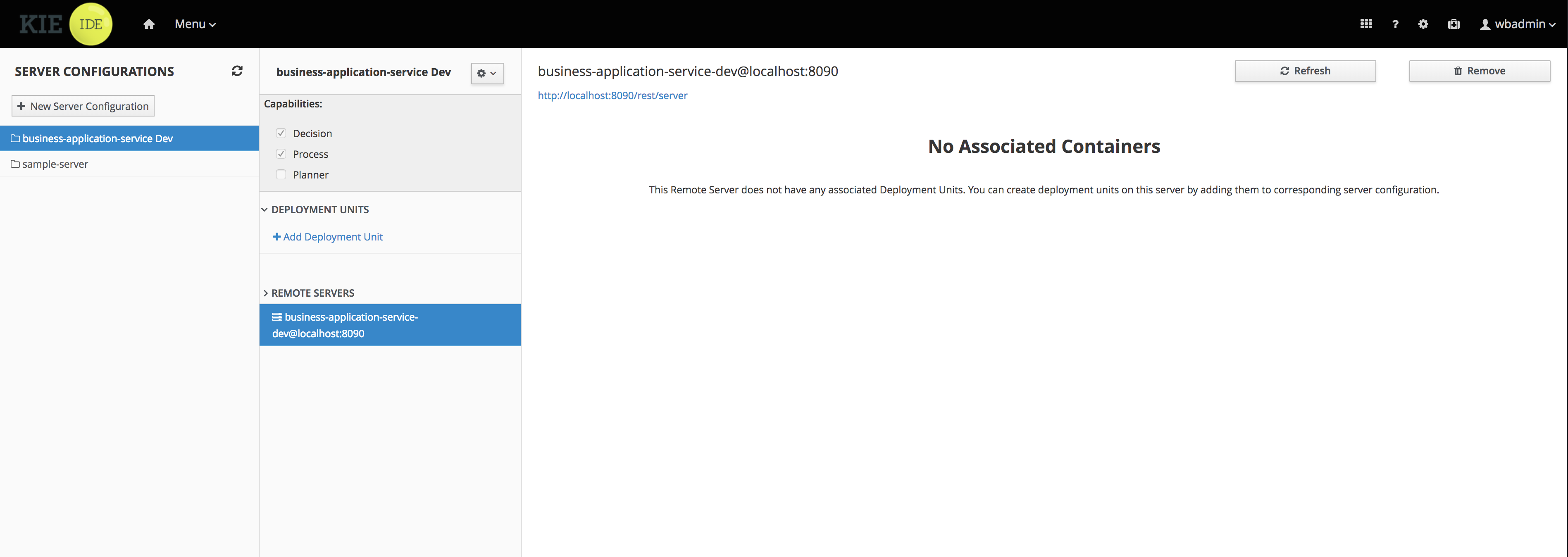

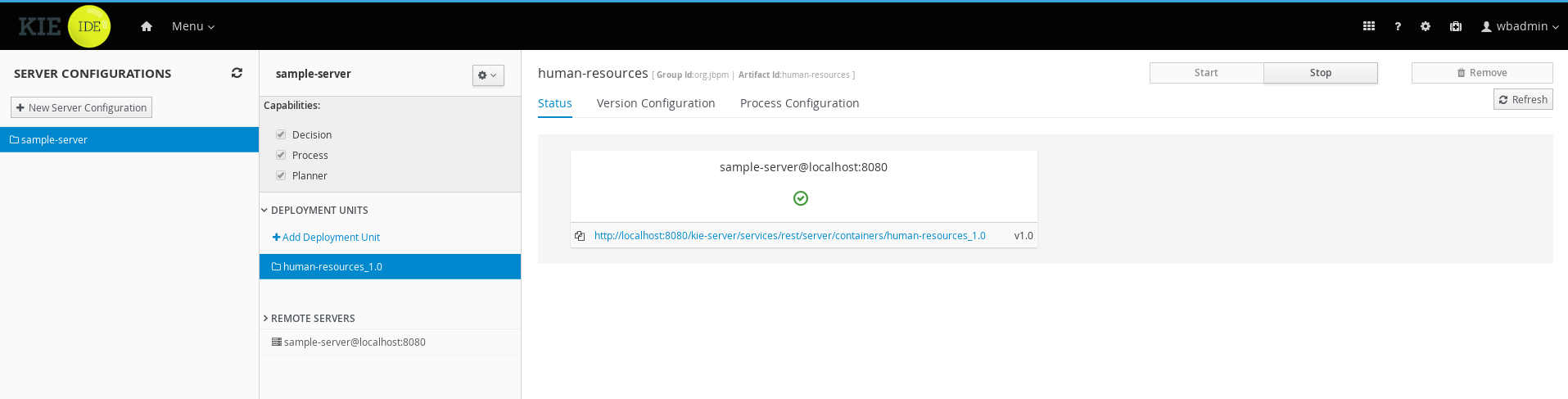

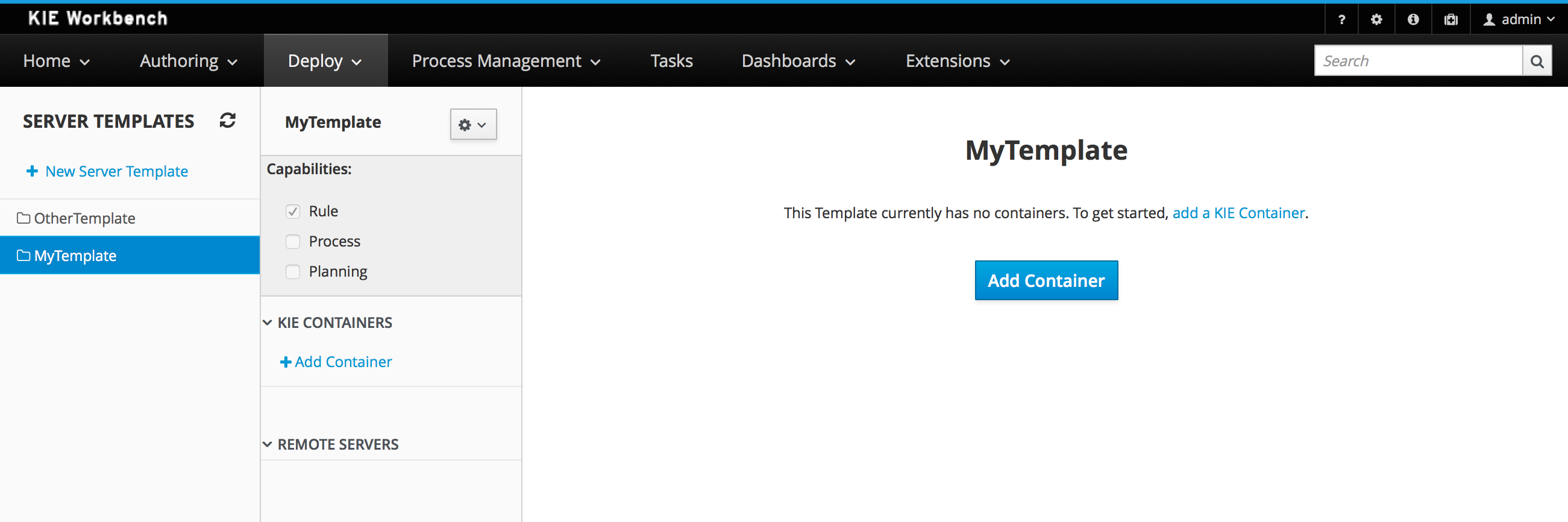

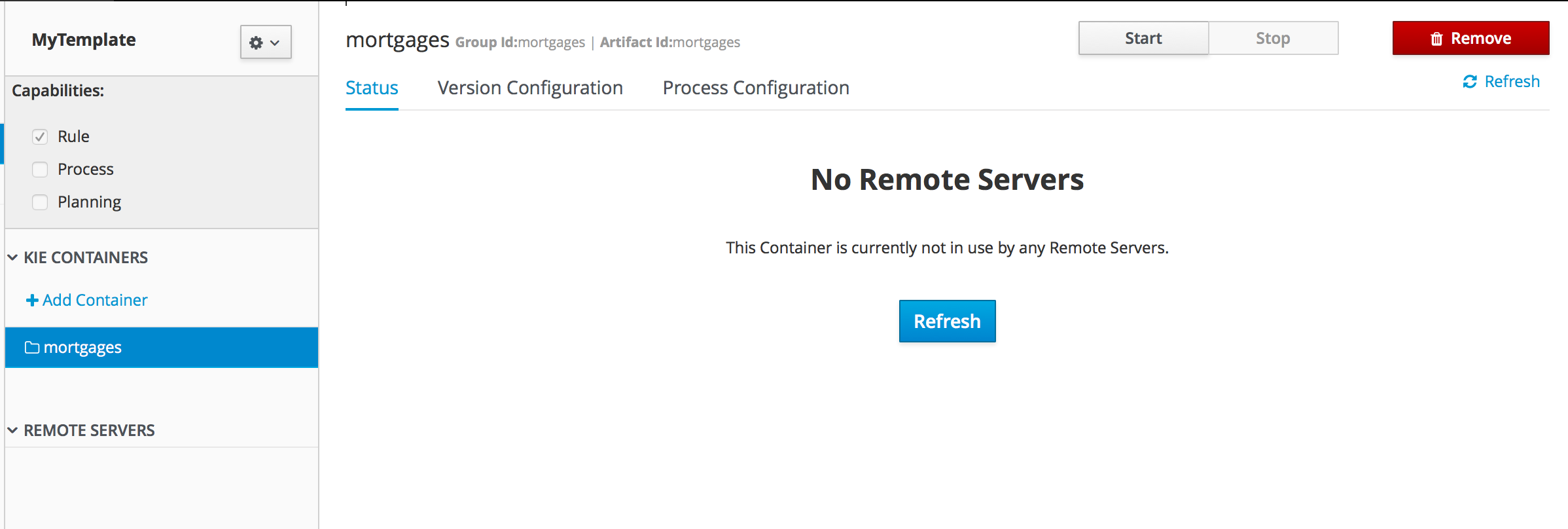

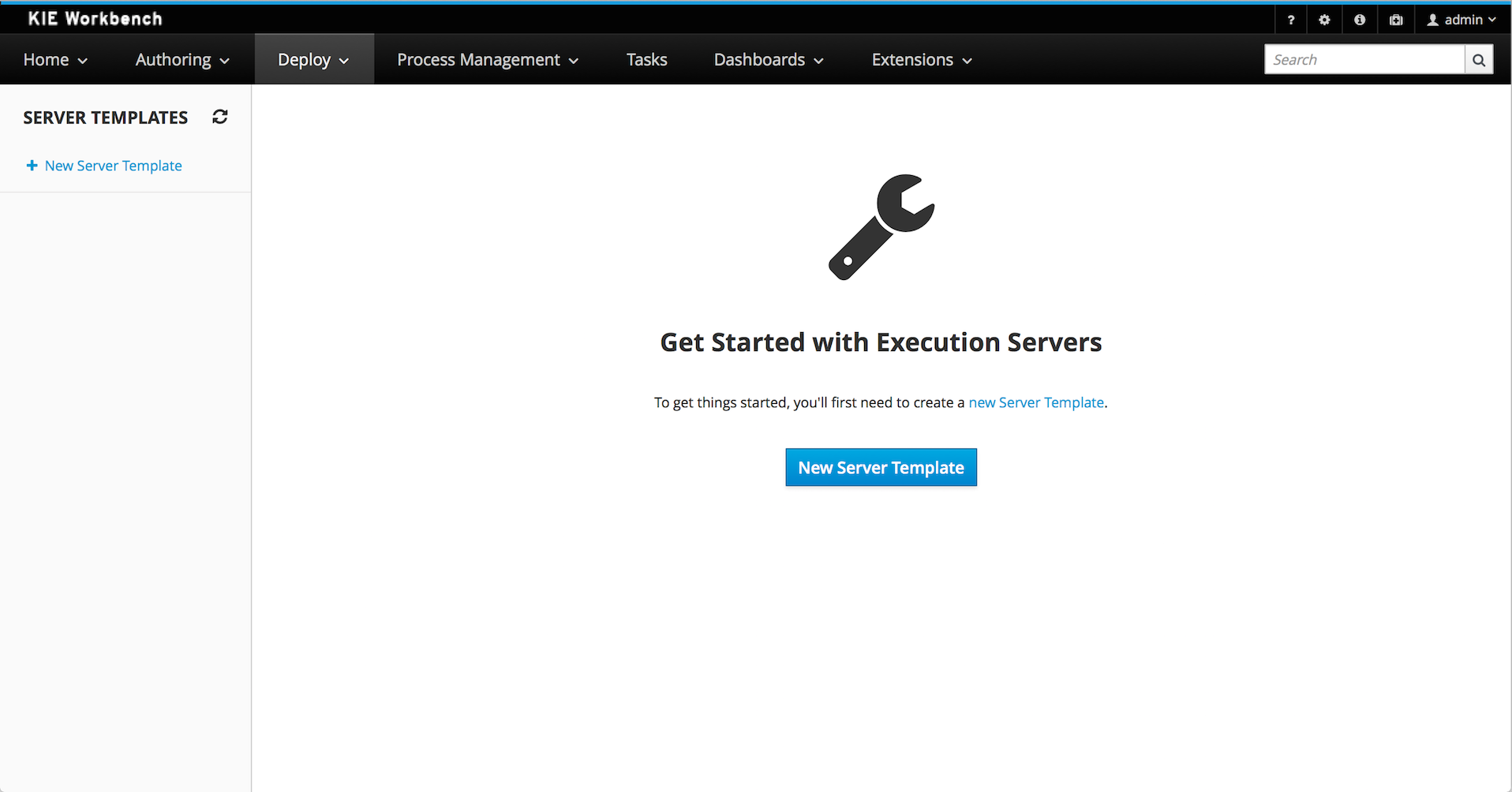

Once the application has started, it should connect to the jBPM controller and become visible in the Servers perspective of Business Central.

3.3.3.3. Deploy business assets project into running business application

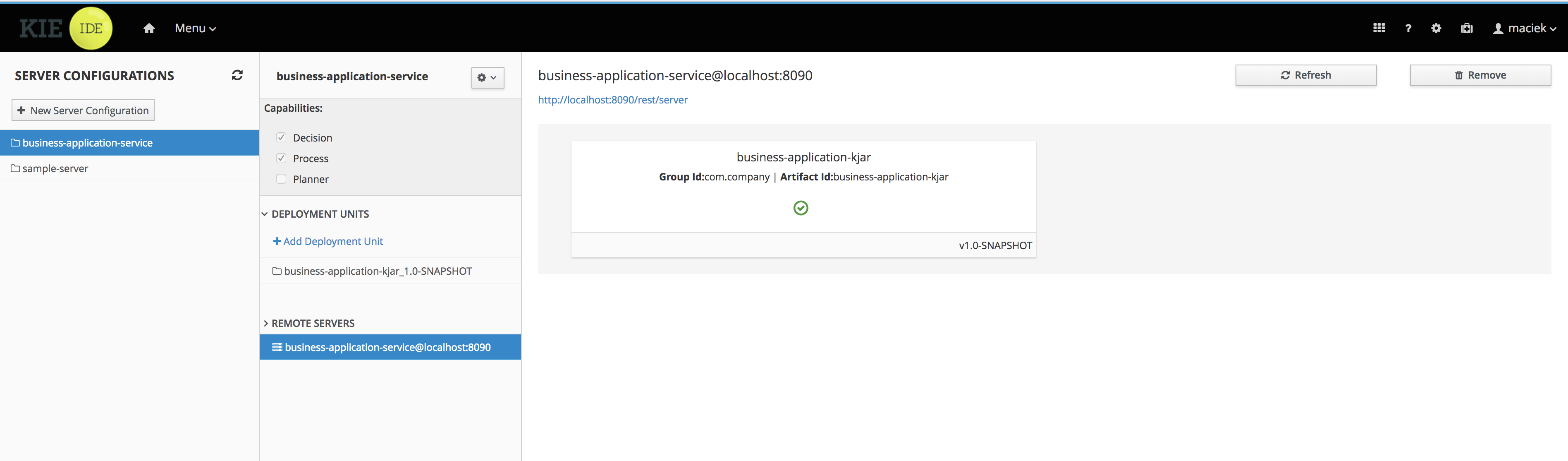

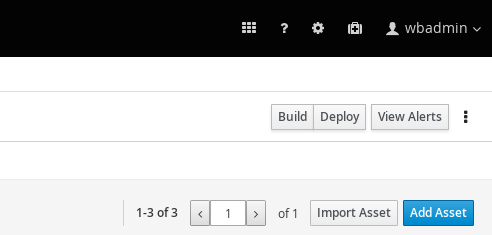

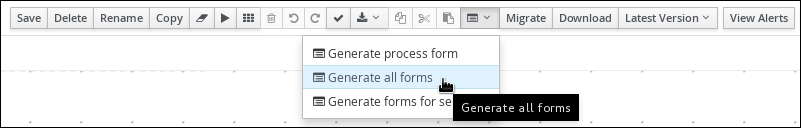

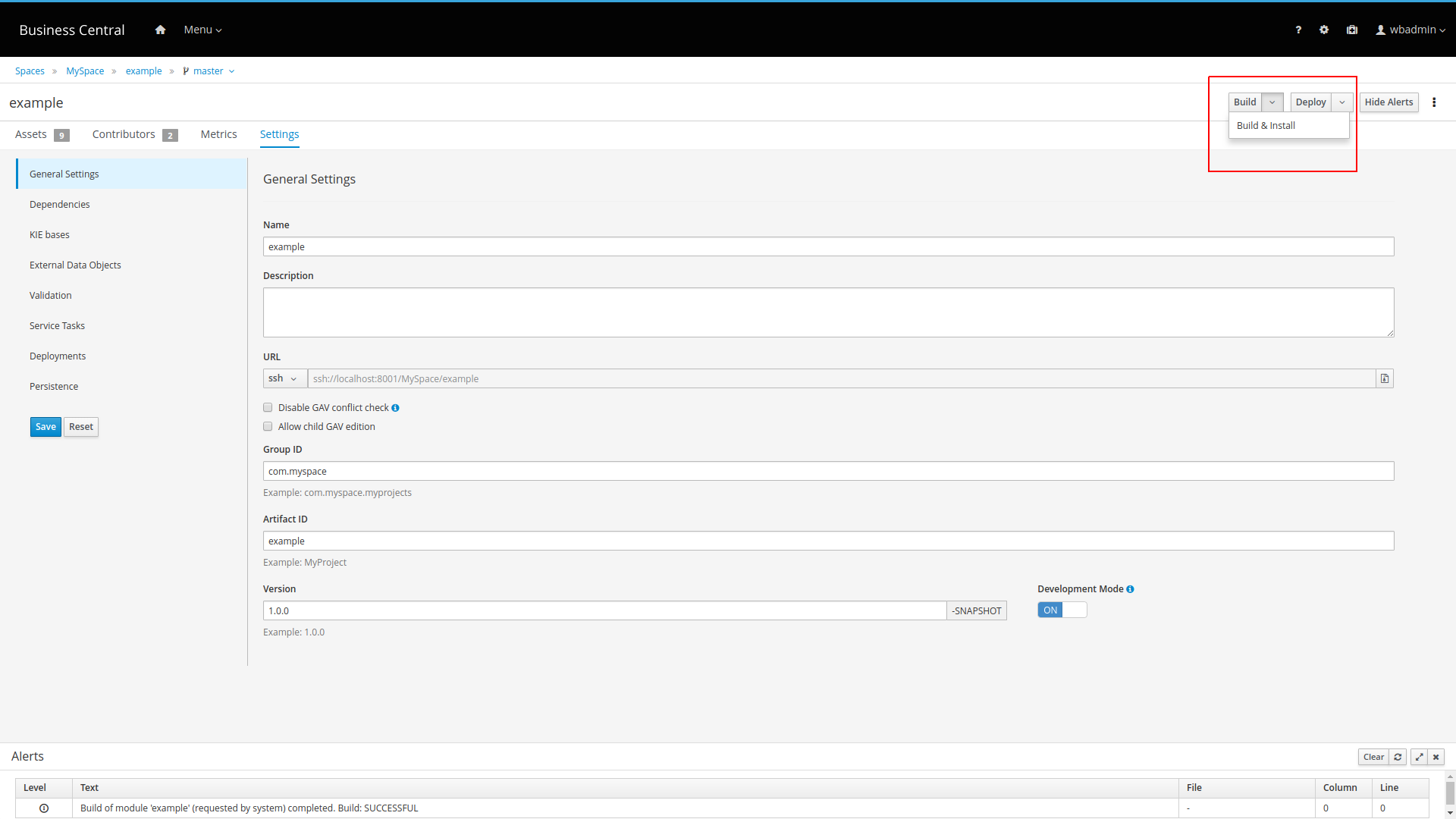

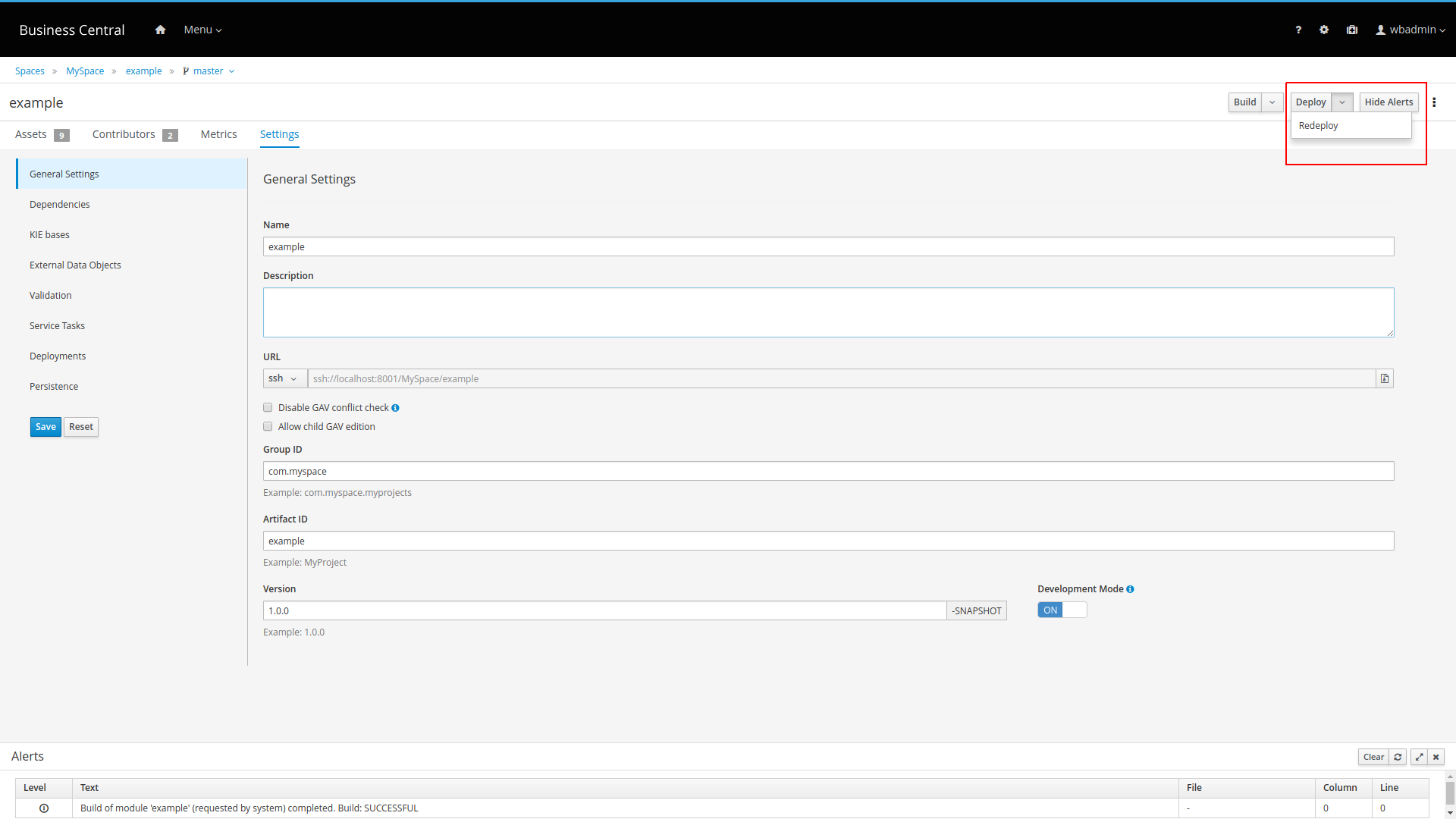

After adding assets to your project in Business Central, you can deploy it to a running server instance.

Click the Deploy button on your project; within a few seconds you should see the project deployed on your business application.

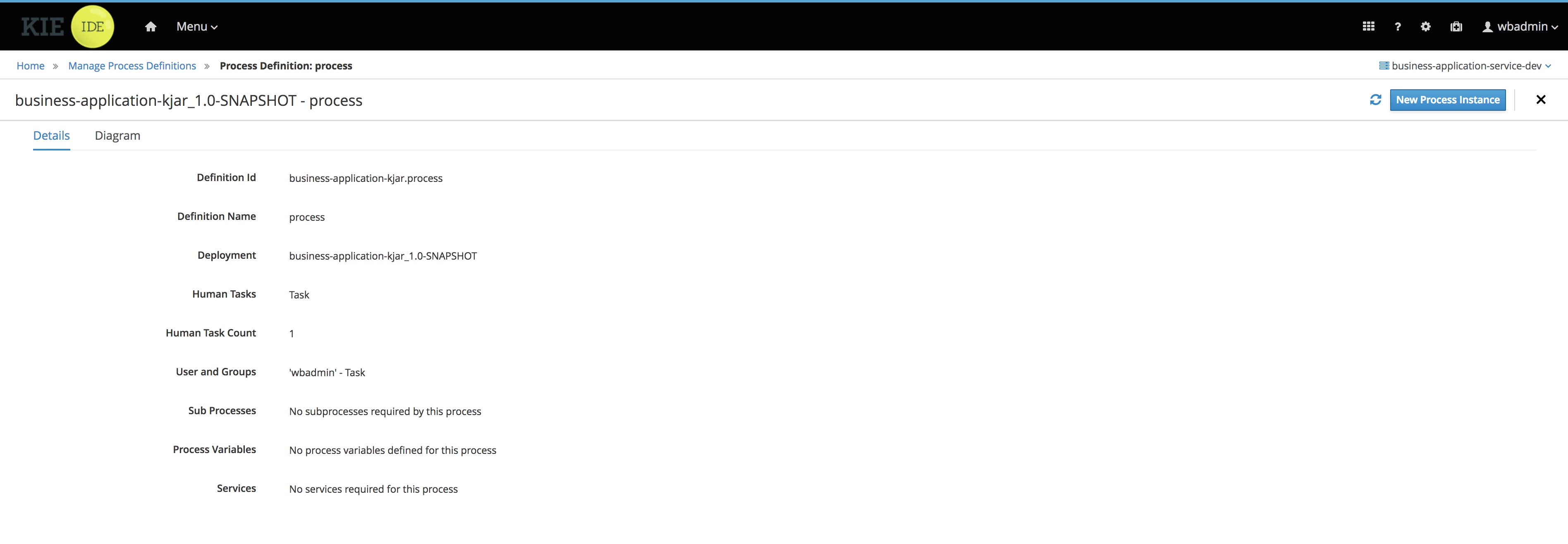

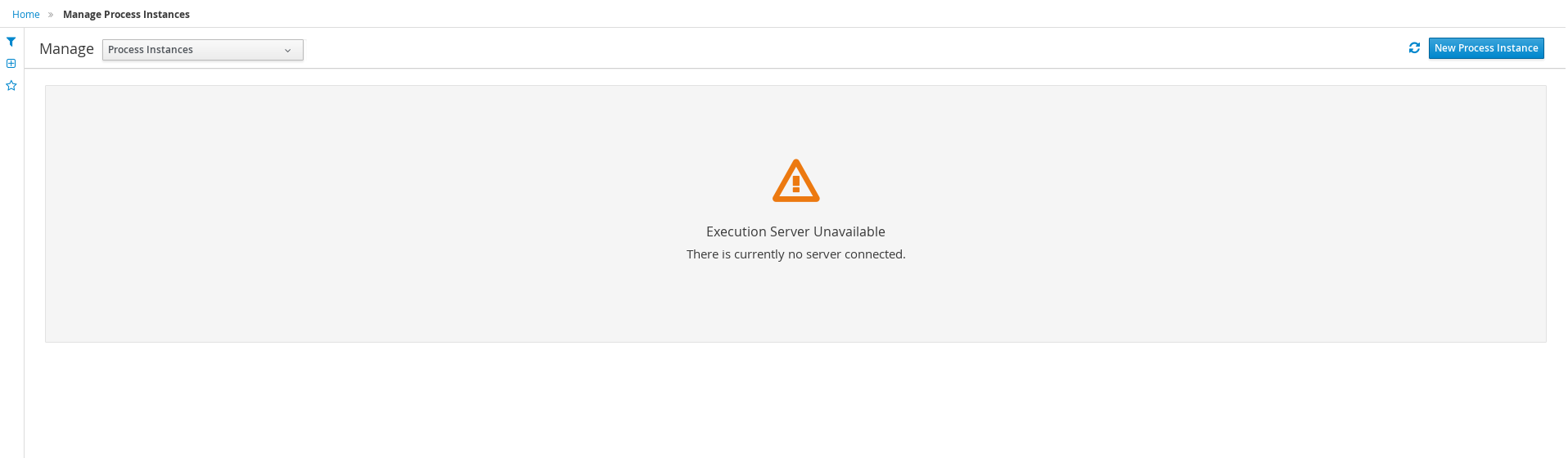

You can use the Process Definitions and Process Instances perspectives of Business Central to interact with your newly deployed business assets, such as processes or user tasks.

3.4. Configure business application

There are several components that can be configured in the business application. Which components are available depends on the capabilities selected when the application was generated.

The entire configuration of the business application (service project) is done in the application.properties file, the standard way to configure SpringBoot applications. It is located in the src/main/resources directory of the {your business application}-service folder.

3.4.1. Configuring core components

3.4.1.1. Configuring server

One of the most important configuration areas is the server itself: the host, port and path for the REST endpoints.

# used for server binding

server.address=localhost

server.port=8090

# used to define path for REST apis

cxf.path=/rest

3.4.1.2. Configure authentication and authorization

The business application is secured by default: all REST endpoints (URL pattern /rest/*) are protected.

Authentication is enabled for a single test user named user with password user. Additionally, there is a default kieserver user that makes it easy to connect to Business Central in development mode.

Both authentication and authorization are based on Spring Security and can be configured in DefaultWebSecurityConfig.java, which is included in the generated service project (src/main/java/com/company/service/DefaultWebSecurityConfig.java):

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Configuration;
import org.springframework.security.config.annotation.authentication.builders.AuthenticationManagerBuilder;
import org.springframework.security.config.annotation.web.builders.HttpSecurity;
import org.springframework.security.config.annotation.web.configuration.EnableWebSecurity;
import org.springframework.security.config.annotation.web.configuration.WebSecurityConfigurerAdapter;

@Configuration("kieServerSecurity")
@EnableWebSecurity
public class DefaultWebSecurityConfig extends WebSecurityConfigurerAdapter {

    @Override
    protected void configure(HttpSecurity http) throws Exception {
        http
            .csrf().disable()                            // REST clients send credentials with every request
            .authorizeRequests()
                .antMatchers("/rest/**").authenticated() // "/**" also covers nested paths such as /rest/server/containers
            .and()
            .httpBasic();                                // HTTP Basic authentication
    }

    @Autowired
    public void configureGlobal(AuthenticationManagerBuilder auth) throws Exception {
        // test user for calling the REST API
        auth.inMemoryAuthentication().withUser("user").password("user").roles("kie-server");
        // default user that simplifies connecting with Business Central in development mode
        auth.inMemoryAuthentication().withUser("kieserver").password("kieserver1!").roles("kie-server");
    }
}

| This security configuration is just a starting point and should be changed for any business application going into a production-like setup. |
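To call a protected endpoint from a client, the request has to carry HTTP Basic credentials. Below is a minimal sketch using the JDK's java.net.http.HttpClient (Java 11+), assuming the default server.address, server.port and cxf.path values shown earlier and the test user user/user; the class name is hypothetical. It targets the KIE Server base URL (http://localhost:8090/rest/server), which answers a GET with basic server information.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Sketch: calling a secured REST endpoint with HTTP Basic authentication.
// Assumes server.address=localhost, server.port=8090, cxf.path=/rest
// and the default test user "user" with password "user".
class KieServerClientSketch {

    // Base URL of the KIE Server REST API (same value as kieserver.location by default)
    static final String BASE_URL = "http://localhost:8090/rest/server";

    // Builds the Authorization header value for HTTP Basic authentication
    static String basicAuthHeader(String user, String password) {
        String credentials = user + ":" + password;
        return "Basic " + Base64.getEncoder()
                .encodeToString(credentials.getBytes(StandardCharsets.UTF_8));
    }

    // Builds a GET request for the server information resource at BASE_URL
    static HttpRequest serverInfoRequest(String user, String password) {
        return HttpRequest.newBuilder(URI.create(BASE_URL))
                .header("Authorization", basicAuthHeader(user, password))
                .header("Accept", "application/json")
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Requires the business application to be running
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(serverInfoRequest("user", "user"), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

Without the Authorization header, the server should reject the same request with 401 Unauthorized.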

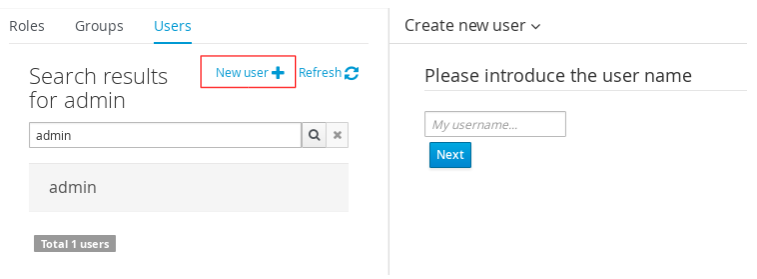

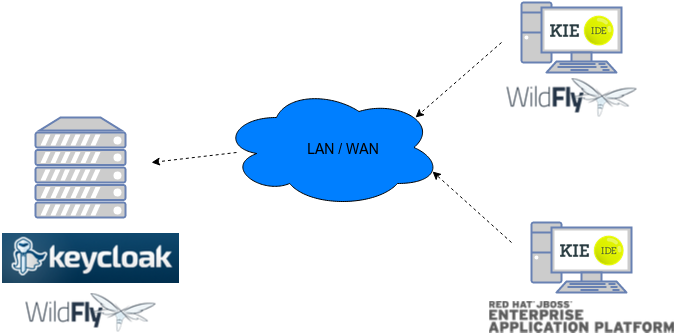

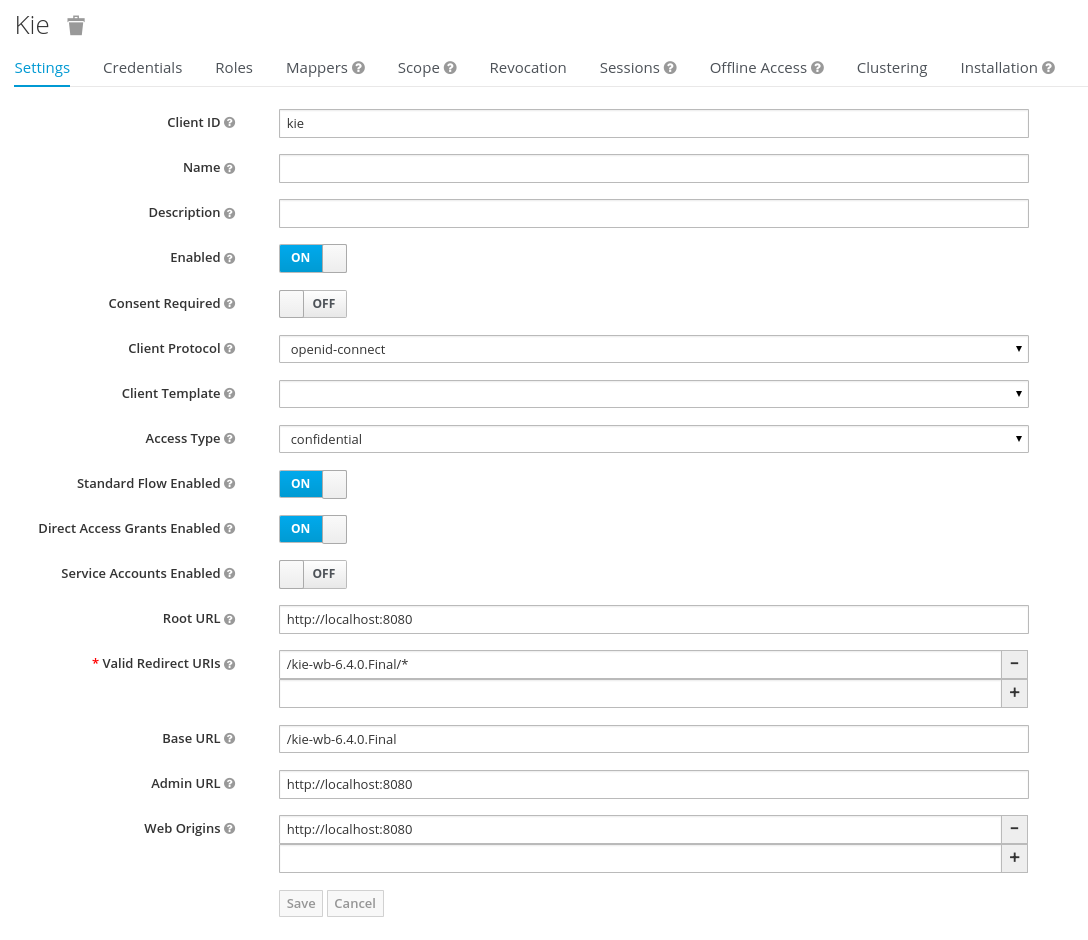

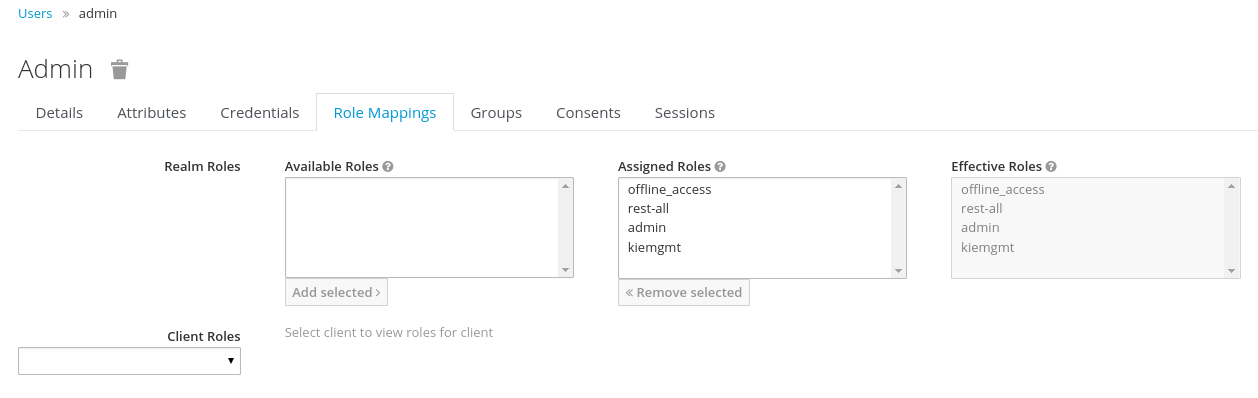

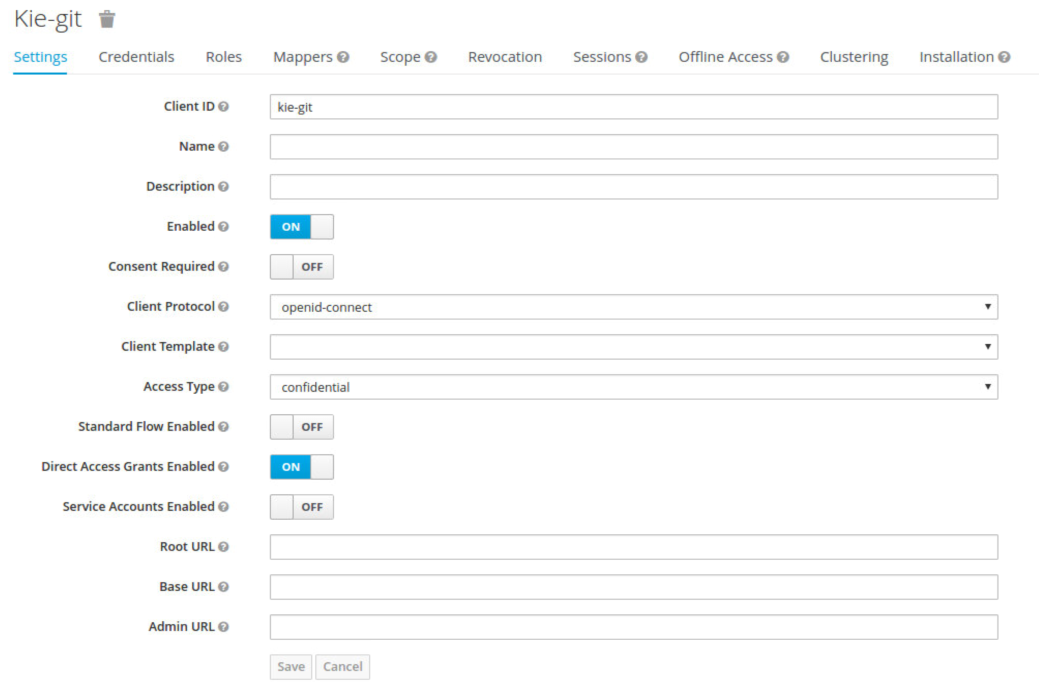

Use Keycloak as authentication provider

Configuring a business application to use Keycloak for authentication and authorisation requires a few steps:

-

Install Keycloak - follow official documentation at keycloak.org

-

Configure Keycloak once started

-

Use default master realm or create new one

-

Create client named springboot-app and set its AccessType to public

-

Set Valid redirect URI and Web Origin according to your local setup - with default setup they should be set to

-

Valid Redirect URIs: http://localhost:8090/*

-

Web Origins: http://localhost:8090

-

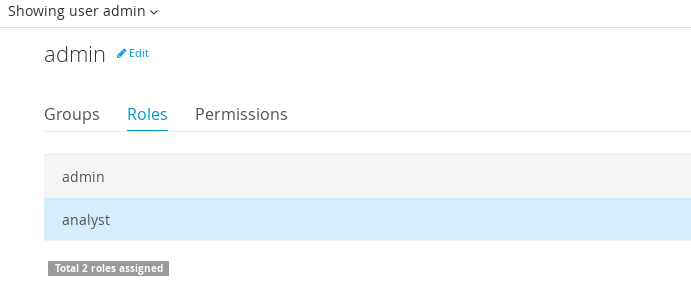

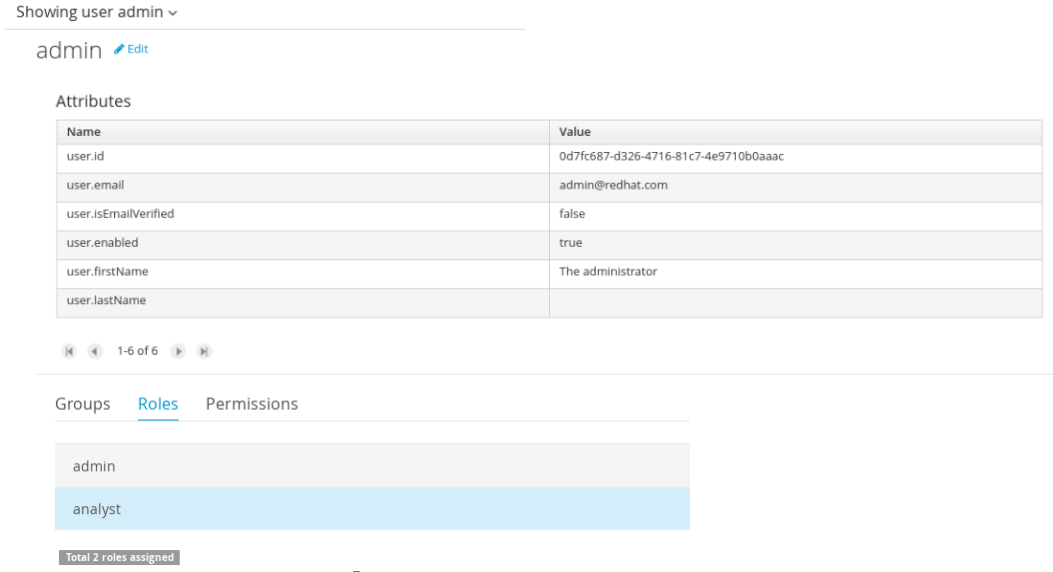

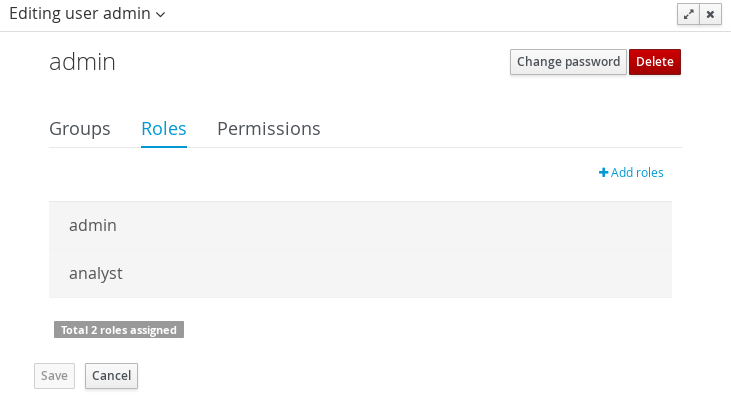

Create realm roles that are used in the application

-

Create users used in the application and assign roles to them

-

-

Configure dependencies in service project pom.xml

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.keycloak.bom</groupId>

<artifactId>keycloak-adapter-bom</artifactId>

<version>${version.org.keycloak}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

....

<dependency>

<groupId>org.keycloak</groupId>

<artifactId>keycloak-spring-boot-starter</artifactId>

</dependency>

The business application includes a jBPM (KIE) execution server that can be configured so that it is easier to identify:

kieserver.serverId=business-application-service

kieserver.serverName=business-application-service

kieserver.location=http://localhost:8090/rest/server

kieserver.controllers=http://localhost:8080/business-central/rest/controller

-

Configure application.properties:

# keycloak security setup

keycloak.auth-server-url=http://localhost:8100/auth

keycloak.realm=master

keycloak.resource=springboot-app

keycloak.public-client=true

keycloak.principal-attribute=preferred_username

keycloak.enable-basic-auth=true

-

Modify DefaultWebSecurityConfig.java to ensure that Spring Security works correctly with Keycloak:

import org.keycloak.adapters.KeycloakConfigResolver;
import org.keycloak.adapters.springboot.KeycloakSpringBootConfigResolver;
import org.keycloak.adapters.springsecurity.authentication.KeycloakAuthenticationProvider;
import org.keycloak.adapters.springsecurity.config.KeycloakWebSecurityConfigurerAdapter;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.security.config.annotation.authentication.builders.AuthenticationManagerBuilder;
import org.springframework.security.config.annotation.web.builders.HttpSecurity;
import org.springframework.security.config.annotation.web.configuration.EnableWebSecurity;
import org.springframework.security.core.authority.mapping.SimpleAuthorityMapper;
import org.springframework.security.core.session.SessionRegistryImpl;
import org.springframework.security.web.authentication.session.RegisterSessionAuthenticationStrategy;
import org.springframework.security.web.authentication.session.SessionAuthenticationStrategy;

@Configuration("kieServerSecurity")
@EnableWebSecurity
public class DefaultWebSecurityConfig extends KeycloakWebSecurityConfigurerAdapter {

    @Override
    protected void configure(HttpSecurity http) throws Exception {
        super.configure(http); // registers the Keycloak filters
        http
            .csrf().disable()
            .authorizeRequests()
                .anyRequest().authenticated()
            .and()
            .httpBasic();
    }

    @Autowired
    public void configureGlobal(AuthenticationManagerBuilder auth) throws Exception {
        KeycloakAuthenticationProvider keycloakAuthenticationProvider = keycloakAuthenticationProvider();
        // use Keycloak role names as authorities as-is, without the default "ROLE_" prefix
        SimpleAuthorityMapper mapper = new SimpleAuthorityMapper();
        mapper.setPrefix("");
        keycloakAuthenticationProvider.setGrantedAuthoritiesMapper(mapper);
        auth.authenticationProvider(keycloakAuthenticationProvider);
    }

    // read the keycloak.* settings from application.properties instead of keycloak.json
    @Bean
    public KeycloakConfigResolver keycloakConfigResolver() {
        return new KeycloakSpringBootConfigResolver();
    }

    @Override
    protected SessionAuthenticationStrategy sessionAuthenticationStrategy() {
        return new RegisterSessionAuthenticationStrategy(new SessionRegistryImpl());
    }
}

These are the steps to configure your business application to use Keycloak as its authentication and authorisation service.

3.4.1.3. Configuring execution server

The server ID and server name (kieserver.serverId and kieserver.serverName) determine how the business application is identified when it connects to the jBPM controller (Business Central), so they should be as meaningful as possible.

The location (kieserver.location) tells other components that interact with the REST API where the execution server is accessible. It does not have to match the location defined by server.address and server.port, and it typically differs when running in containers (Docker/OpenShift).

The controllers property (kieserver.controllers) accepts a comma-separated list of controller URLs.
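A kieserver.controllers value with more than one controller is simply a comma-separated list of absolute URLs. The sketch below splits such a value into individual URLs to illustrate that format. It is not KIE Server's own parsing code, and the second controller host is hypothetical.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.List;

// Sketch: splitting a comma-separated kieserver.controllers value into URLs.
// Illustrates the expected format only; KIE Server has its own parsing logic.
class ControllerListSketch {

    static List<URI> parseControllers(String value) {
        List<URI> controllers = new ArrayList<>();
        if (value == null || value.isBlank()) {
            return controllers; // no controller configured
        }
        for (String part : value.split(",")) {
            String trimmed = part.trim(); // tolerate spaces after commas
            if (!trimmed.isEmpty()) {
                controllers.add(URI.create(trimmed));
            }
        }
        return controllers;
    }

    public static void main(String[] args) {
        // "bc-backup" is a hypothetical second Business Central host
        String value = "http://localhost:8080/business-central/rest/controller,"
                + " http://bc-backup:8080/business-central/rest/controller";
        for (URI controller : parseControllers(value)) {
            System.out.println(controller);
        }
    }
}
```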

3.4.1.4. Configuring capabilities

If your business application includes the 'Business Automation' capability, you can control which of its components are actually enabled at runtime:

# used for decision management

kieserver.drools.enabled=true

kieserver.dmn.enabled=true

# used for business processes and cases

kieserver.jbpm.enabled=true

kieserver.jbpmui.enabled=true

kieserver.casemgmt.enabled=true

# used for planning

kieserver.optaplanner.enabled=true

3.4.1.5. Configuring data source

| Data source configuration is only required for business automation (meaning when jBPM is used) |

spring.datasource.username=sa

spring.datasource.password=sa

spring.datasource.url=jdbc:h2:./target/spring-boot-jbpm;MVCC=true

spring.datasource.driver-class-name=org.h2.Driver

The above configuration shows the basic data source settings; the next part covers connection pooling for efficient data access.

| Depending on the driver class selected, make sure your application includes the dependency that provides the JDBC driver class or data source class. |

narayana.dbcp.enabled=true

narayana.dbcp.maxTotal=20

This configuration enables the data source connection pool, which is based on the commons-dbcp2 project; a complete list of parameters can be found on its configuration page.

All parameters from the configuration page must be prefixed with narayana.dbcp.
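To illustrate the prefix rule, the sketch below turns a few commons-dbcp2 parameters into application.properties entries. The names maxTotal, maxIdle and minIdle are commons-dbcp2 BasicDataSource settings. The values are examples only; check the configuration page to confirm which parameters suit your setup.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Sketch: producing narayana.dbcp.* entries from commons-dbcp2 parameter names.
// The parameter values are example assumptions, not recommendations.
class DbcpPropertiesSketch {

    static final String PREFIX = "narayana.dbcp.";

    static String toProperties(Map<String, String> dbcpParams) {
        StringJoiner lines = new StringJoiner("\n");
        lines.add(PREFIX + "enabled=true"); // switches the connection pool on
        for (Map.Entry<String, String> param : dbcpParams.entrySet()) {
            lines.add(PREFIX + param.getKey() + "=" + param.getValue());
        }
        return lines.toString();
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>(); // keeps insertion order
        params.put("maxTotal", "20");
        params.put("maxIdle", "10");
        params.put("minIdle", "2");
        System.out.println(toProperties(params));
    }
}
```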

3.4.1.6. Configuring JPA

jBPM uses Hibernate as its database access layer, so JPA needs to be configured properly:

spring.jpa.properties.hibernate.dialect=org.hibernate.dialect.H2Dialect

spring.jpa.properties.hibernate.show_sql=false

spring.jpa.properties.hibernate.hbm2ddl.auto=update

spring.jpa.hibernate.naming.physical-strategy=org.hibernate.boot.model.naming.PhysicalNamingStrategyStandardImpl

| JPA configuration is entirely based on SpringBoot, so all options for both Hibernate and JPA can be found on the SpringBoot configuration page. |

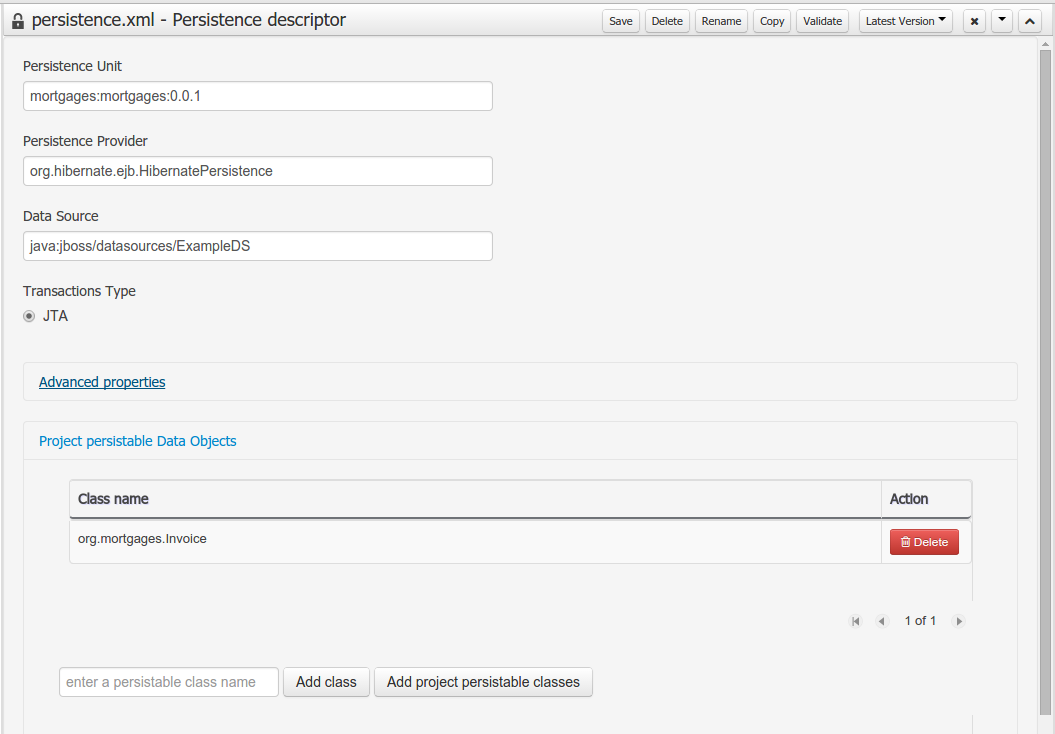

An application with the business automation capability creates an entity manager factory based on the persistence.xml that comes with jBPM. If more entities should be added to this entity manager factory (e.g. custom entities of the business application), you can add them by specifying a comma-separated list of packages to scan:

spring.jpa.properties.entity-scan-packages=org.jbpm.springboot.samples.entities

All entities found in those packages are automatically added to the entity manager factory and used in the same manner as any other JPA entity in the application.

3.4.1.7. Configuring jBPM executor

The jBPM executor is the backbone for asynchronous execution in jBPM. It is disabled by default, but can be turned on with the following configuration parameters:

jbpm.executor.enabled=true

jbpm.executor.retries=5

jbpm.executor.interval=0

jbpm.executor.threadPoolSize=1

jbpm.executor.timeUnit=SECONDS

-

jbpm.executor.enabled = true|false - enables or completely disables the executor component

-

jbpm.executor.threadPoolSize = Integer - specifies the thread pool size; the default is 1

-

jbpm.executor.retries = Integer - specifies the number of retries in case of errors while running a job

-

jbpm.executor.interval = Integer - specifies the interval (in seconds by default) that the executor uses to synchronize with the database; the default is 0, which means synchronization is disabled

-

jbpm.executor.timeUnit = String - specifies the time unit used to calculate the interval; the value must be a valid constant of java.util.concurrent.TimeUnit, and the default is SECONDS
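The interval and timeUnit settings work together. The sketch below shows one way to combine them into a synchronization period, assuming the semantics described above: an interval of 0 disables synchronization, and timeUnit must name a java.util.concurrent.TimeUnit constant. It is an illustration, not the executor's actual implementation.

```java
import java.time.Duration;
import java.util.concurrent.TimeUnit;

// Sketch: combining jbpm.executor.interval and jbpm.executor.timeUnit.
// Illustration only; this is not the jBPM executor's own code.
class ExecutorIntervalSketch {

    // Returns the database synchronization period, or Duration.ZERO when disabled
    static Duration syncPeriod(int interval, String timeUnit) {
        // TimeUnit.valueOf is case-sensitive and throws IllegalArgumentException for
        // anything that is not a constant name such as "SECONDS" or "MINUTES"
        TimeUnit unit = TimeUnit.valueOf(timeUnit);
        if (interval <= 0) {
            return Duration.ZERO; // 0 (the default) disables synchronization
        }
        return Duration.ofMillis(unit.toMillis(interval));
    }

    public static void main(String[] args) {
        System.out.println(syncPeriod(0, "SECONDS")); // PT0S
        System.out.println(syncPeriod(3, "SECONDS")); // PT3S
        System.out.println(syncPeriod(1, "MINUTES")); // PT1M
    }
}
```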

3.4.1.8. Configuring distributed timers - Quartz

If you plan to run your application in a cluster (multiple instances at the same time), you need to take the timer service setup into account. Since the business application runs on top of the Tomcat web container, the only timer service option for a distributed setup is Quartz.

jbpm.quartz.enabled=true

jbpm.quartz.configuration=quartz.properties

The two parameters above are mandatory. The configuration file must be either on the classpath or on the file system (if a path is given).

For distributed timers, database storage should be used and configured properly in the quartz.properties file:

#============================================================================

# Configure Main Scheduler Properties

#============================================================================

org.quartz.scheduler.instanceName = SpringBootScheduler

org.quartz.scheduler.instanceId = AUTO

org.quartz.scheduler.skipUpdateCheck=true

org.quartz.scheduler.idleWaitTime=1000

#============================================================================

# Configure ThreadPool

#============================================================================

org.quartz.threadPool.class = org.quartz.simpl.SimpleThreadPool

org.quartz.threadPool.threadCount = 5

org.quartz.threadPool.threadPriority = 5

#============================================================================

# Configure JobStore

#============================================================================

org.quartz.jobStore.misfireThreshold = 60000

org.quartz.jobStore.class=org.quartz.impl.jdbcjobstore.JobStoreCMT

org.quartz.jobStore.driverDelegateClass=org.jbpm.process.core.timer.impl.quartz.DeploymentsAwareStdJDBCDelegate

org.quartz.jobStore.useProperties=false

org.quartz.jobStore.dataSource=myDS

org.quartz.jobStore.nonManagedTXDataSource=notManagedDS

org.quartz.jobStore.tablePrefix=QRTZ_

org.quartz.jobStore.isClustered=true

org.quartz.jobStore.clusterCheckinInterval = 5000

#============================================================================

# Configure Datasources

#============================================================================

org.quartz.dataSource.myDS.connectionProvider.class=org.jbpm.springboot.quartz.SpringConnectionProvider

org.quartz.dataSource.myDS.dataSourceName=quartzDataSource

org.quartz.dataSource.notManagedDS.connectionProvider.class=org.jbpm.springboot.quartz.SpringConnectionProvider

org.quartz.dataSource.notManagedDS.dataSourceName=quartzNotManagedDataSource

Data source names in the Quartz configuration file refer to Spring beans. Additionally, the connection provider needs to be set to org.jbpm.springboot.quartz.SpringConnectionProvider to allow integration with Spring-based data sources.

By default Quartz requires two data sources:

-

a managed data source, so it can participate in the transactions of the jBPM engine

-

a non-managed data source, so it can look up timers to trigger without any transaction handling

A jBPM-based business application assumes that the Quartz database (schema) is colocated with the jBPM tables, and therefore provides the data source used for Quartz's transactional operations.

The other (non-transactional) data source must be configured, and it should point to the same database as the main data source:

# enable to use database as storage

jbpm.quartz.db=true

quartz.datasource.name=quartz

quartz.datasource.username=sa

quartz.datasource.password=sa

quartz.datasource.url=jdbc:h2:./target/spring-boot-jbpm;MVCC=true

quartz.datasource.driver-class-name=org.h2.Driver

# used to configure connection pool

quartz.datasource.dbcp2.maxTotal=15

# used to initialize quartz schema

quartz.datasource.initialization=true

spring.datasource.schema=classpath*:quartz_tables_h2.sql

spring.datasource.initialization-mode=always

The last three lines of the above configuration are responsible for initialising the database schema automatically. When enabled, spring.datasource.schema should point to the proper DDL script for your database.
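A clustered setup only works when these settings agree, so a small pre-flight check can catch typos in quartz.properties. The sketch below uses java.util.Properties to verify that clustering is enabled and that both the managed and the non-managed data source use SpringConnectionProvider. These checks are this example's own assumptions. Neither jBPM nor Quartz performs this validation.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Sketch: sanity-checking a clustered quartz.properties before startup.
// The rules below are this example's own, derived from the settings described above.
class QuartzConfigCheck {

    static final String SPRING_PROVIDER =
            "org.jbpm.springboot.quartz.SpringConnectionProvider";

    static List<String> problems(Properties p) {
        List<String> issues = new ArrayList<>();
        if (!"true".equals(p.getProperty("org.quartz.jobStore.isClustered"))) {
            issues.add("org.quartz.jobStore.isClustered must be true for distributed timers");
        }
        // Both data sources must exist and use the Spring connection provider
        for (String key : new String[] {"org.quartz.jobStore.dataSource",
                                        "org.quartz.jobStore.nonManagedTXDataSource"}) {
            String ds = p.getProperty(key);
            if (ds == null) {
                issues.add(key + " is missing");
            } else if (!SPRING_PROVIDER.equals(
                    p.getProperty("org.quartz.dataSource." + ds + ".connectionProvider.class"))) {
                issues.add("data source " + ds + " does not use " + SPRING_PROVIDER);
            }
        }
        return issues;
    }

    public static void main(String[] args) throws IOException {
        Properties p = new Properties();
        p.load(new StringReader(String.join("\n",
                "org.quartz.jobStore.isClustered=true",
                "org.quartz.jobStore.dataSource=myDS",
                "org.quartz.jobStore.nonManagedTXDataSource=notManagedDS",
                "org.quartz.dataSource.myDS.connectionProvider.class=" + SPRING_PROVIDER,
                "org.quartz.dataSource.notManagedDS.connectionProvider.class=" + SPRING_PROVIDER)));
        System.out.println(problems(p)); // [] when the configuration is consistent
    }
}
```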

3.4.1.9. Configuring different databases

A business application is generated with an H2 database by default, so you can get started quickly without any extra requirements. Since this default setup may not be suitable for production use, generated business applications come with configuration dedicated to:

-

MySQL

-

PostgreSQL

There are dedicated profiles, both Maven and Spring, to get you started quickly. The only thing you need to do is align the configuration with your database.

MySQL configuration

spring.datasource.username=jbpm

spring.datasource.password=jbpm

spring.datasource.url=jdbc:mysql://localhost:3306/jbpm

spring.datasource.driver-class-name=com.mysql.jdbc.jdbc2.optional.MysqlXADataSource

#hibernate configuration

spring.jpa.properties.hibernate.dialect=org.hibernate.dialect.MySQL5InnoDBDialect

PostgreSQL configuration

spring.datasource.username=jbpm

spring.datasource.password=jbpm

spring.datasource.url=jdbc:postgresql://localhost:5432/jbpm

spring.datasource.driver-class-name=org.postgresql.xa.PGXADataSource

#hibernate configuration

spring.jpa.properties.hibernate.dialect=org.hibernate.dialect.PostgreSQLDialect

Once the configuration is updated, you can launch your application with:

./launch.sh clean install -Pmysql for MySQL on Linux/Unix

./launch.bat clean install -Pmysql for MySQL on Windows

./launch.sh clean install -Ppostgres for PostgreSQL on Linux/Unix

./launch.bat clean install -Ppostgres for PostgreSQL on Windows

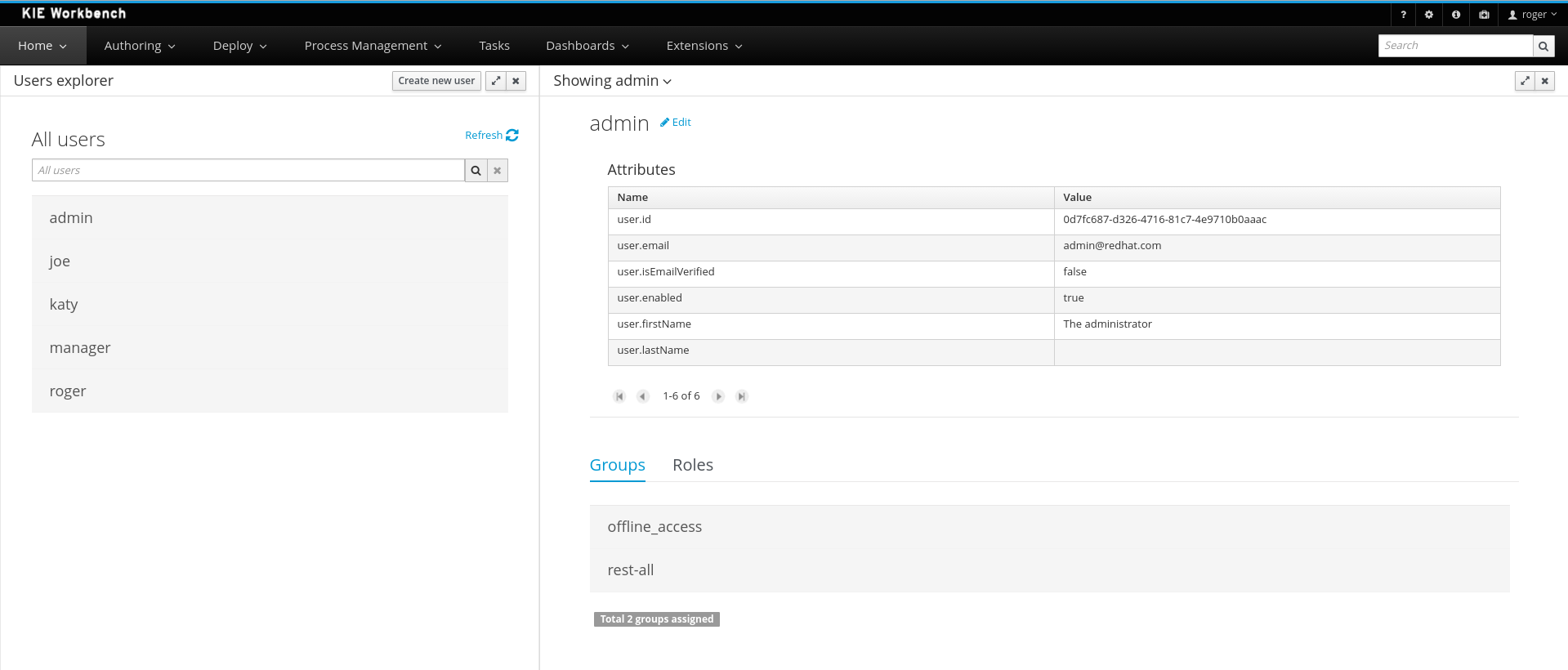

3.4.1.10. Configuring user group providers

The business automation capability supports managing human-centric activities. To integrate with user and group repositories, jBPM provides a built-in mechanism with two entry points:

-

UserGroupCallback - responsible for verifying whether a user or group exists and for collecting the groups of a given user

-

UserInfo - responsible for collecting additional information about a user or group, such as email address or preferred language

Both can be configured by providing an alternative implementation, either one of those provided out of the box or a custom one.

When it comes to UserGroupCallback, it is recommended to stick to the default one, as it is based on the security context of the application. That means whatever backend store is used for authentication and authorisation (e.g. Keycloak) is also used as the source of user and group information.

UserInfo requires more advanced information to be collected and is therefore a separate component. Not all user/group repositories provide the expected data, especially those used purely for authentication and authorisation.
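A custom UserGroupCallback ultimately answers three questions: does a user exist, does a group exist, and which groups does a user belong to. The sketch below backs those lookups with an in-memory map so the logic can be tested in isolation. The class, users and groups are hypothetical, and the method names only mirror the UserGroupCallback responsibilities. Check the exact interface in your org.kie.api version before adapting this into a class such as MyCustomUserGroupCallback.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Sketch: in-memory user/group lookups of the kind a custom UserGroupCallback
// delegates to. Not the org.kie.api interface itself; signatures are assumptions.
class InMemoryUserGroupLookup {

    private final Map<String, List<String>> groupsByUser;
    private final Set<String> allGroups = new HashSet<>();

    InMemoryUserGroupLookup(Map<String, List<String>> groupsByUser) {
        this.groupsByUser = groupsByUser;
        groupsByUser.values().forEach(allGroups::addAll); // collect every known group
    }

    boolean existsUser(String userId) {
        return groupsByUser.containsKey(userId);
    }

    boolean existsGroup(String groupId) {
        return allGroups.contains(groupId);
    }

    // Unknown users get an empty list rather than null
    List<String> getGroupsForUser(String userId) {
        return groupsByUser.getOrDefault(userId, Collections.emptyList());
    }

    public static void main(String[] args) {
        // Hypothetical users and groups
        InMemoryUserGroupLookup lookup = new InMemoryUserGroupLookup(Map.of(
                "john", List.of("PM", "HR"),
                "mary", List.of("HR")));
        System.out.println(lookup.existsUser("john"));       // true
        System.out.println(lookup.existsGroup("PM"));        // true
        System.out.println(lookup.getGroupsForUser("mary")); // [HR]
    }
}
```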

The following code provides an alternative implementation of UserGroupCallback:

@Bean(name = "userGroupCallback")
public UserGroupCallback userGroupCallback(IdentityProvider identityProvider) throws IOException {
    return new MyCustomUserGroupCallback(identityProvider);
}

The following code provides an alternative implementation of UserInfo:

@Bean(name = "userInfo")

public UserInfo userInfo() throws IOException {

return new MyCustomUserInfo();

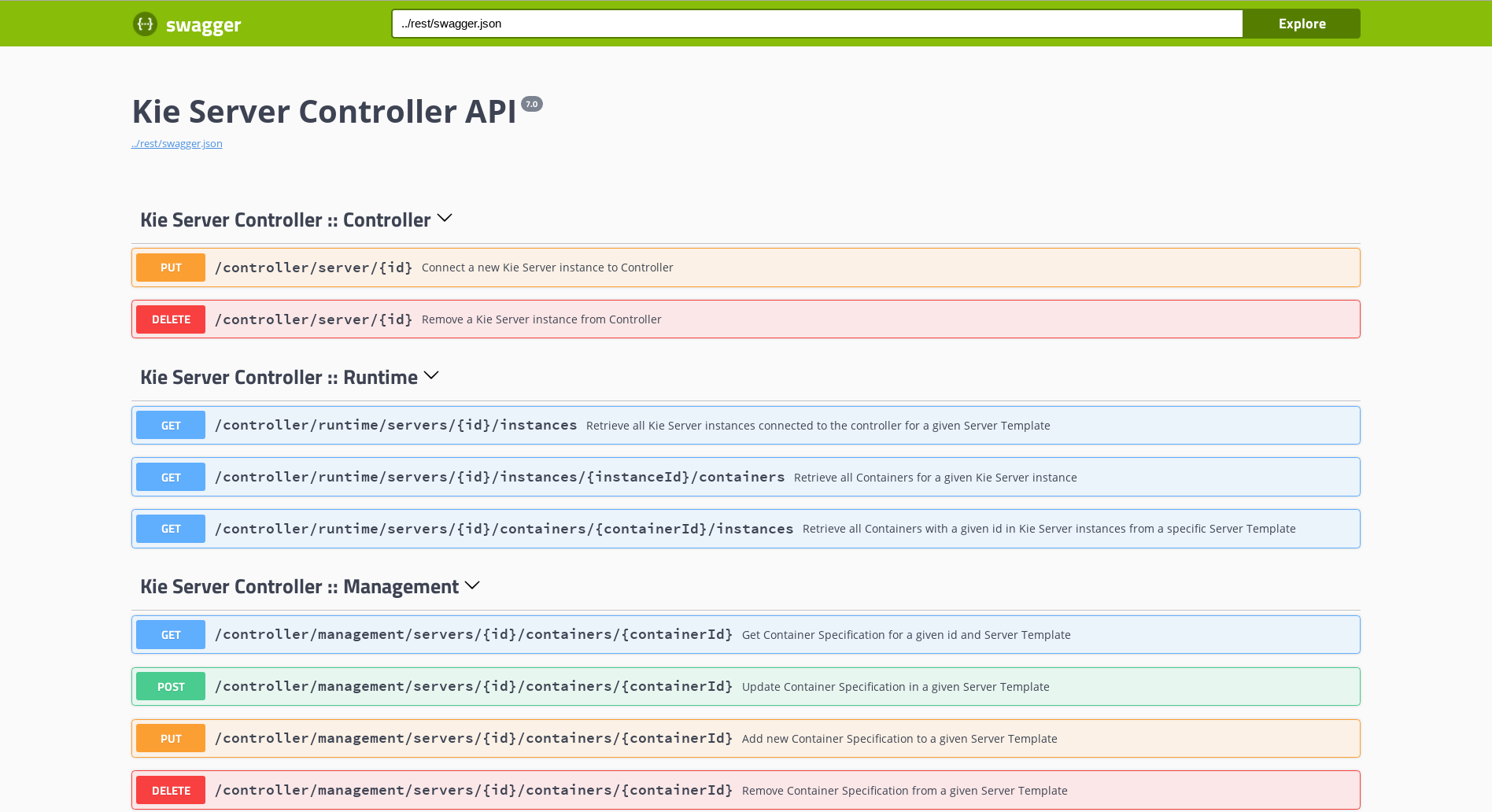

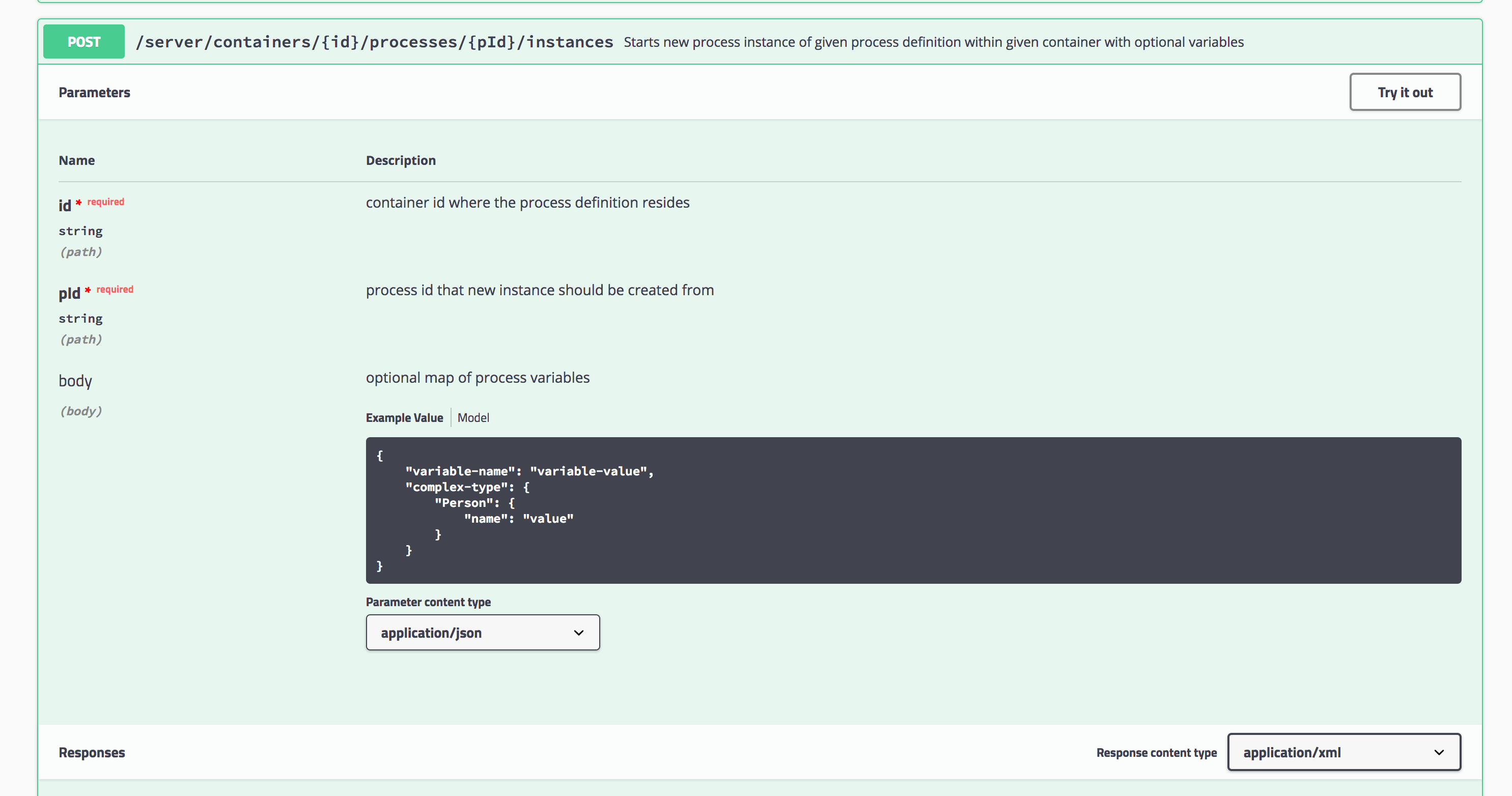

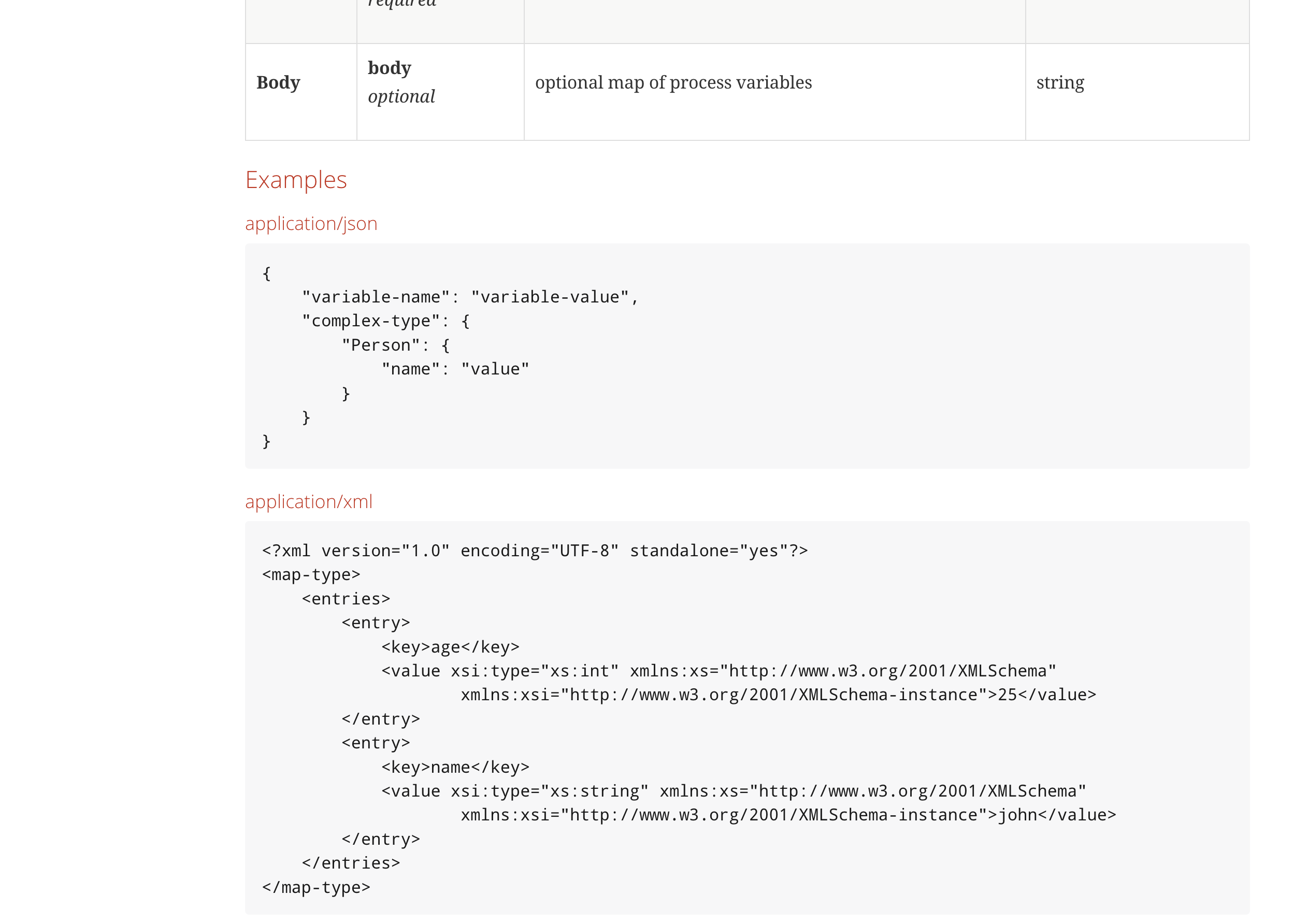

}3.4.1.11. Enable Swagger documentation

Business application can easily enable Swagger based documentation for all endpoints available in the service project.

Add the required dependencies to the pom.xml of the service project:

<dependency>
    <groupId>org.apache.cxf</groupId>
    <artifactId>cxf-rt-rs-service-description-swagger</artifactId>
    <version>3.1.11</version>
</dependency>
<dependency>
    <groupId>io.swagger</groupId>
    <artifactId>swagger-jaxrs</artifactId>
    <version>1.5.15</version>
    <exclusions>
        <exclusion>
            <groupId>javax.ws.rs</groupId>
            <artifactId>jsr311-api</artifactId>
        </exclusion>
    </exclusions>
</dependency>

Enable Swagger support in application.properties:

kieserver.swagger.enabled=true

The Swagger document can then be found at http://localhost:8090/rest/swagger.json

Enable Swagger UI

To enable Swagger UI, add the following dependency to the pom.xml of the service project:

<dependency>
    <groupId>org.webjars</groupId>
    <artifactId>swagger-ui</artifactId>
    <version>2.2.10</version>
</dependency>

Once Swagger UI is enabled and the server is started, the complete set of endpoints can be found at http://localhost:8090/rest/api-docs/?url=http://localhost:8090/rest/swagger.json

3.5. Develop your business application

Developing custom logic in a business application depends entirely on your specific requirements. This guide covers some common steps that developers might need to get started.

3.5.1. Data model

The data model project in your generated business application promotes the idea (in fact, a best practice) of designing data models with reuse in mind. At the same time, it avoids putting too much into the model, which usually happens when the model is colocated with the service itself.

The data model project should be seen as the API of the business application or of one of its services. For an application composed of several services, it is recommended that each service exposes its own data model (API).

That API can then be used by both the service project and the business assets project.
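As an illustration of a small, reusable model, a data object in the model project is typically a plain Java class that depends only on the JDK, so both the service project and the business assets project can use it. The `Order` class below is a hypothetical example. Making it `Serializable` and giving it a no-argument constructor reflects common practice for process variables and for XML/JSON marshalling, but treat that as an assumption and check the requirements of the marshalling strategy you actually configure.

```java
import java.io.Serializable;
import java.math.BigDecimal;
import java.util.Objects;

// Hypothetical data object living in the {your business application name}-model project.
// It has no dependency on the service project or on jBPM itself.
class Order implements Serializable {
    private static final long serialVersionUID = 1L;

    private String id;
    private BigDecimal amount;

    // No-argument constructor, commonly expected by marshalling and form tooling.
    public Order() {
    }

    public Order(String id, BigDecimal amount) {
        this.id = id;
        this.amount = amount;
    }

    public String getId() { return id; }
    public void setId(String id) { this.id = id; }
    public BigDecimal getAmount() { return amount; }
    public void setAmount(BigDecimal amount) { this.amount = amount; }

    // Value-based equality keeps the object easy to compare in tests and rules.
    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof Order)) return false;
        Order other = (Order) o;
        return Objects.equals(id, other.id) && Objects.equals(amount, other.amount);
    }

    @Override
    public int hashCode() {
        return Objects.hash(id, amount);
    }
}
```

Keeping the class free of service logic is what lets it serve as the shared API described above.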

| The generated application model is not added as a dependency to either the service project or the business assets project. |

3.5.2. Business assets development

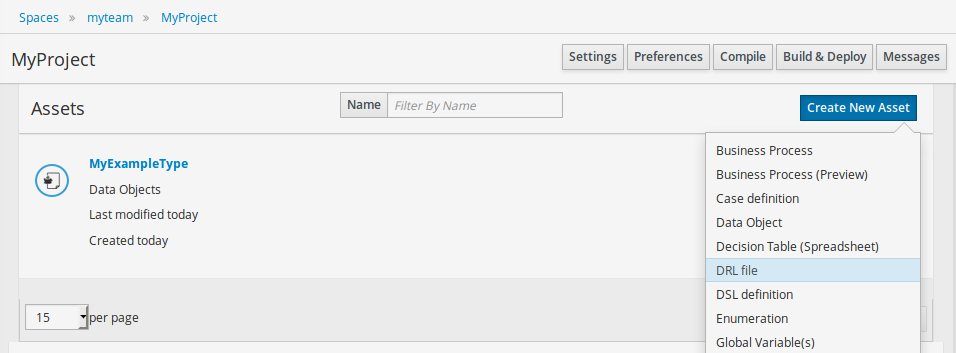

Business assets are usually developed in Business Central, where developers can create different asset types, such as:

-

Business processes

-

Case definitions

-

Rules

-

Decision tables

-

Data objects

-

Forms

-

Others

Before these assets can be created, the business assets project needs to be imported into Business Central as described in Import your business assets project into Business Central.

While working with business assets, you can try them out in your business application by running the application in development mode. This allows developers to build and deploy the assets project directly to a running application. Moreover, Business Central can also be used to quickly interact with processes, tasks and cases. To learn more, see Launch application in development mode.

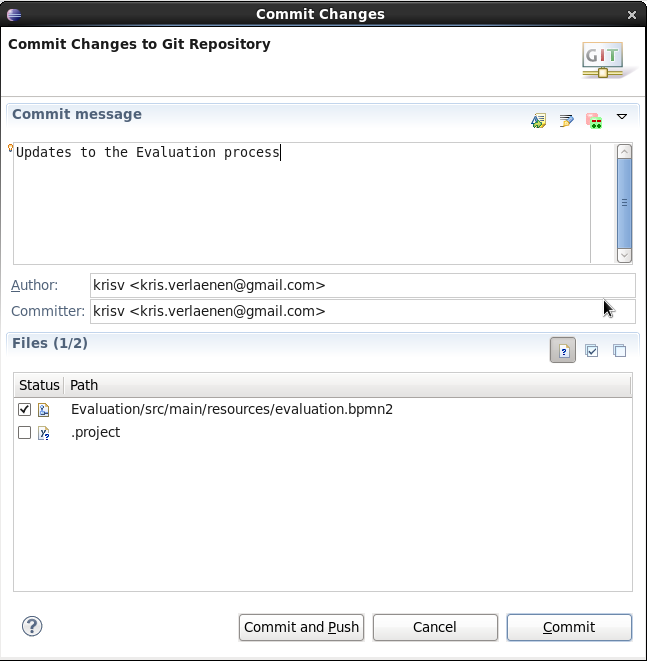

Once the work on business assets is finished, it should be fetched back into your business application source:

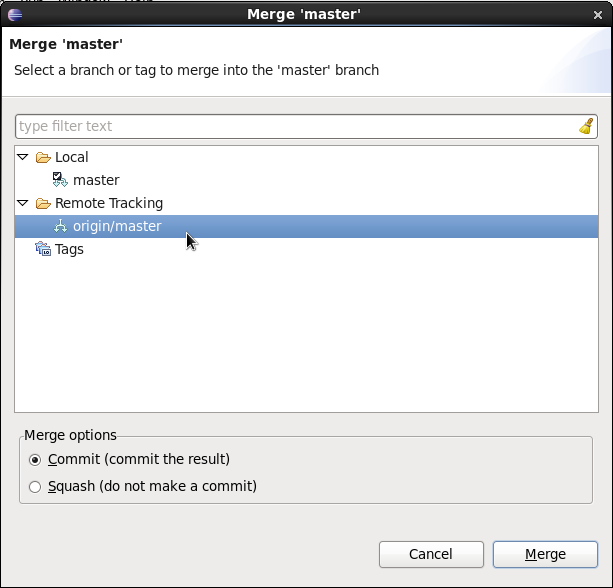

-

go into the business assets project: {your business application name}-kjar

-

execute

git fetch origin

-

execute

git rebase origin/master

Your business assets are now part of the business application source tree and can be launched in standalone mode, that is, without Business Central acting as the jBPM controller.

To launch your application, go into the service project ({your business application name}-service) and invoke:

./launch.sh clean install for Linux/Unix

./launch.bat clean install for Windows

| If the version of your business assets project changes, you have to update that information in the service project. Locate the configuration file used for standalone mode, {your business application name}-service.xml, edit it and update the version for the specific container. |

The business assets project has two special files:

-

pom.xml

-

src/main/resources/META-INF/kie-deployment-descriptor.xml

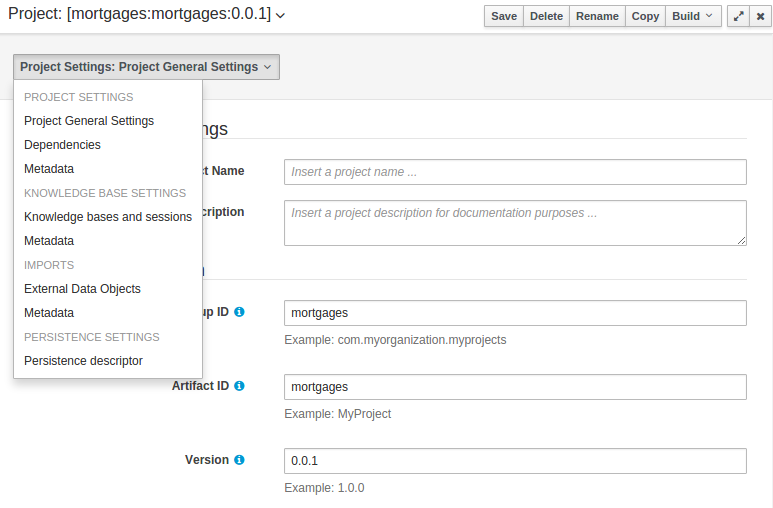

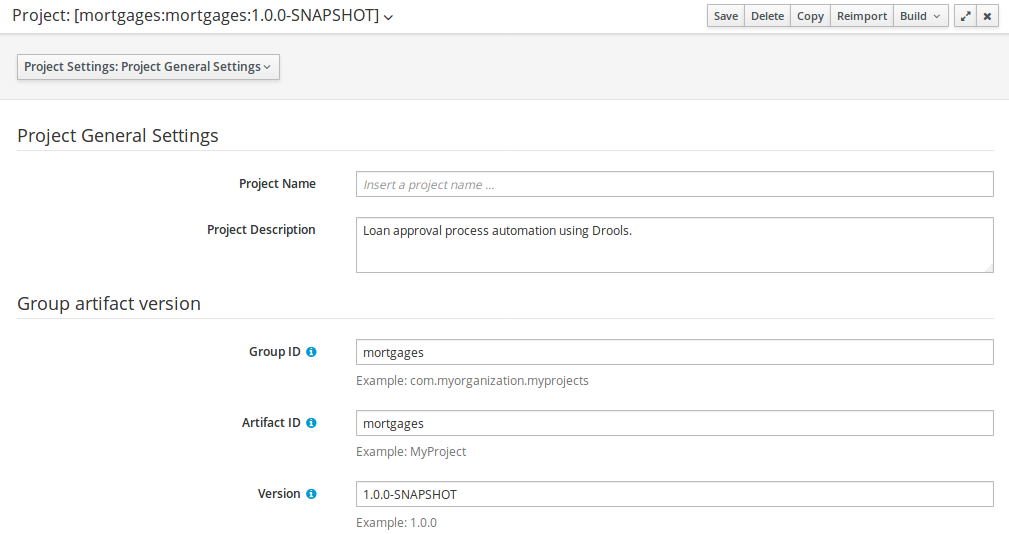

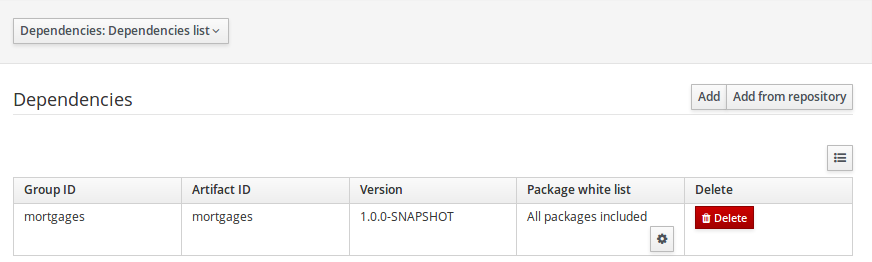

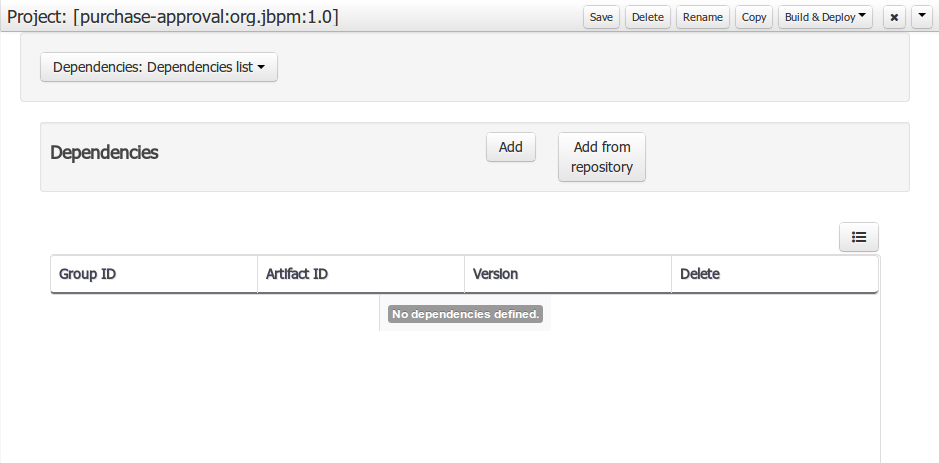

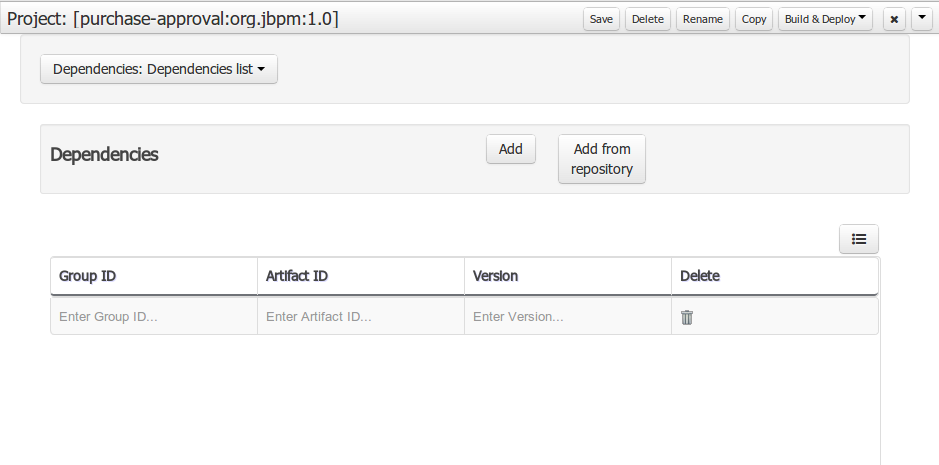

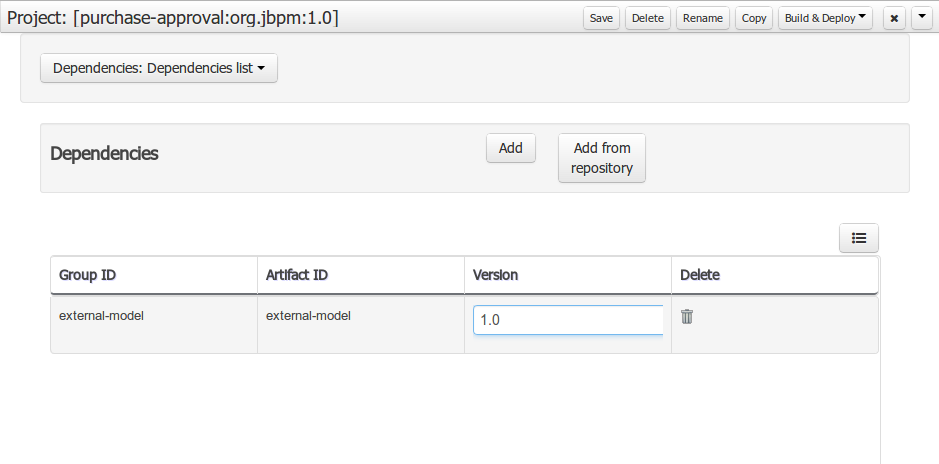

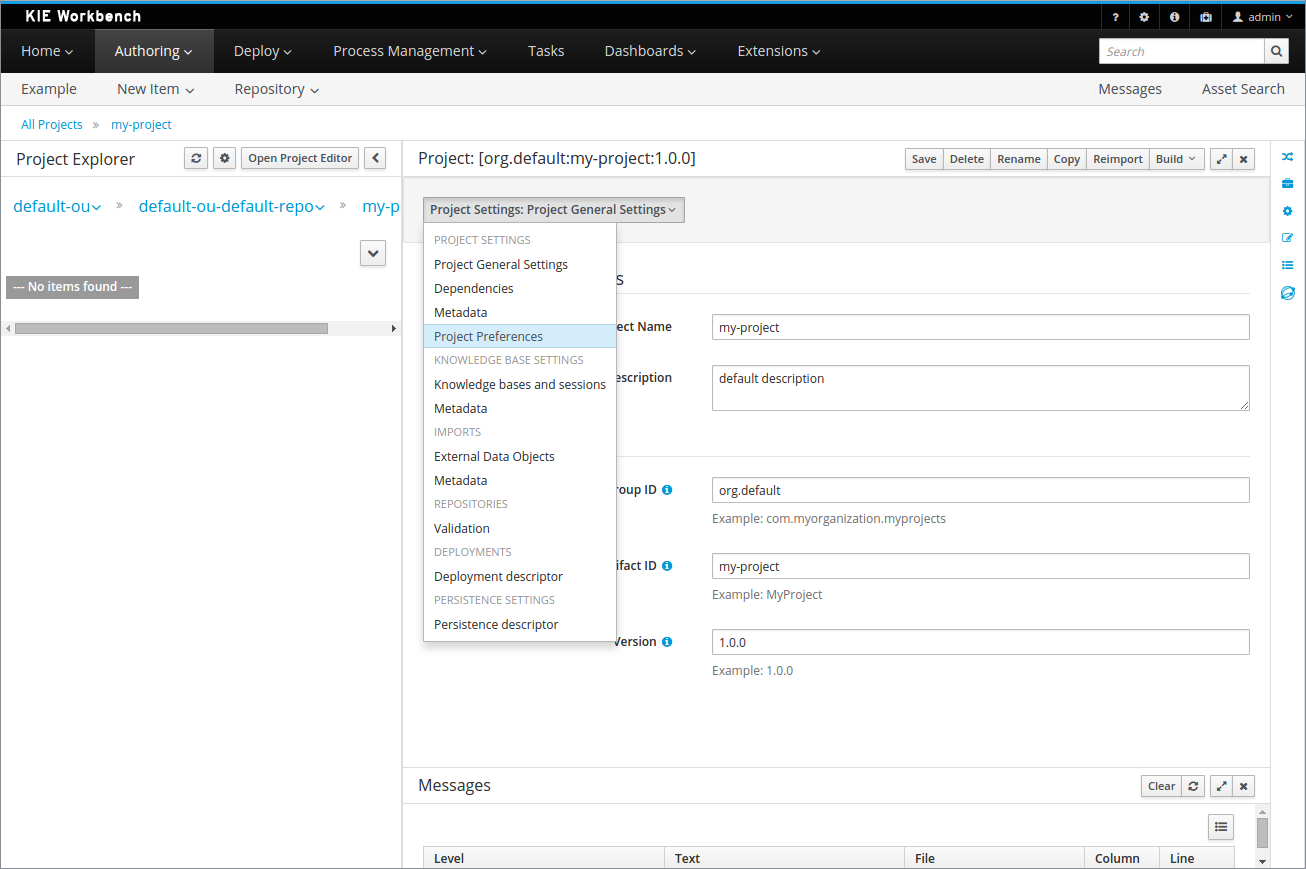

The first is the Apache Maven project file, which is managed via Project Settings in Business Central. It allows you to define project information (group id, artifact id, version, name, description). In addition, it allows you to define the dependencies of the project, e.g. the data model project.

| Whenever dependencies from the following group ids are added, they should be marked with scope provided. |

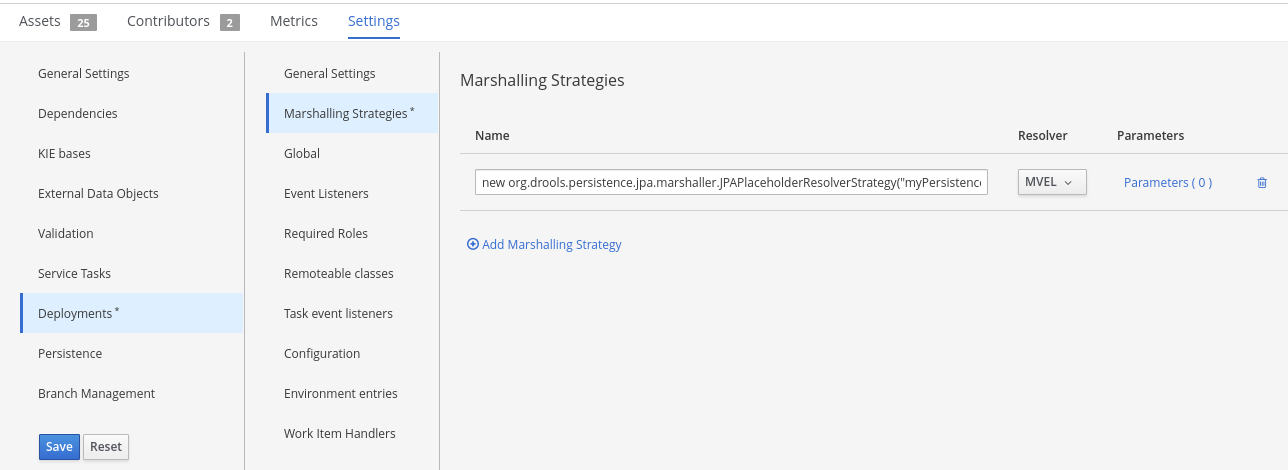

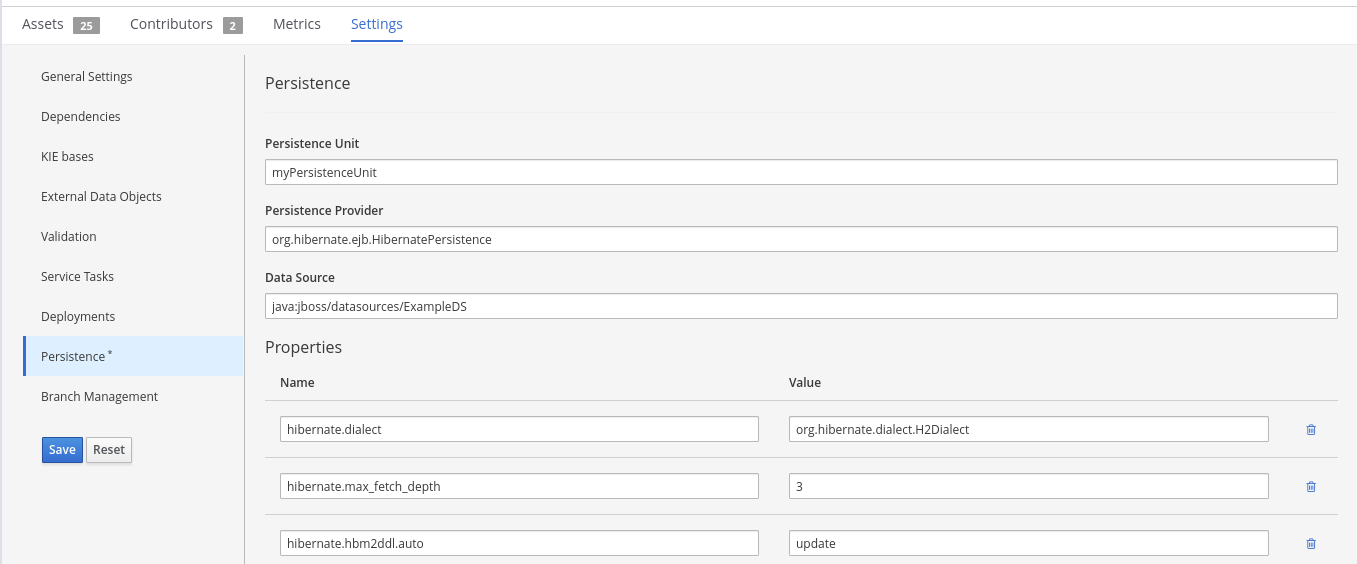

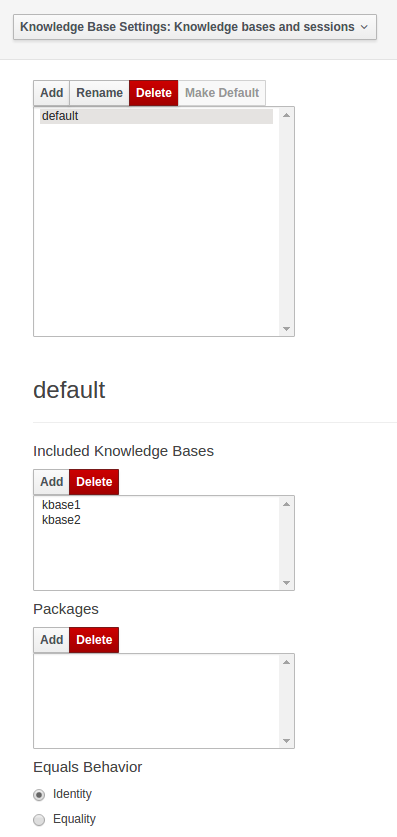

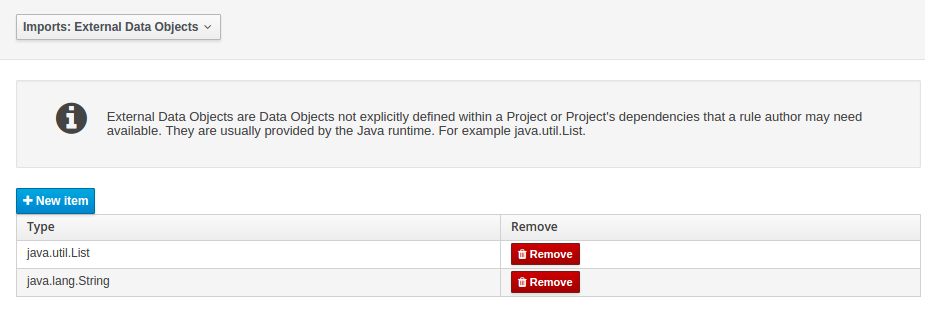

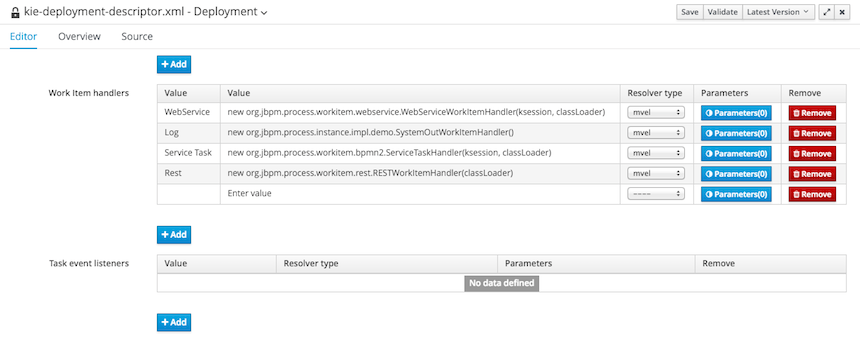

The deployment descriptor allows you to configure various components of the business automation capability, such as:

-

Persistence for jBPM

-

Runtime strategy

-

Event listeners

-

Work item handlers

-

Marshalling strategies

-

And more

For a complete description of the deployment descriptor, see Deployment descriptor.

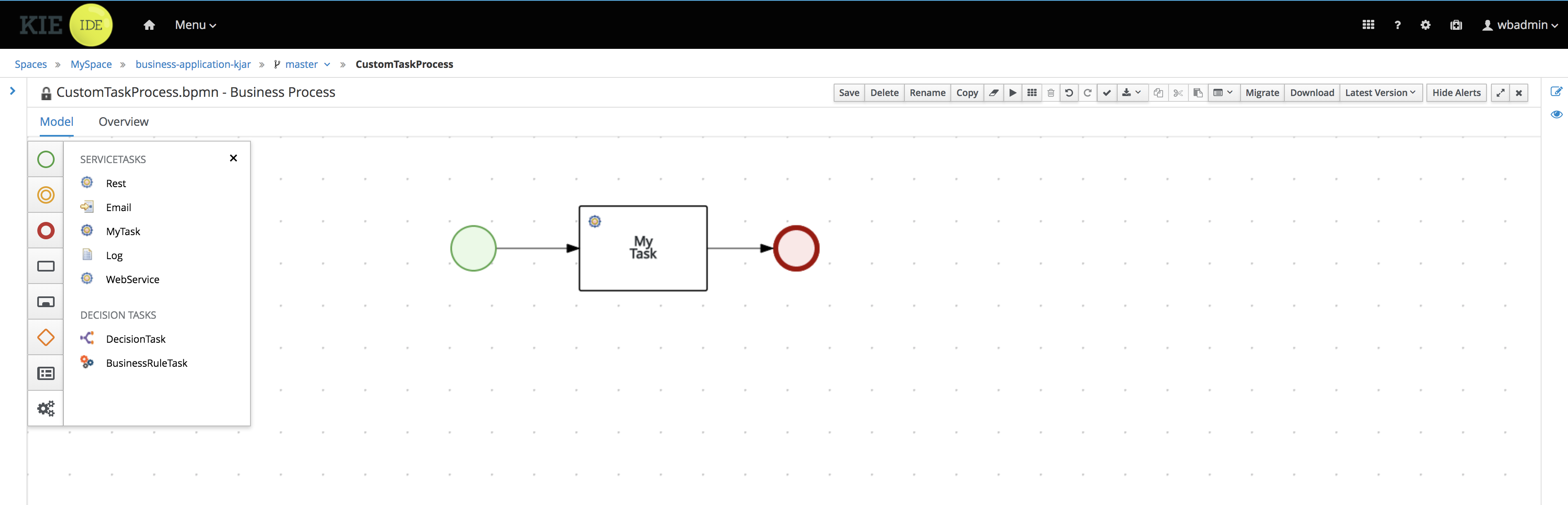

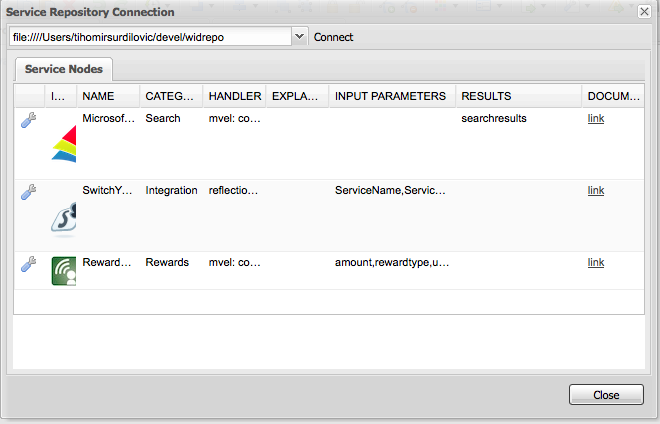

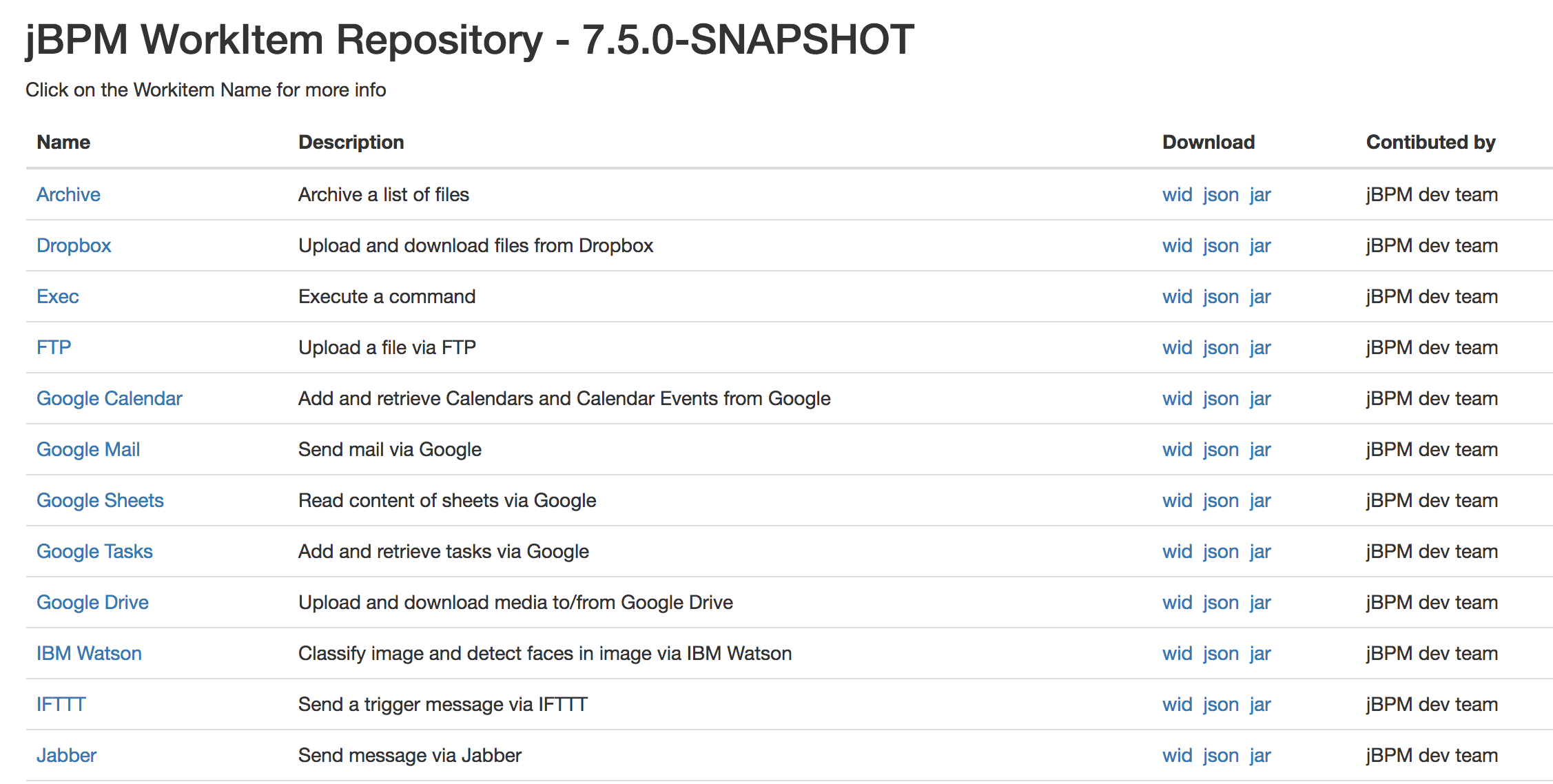

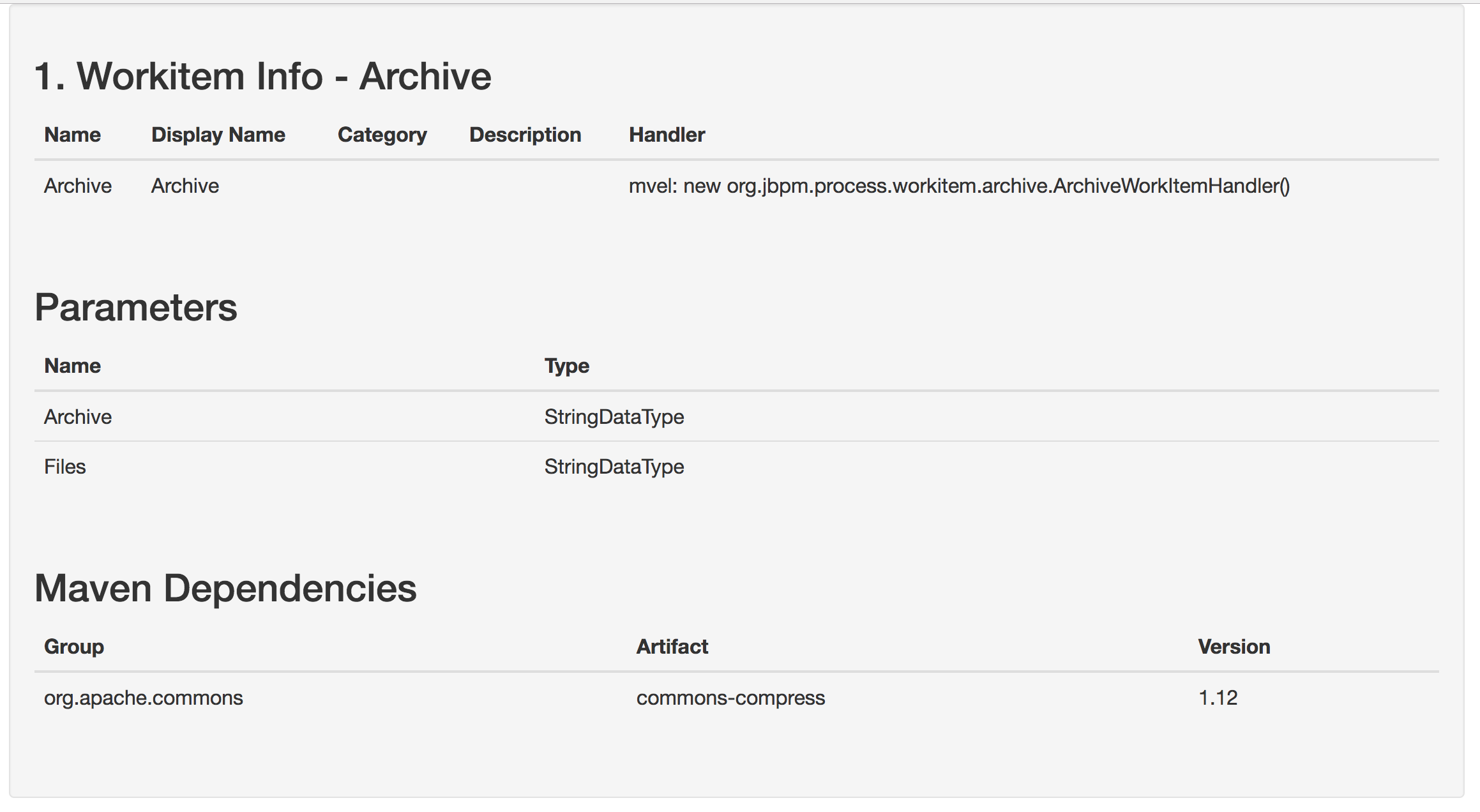

3.5.3. Work Item Handlers

Business processes can take advantage of so-called domain-specific services, which are implemented as work items; their actual execution is carried out by work item handlers. Work items defined in a process or case definition are linked by name to a work item handler (the implementation).
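Linking by name can be pictured as a lookup table: handlers are kept keyed by work item name, and when a process reaches a work item, execution is delegated to the handler registered under that item's name. The sketch below models only that idea in plain Java. `SimpleWorkItemHandler` and `WorkItemHandlerRegistry` are hypothetical types for illustration, not the jBPM API (the real contract is org.kie.api.runtime.process.WorkItemHandler), and failing fast on an unknown name is a choice made for this sketch.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, simplified handler contract: it receives the work item's
// input parameters and returns its results.
interface SimpleWorkItemHandler {
    Map<String, Object> execute(Map<String, Object> parameters);
}

// Hypothetical registry that binds work item names to handler implementations.
class WorkItemHandlerRegistry {
    private final Map<String, SimpleWorkItemHandler> handlers = new HashMap<>();

    void register(String workItemName, SimpleWorkItemHandler handler) {
        // Registering the same name twice replaces the earlier handler.
        handlers.put(workItemName, handler);
    }

    // Looks up the handler by the work item's name and delegates execution to it.
    Map<String, Object> executeWorkItem(String workItemName, Map<String, Object> parameters) {
        SimpleWorkItemHandler handler = handlers.get(workItemName);
        if (handler == null) {
            // This sketch fails fast when no handler is bound to the name.
            throw new IllegalStateException("No handler registered for work item '" + workItemName + "'");
        }
        return handler.execute(parameters);
    }
}
```

For example, `registry.register("Rest", params -> results)` binds every work item named Rest to that lambda, which is the same name-based binding that the deployment descriptor, Spring component and programmatic approaches all configure.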

Work item handlers can be registered in three ways:

-

via deployment descriptor - use this approach if you want to decouple life cycle of the handler from your business application

-

via auto registration of Spring Components - use this when you have your handlers implemented as Spring beans (components) that are bound to the life cycle of the application

-

via manual registration of any work item handler implementation - use this when the handler is not implemented by you, so the Spring Component approach cannot be used, or when it has advanced initialisation logic that does not fit the deployment descriptor approach

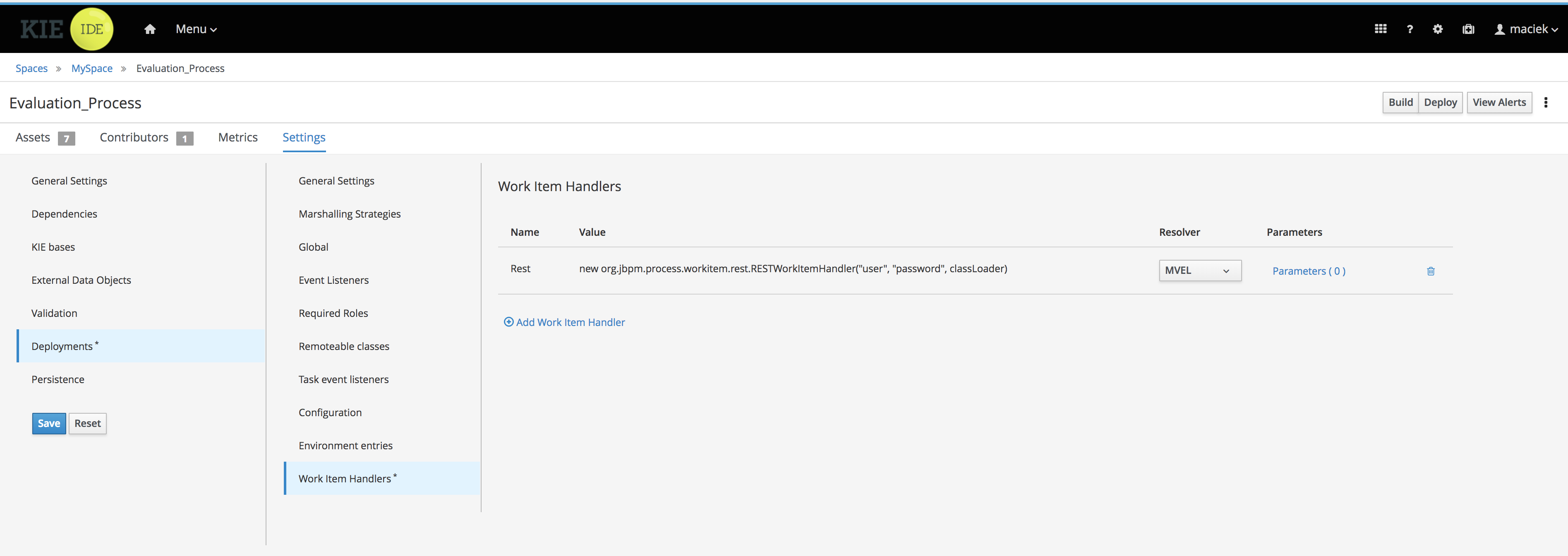

3.5.3.1. Register Work Item Handler via deployment descriptor

Registration in the deployment descriptor can be done directly in Business Central via Project settings → Deployments.

Add the work item handler mapped to the name of the work item.

This results in the following source code of the deployment descriptor:

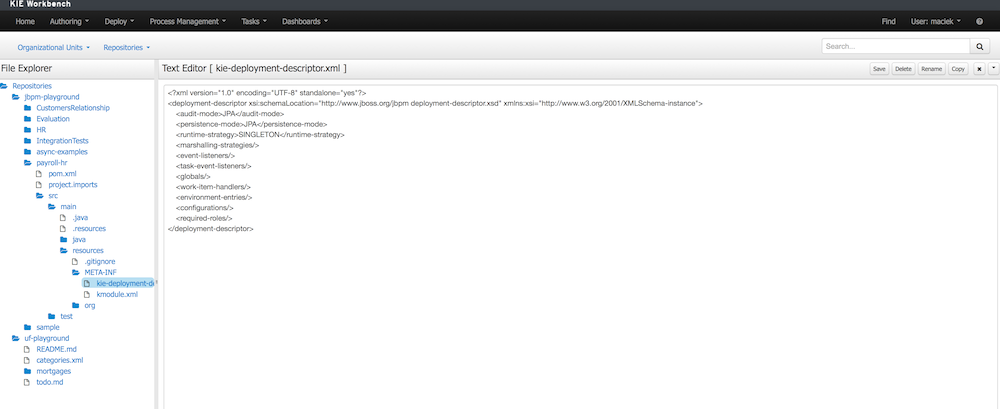

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<deployment-descriptor xsi:schemaLocation="http://www.jboss.org/jbpm deployment-descriptor.xsd" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
    <persistence-unit>org.jbpm.domain</persistence-unit>
    <audit-persistence-unit>org.jbpm.domain</audit-persistence-unit>
    <audit-mode>JPA</audit-mode>
    <persistence-mode>JPA</persistence-mode>
    <runtime-strategy>SINGLETON</runtime-strategy>
    <marshalling-strategies/>
    <event-listeners/>
    <task-event-listeners/>
    <globals/>
    <work-item-handlers>
        <work-item-handler>
            <resolver>mvel</resolver>
            <identifier>new org.jbpm.process.workitem.rest.RESTWorkItemHandler("user", "password", classLoader)</identifier>
            <parameters/>
            <name>Rest</name>
        </work-item-handler>
    </work-item-handlers>
    <environment-entries/>
    <configurations/>
    <required-roles/>
    <remoteable-classes/>
    <limit-serialization-classes>true</limit-serialization-classes>
</deployment-descriptor>

3.5.3.2. Register Work Item Handler via auto registration of Spring Components

The easiest way to register work item handlers is to rely on Spring discovery and configuration of beans. It is enough to annotate your work item handler class with @Component("WorkItemName"), and that bean will be automatically registered in jBPM.

import org.kie.api.runtime.process.WorkItem;
import org.kie.api.runtime.process.WorkItemHandler;
import org.kie.api.runtime.process.WorkItemManager;
import org.springframework.stereotype.Component;

// Registered in jBPM under the name "Custom" (the @Component value)
@Component("Custom")
public class CustomWorkItemHandler implements WorkItemHandler {

    @Override
    public void executeWorkItem(WorkItem workItem, WorkItemManager manager) {
        // Domain-specific logic goes here; the work item is then completed without results
        manager.completeWorkItem(workItem.getId(), null);
    }

    @Override
    public void abortWorkItem(WorkItem workItem, WorkItemManager manager) {
        // Nothing to clean up in this example
    }
}

This registers CustomWorkItemHandler under the name Custom, so every work item named Custom will use this handler to execute its logic.

| The name attribute of the @Component annotation is mandatory for registration to happen. If the name is missing, the work item handler will not be registered and a warning will be logged. |
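The rule that unnamed components are skipped can be modelled as below. This is a hypothetical plain-Java approximation of the registration step, not jBPM's actual auto-registration code: each discovered bean carries the value of its @Component annotation (empty when no name was given), named beans are registered under that name, and unnamed ones are skipped with a logged warning. `DiscoveredHandler` and `HandlerAutoRegistration` are illustrative names only.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.logging.Logger;

// Hypothetical record of a discovered bean: the value of its @Component annotation and the instance.
class DiscoveredHandler {
    final String componentName; // null or "" when @Component has no name
    final Object handler;

    DiscoveredHandler(String componentName, Object handler) {
        this.componentName = componentName;
        this.handler = handler;
    }
}

class HandlerAutoRegistration {
    private static final Logger LOG = Logger.getLogger(HandlerAutoRegistration.class.getName());

    // Returns work item name -> handler for every discovered bean that declares a name.
    static Map<String, Object> register(List<DiscoveredHandler> discovered) {
        Map<String, Object> registered = new LinkedHashMap<>();
        for (DiscoveredHandler d : discovered) {
            if (d.componentName == null || d.componentName.isEmpty()) {
                // Mirrors the documented behaviour: no name, no registration, only a warning.
                LOG.warning("Work item handler " + d.handler.getClass().getName()
                        + " has no @Component name and will not be registered");
                continue;
            }
            registered.put(d.componentName, d.handler);
        }
        return registered;
    }
}
```

The work item name is taken from the annotation value, so a handler annotated with a bare @Component never becomes reachable from a process, even though Spring itself still creates the bean.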

3.5.3.3. Register Work Item Handler programmatically

The last-resort option is to obtain the DeploymentService and register handlers programmatically:

@Autowired
private SpringKModuleDeploymentService deploymentService;

...

@PostConstruct
public void configure() {
    // Binds CustomWorkItemHandler to work items named "Custom"
    deploymentService.registerWorkItemHandler("Custom", new CustomWorkItemHandler());
}

3.5.4. Event listeners

jBPM allows you to register event listeners that are invoked when the jBPM engine triggers events. The supported event listener types are:

-

ProcessEventListener

-

AgendaEventListener

-

RuleRuntimeEventListener

-

TaskLifeCycleEventListener

-

CaseEventListener

Similar to work item handlers, event listeners can be registered in three ways:

-

via deployment descriptor - use this approach if you want to decouple life cycle of the listener from your business application

-

via auto registration of Spring Components - use this when you have your listeners implemented as Spring beans (components) that are bound to the life cycle of the application

-

via manual registration of any event listener implementation - use this when the listener is not implemented by you, so the Spring Component approach cannot be used, or when it has advanced initialisation logic that does not fit the deployment descriptor approach

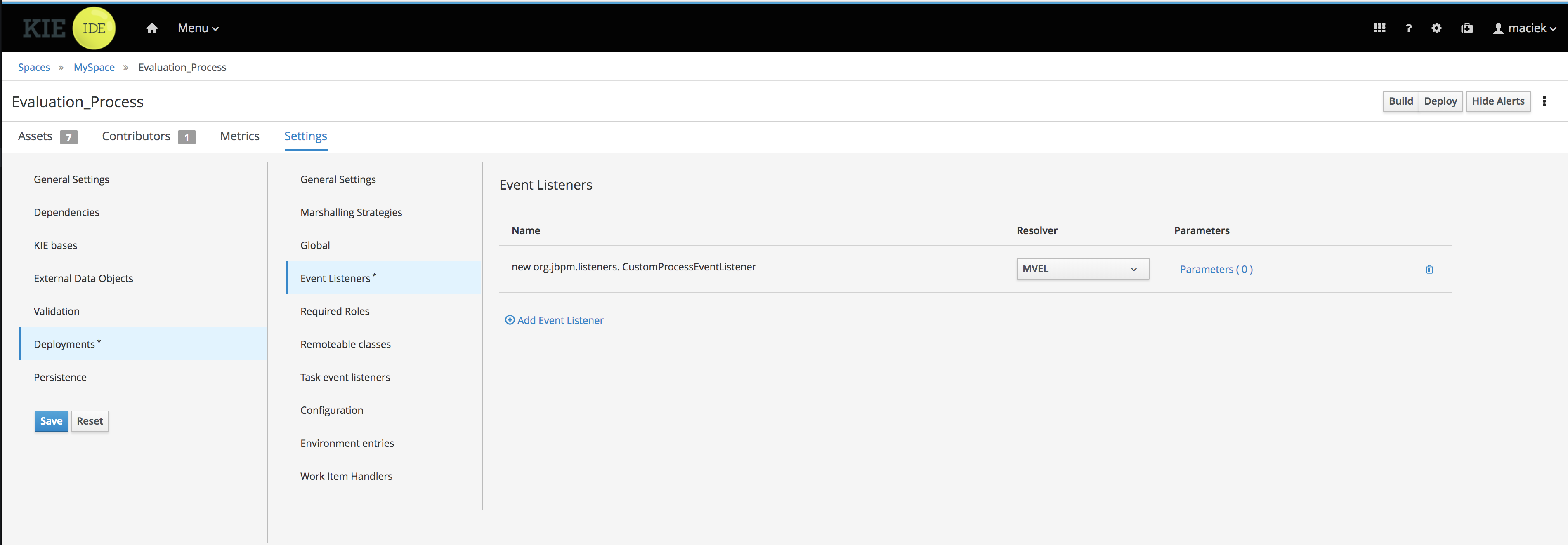

3.5.4.1. Register event listener via deployment descriptor

Registration in the deployment descriptor can be done directly in Business Central via Project settings → Deployments.

This results in the following source code of the deployment descriptor:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<deployment-descriptor xsi:schemaLocation="http://www.jboss.org/jbpm deployment-descriptor.xsd" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
    <persistence-unit>org.jbpm.domain</persistence-unit>
    <audit-persistence-unit>org.jbpm.domain</audit-persistence-unit>
    <audit-mode>JPA</audit-mode>
    <persistence-mode>JPA</persistence-mode>
    <runtime-strategy>SINGLETON</runtime-strategy>
    <marshalling-strategies/>
    <event-listeners>
        <event-listener>
            <resolver>mvel</resolver>
            <identifier>new org.jbpm.listeners.CustomProcessEventListener()</identifier>
            <parameters/>
        </event-listener>
    </event-listeners>
    <task-event-listeners/>
    <globals/>
    <work-item-handlers/>
    <environment-entries/>
    <configurations/>
    <required-roles/>
    <remoteable-classes/>
    <limit-serialization-classes>true</limit-serialization-classes>
</deployment-descriptor>

3.5.4.2. Register event listener via auto registration of Spring Components

The easiest way to register event listeners is to rely on Spring discovery and configuration of beans. It is enough to annotate your event listener implementation class with @Component, and that bean will be automatically registered in jBPM.

import org.kie.api.event.process.ProcessCompletedEvent;
import org.kie.api.event.process.ProcessEventListener;
import org.kie.api.event.process.ProcessNodeLeftEvent;
import org.kie.api.event.process.ProcessNodeTriggeredEvent;
import org.kie.api.event.process.ProcessStartedEvent;
import org.kie.api.event.process.ProcessVariableChangedEvent;
import org.springframework.stereotype.Component;

// Registered as a process event listener because it implements ProcessEventListener
@Component
public class CustomProcessEventListener implements ProcessEventListener {

    @Override
    public void beforeProcessStarted(ProcessStartedEvent event) {
    }

    ...

}

| An event listener can extend the default implementation of a given event listener type to avoid implementing all methods, e.g. org.kie.api.event.process.DefaultProcessEventListener. |

The type of an event listener is determined by the interface it implements (or the superclass it extends).
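Both points can be illustrated in plain Java: extending a default no-op implementation lets a listener override only the callbacks it cares about, and the listener's kind follows from the interface it implements. In the sketch, `SimpleProcessListener`, `SimpleTaskListener`, `DefaultSimpleProcessListener`, `CompletionCounter` and `ListenerRegistry` are hypothetical stand-ins for jBPM types such as ProcessEventListener and DefaultProcessEventListener, and routing with `instanceof` is an assumption about how such classification can be done, not jBPM's source.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical listener contracts standing in for jBPM's listener interfaces.
interface SimpleProcessListener {
    void beforeProcessStarted(String processId);
    void afterProcessCompleted(String processId);
}

interface SimpleTaskListener {
    void afterTaskCompleted(String taskName);
}

// Default adapter: every callback is a no-op, so subclasses override only what they need.
class DefaultSimpleProcessListener implements SimpleProcessListener {
    @Override public void beforeProcessStarted(String processId) { }
    @Override public void afterProcessCompleted(String processId) { }
}

// Only interested in completions; inherits the no-op beforeProcessStarted.
class CompletionCounter extends DefaultSimpleProcessListener {
    int completed;

    @Override
    public void afterProcessCompleted(String processId) {
        completed++;
    }
}

// Routes each listener to the matching list based on the interface(s) it implements.
class ListenerRegistry {
    final List<SimpleProcessListener> processListeners = new ArrayList<>();
    final List<SimpleTaskListener> taskListeners = new ArrayList<>();

    void register(Object listener) {
        if (listener instanceof SimpleProcessListener) {
            processListeners.add((SimpleProcessListener) listener);
        }
        if (listener instanceof SimpleTaskListener) {
            taskListeners.add((SimpleTaskListener) listener);
        }
    }

    // Notifies every registered process listener that a process completed.
    void fireProcessCompleted(String processId) {
        for (SimpleProcessListener l : processListeners) {
            l.afterProcessCompleted(processId);
        }
    }
}
```

Because classification uses the type alone, `CompletionCounter` is treated as a process listener purely because its superclass implements the process listener interface; no extra annotation attribute is needed.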

3.5.4.3. Register event listener programmatically

The last-resort option is to obtain the DeploymentService and register listeners programmatically:

@Autowired
private SpringKModuleDeploymentService deploymentService;

...

@PostConstruct
public void configure() {
    deploymentService.registerProcessEventListener(new CustomProcessEventListener());
}

3.5.5. Custom REST endpoints

In many (if not all) cases, you will need to expose additional REST endpoints for your business application (in your service project). This can be achieved by creating a JAX-RS compatible class (with JAX-RS annotations). It will automatically be registered with the running service when the following scanning options are configured in your application's application.properties file:

cxf.jaxrs.classes-scan=true
cxf.jaxrs.classes-scan-packages=org.kie.server.springboot.samples.rest

The endpoint will be bound to the global REST API path defined in the cxf.path property.

An example of a custom endpoint can be found below:

package org.kie.server.springboot.samples.rest;

import javax.ws.rs.GET;
import javax.ws.rs.Path;
import javax.ws.rs.Produces;
import javax.ws.rs.core.MediaType;
import javax.ws.rs.core.Response;

// Bound under the cxf.path prefix, e.g. /rest/extra when cxf.path is /rest
@Path("extra")
public class AdditionalEndpoint {

    @GET
    @Produces({MediaType.APPLICATION_XML, MediaType.APPLICATION_JSON})
    public Response listContainers() {
        // Returns an empty 200 OK response; replace with your own logic
        return Response.ok().build();
    }
}

3.6. Deploy business application

Business applications are designed to run in almost any environment, but for production the usual target is a cloud-based runtime that provides scalability and operational efficiency.

The deployable components of a business application are its services. Every application consists of one or more services that are deployed in isolation and, in many cases, follow different release cycles.

3.6.1. OpenShift deployment

Business applications can be deployed to OpenShift Container Platform as easily as they are started locally, using the launch.sh/launch.bat scripts.

| You need either a local OpenShift installation (Minishift is a good choice for local use) or a remote installation that can be accessed over the network. |

First, log in to the OpenShift cluster:

oc login -u system:admin

Once you are logged in, the following output (or similar) should be displayed:

Logged into "https://192.168.64.2:8443" as "system:admin" using existing credentials.
You have access to the following projects and can switch between them with 'oc project <projectname>':
default
kube-public
kube-system
* myproject
openshift
openshift-infra
openshift-node
openshift-web-console
Using project "myproject".

To deploy your application to OpenShift Container Platform, go into the service project ({your business application name}-service) and invoke:

./launch.sh clean install -Popenshift,h2 for Linux/Unix

./launch.bat clean install -Popenshift,h2 for Windows

The launch script performs the build with the openshift profile (see pom.xml in the business assets project and the service project for details). The significant difference for OpenShift is that the business assets project generates an offline Maven repository containing the project itself and all its dependencies. This Maven repository is then included in the image, and Maven (used by the business automation capability) works in offline mode, meaning no attempt is made to access the Internet.

Launching the application on OpenShift...

--> Found image ef440f7 (15 seconds old) in image stream "myproject/business-application-service" under tag "1.0-SNAPSHOT" for "business-application-service:1.0-SNAPSHOT"

* This image will be deployed in deployment config "business-application-service"

* Ports 8090/tcp, 8778/tcp, 9779/tcp will be load balanced by service "business-application-service"

* Other containers can access this service through the hostname "business-application-service"

--> Creating resources ...

deploymentconfig "business-application-service" created

service "business-application-service" created

--> Success

Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:

'oc expose svc/business-application-service'

Run 'oc status' to view your app.

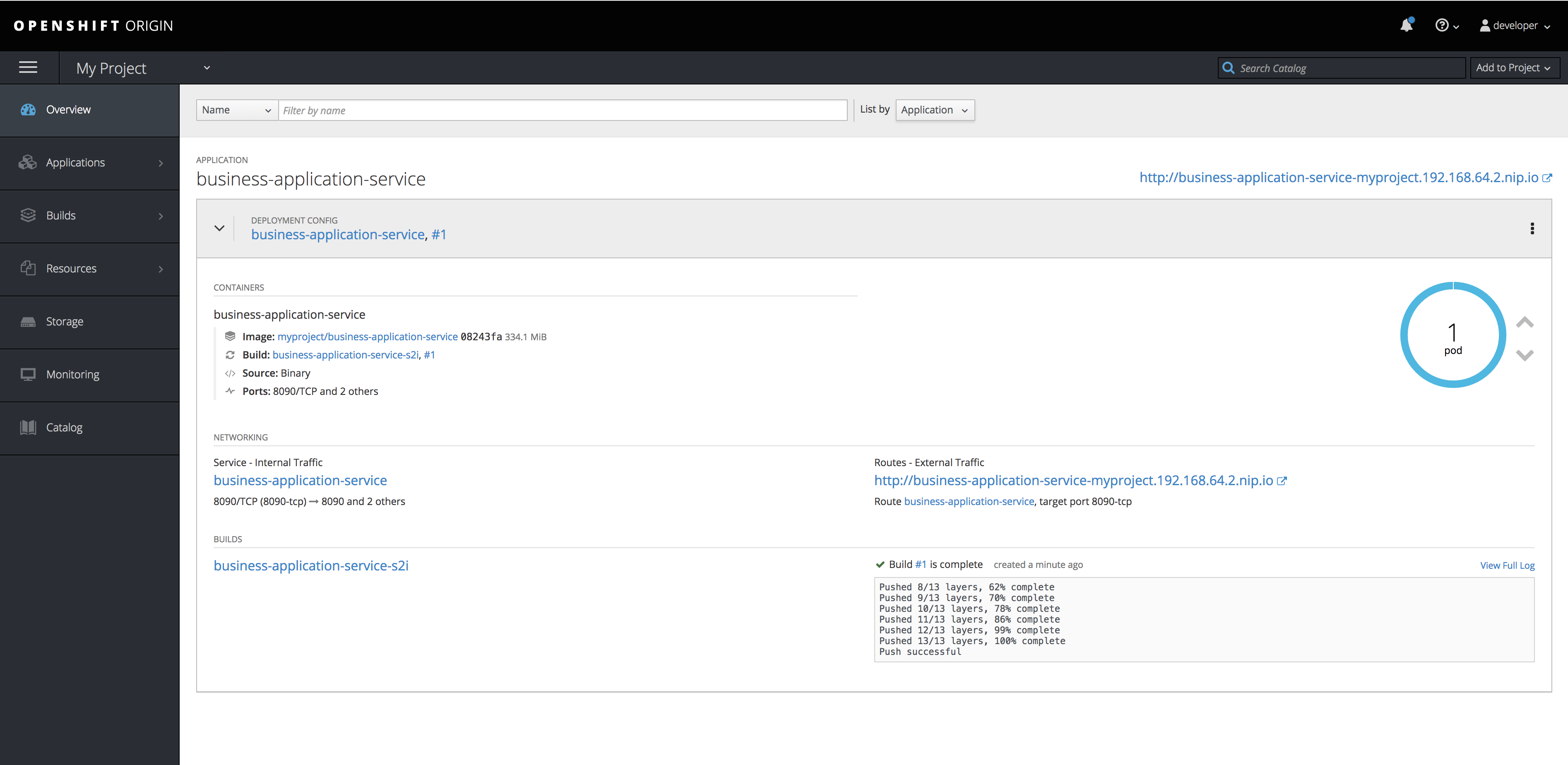

route "business-application-service" exposedYou can then go to OpenShift Web Console and look at the Overview of your project (myproject by default)

By clicking on the route url (in this case http://business-application-service-myproject.192.168.64.2.nip.io)

you can go to the application already deployed and running.

3.6.2. Docker deployment

By default, business applications are configured with the option to deploy the service as a Docker container.

This is done in a very similar way to launching the service locally, via the launch.sh/launch.bat script.

| You must have Docker installed on your machine to make this work! |

To deploy your application as a Docker container, go into the service project ({your business application name}-service) and invoke:

./launch.sh clean install -Pdocker,h2 for Linux/Unix

./launch.bat clean install -Pdocker,h2 for Windows

| When building with Docker, the proper database profile must be selected as well. This is done via -Pdocker,{db} so that the image and the application get the proper JDBC driver. |

The launch script performs the build with the docker profile (see pom.xml in the business assets project and the service project for details). The significant difference for Docker is that the business assets project generates an offline Maven repository containing the project itself and all its dependencies. This Maven repository is then included in the Docker image, and Maven (used by the business automation capability) works in offline mode, meaning no attempt is made to access the Internet.

Once the build is complete, the launch script creates the container and starts it. This happens once the following line is printed to the console:

Launching the application as docker container...

d40e4cdb662d3b1d9ddee27c5a843be31cb6e7dc4936b0fc1937ce8e48f440ae

The second line is the container ID, which can later be used to interact with the container, for instance to follow its logs:

docker logs -f d40e4cdb662d3b1d9ddee27c5a843be31cb6e7dc4936b0fc1937ce8e48f440ae

The business application will be accessible on the default port, 8090. Go to http://localhost:8090 to see your application running as a Docker container.

3.6.3. Using external database

Currently, business applications that require an external database must have that database provisioned in advance, before the application is launched, and properly configured in the application configuration files.

Future releases will improve this by relying on Docker Compose/OpenShift templates.

3.7. Tutorials

3.7.1. My First Business Application

3.7.1.1. What will you do

You will build a simple but fully functional business application. Once it is built, you will explore the basic services exposed by the application.

3.7.1.2. What do you need

-

About 10 minutes of your time

-

Java (JDK) 8 or later

-

Maven 3.5.x

-

Access to the Internet

3.7.1.3. What should I do

The easiest way to get started with business applications is to generate one.

Go to start.jbpm.org and click the Generate default business application button.

This generates and downloads a business-application.zip file that consists of three projects:

-

business-application-model

-

business-application-kjar

-

business-application-service

Unzip the business-application.zip file into the desired location and go into the business-application-service directory. There you will find launch scripts (for both Linux/Unix and Windows).

./launch.sh clean install for Linux/Unix

./launch.bat clean install for Windows

Execute the one applicable to your operating system and wait for it to finish.

| It might take quite some time (depending on your network), as it downloads the dependencies required to execute both the build and the application itself. |

3.7.1.4. Results

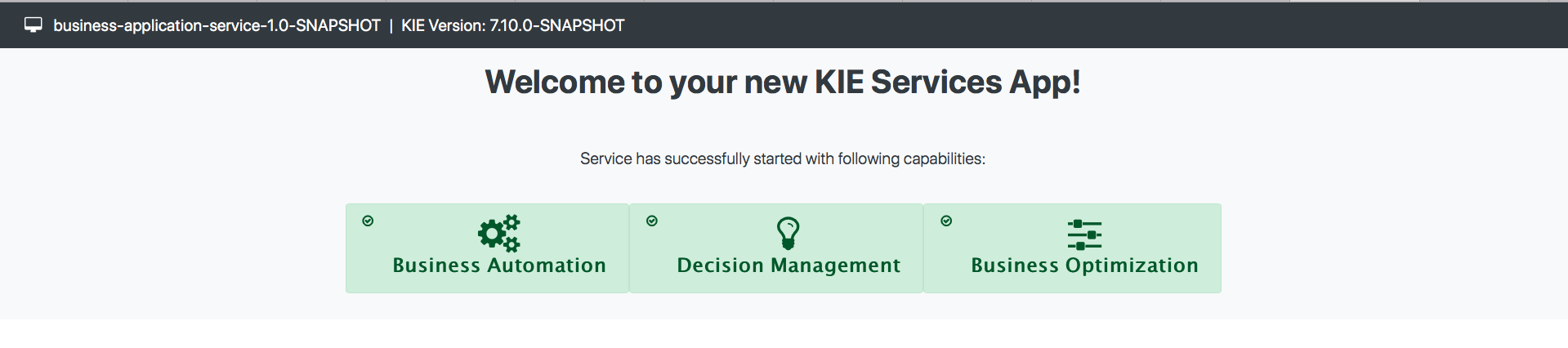

Once the build and launch are complete, open http://localhost:8090 in your browser to see your first business application up and running.

It presents a welcome screen whose main purpose is to verify that the application started successfully.

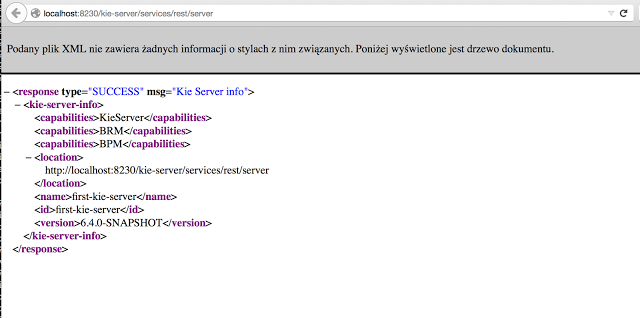

You can point the browser to http://localhost:8090/rest/server to see the actual Business Automation capability services.

| By default, all REST endpoints (URL pattern /rest/*) are secured and require authentication. The default user that can be used to log in is user with password user. |
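As a sketch of calling the secured service from Java, the snippet below requests http://localhost:8090/rest/server using HTTP Basic authentication with the default user/user credentials and asks for JSON through the Accept header. It assumes Java 11 or later for java.net.http, and `KieServerInfoClient` is a hypothetical class name. `buildRequest` only constructs the request; `main` actually sends it and therefore needs the application to be running locally.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

class KieServerInfoClient {

    // Builds a GET request for {baseUrl}/rest/server with Basic credentials and a JSON Accept header.
    static HttpRequest buildRequest(String baseUrl, String user, String password) {
        String token = Base64.getEncoder()
                .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));
        return HttpRequest.newBuilder(URI.create(baseUrl + "/rest/server"))
                .header("Authorization", "Basic " + token)
                .header("Accept", "application/json")
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = buildRequest("http://localhost:8090", "user", "user");
        // Sending requires the business application to be up on port 8090.
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

Changing the Accept value is all that is needed to receive the same service details in one of the other supported formats.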

The Business Automation service supports three data formats:

-

XML (JAXB based)

-

JSON

-

XML (XStream based)

To display Business Automation capability service details in different format set HTTP headers

-

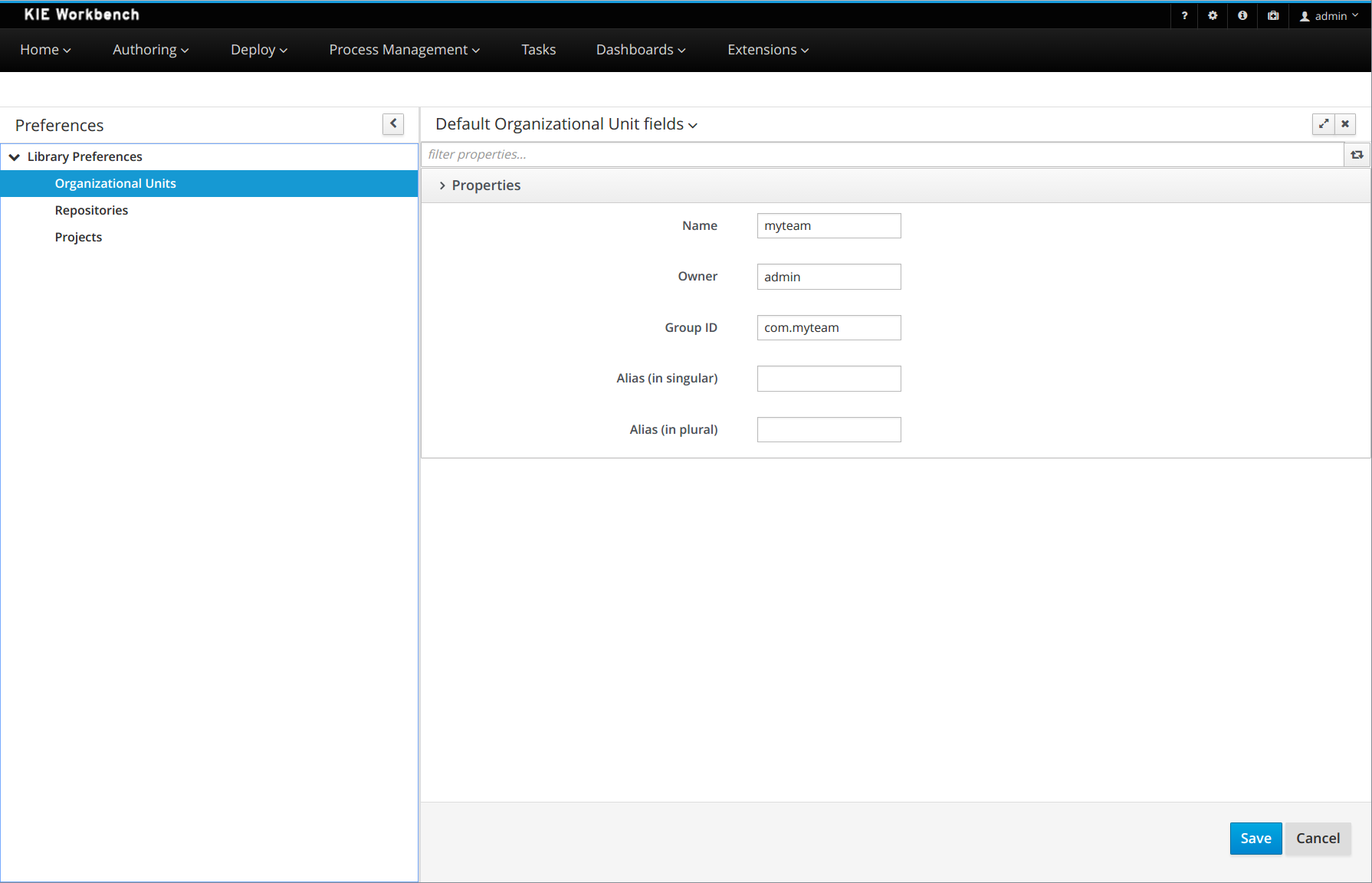

Accept: application/json for JSON format