- I. Getting Started

- 1. Overview

- 2. Getting Started

- 3. jBPM Installer

- 4. Examples

- II. jBPM Core

- 5. Core Engine API

- 6. Processes

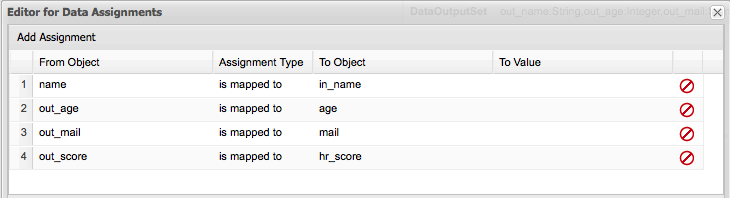

- 7. Human Tasks

- 8. Persistence and Transactions

- III. Workbench

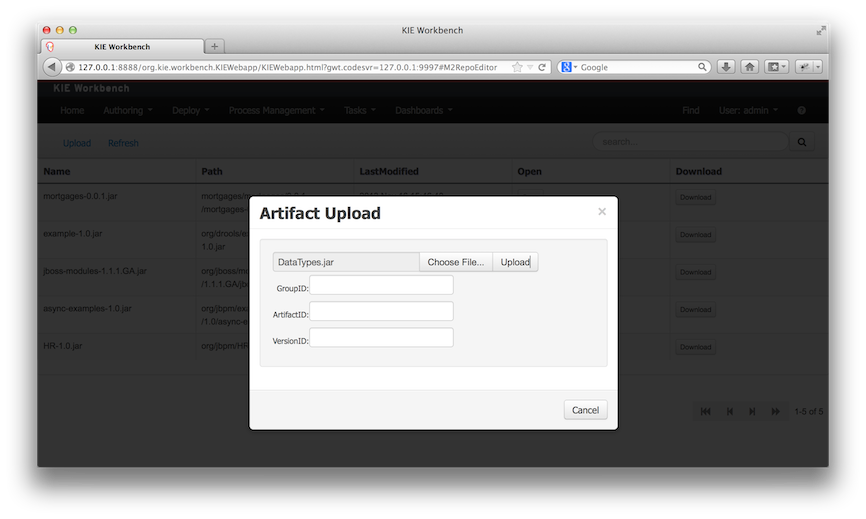

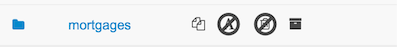

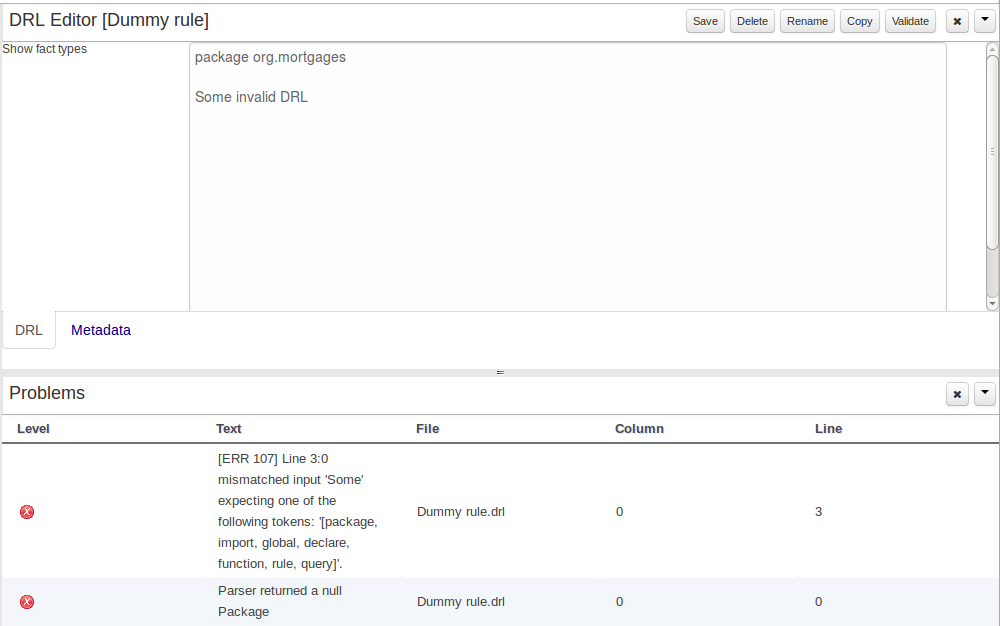

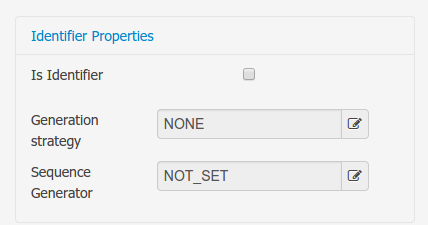

- 9. Workbench

- 10. Workbench Integration

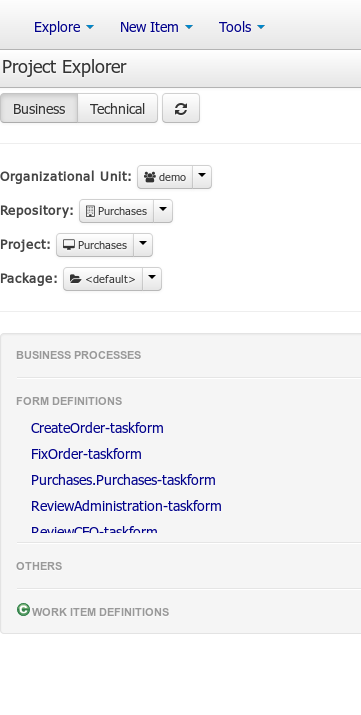

- 11. Workbench High Availability

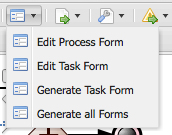

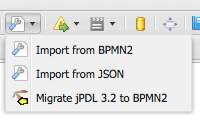

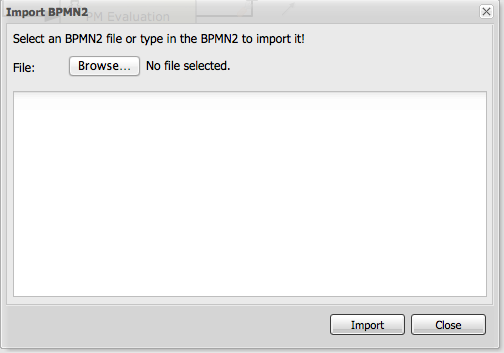

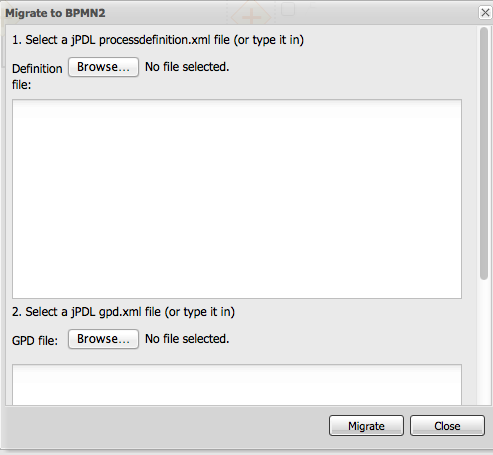

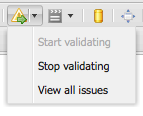

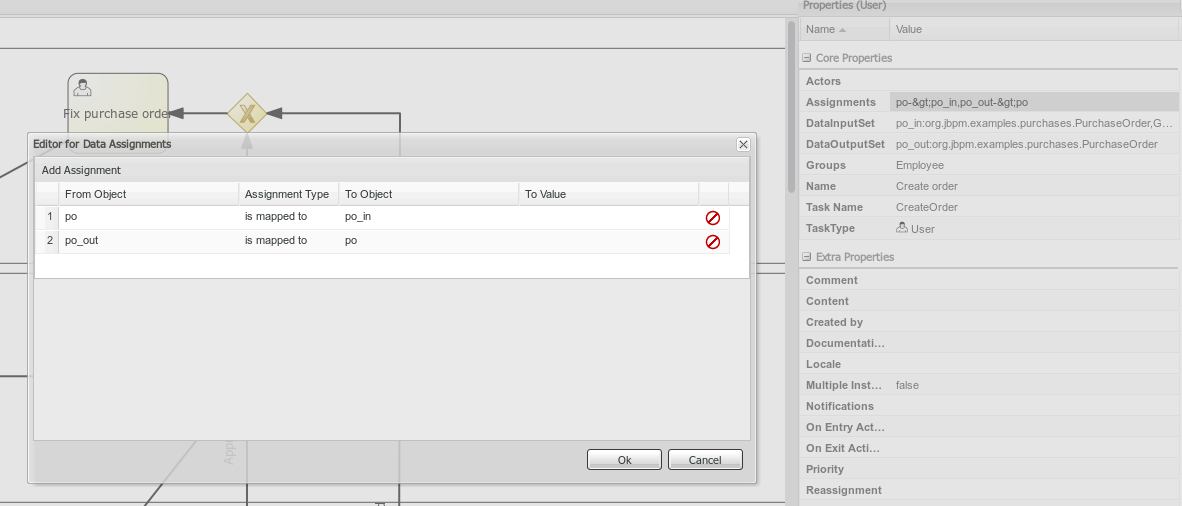

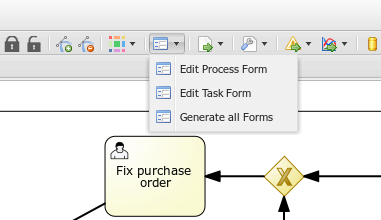

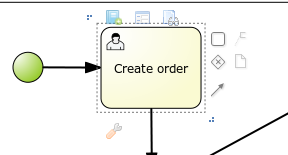

- 12. Designer

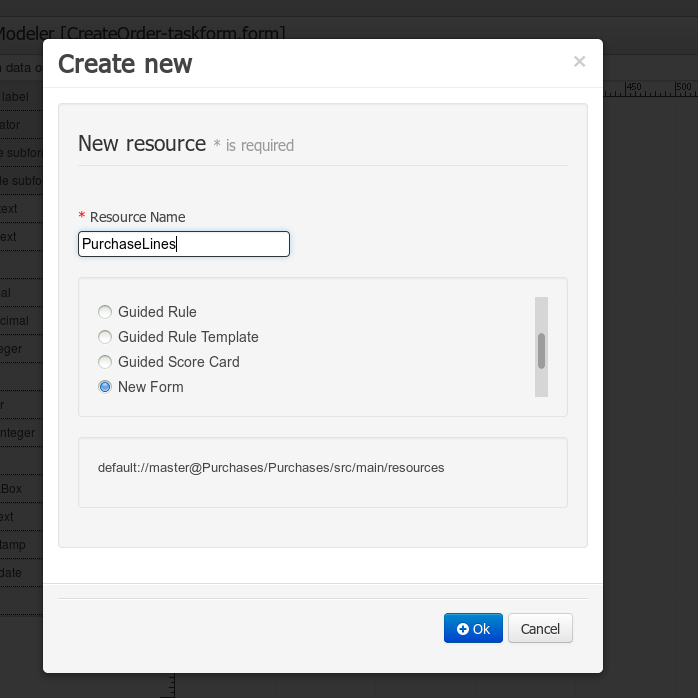

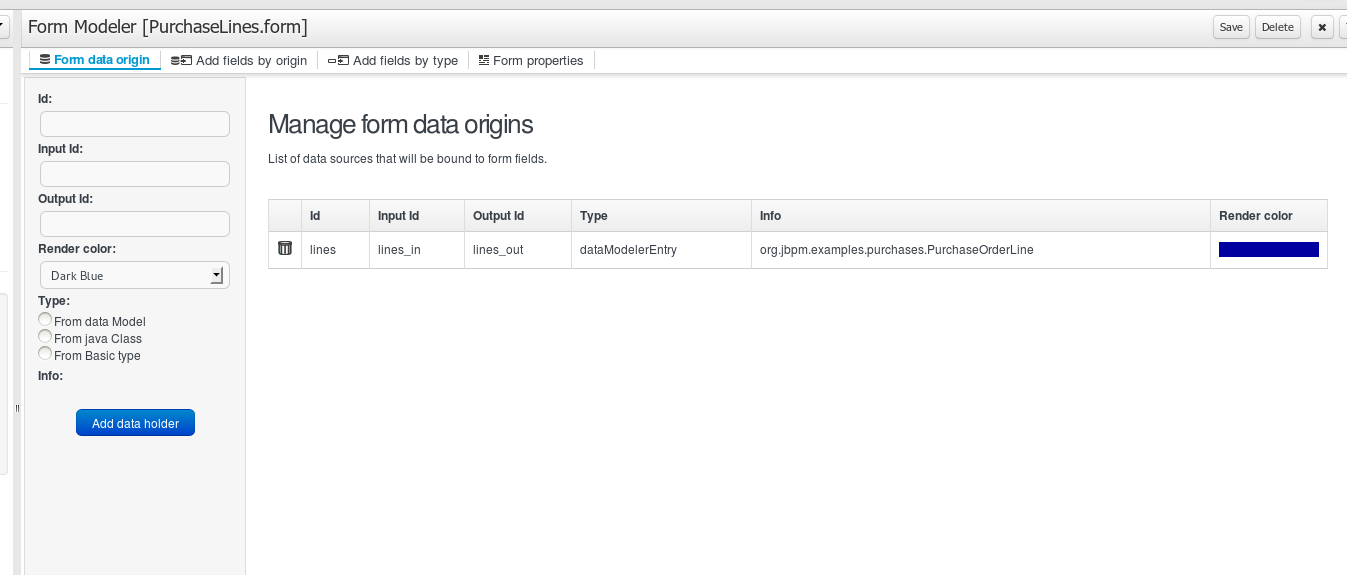

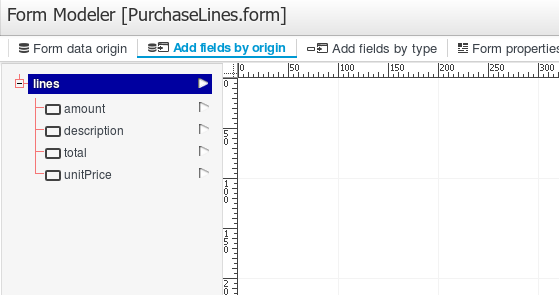

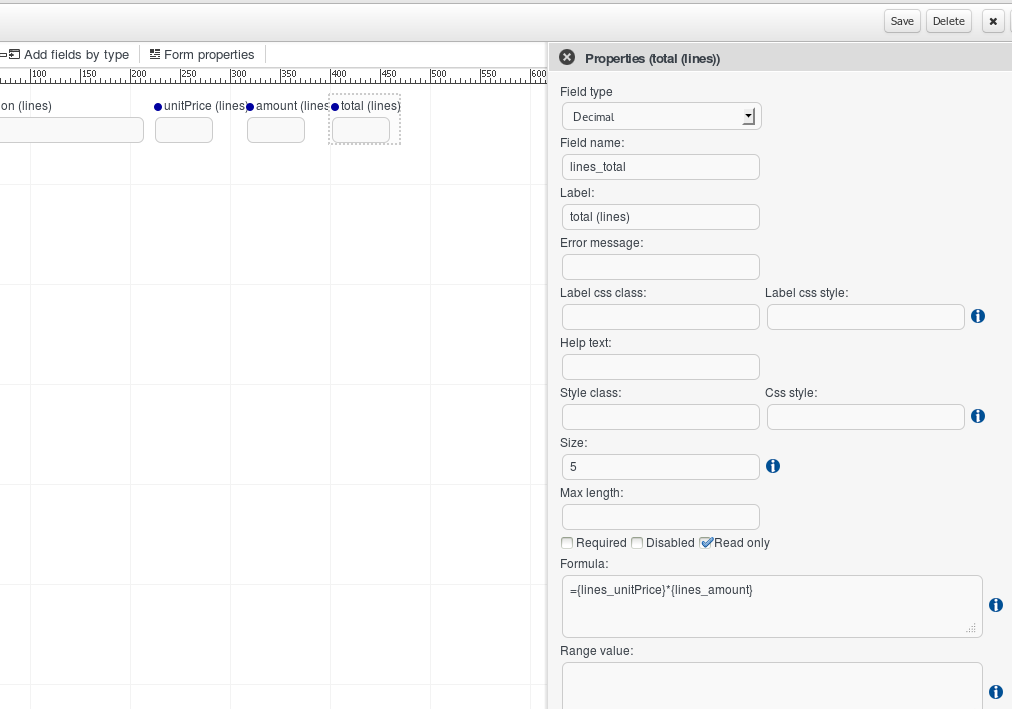

- 13. Forms

- 14. Runtime Management

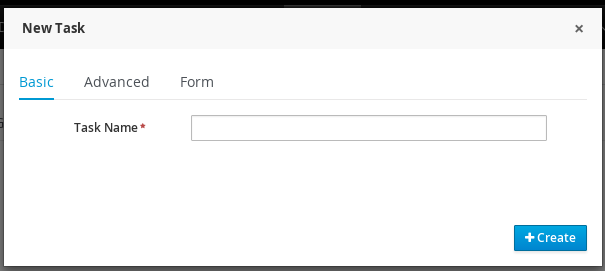

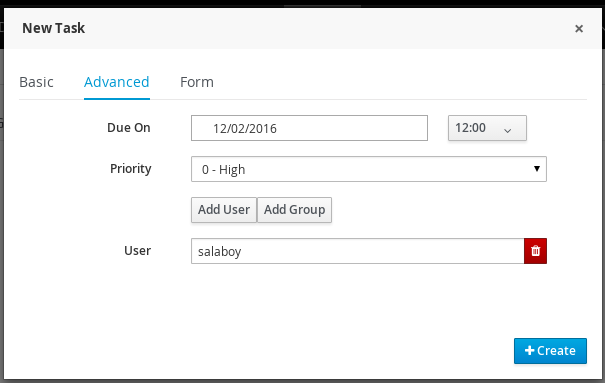

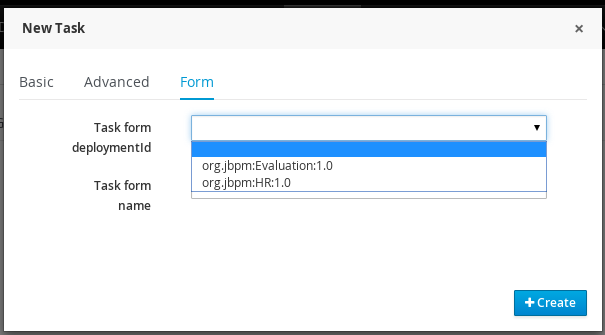

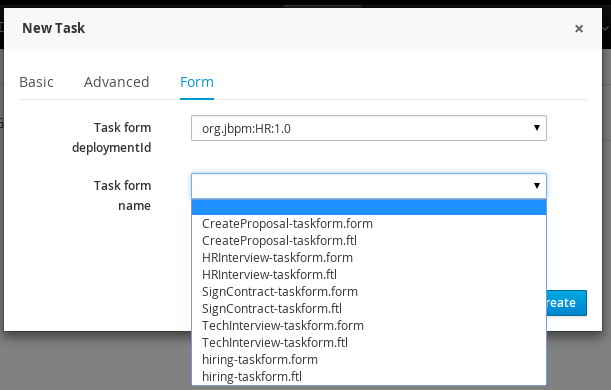

- 15. Process and Task Management

- 16. Business Activity Monitoring

- 17. Remote API

- IV. Eclipse

- V. Integration

- VI. Advanced Topics

- 25. Release Notes

Introduction and getting started with jBPM

Table of Contents

- 1. Overview

- 2. Getting Started

- 3. jBPM Installer

- 4. Examples

jBPM is a flexible Business Process Management (BPM) Suite. It is light-weight, fully open-source (distributed under Apache license) and written in Java. It allows you to model, execute, and monitor business processes throughout their life cycle.

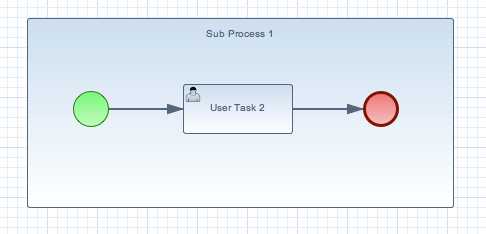

A business process allows you to model your business goals by describing the steps that need to be executed to achieve those goals, and the order of those goals are depicted using a flow chart. This process greatly improves the visibility and agility of your business logic. jBPM focuses on executable business processes, which are business processes that contain enough detail so they can actually be executed on a BPM engine. Executable business processes bridge the gap between business users and developers as they are higher-level and use domain-specific concepts that are understood by business users but can also be executed directly.

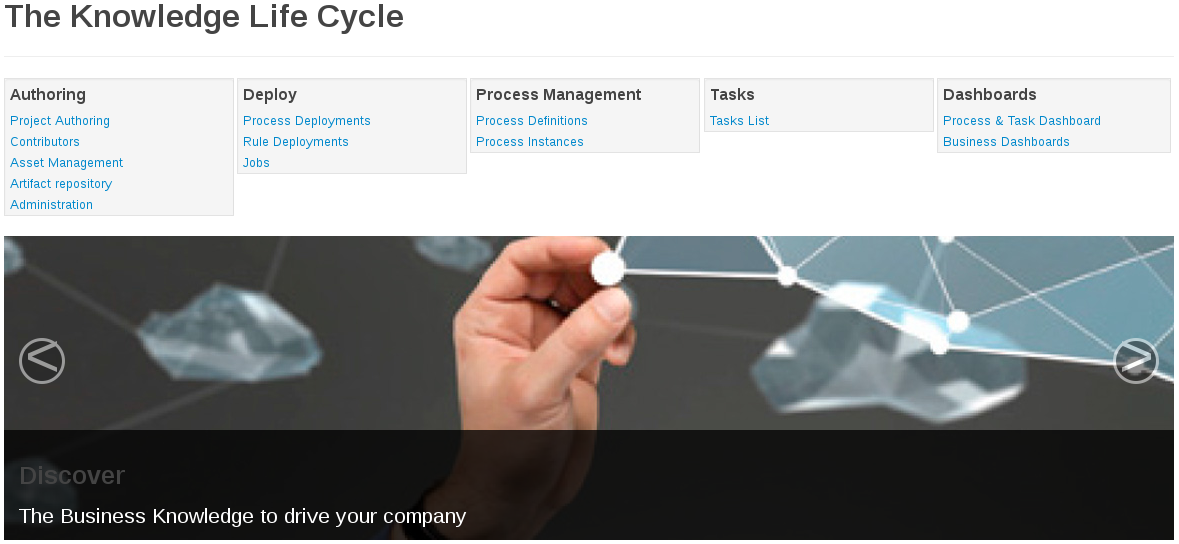

Business processes need to be supported throughout their entire life cycle: authoring, deployment, process management and task lists, and dashboards and reporting.

The core of jBPM is a light-weight, extensible workflow engine written in pure Java that allows you to execute business processes using the latest BPMN 2.0 specification. It can run in any Java environment, embedded in your application or as a service.

On top of the core engine, a lot of features and tools are offered to support business processes throughout their entire life cycle:

- Pluggable human task service based on WS-HumanTask for including tasks that need to be performed by human actors.

- Pluggable persistence and transactions (based on JPA / JTA).

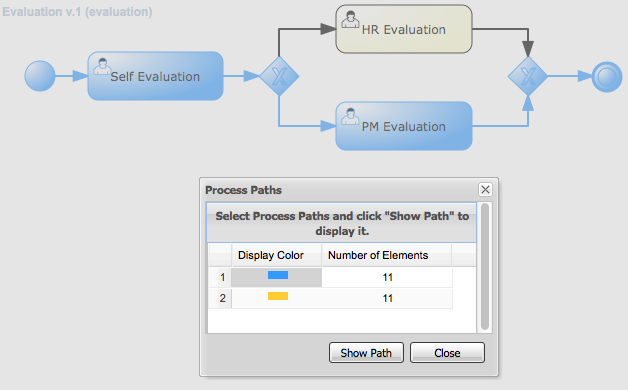

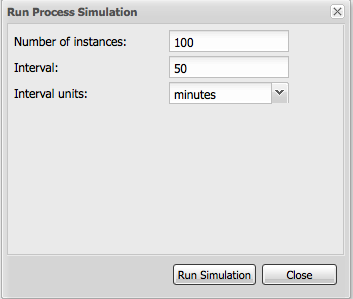

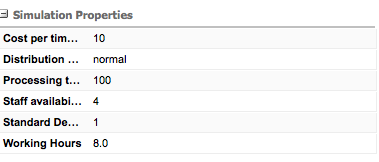

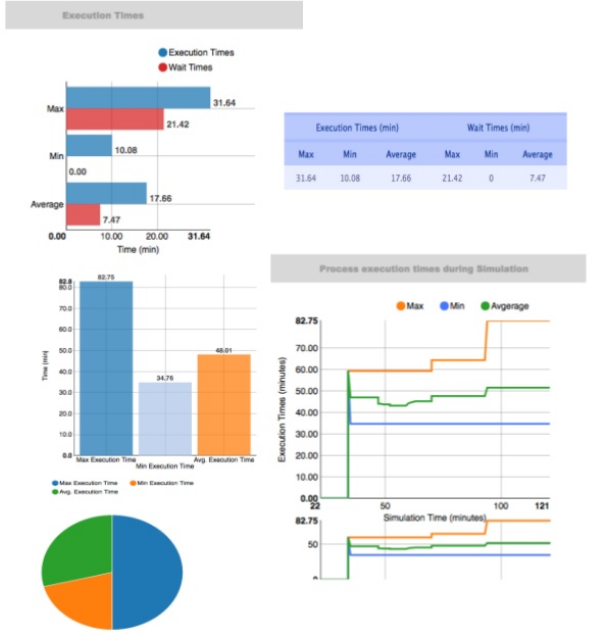

- Web-based process designer to support the graphical creation and simulation of your business processes (drag and drop).

- Web-based data modeler and form modeler to support the creation of data models and process and task forms

- Web-based, customizable dashboards and reporting

- All combined in one web-based workbench, supporting the complete BPM life cycle:

- Modeling and deployment - author your processes, rules, data models, forms and other assets

- Execution - execute processes, tasks, rules and events on the core runtime engine

- Runtime Management - work on assigned task, manage process instances, etc

- Reporting - keep track of the execution using Business Activity Monitoring capabilities

- Eclipse-based developer tools to support the modeling, testing and debugging of processes

- Remote API to process engine as a service (REST, JMS, Remote Java API)

- Integration with Maven, Spring, OSGi, etc.

BPM creates the bridge between business analysts, developers and end users by offering process management features and tools in a way that both business users and developers like. Domain-specific nodes can be plugged into the palette, making the processes more easily understood by business users.

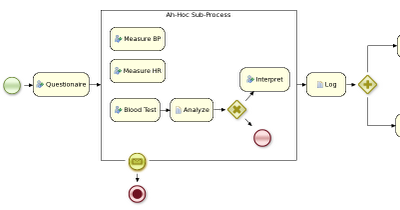

jBPM supports adaptive and dynamic processes that require flexibility to model complex, real-life situations that cannot easily be described using a rigid process. We bring control back to the end users by allowing them to control which parts of the process should be executed; this allows dynamic deviation from the process.

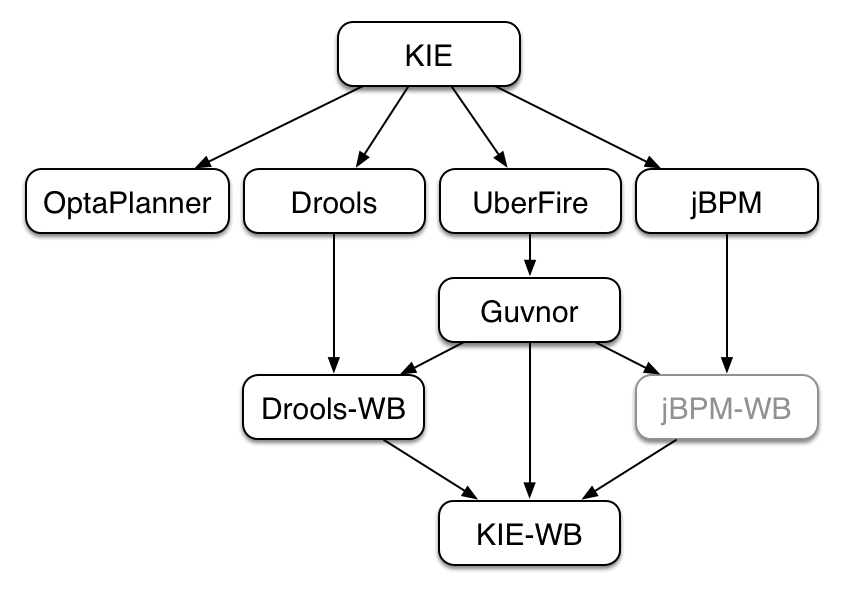

jBPM is not just an isolated process engine. Complex business logic can be modeled as a combination of business processes with business rules and complex event processing. jBPM can be combined with the Drools project to support one unified environment that integrates these paradigms where you model your business logic as a combination of processes, rules and events.

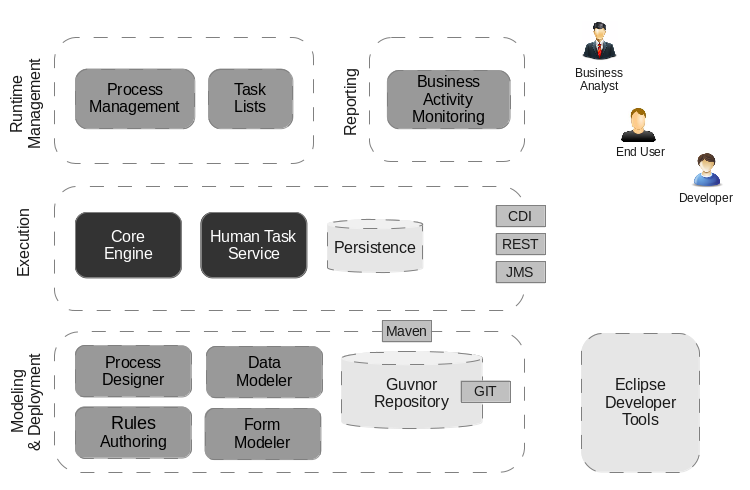

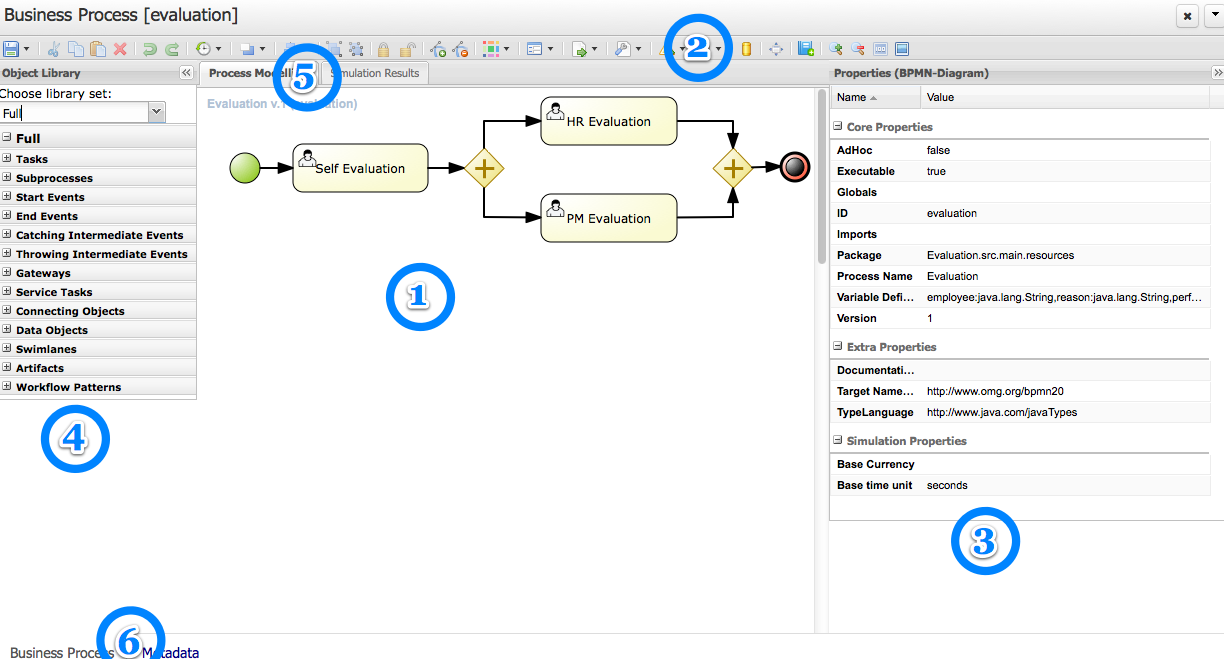

This figure gives an overview of the different components of the jBPM project.

- The core engine is the heart of the project and allows you to

execute business processes in a flexible manner. It is a pure Java component that you can

choose to embed as part of your application or deploy it as a service and connect

to it through the web-based UI or remote APIs.

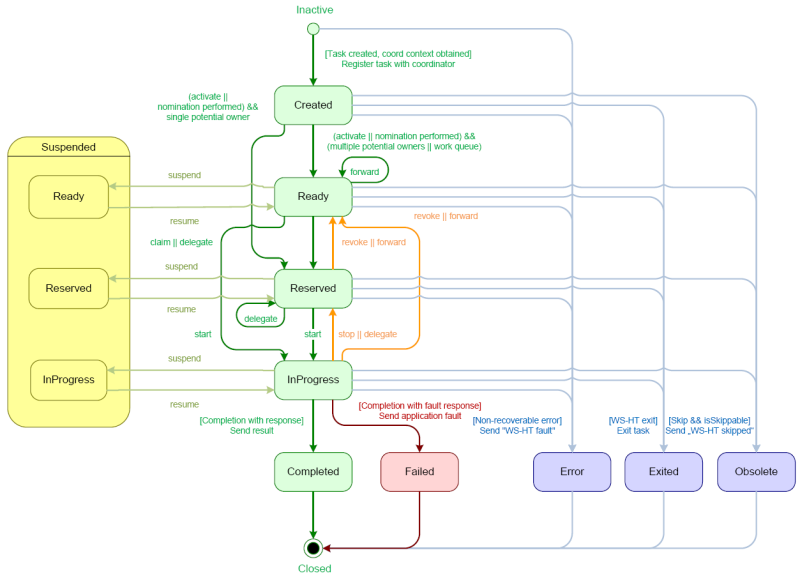

- An optional core service is the human task service that will take care of the human task life cycle if human actors participate in the process.

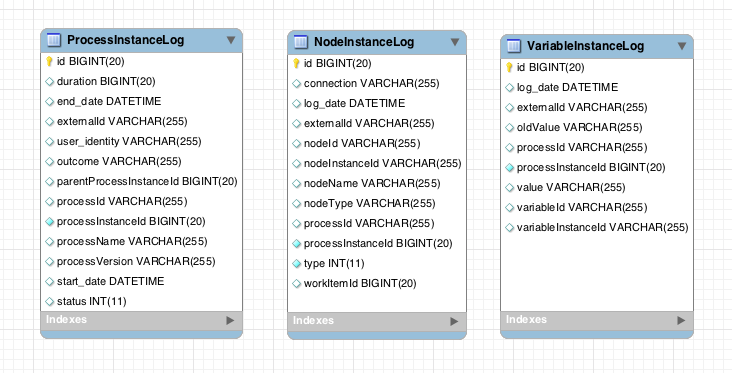

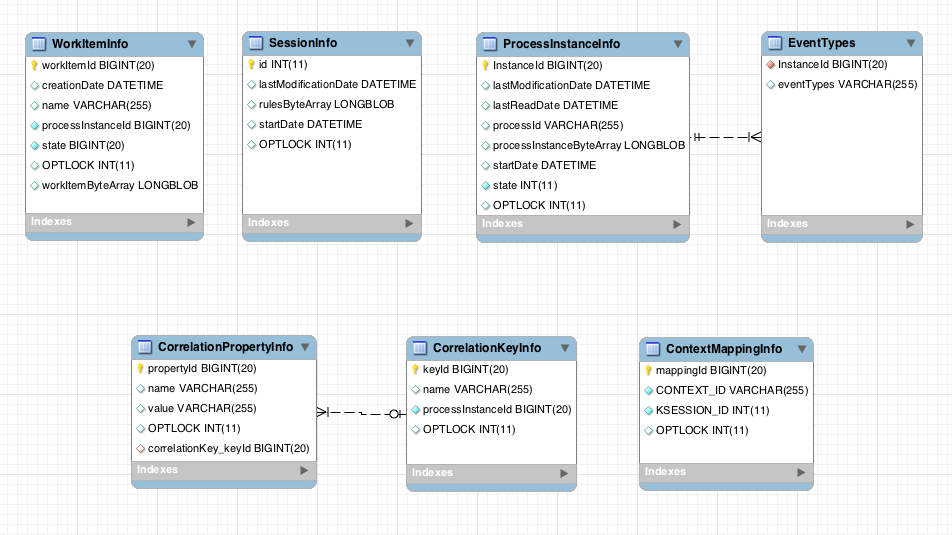

- Another optional core service is runtime persistence; this will persist the state of all your process instances and log audit information about everything that is happening at runtime.

- Applications can connect to the core engine by through its Java API or as a set of CDI services, but also remotely through a REST and JMS API.

- Web-based tools allows you to model, simulate and deploy your processes and other

related artifacts (like data models, forms, rules, etc.):

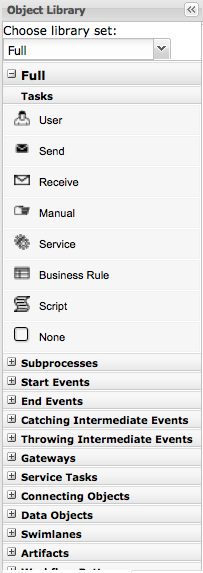

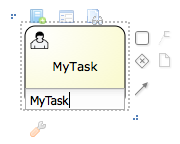

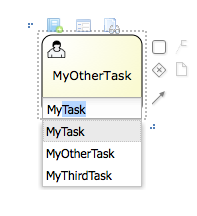

- The process designer allows business users to design and simulate business processes in a web-based environment.

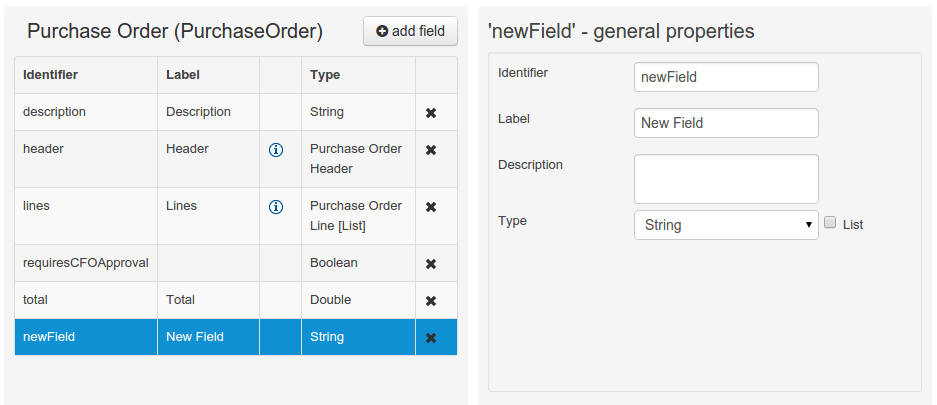

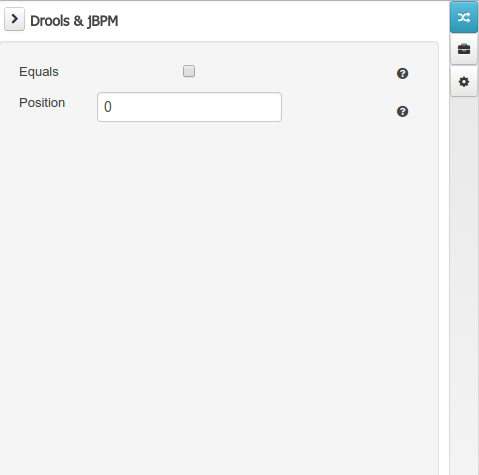

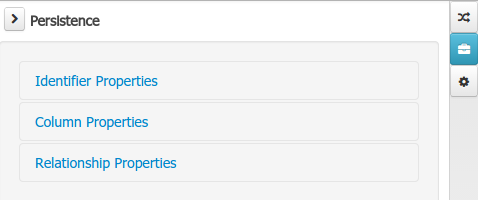

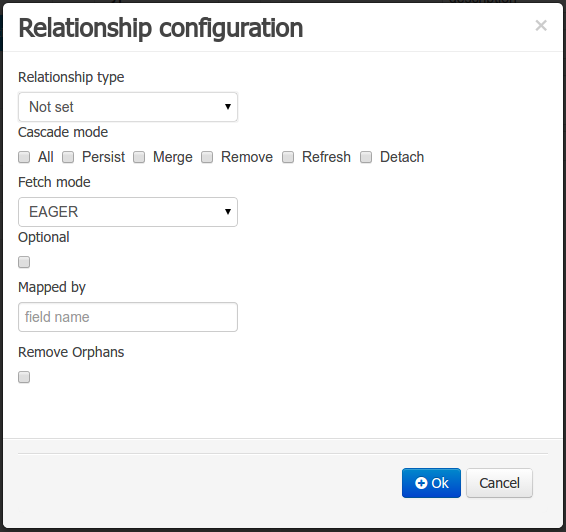

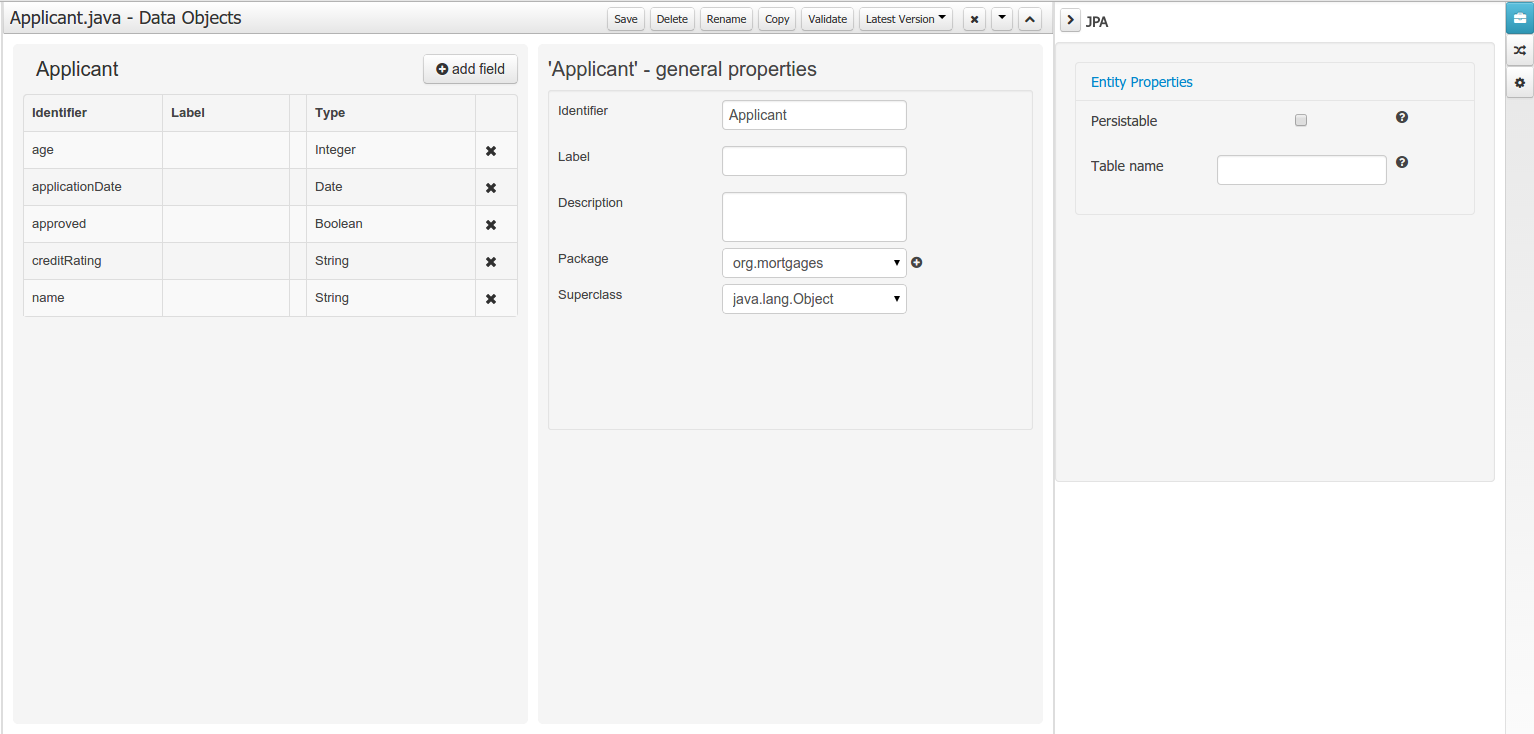

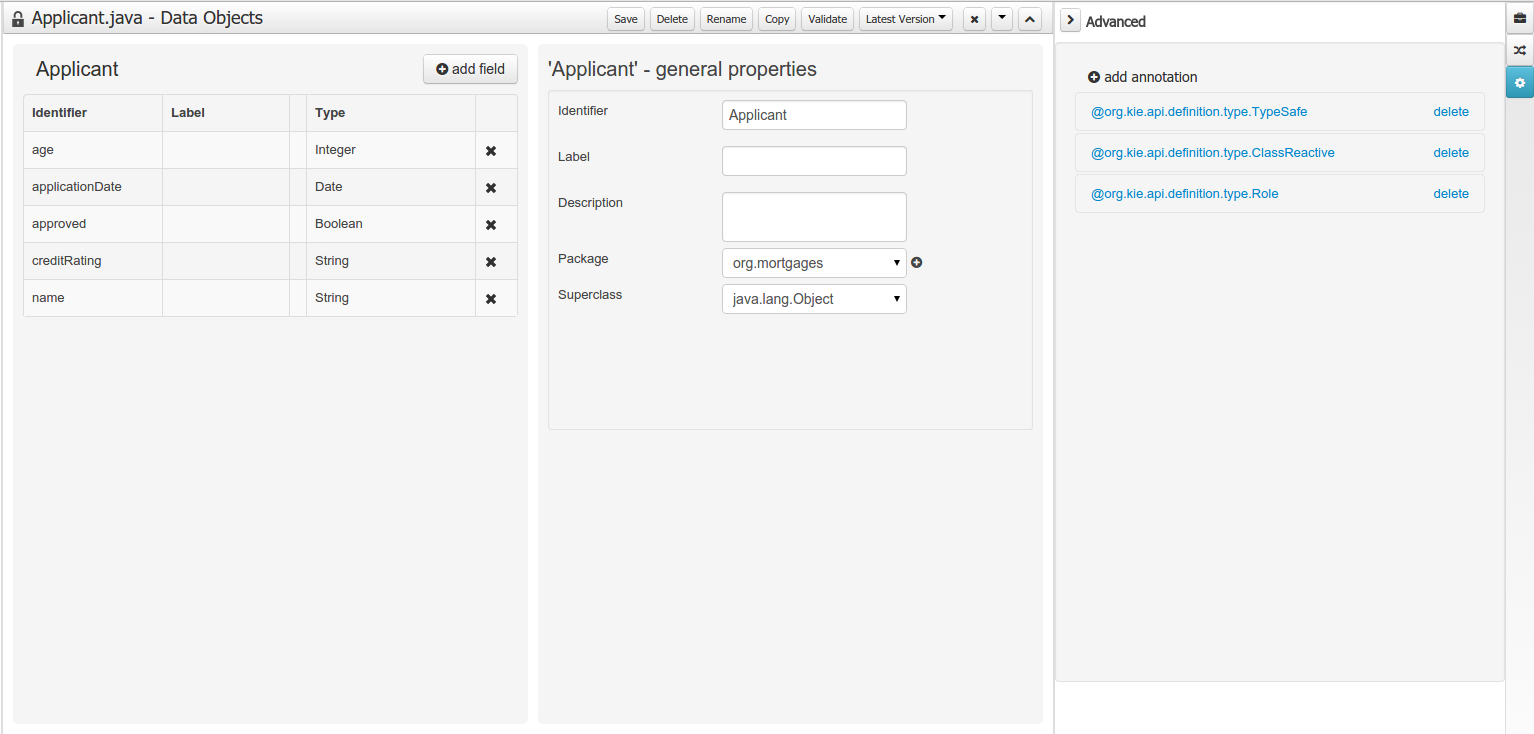

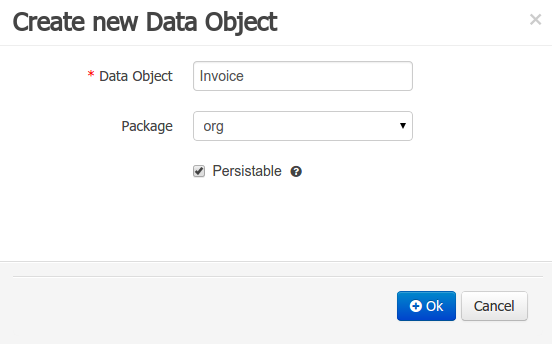

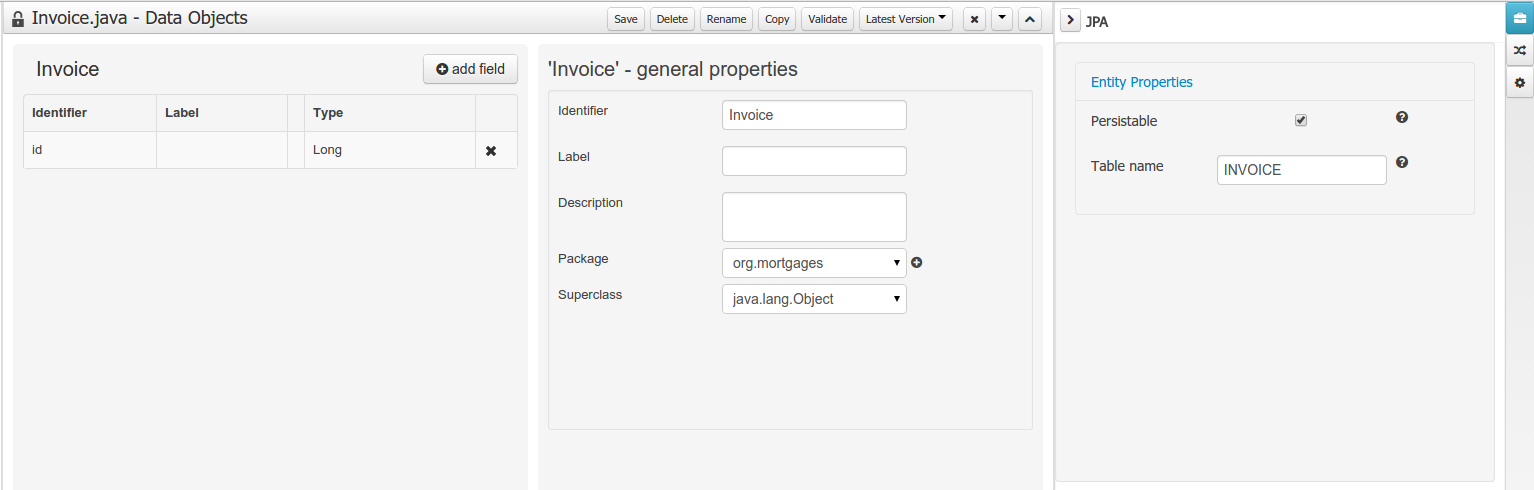

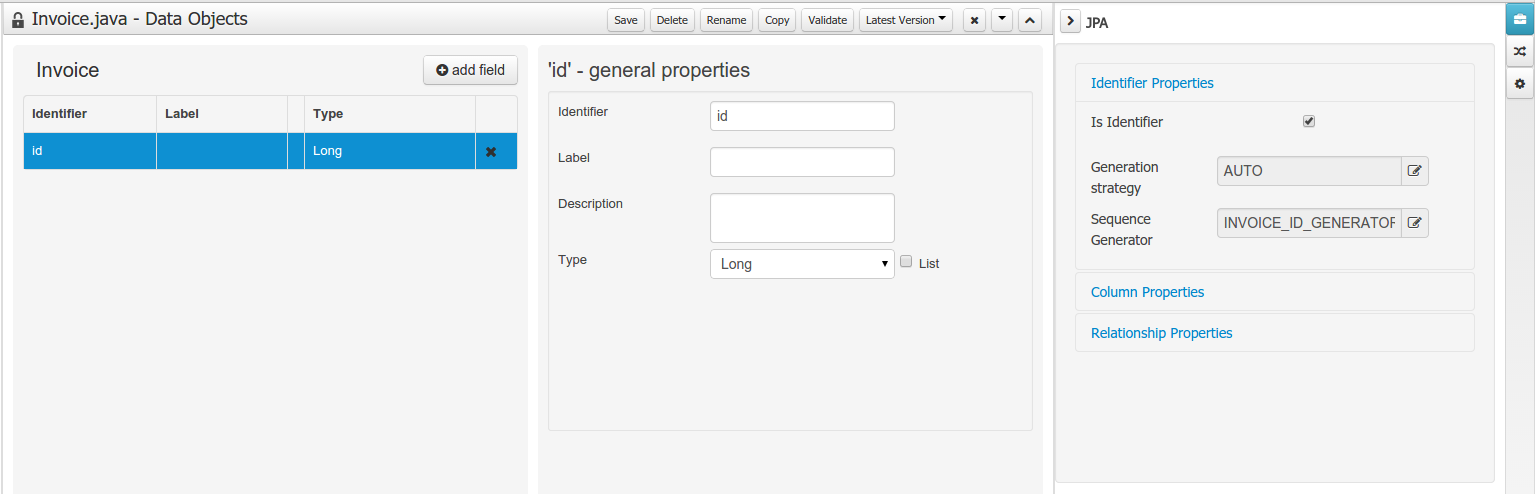

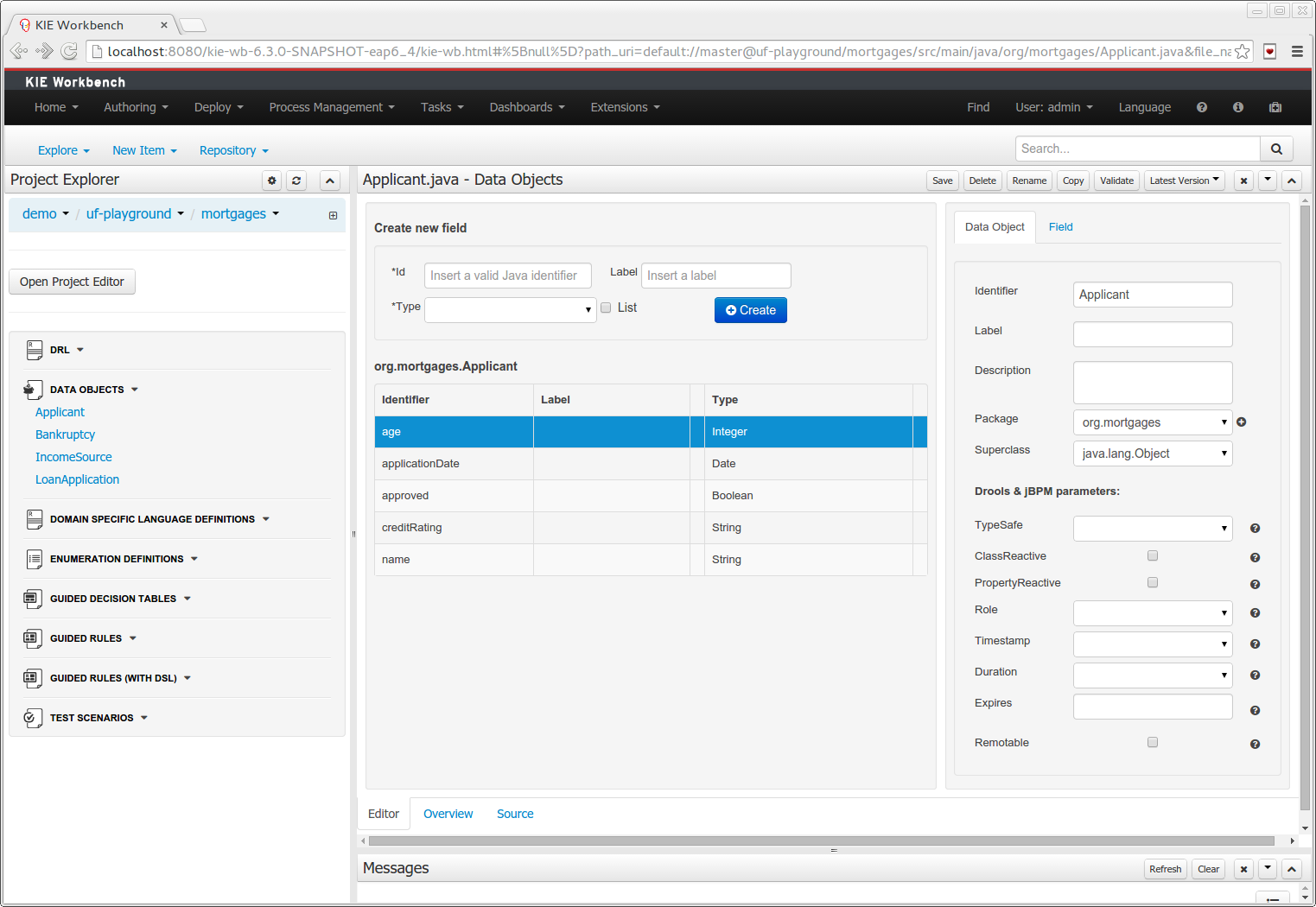

- The data modeler allows non-technical users to view, modify and create data models for use in your processes.

- A web-based form modeler also allows you to create, generate or edit forms related to your processes (to start the process or to complete one of the user tasks).

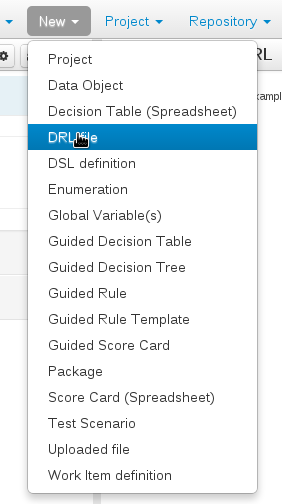

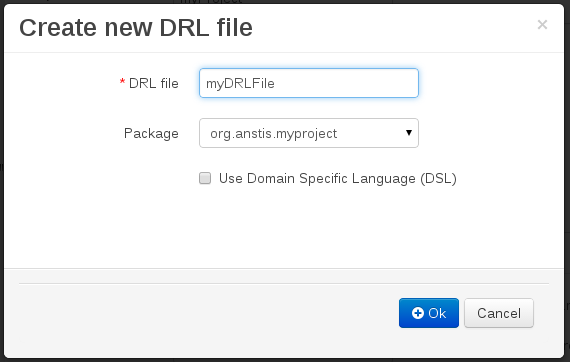

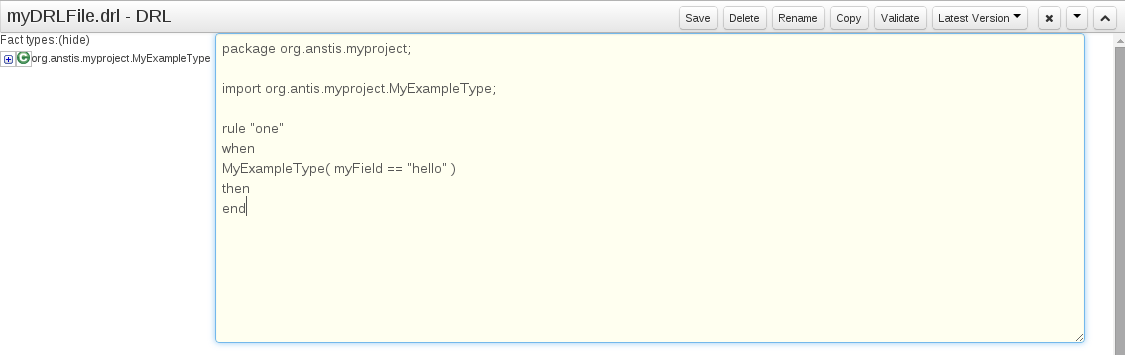

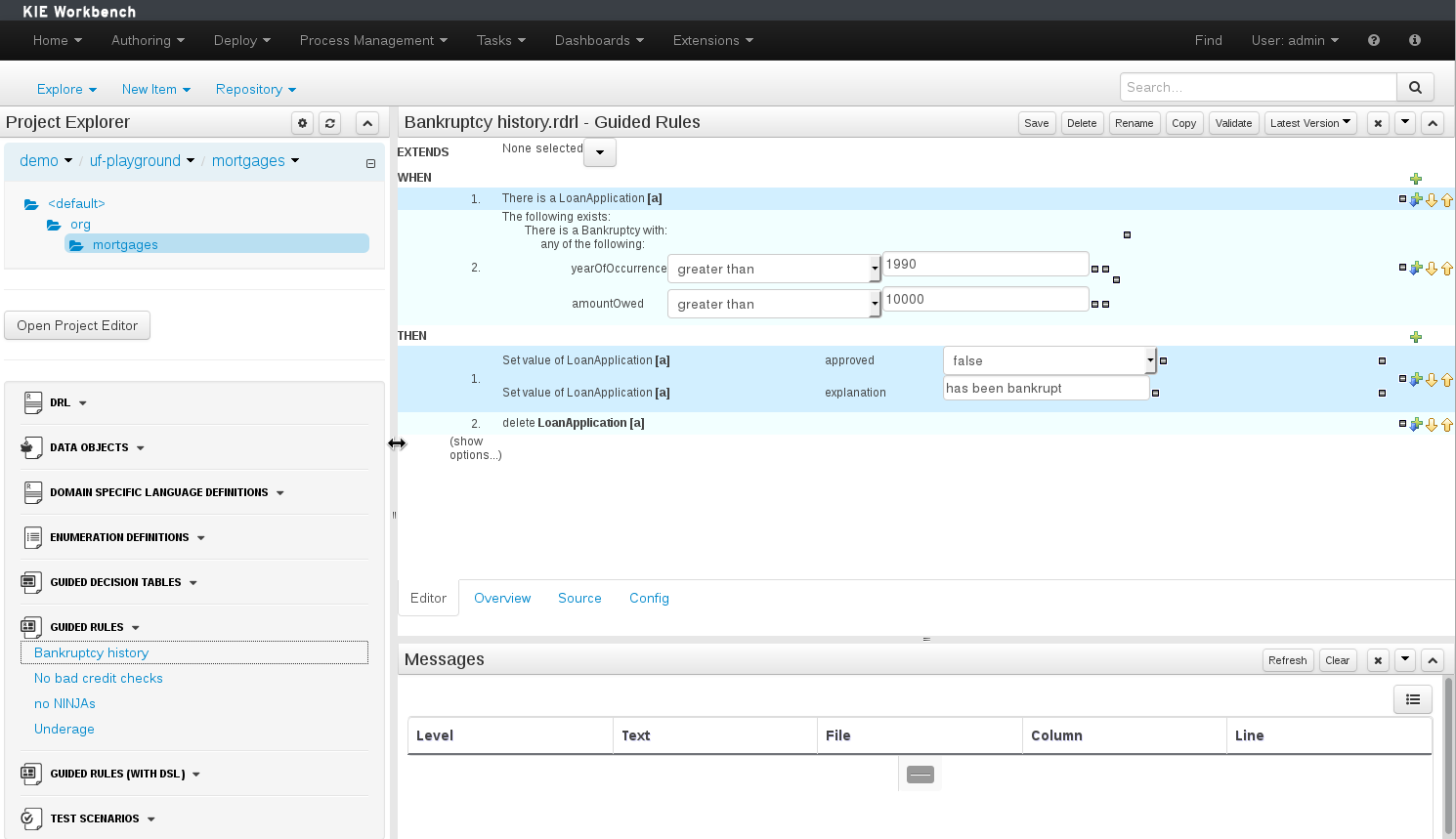

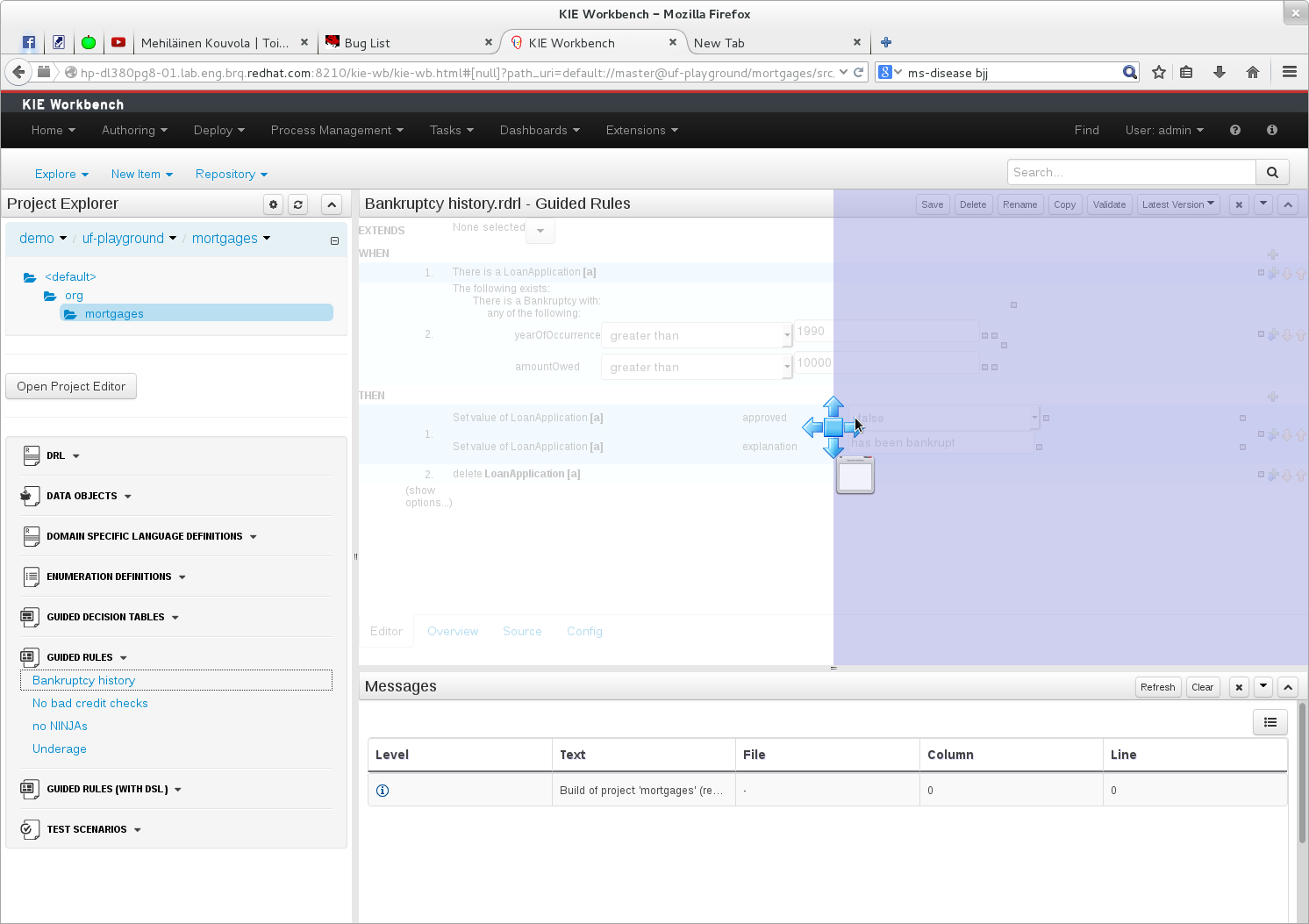

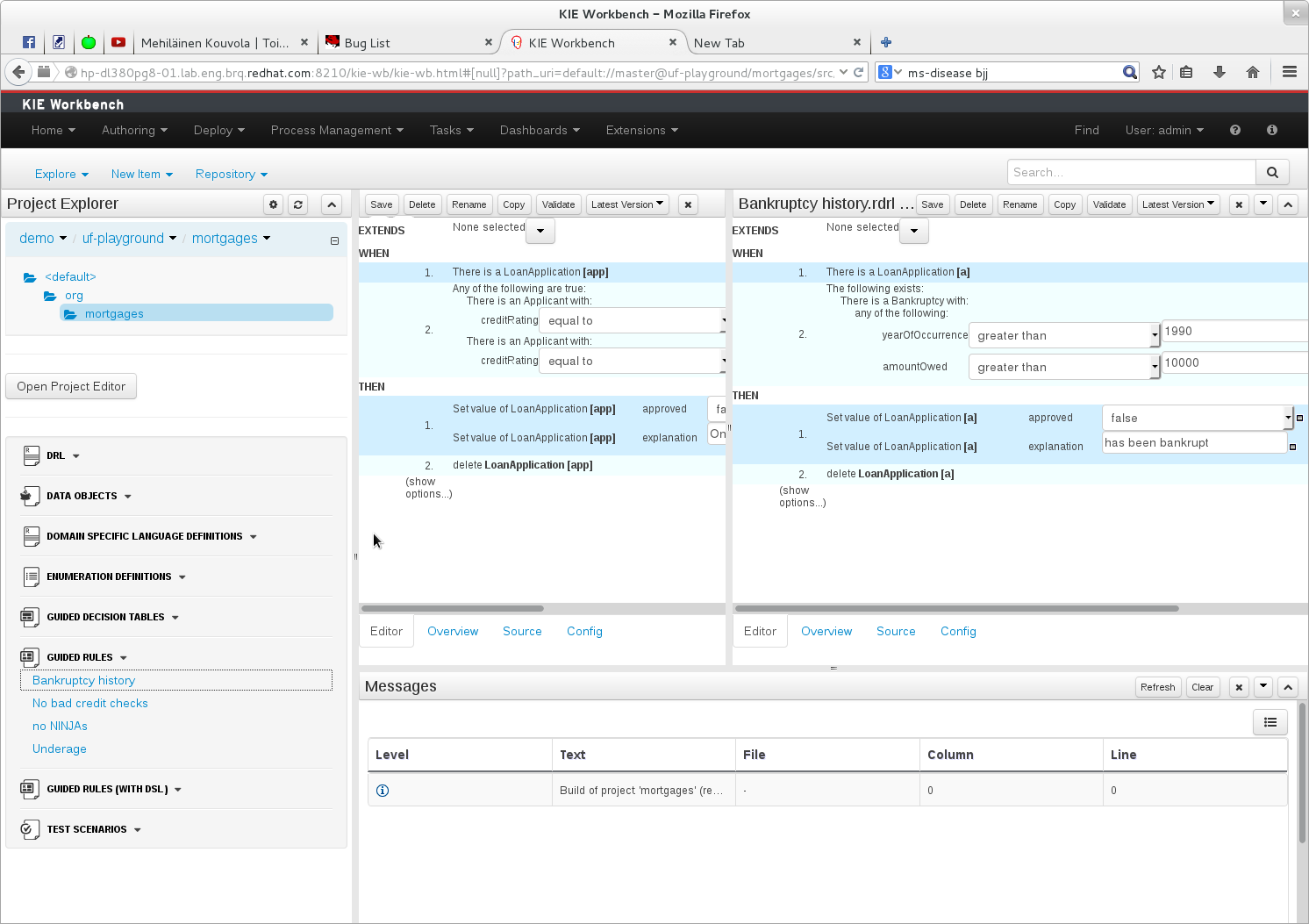

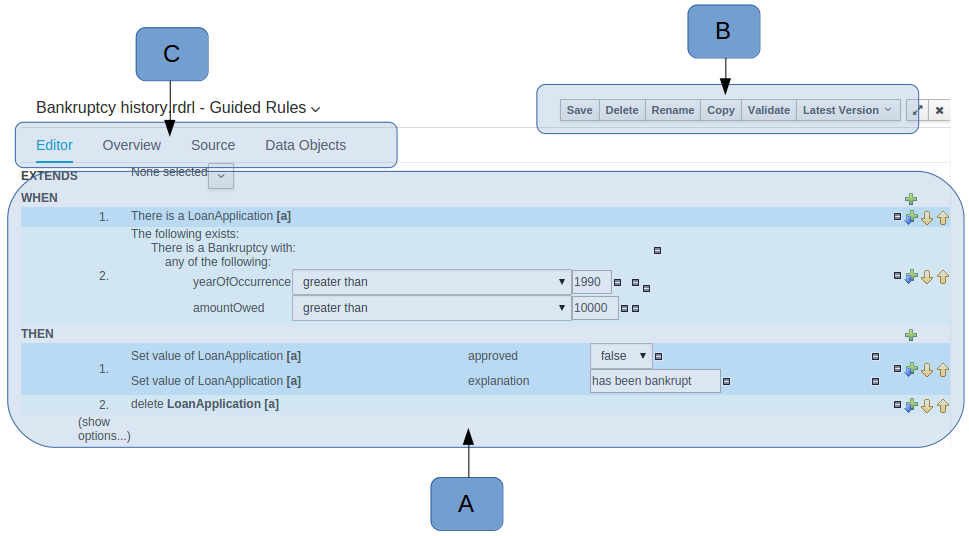

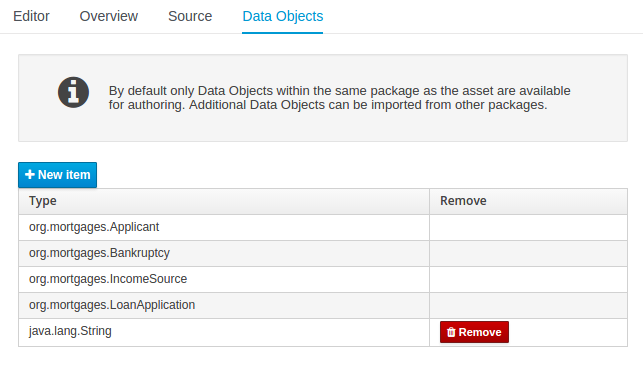

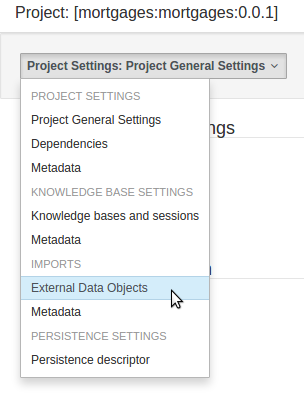

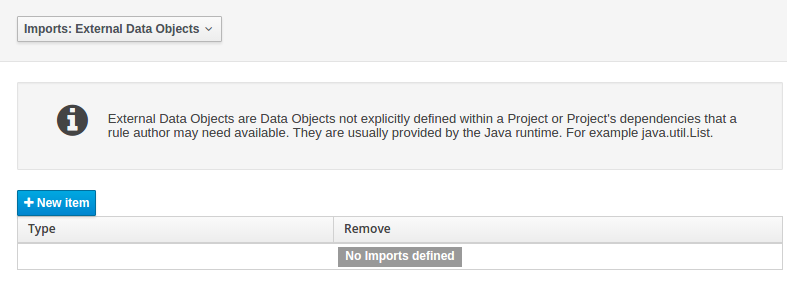

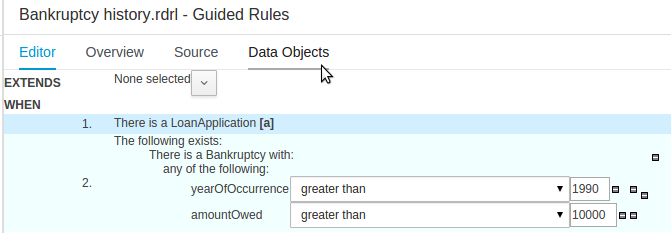

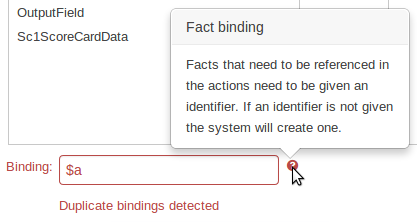

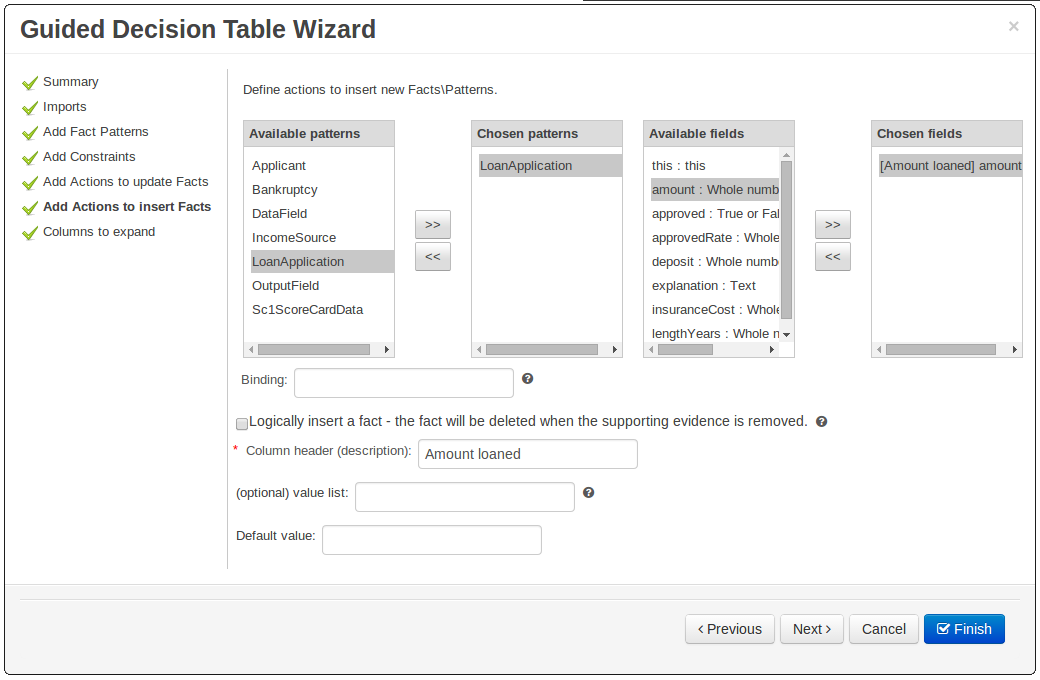

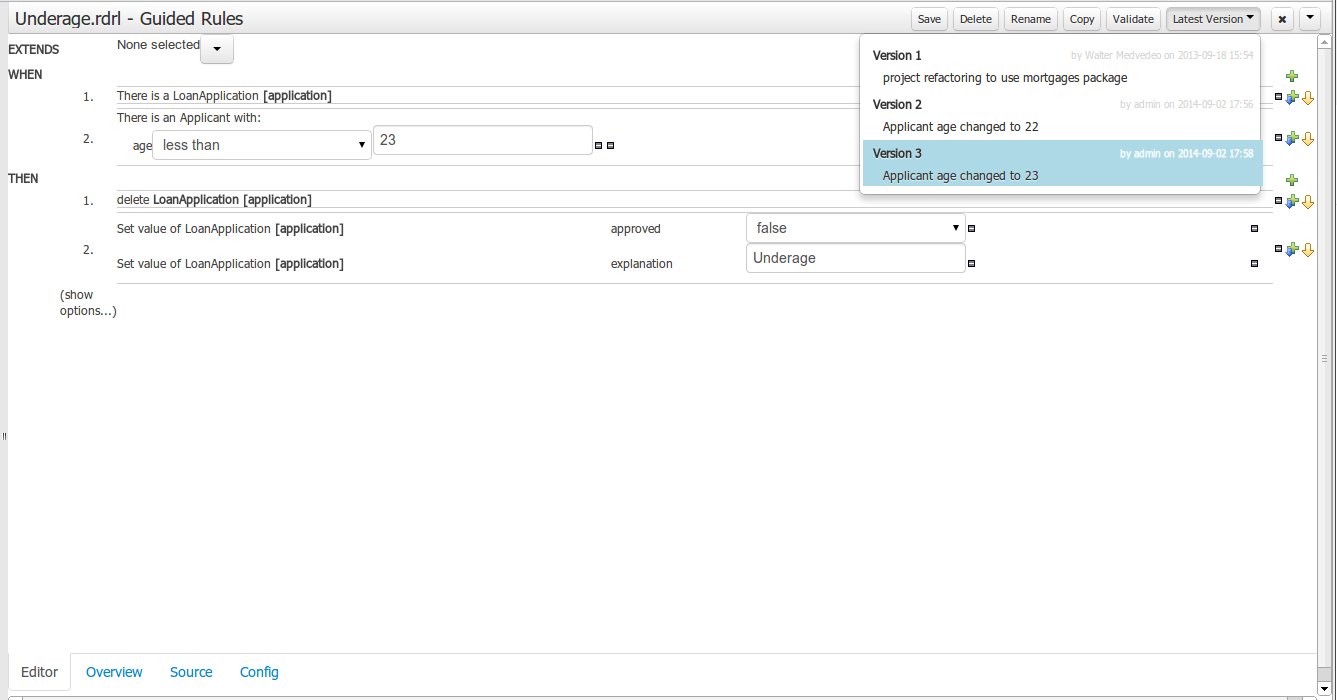

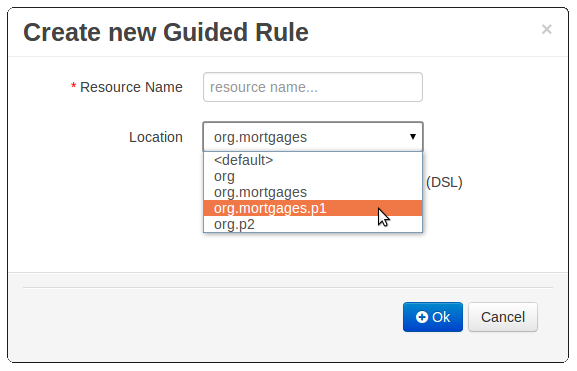

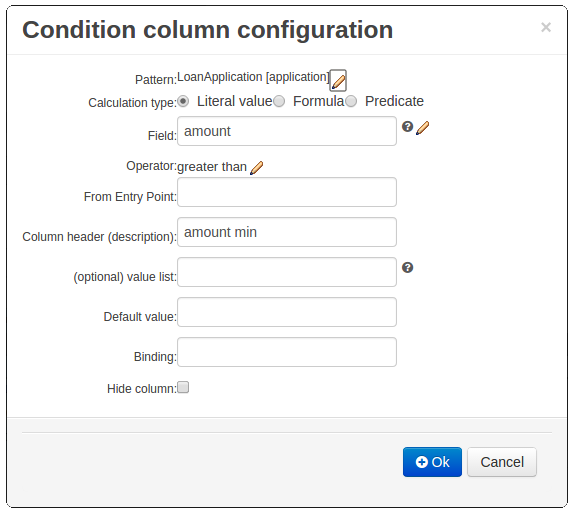

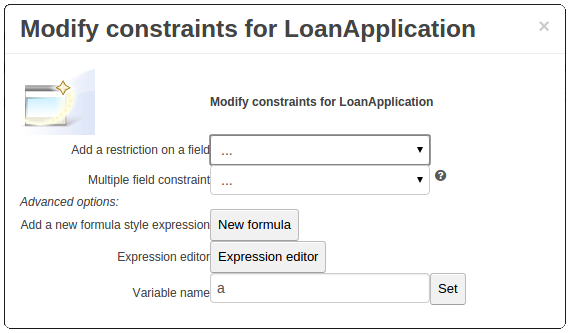

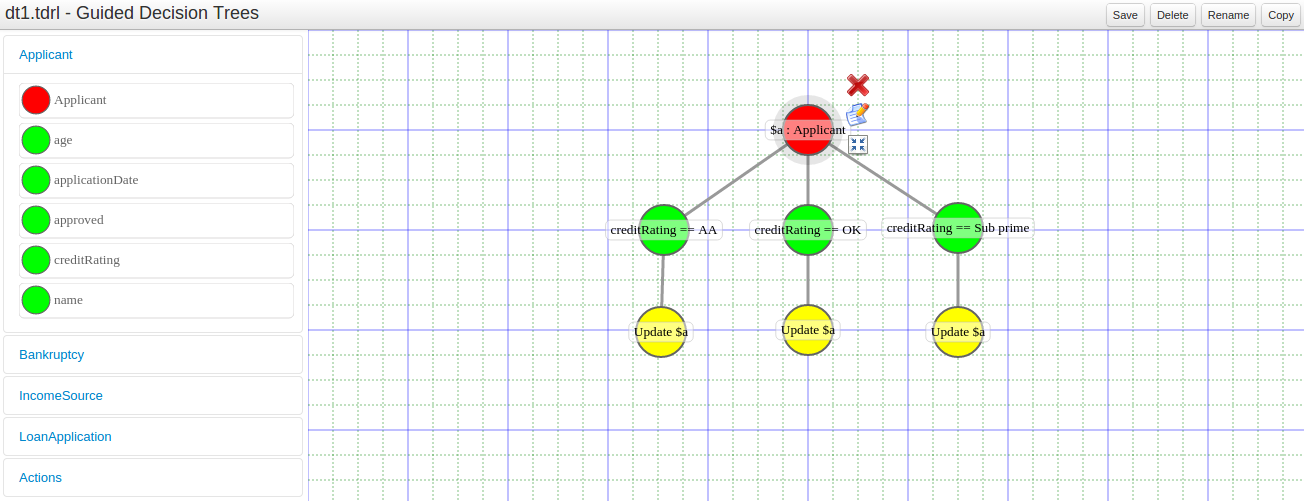

- Rule authoring allows you to specify different types of business rules (decision tables, guided rules, etc.) for combination with your processes.

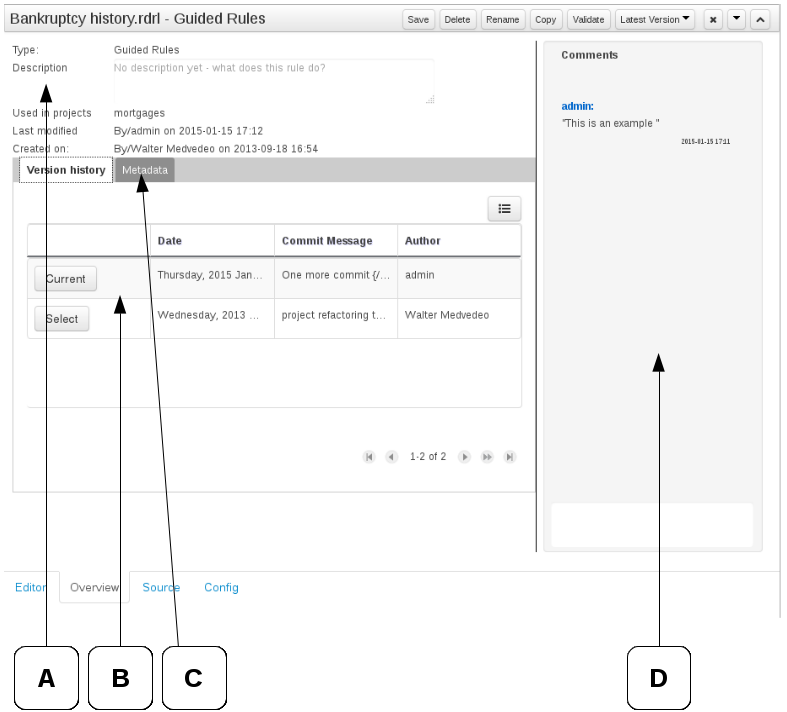

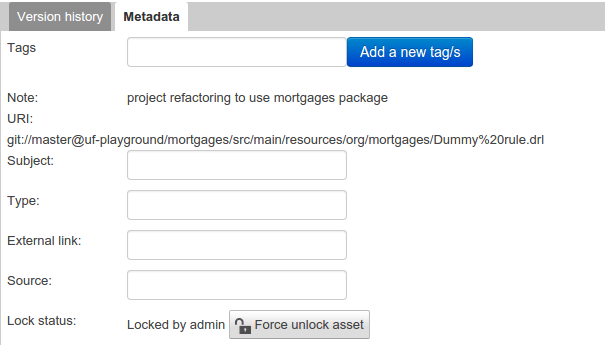

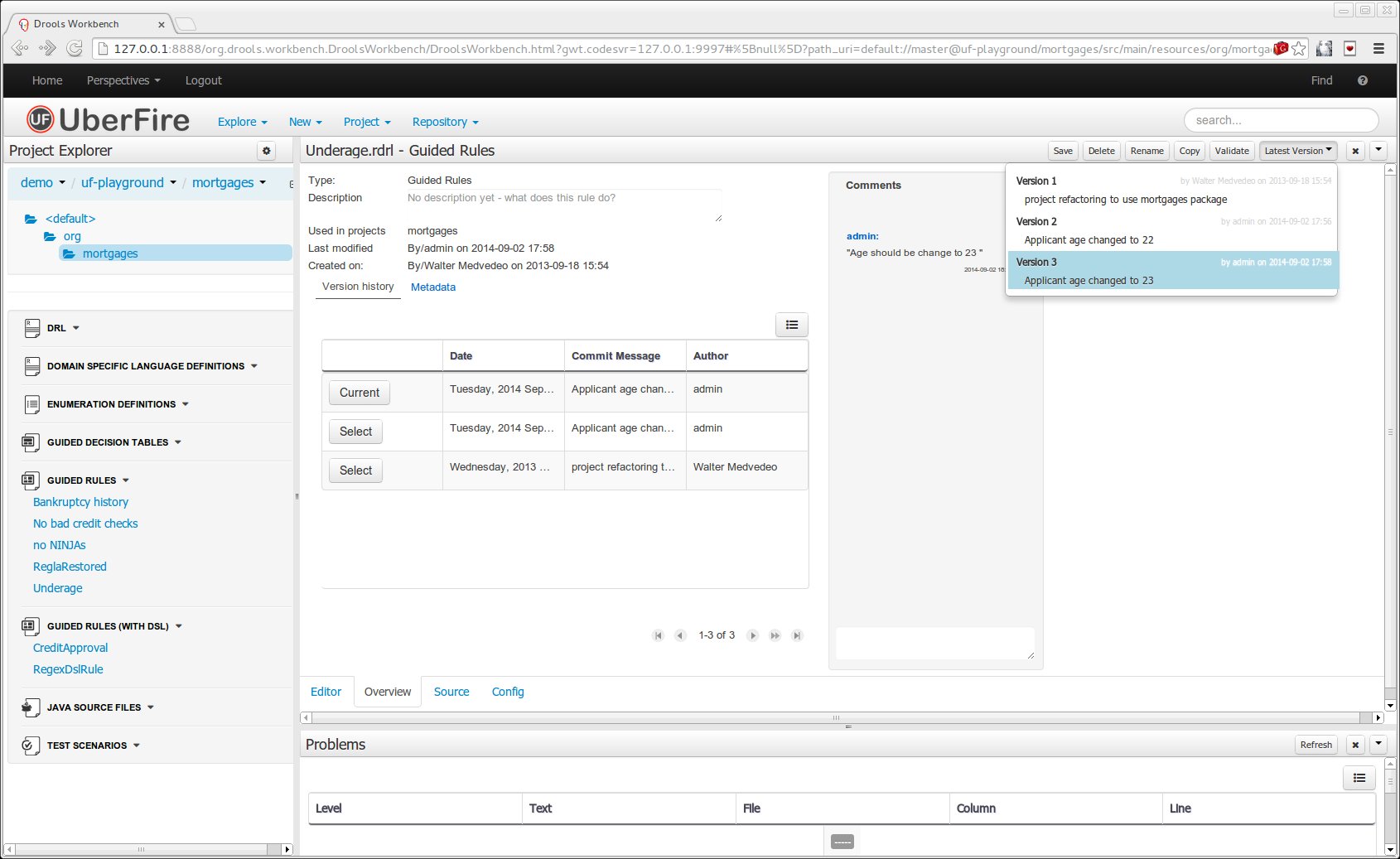

- All assets are stored and managed on the Guvnor repository (exposed through Git) and can be managed (versioning), built and deployed.

- The web-based management console allows business users to manage their runtime (manage business processes like start new processes, inspect running instances, etc.), to manage their task list and to perform Business Activity Monitoring (BAM) and see reports.

- The Eclipse-based developer tools are an extension to the Eclipse IDE, targeted towards developers, and allows you to create business processes using drag and drop, test and debug your processes, etc.

Each of the components are described in more detail below.

The core jBPM engine is the heart of the project. It's a light-weight workflow engine that executes your business processes. It can be embedded as part of your application or deployed as a service (possibly on the cloud). Its most important features are the following:

- Solid, stable core engine for executing your process instances.

- Native support for the latest BPMN 2.0 specification for modeling and executing business processes.

- Strong focus on performance and scalability.

- Light-weight (can be deployed on almost any device that supports a simple Java Runtime Environment; does not require any web container at all).

- (Optional) pluggable persistence with a default JPA implementation.

- Pluggable transaction support with a default JTA implementation.

- Implemented as a generic process engine, so it can be extended to support new node types or other process languages.

- Listeners to be notified of various events.

- Ability to migrate running process instances to a new version of their process definition

The core engine can also be integrated with a few other (independent) core services:

- The human task service can be used to manage human tasks when human actors need to participate in the process. It is fully pluggable and the default implementation is based on the WS-HumanTask specification and manages the life cycle of the tasks, task lists, task forms, and some more advanced features like escalation, delegation, rule-based assignments, etc.

- The history log can store all information about the execution of all the processes in the engine. This is necessary if you need access to historic information as runtime persistence only stores the current state of all active process instances. The history log can be used to store all current and historic states of active and completed process instances. It can be used to query for any information related to the execution of process instances, for monitoring, analysis, etc.

The web-based designer allows you to model your business processes in a web-based environment. It is targeted towards business users and offers a graphical editor for viewing and editing your business processes (using drag and drop), similar to the Eclipse plugin. It supports round-tripping between the Eclipse editor and the web-based designer. It also supports simulation of processes.

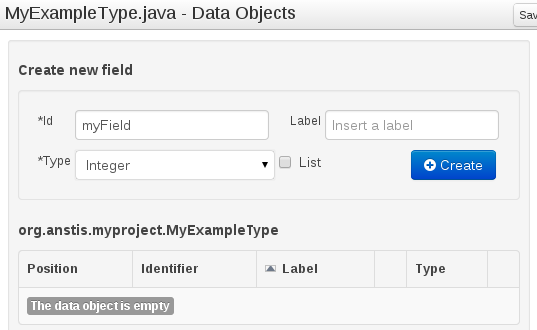

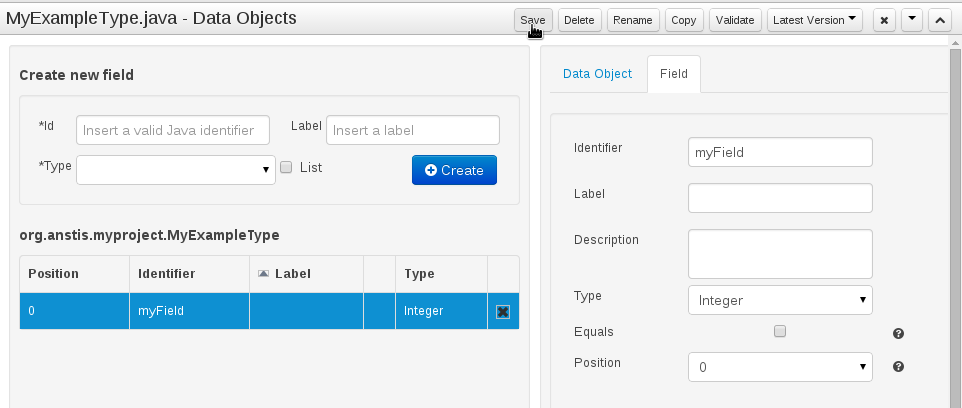

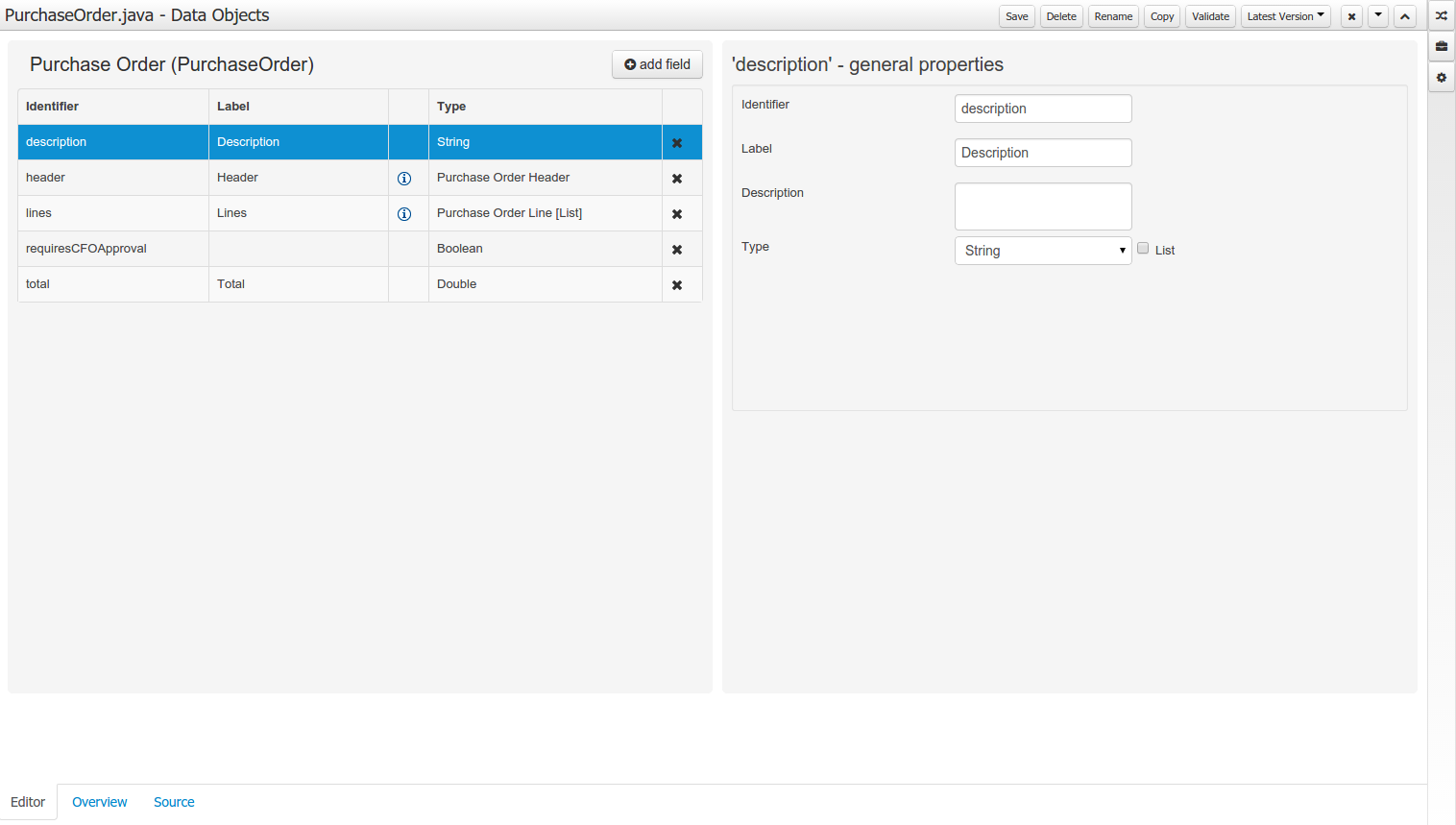

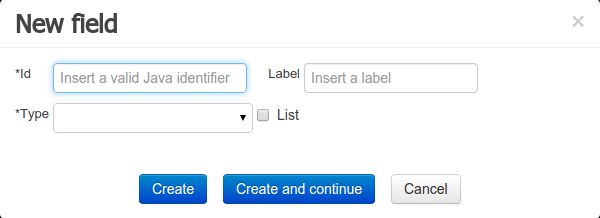

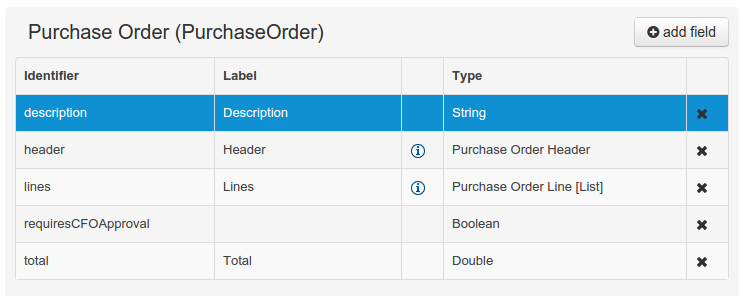

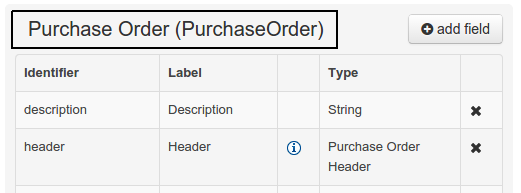

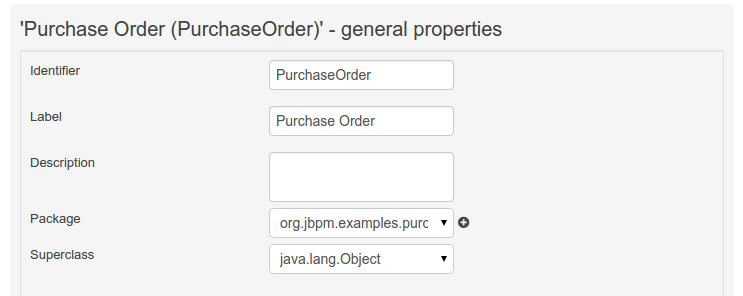

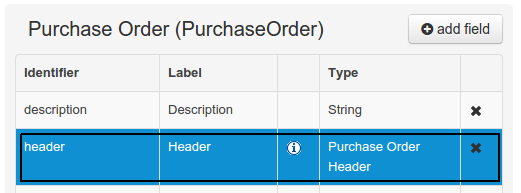

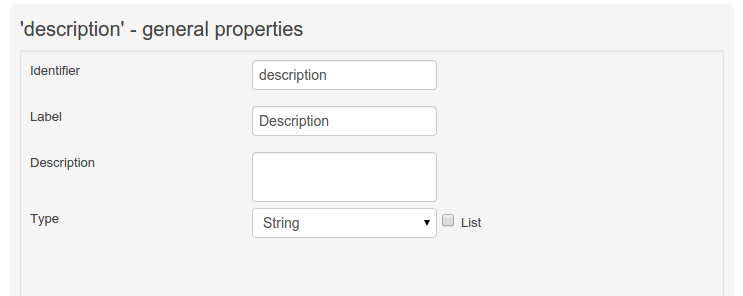

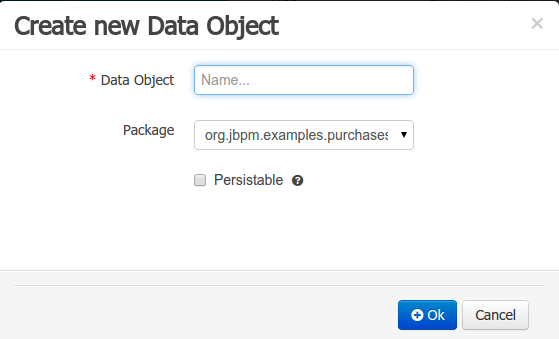

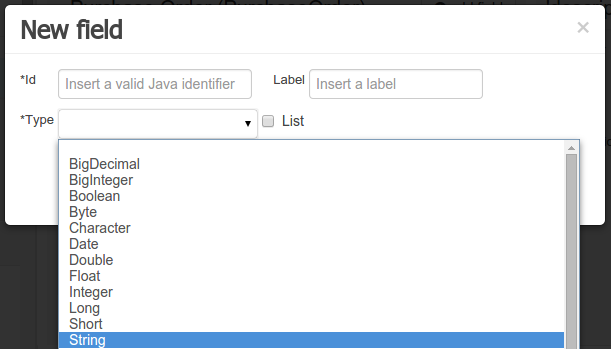

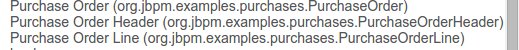

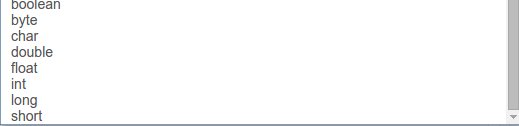

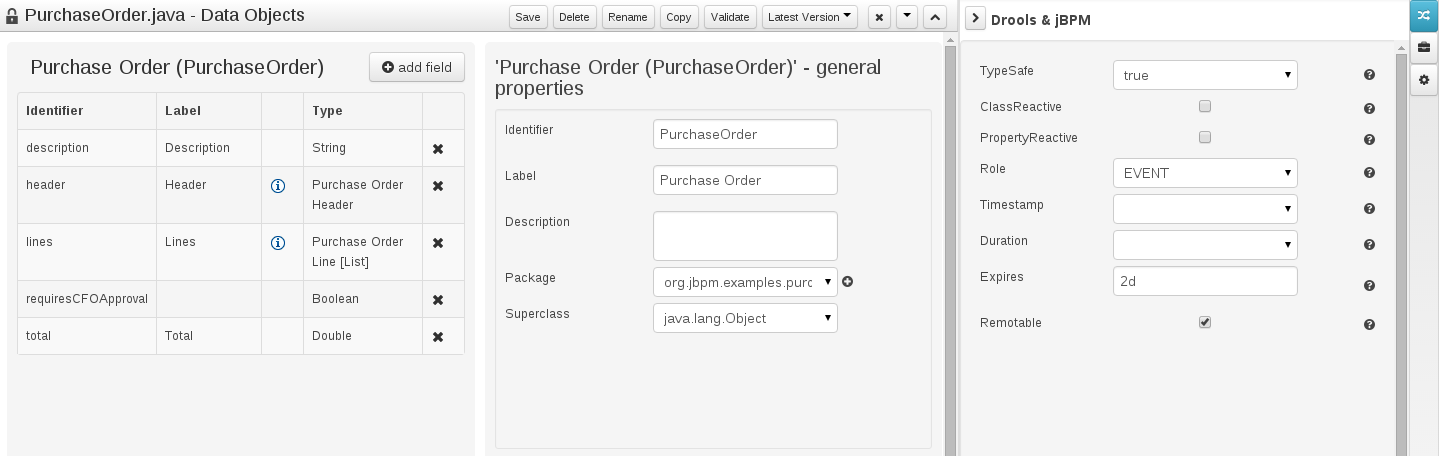

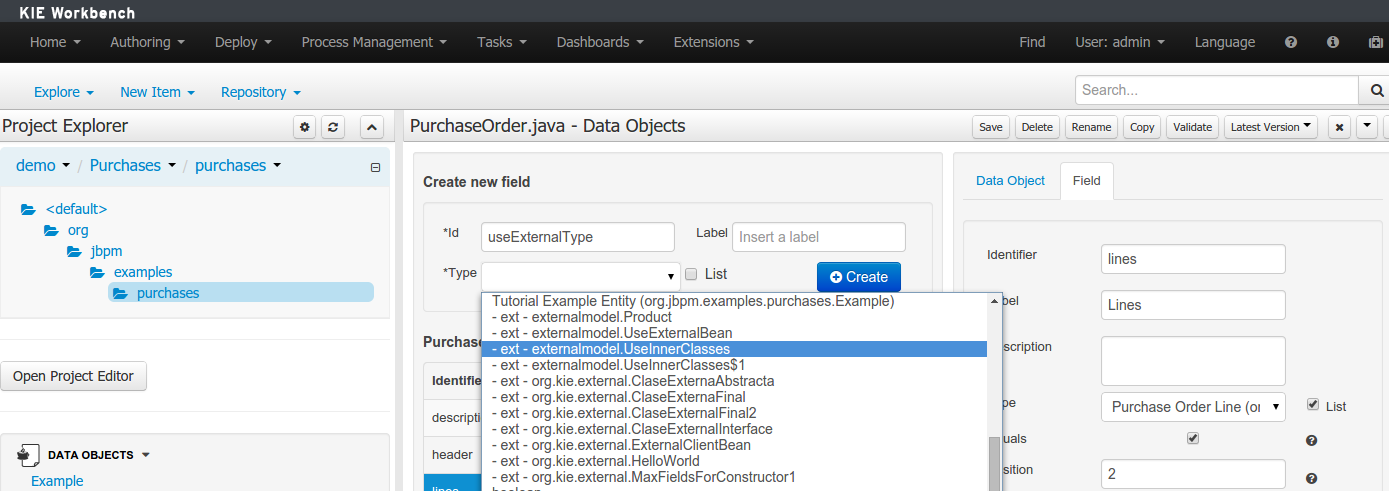

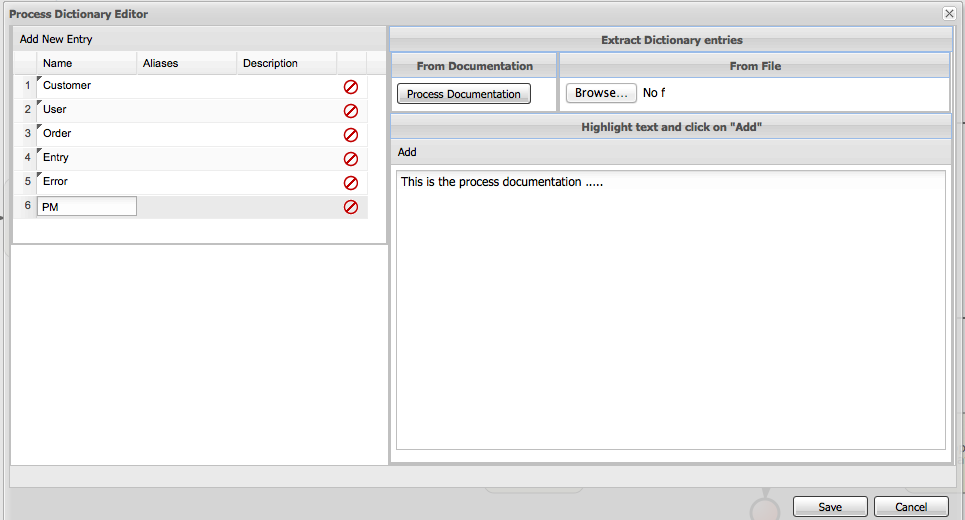

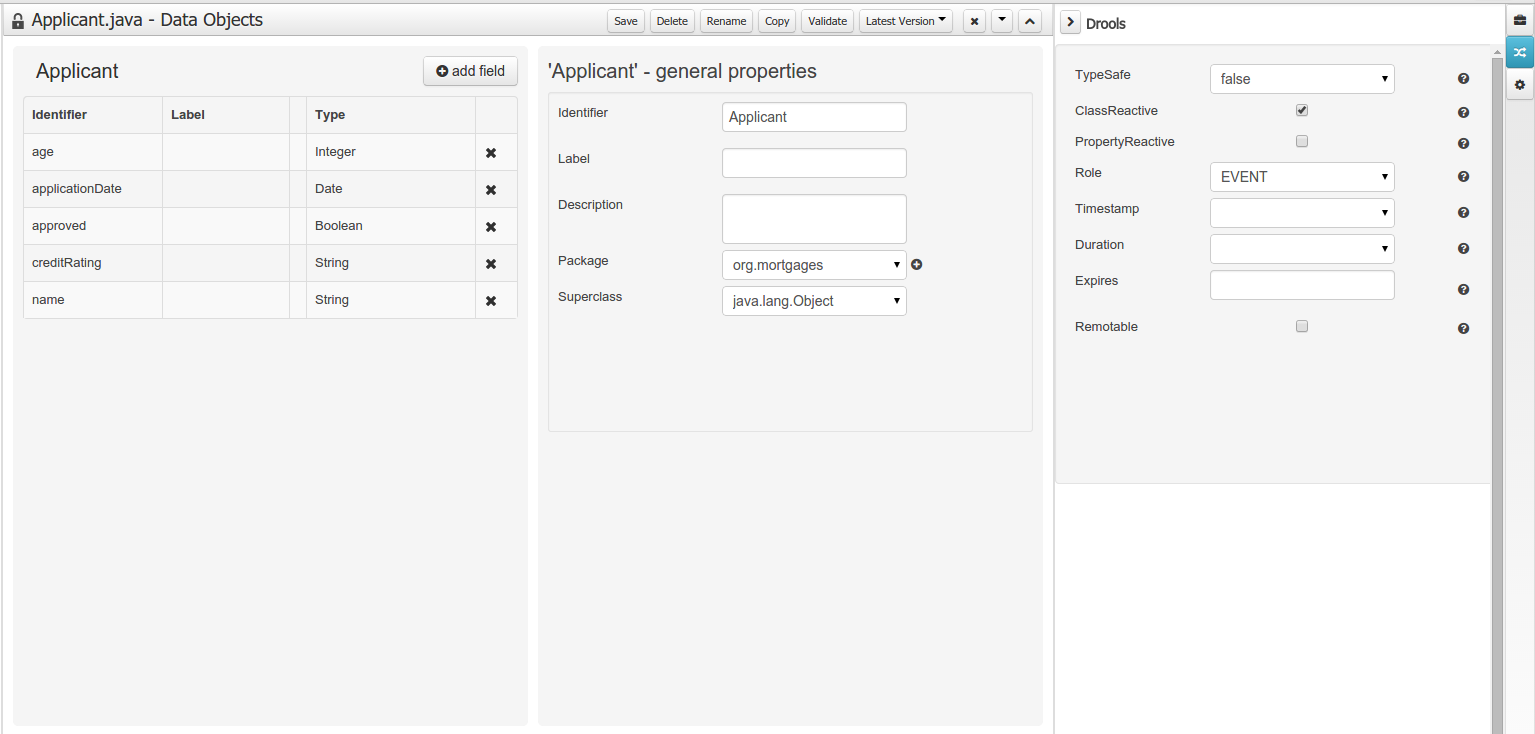

Processes almost always have some kind of data to work with. The data modeler allows non-technical users to view, edit or create these data models.

Typically, a business process analyst or data analyst will capture the requirements for a process or application and turn these into a formal set of interrelated data structures. The new Data Modeler tool provides an easy, straightforward and visual aid for building both logical and physical data models, without the need for advanced development skills or explicit coding. The data modelers is transparently integrate into the workbench. Its main goals are to make data models into first class citizens in the process improvement cycle and allow for full process automation through the integrated use of data structures (and the forms that will be used to interact with them).

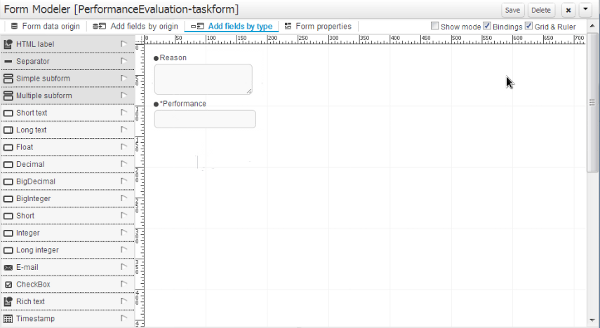

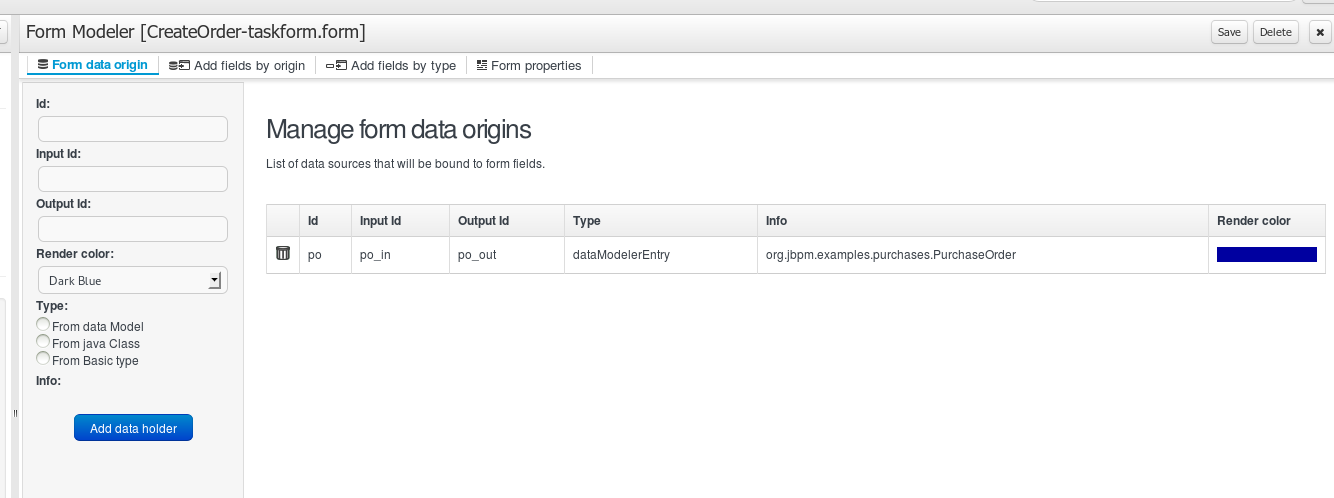

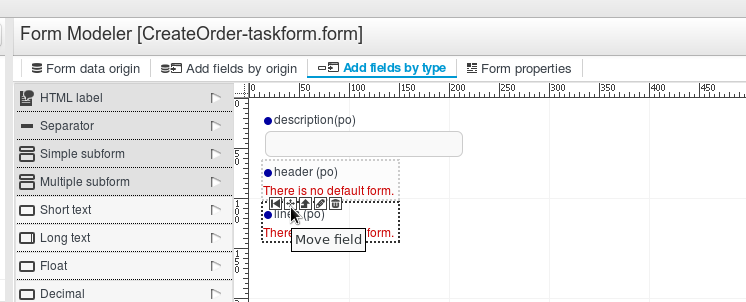

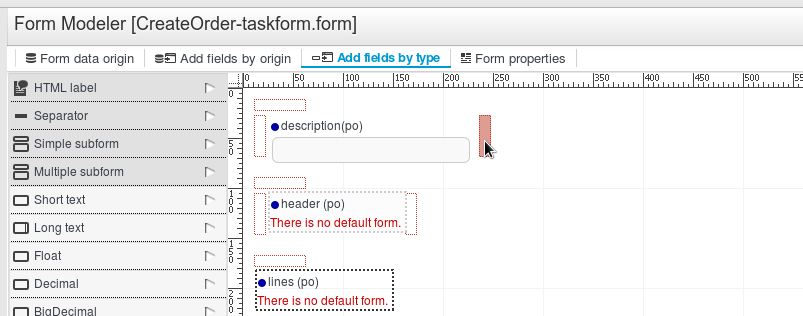

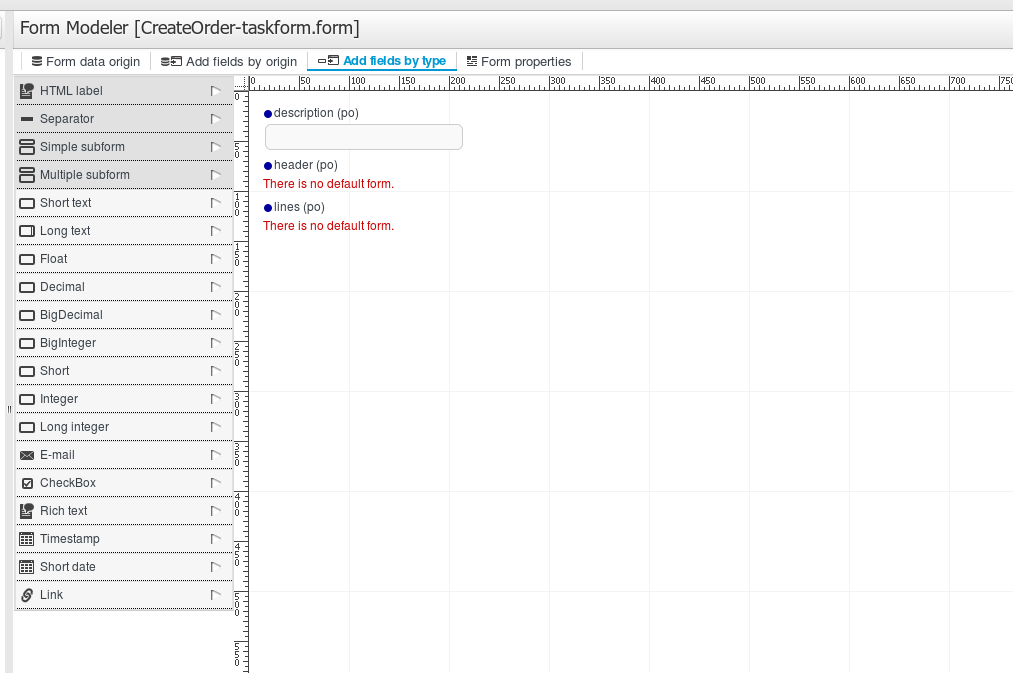

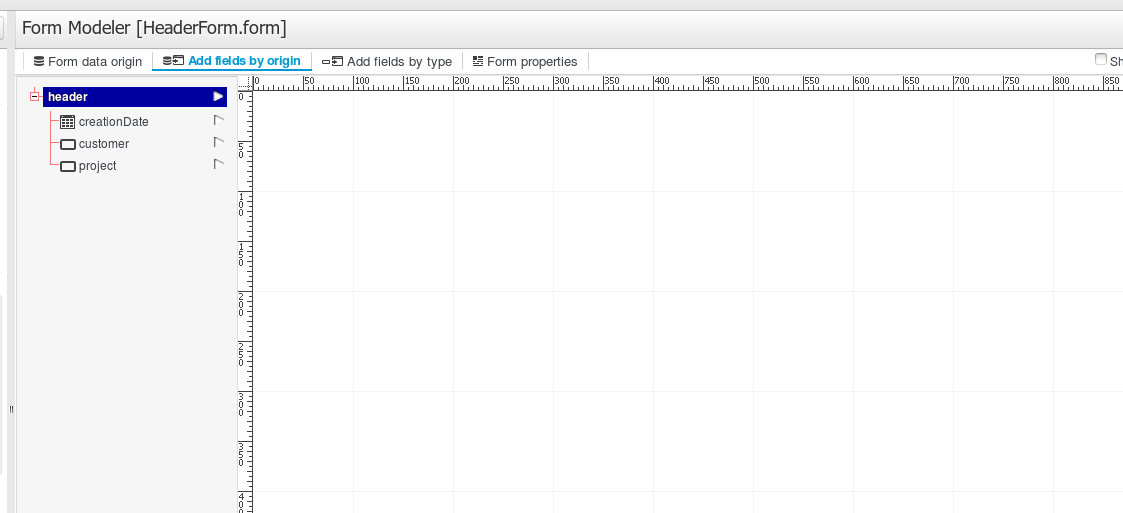

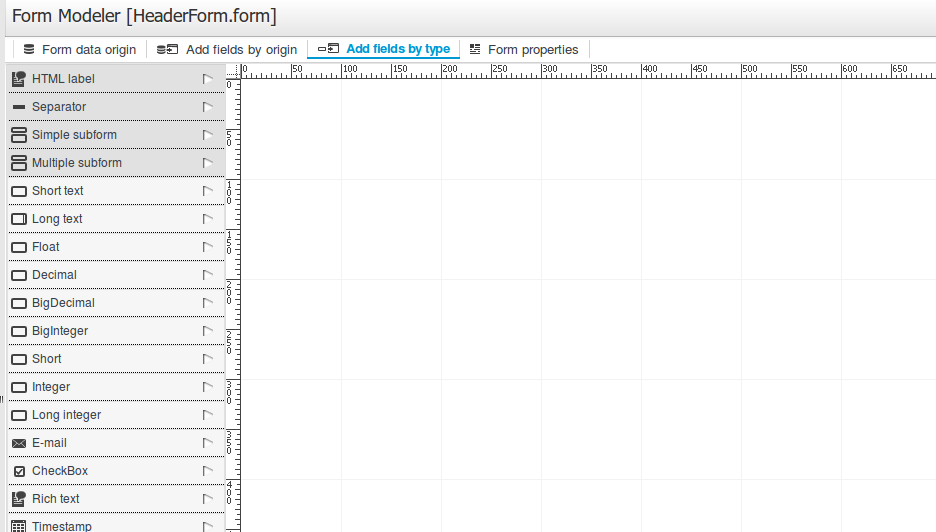

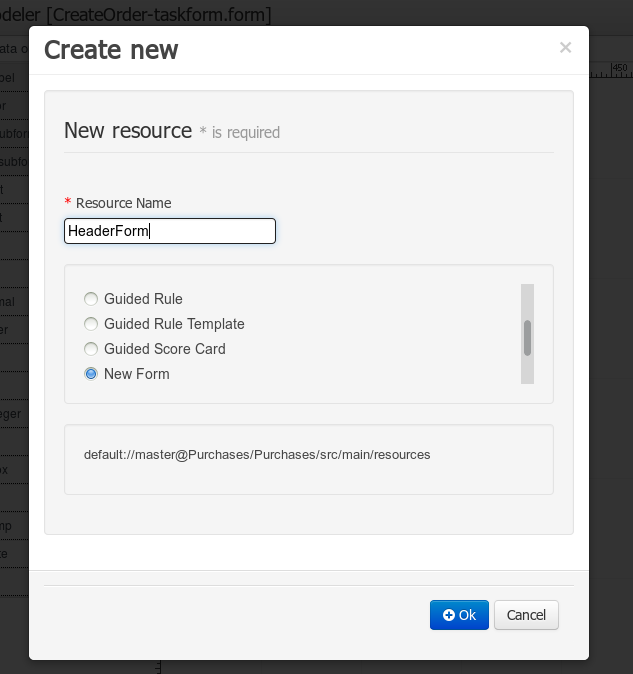

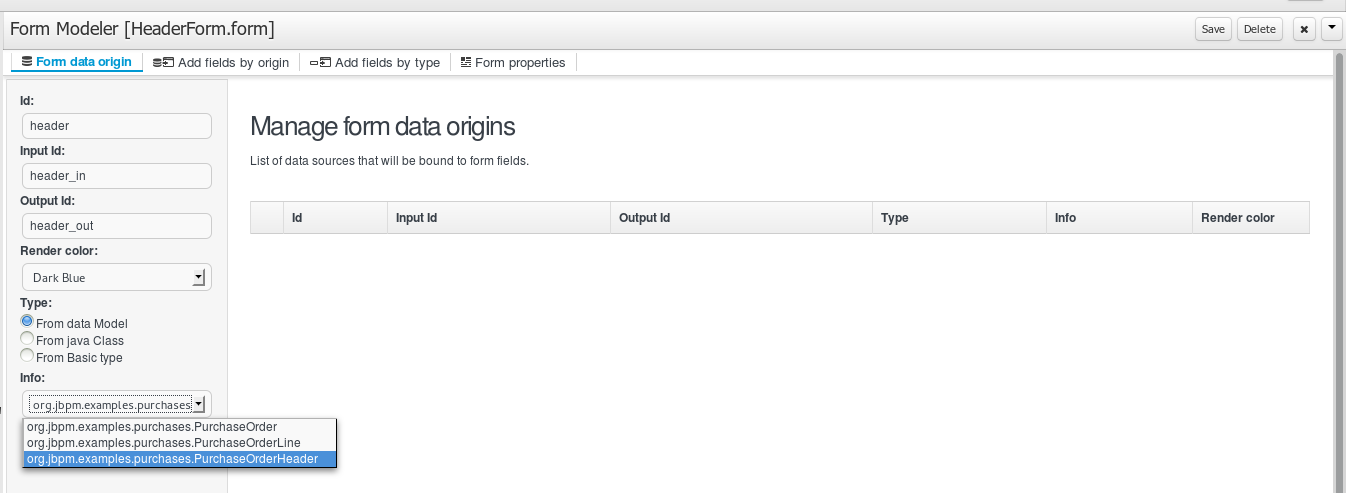

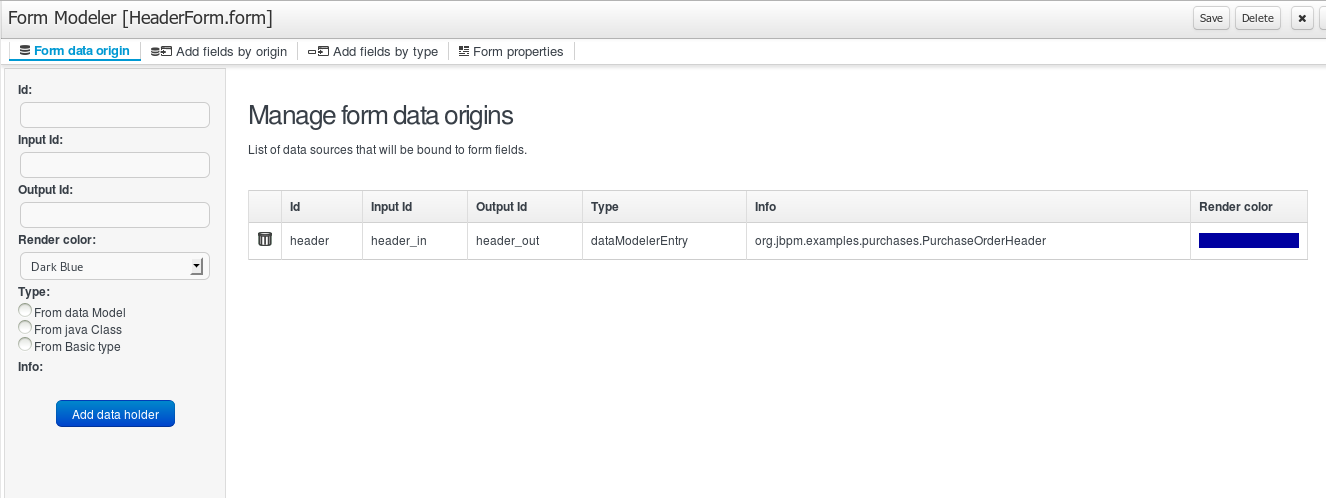

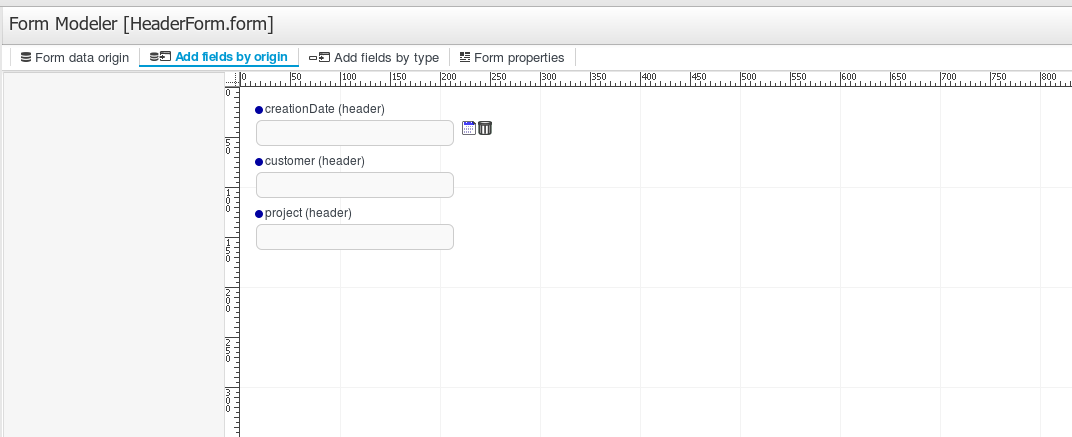

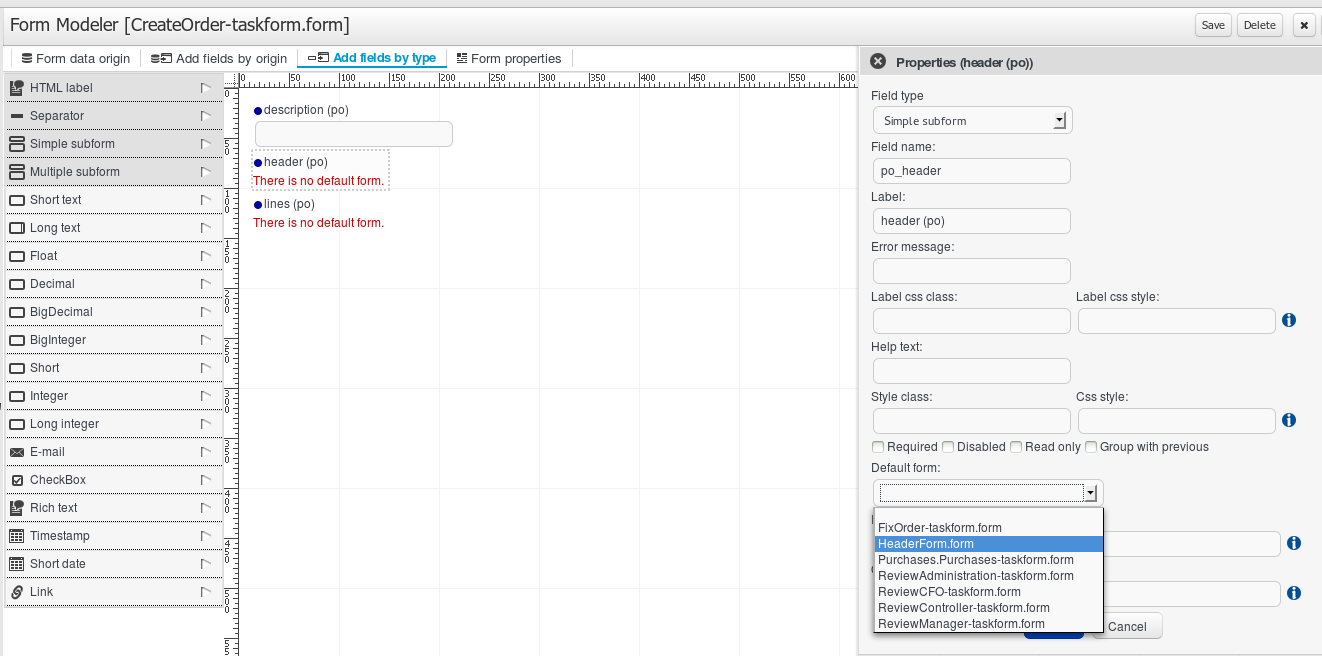

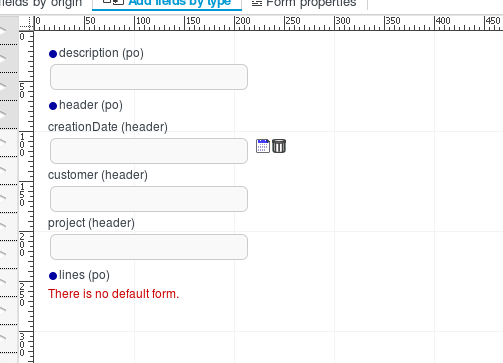

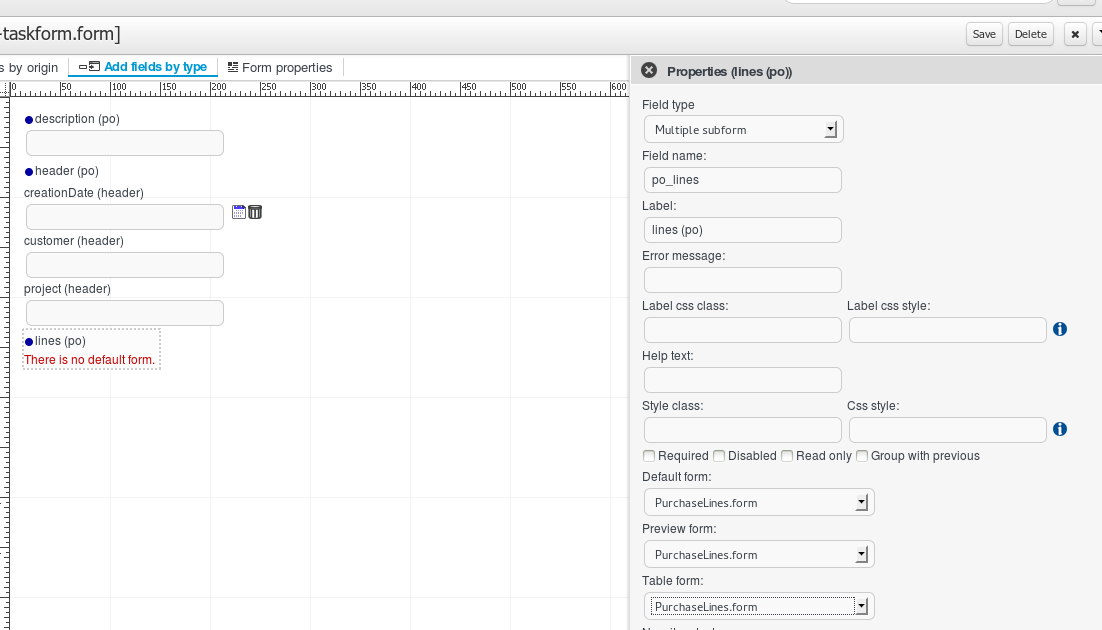

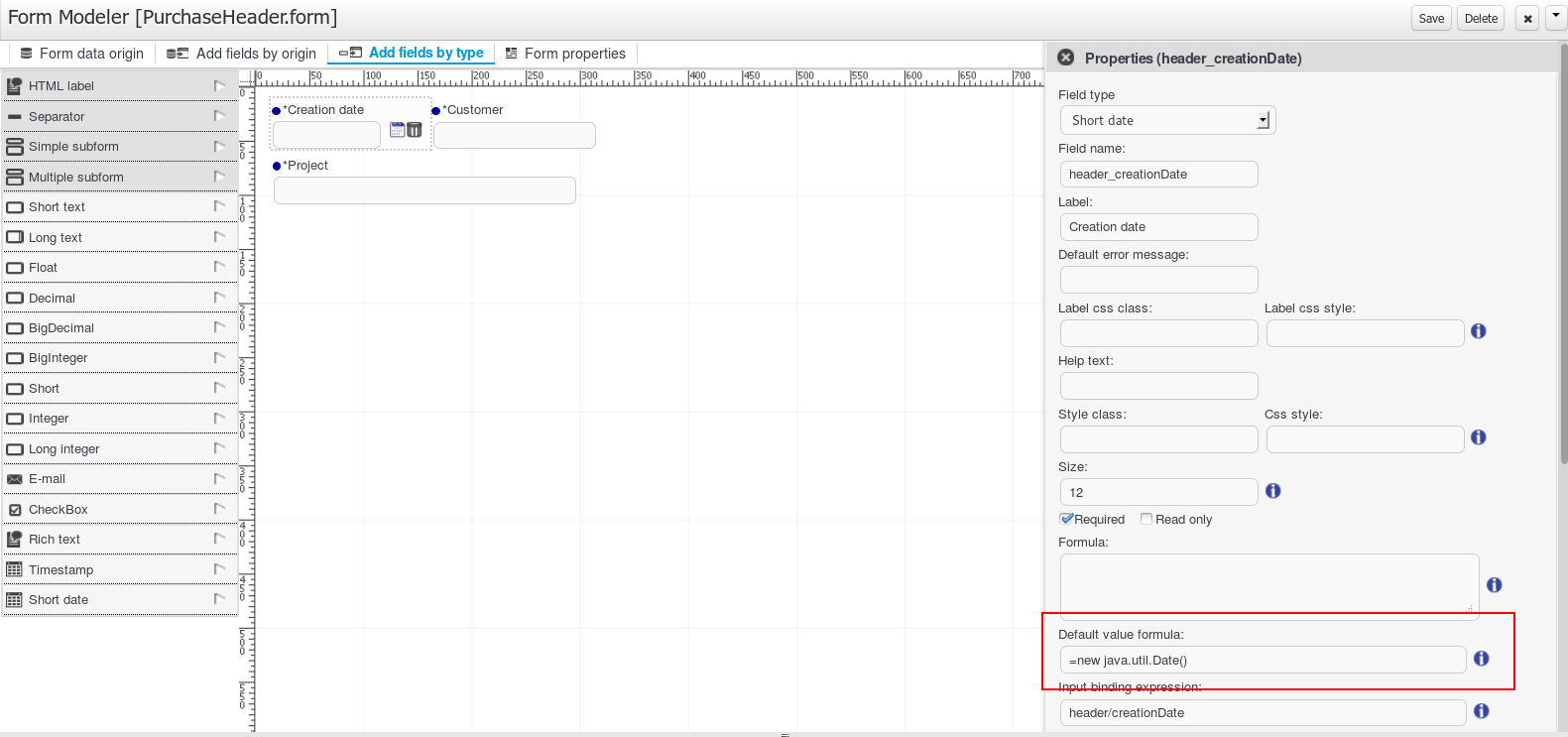

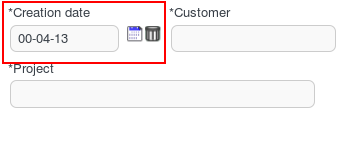

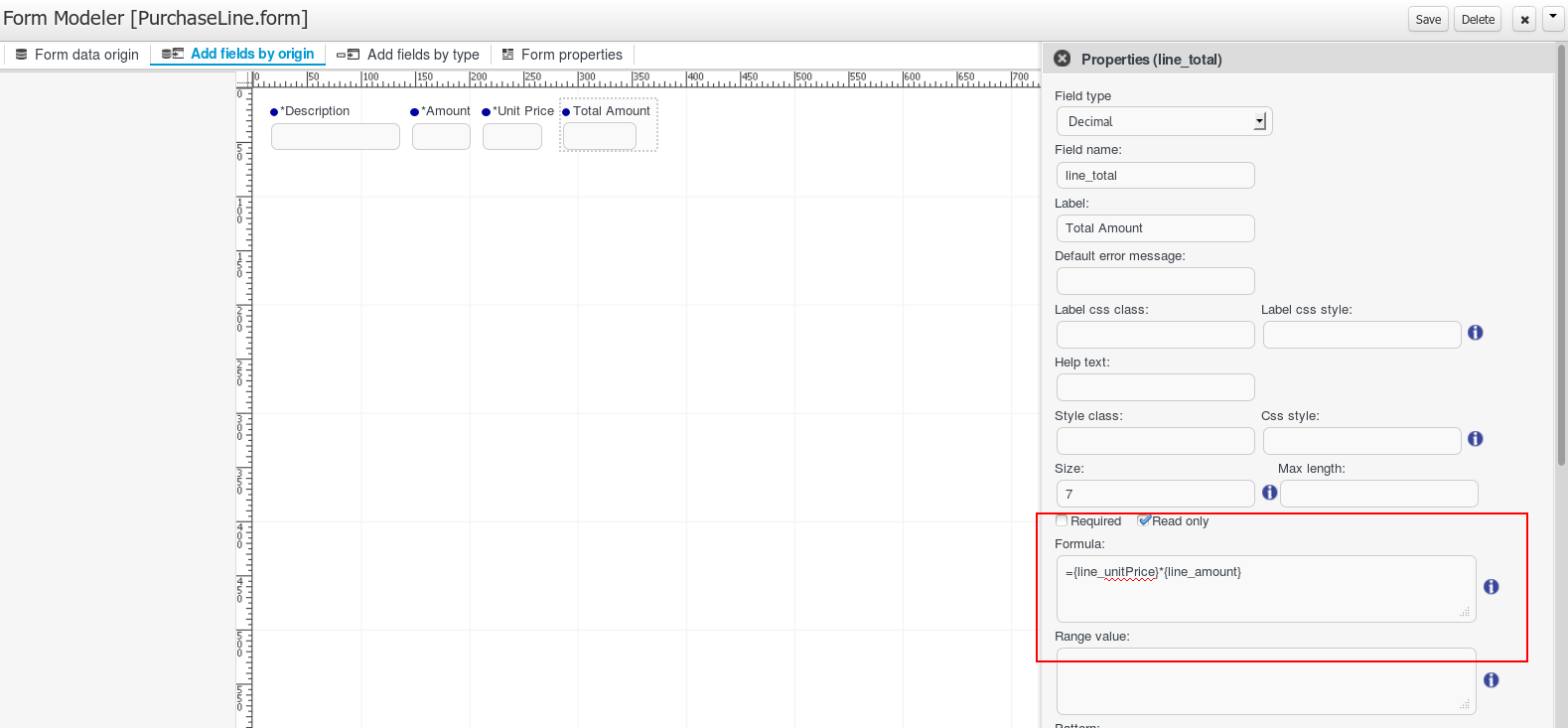

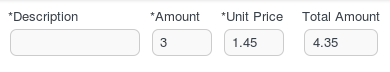

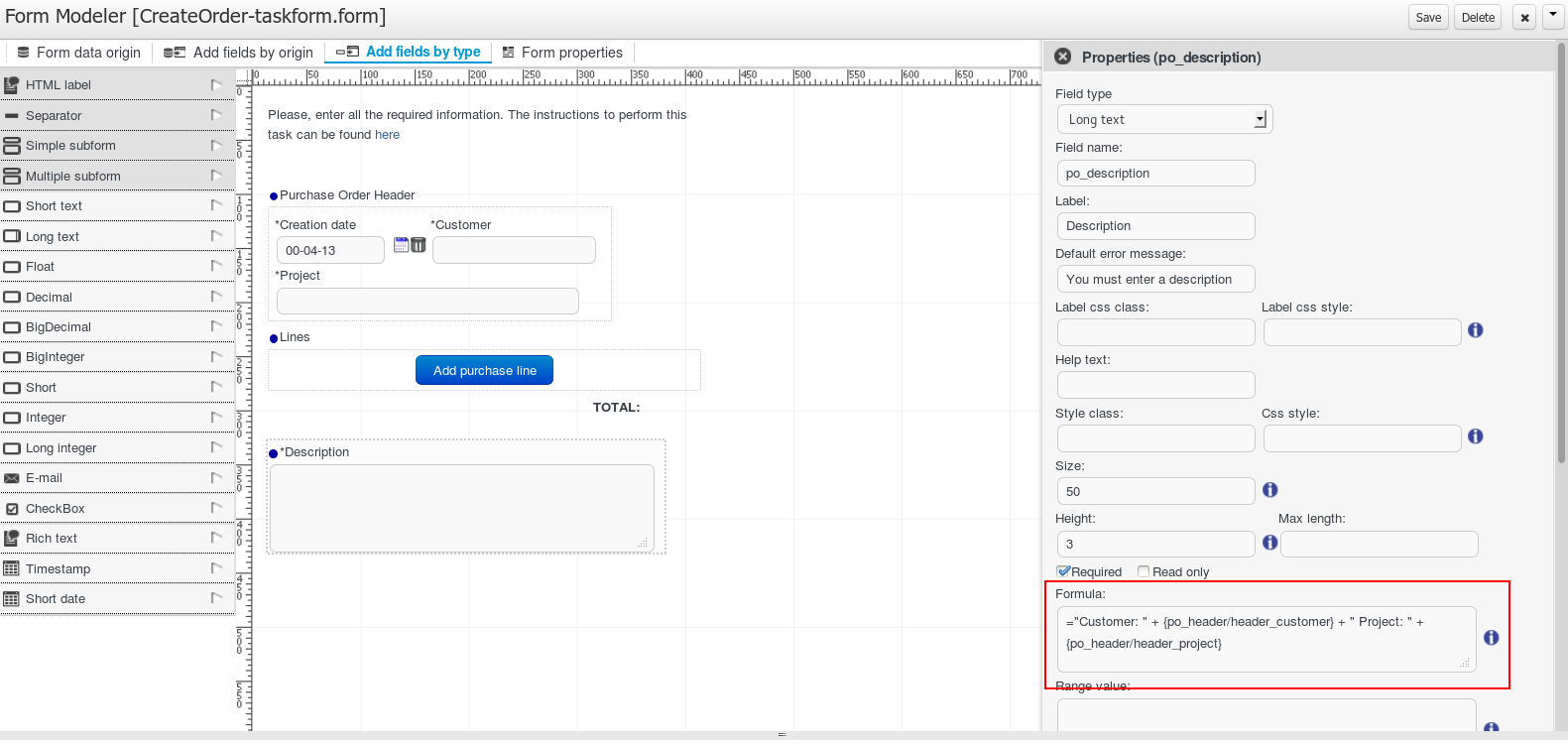

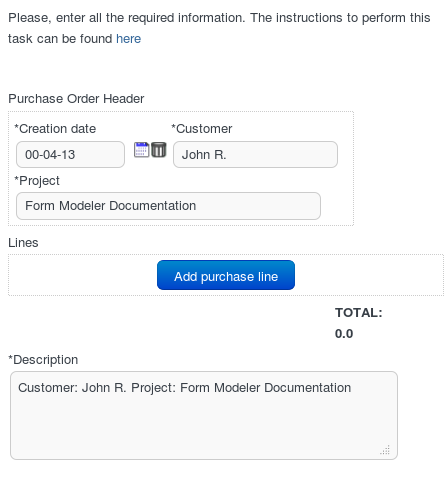

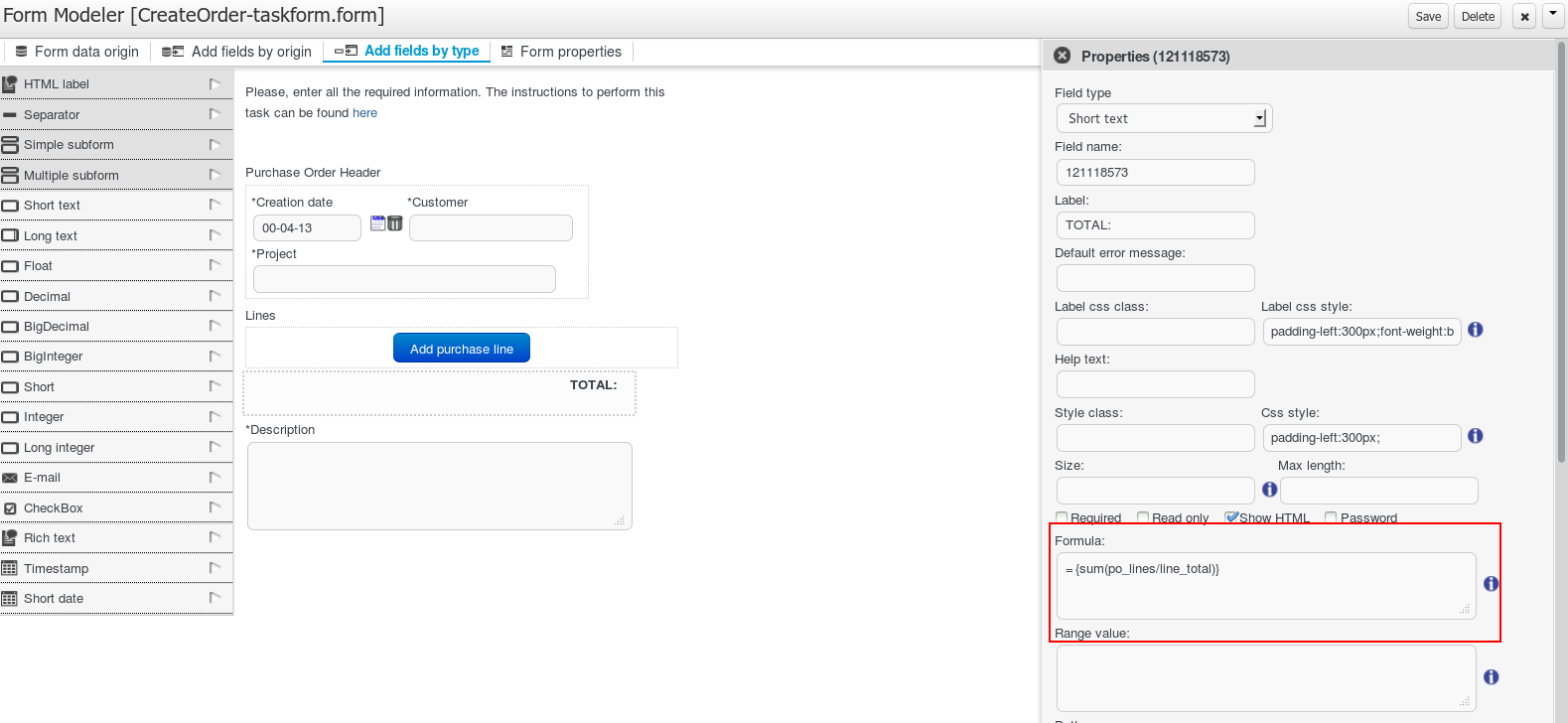

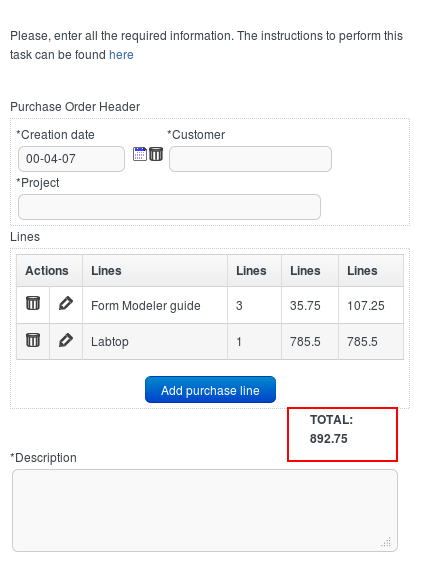

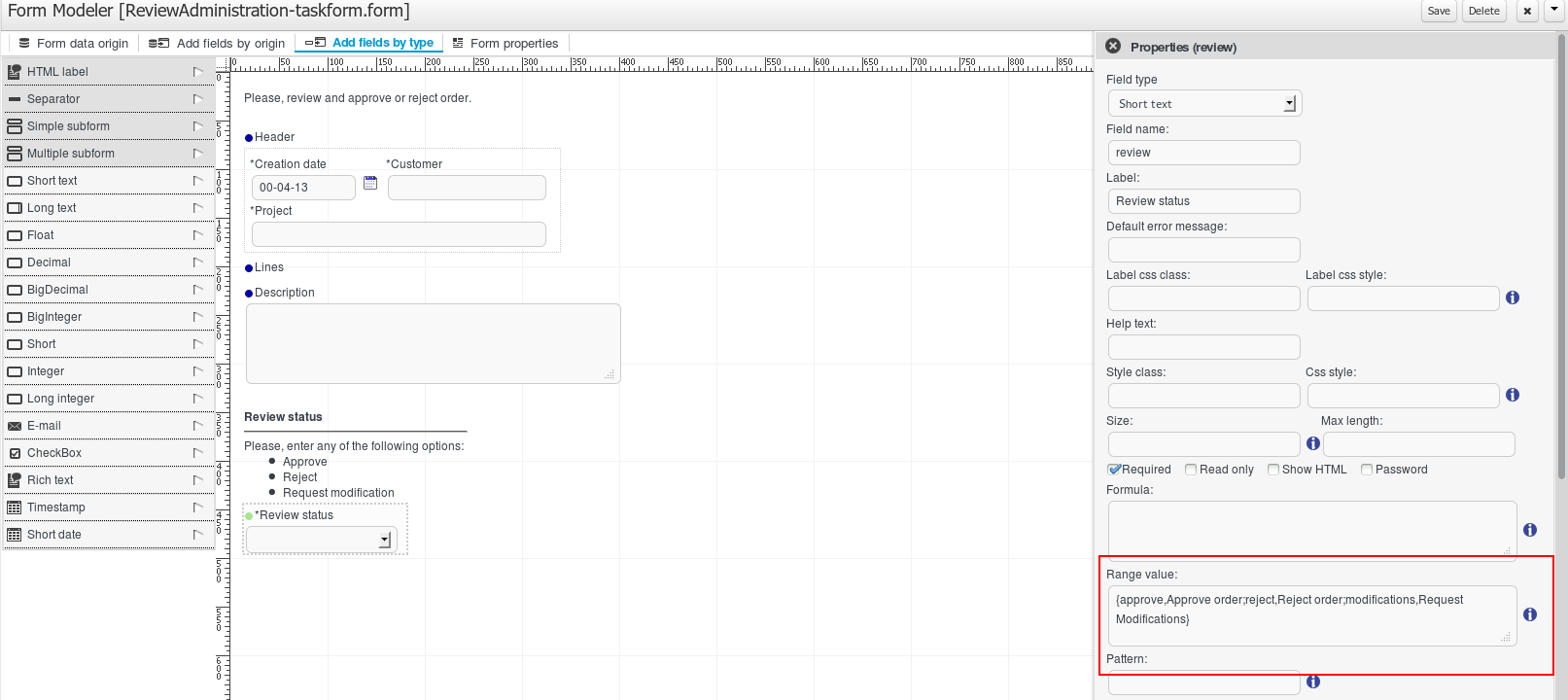

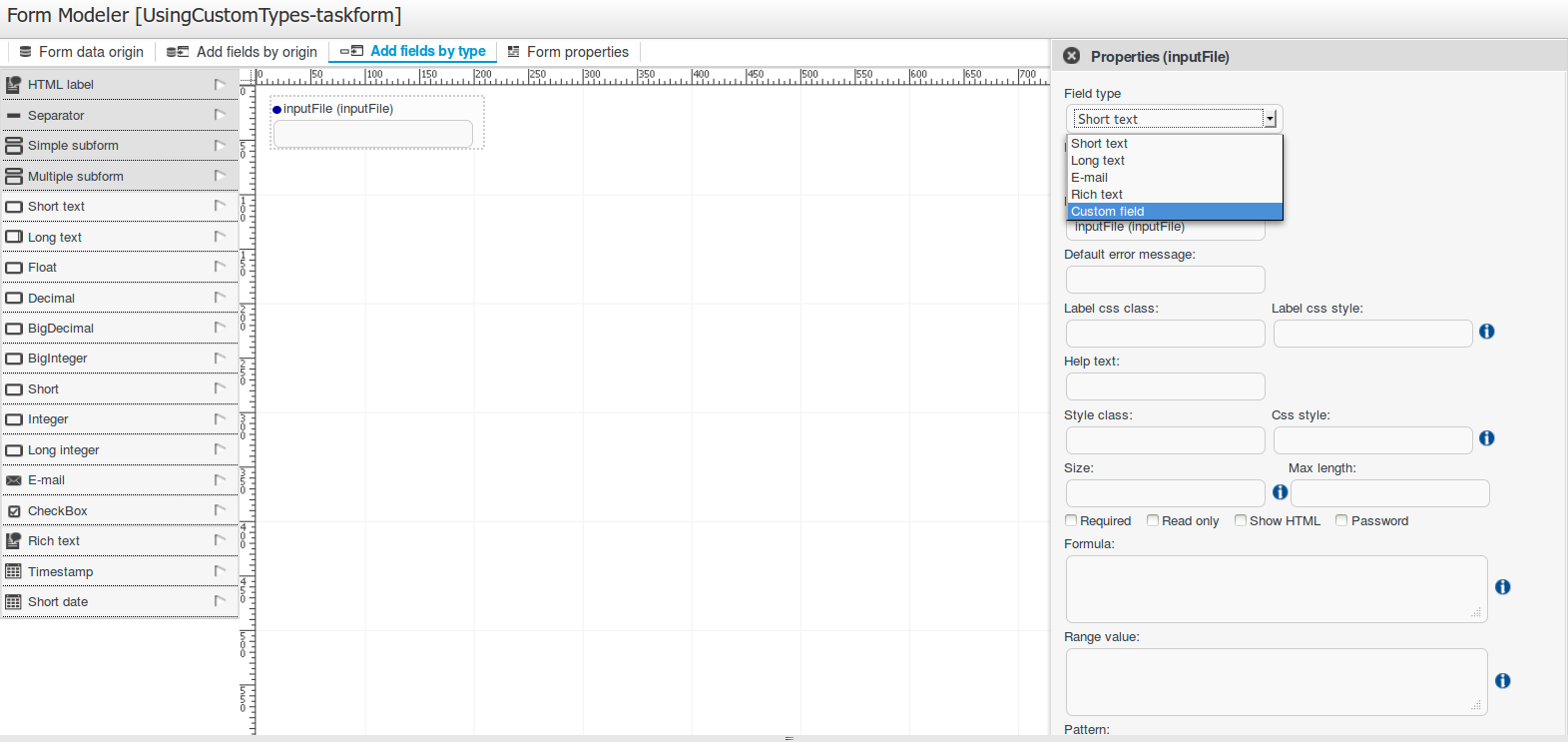

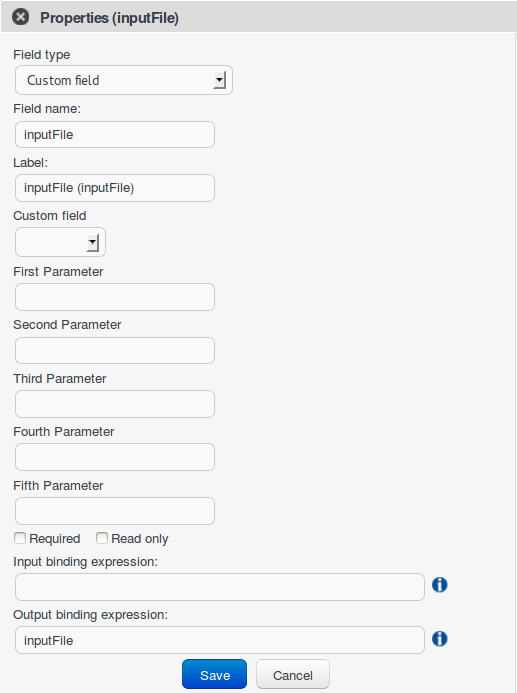

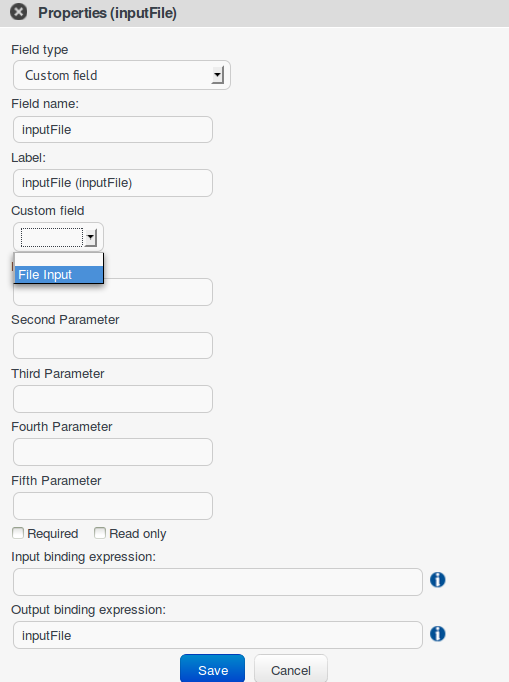

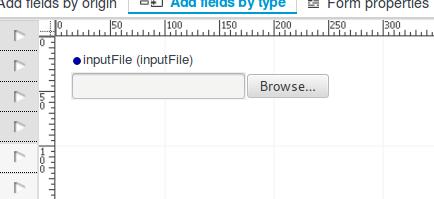

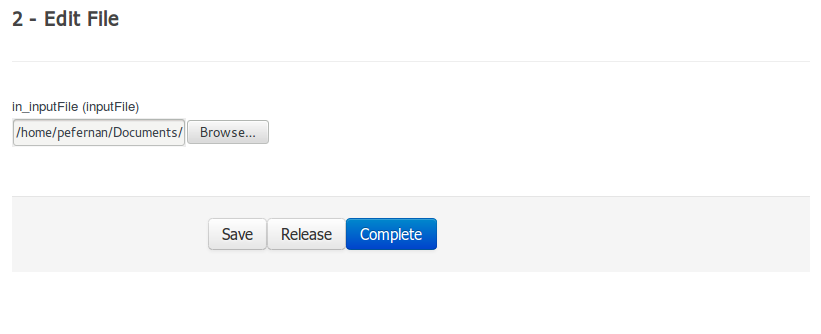

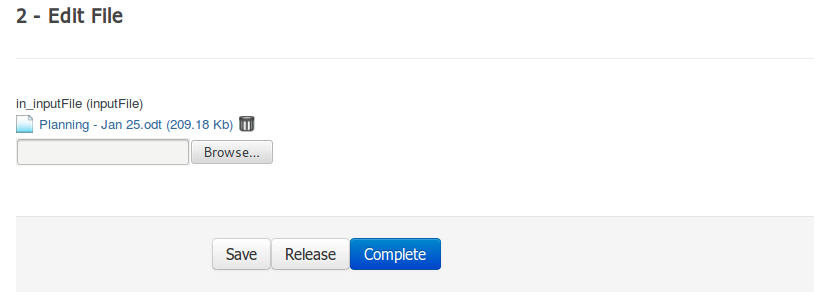

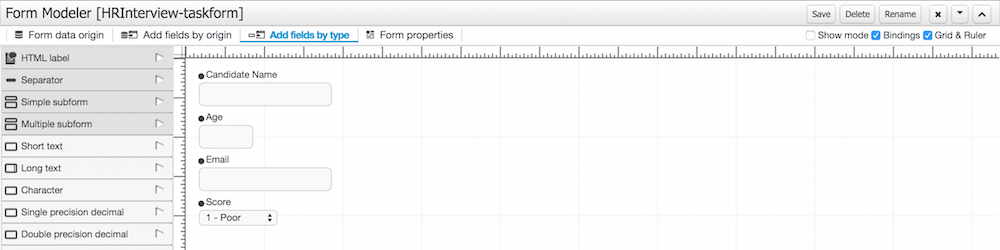

The jBPM Form Modeler is a form engine and editor that enables users to create forms to capture and display information during process or task execution, without needing any coding or template markup skills.

It provides a WYSIWYG environment to model forms that it's easy to use for less technical users.

Key features:

Form Modeling WYSIWYG UI for forms

Form autogeneration from data model / Java objects

Data binding for Java objects

Formula and expressions

Customized forms layouts

Forms embedding

The form modeler's user interfaces is aimed both at process analyst and developers for building and testing forms.

Developers or advanced used will also have some advanced features to customize form behavior and look&feel.

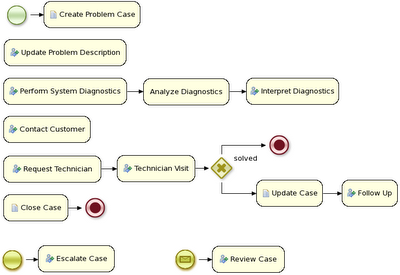

Business processes can be managed through a web-based management console. It is targeted towards business users and its main features are the following:

- Process instance management: the ability to start new process instances, get a list of running process instances, visually inspect the state of a specific process instances.

- Human task management: being able to get a list of all your current tasks (either assigned to you or that you might be able to claim), and completing tasks on your task list (using customizable task forms).

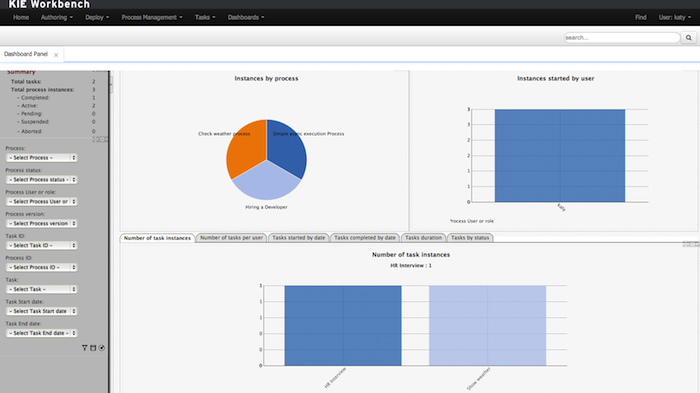

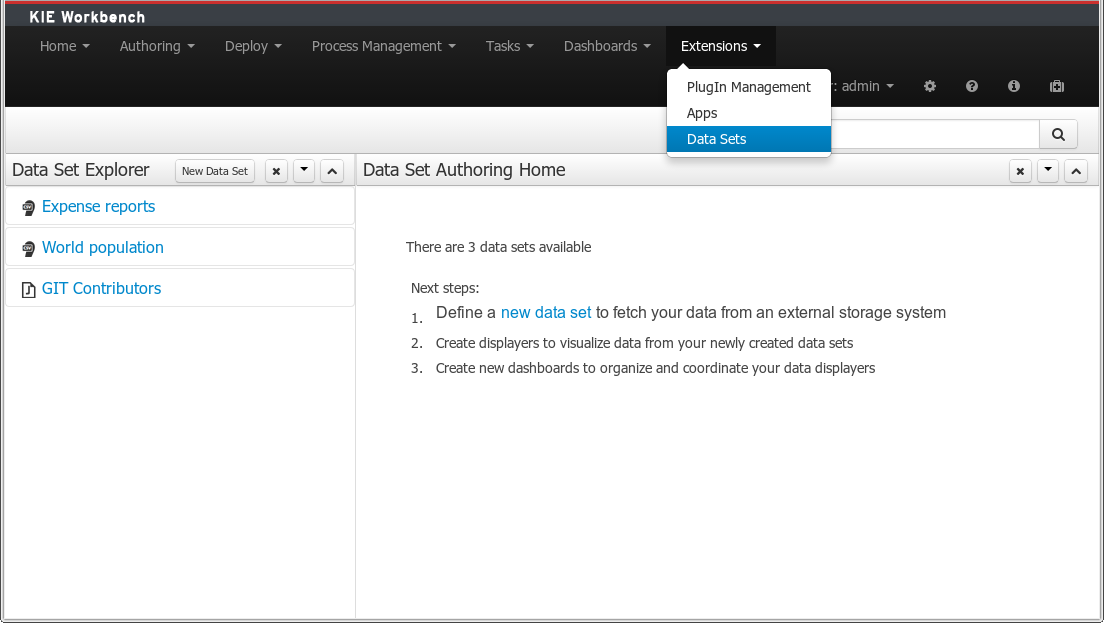

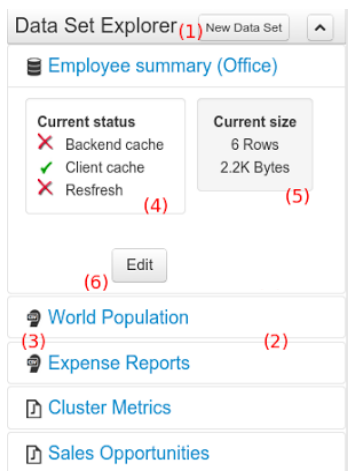

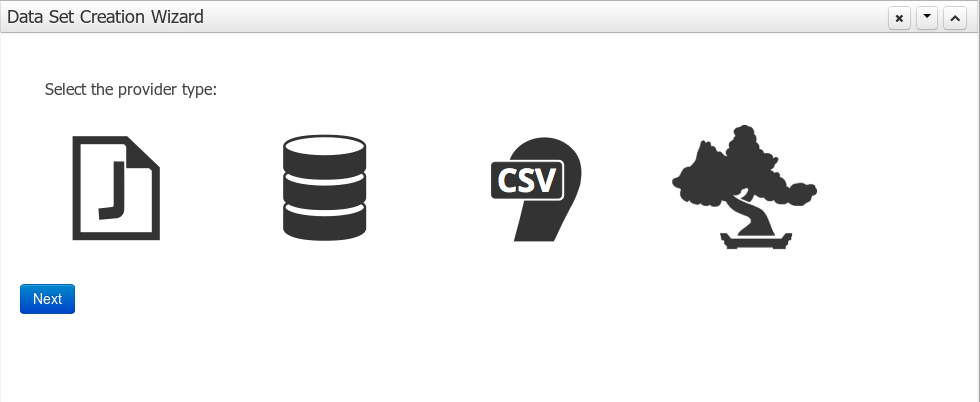

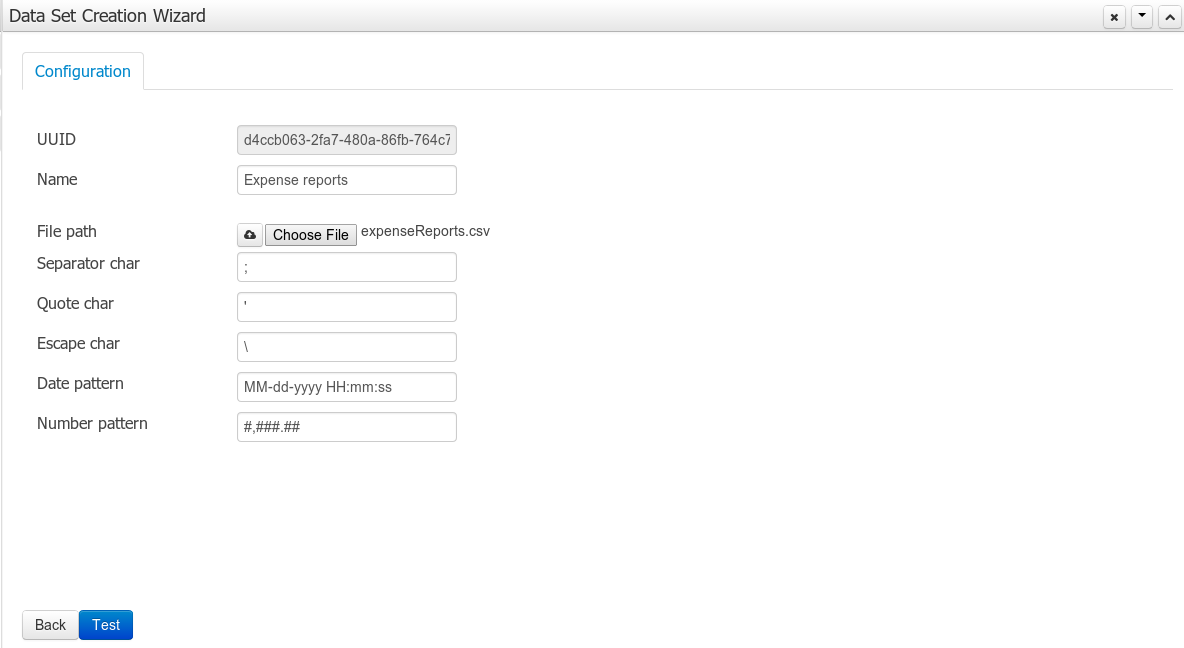

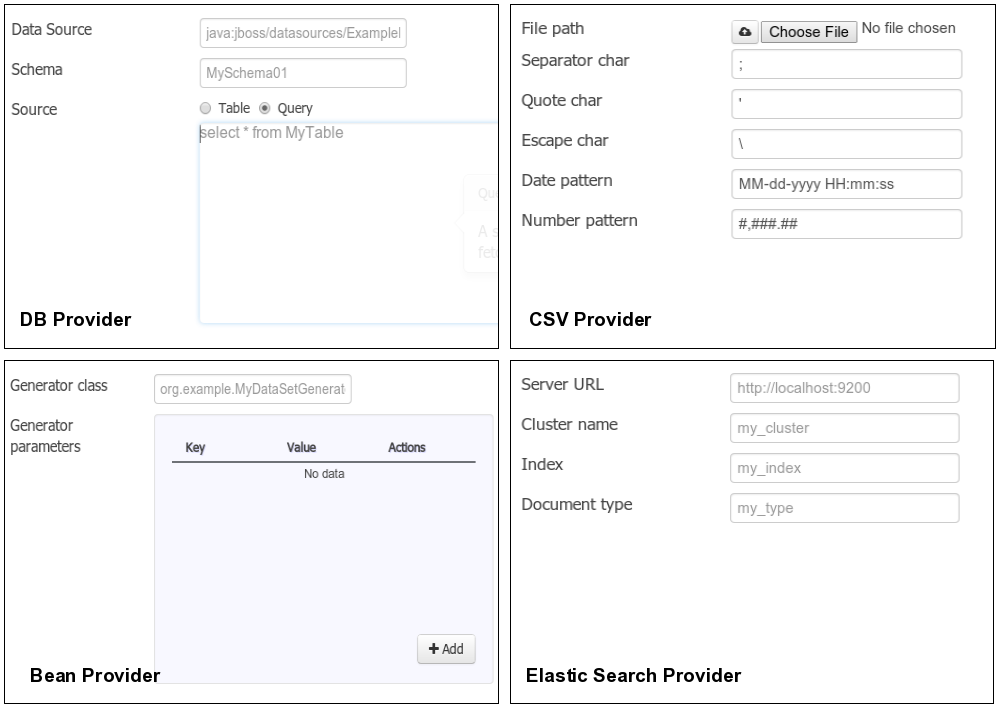

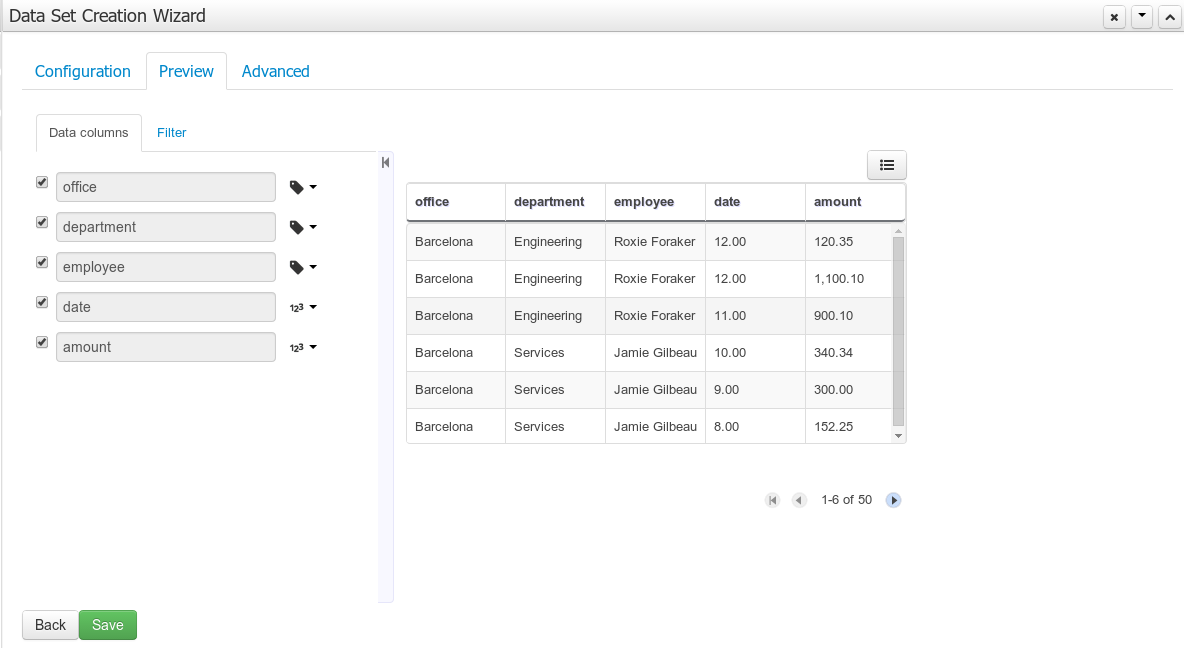

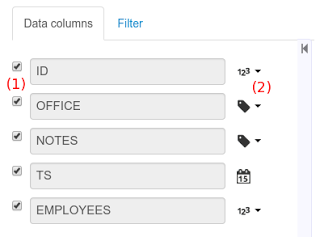

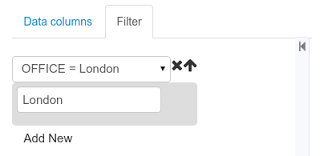

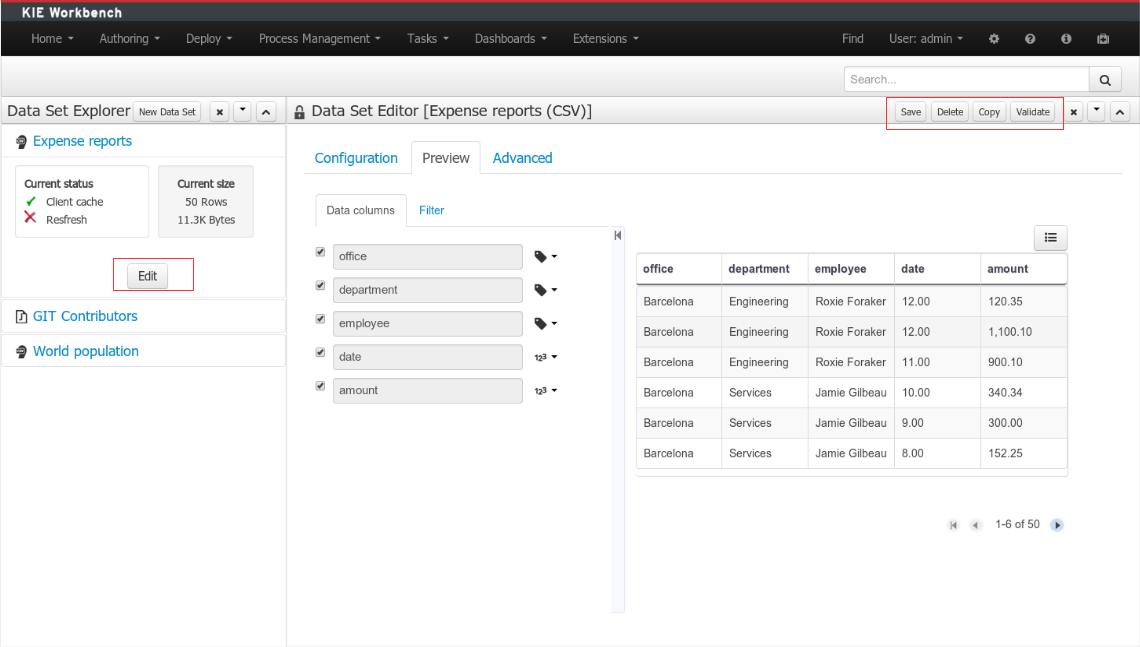

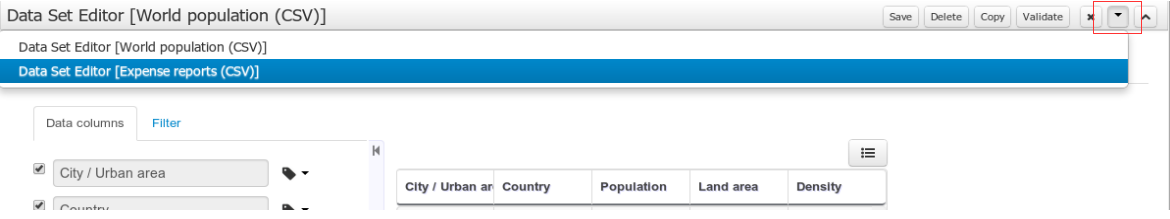

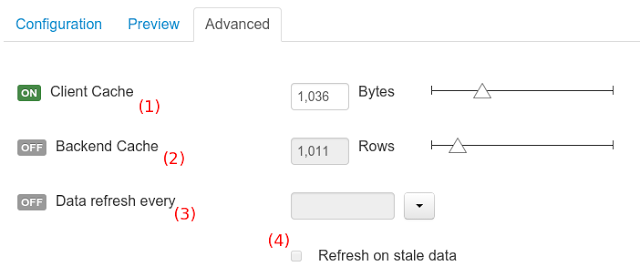

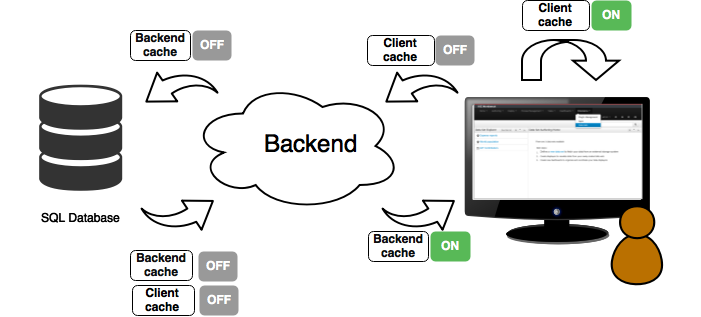

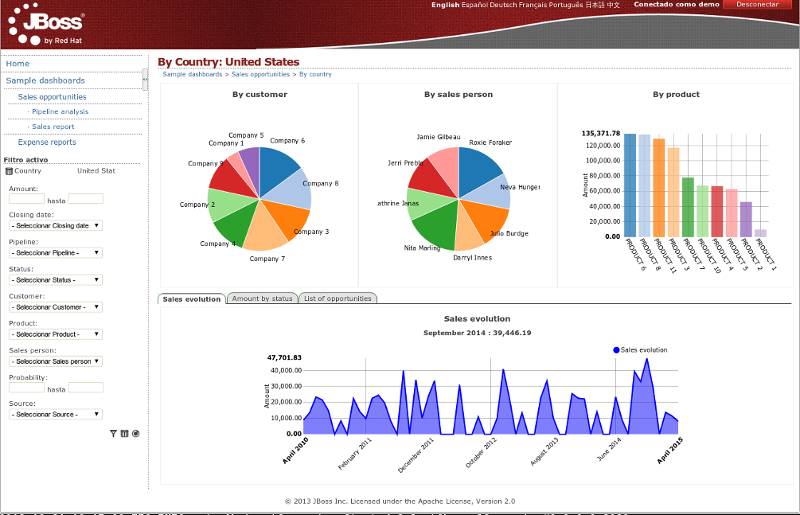

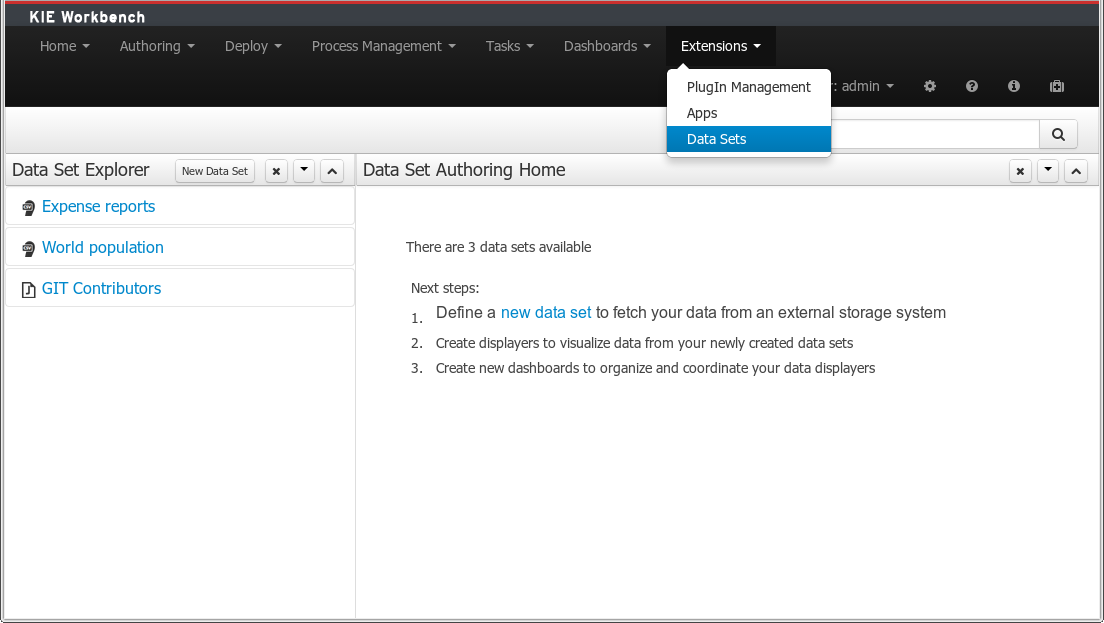

As of version 6.0, jBPM comes with a full-featured BAM tooling which allows non-technical users to visually compose business dashboards. With this brand new module, to develop business activity monitoring and reporting solutions on top of jBPM has never been so easy!

Key features:

Visual configuration of dashboards (Drag'n'drop).

Graphical representation of KPIs (Key Performance Indicators).

Configuration of interactive report tables.

Data export to Excel and CSV format.

Filtering and search, both in-memory or SQL based.

Data extraction from external systems, through different protocols.

Granular access control for different user profiles.

Look'n'feel customization tools.

Pluggable chart library architecture.

Chart libraries provided: NVD3 & OFC2.

Target users:

Managers / Business owners. Consumer of dashboards and reports.

IT / System architects. Connectivity and data extraction.

Analysts. Dashboard composition & configuration.

To get further information about the new and noteworthy BAM capabilities of jBPM please read the chapter Business Activity Monitoring.

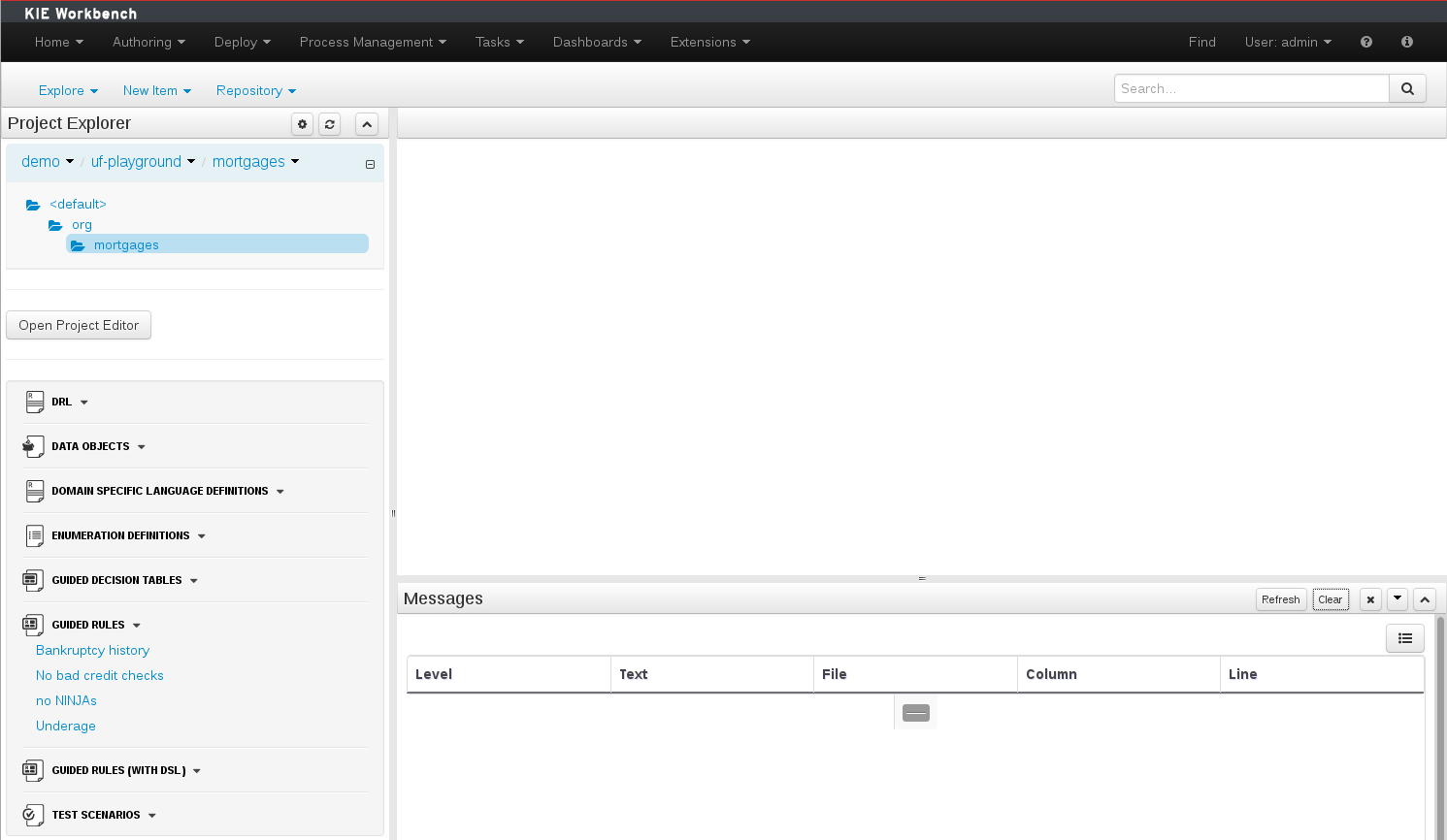

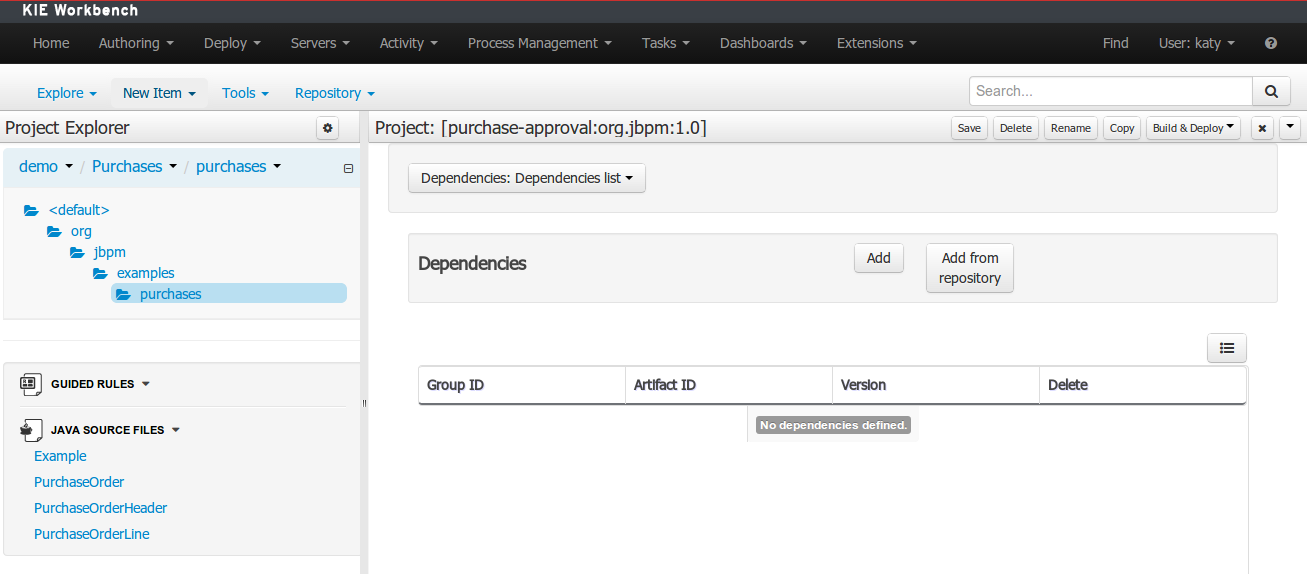

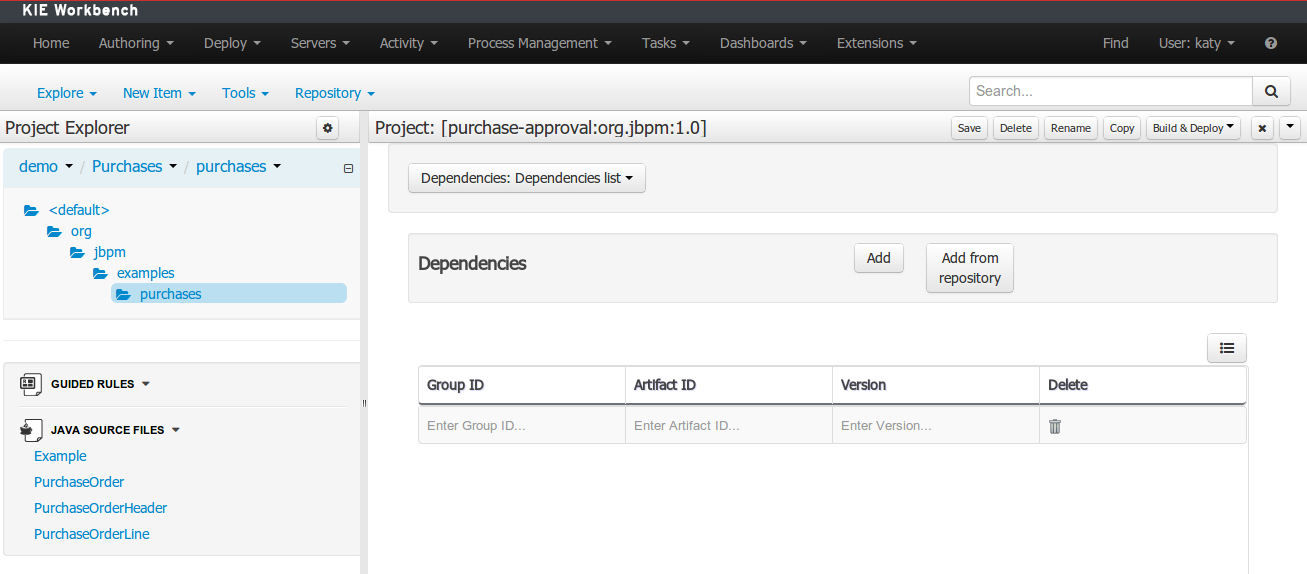

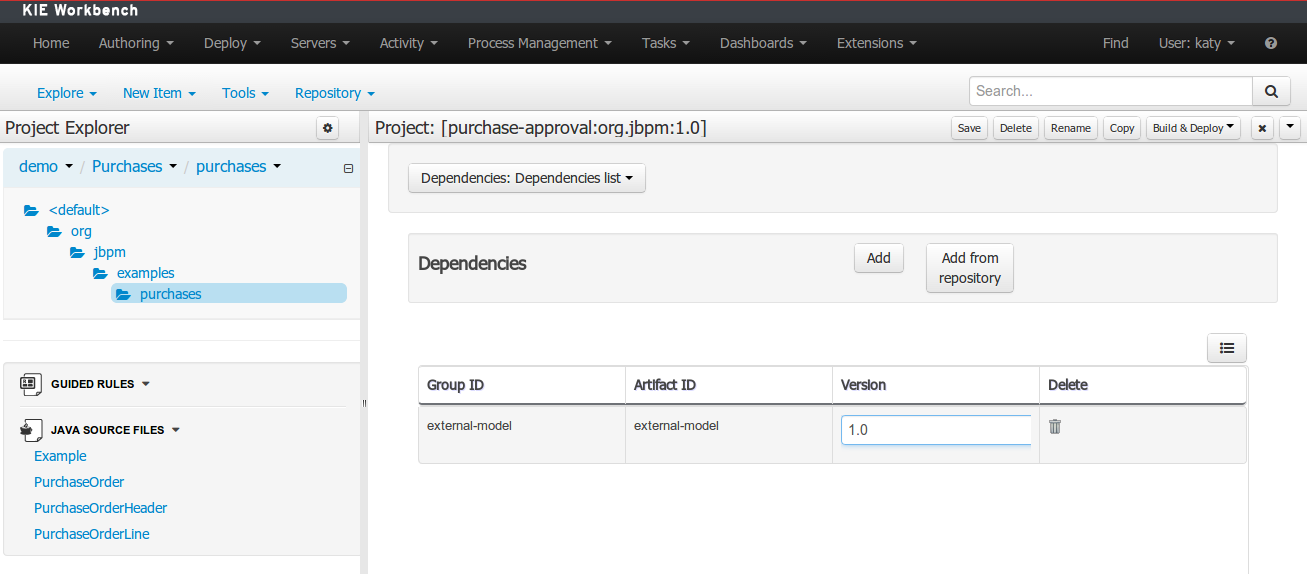

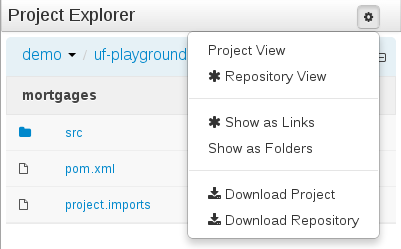

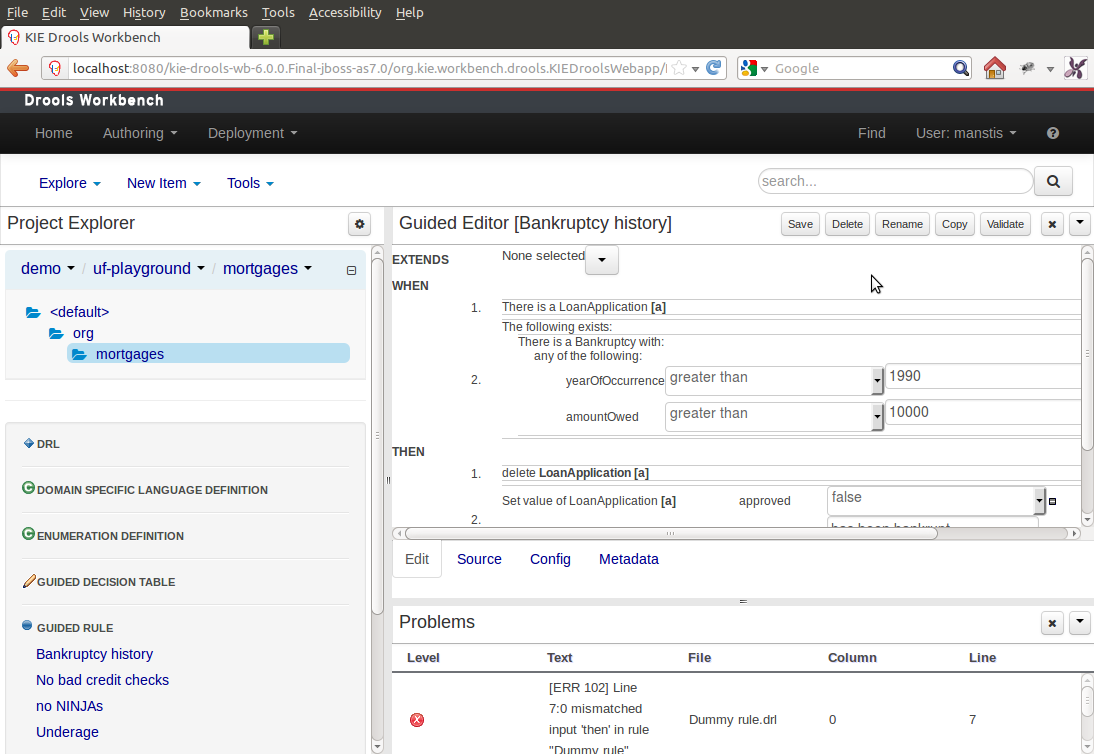

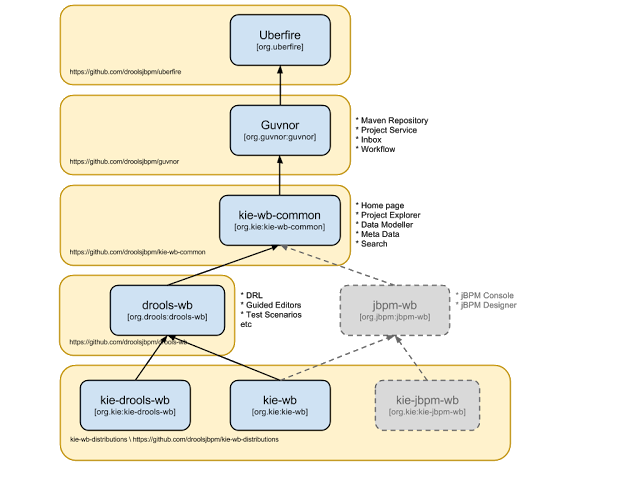

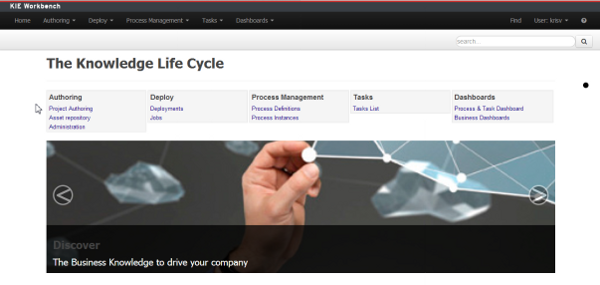

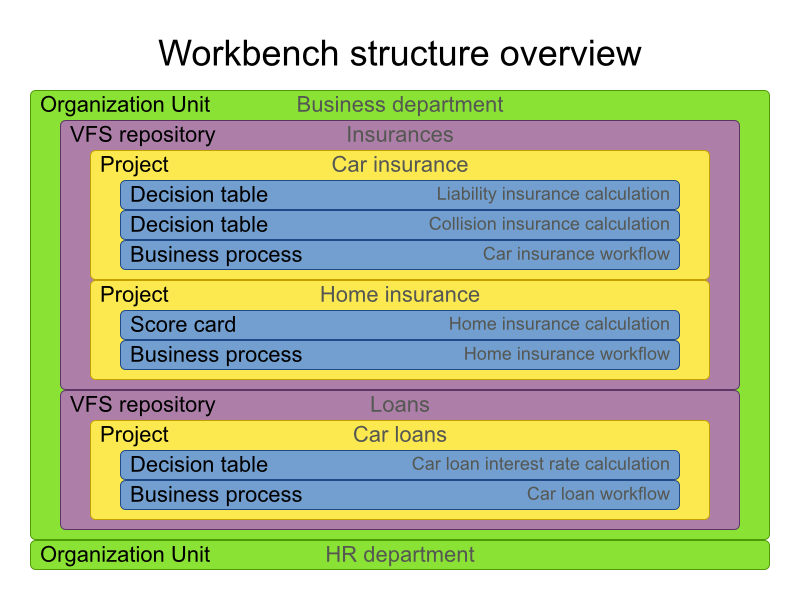

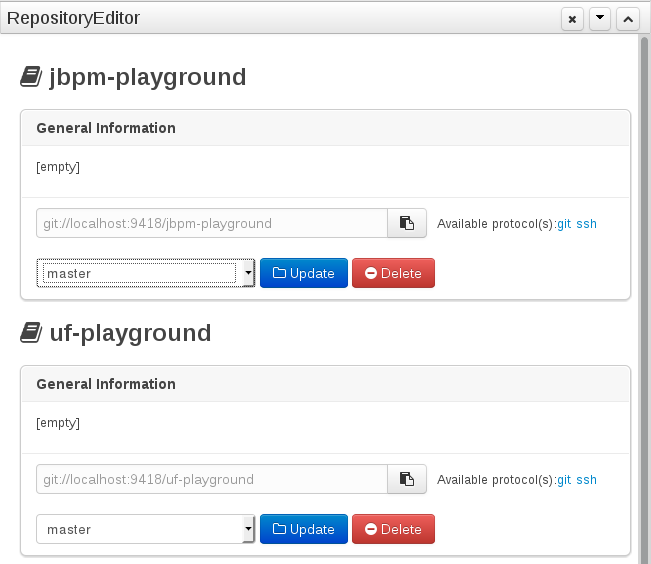

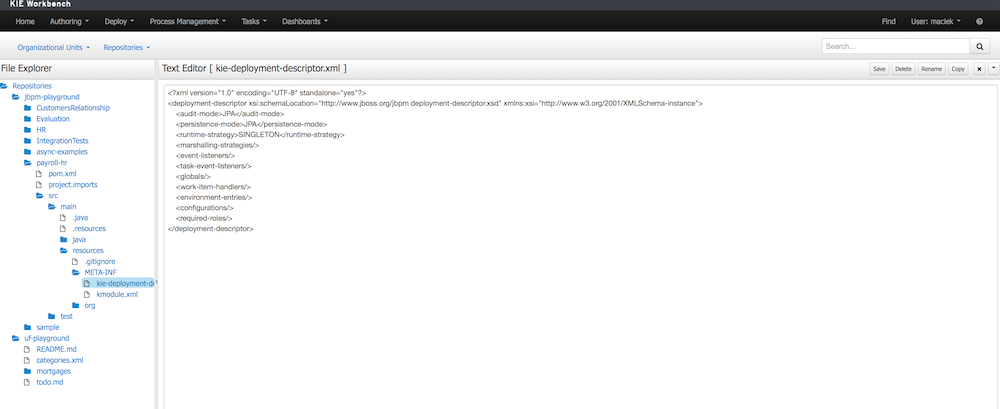

The workbench is the web-based application that combines all of the above web-based tools into one configurable solution.

It supports the following:

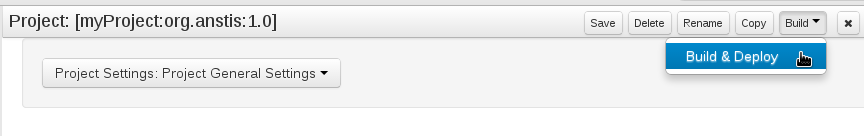

- A repository service to store your business processes and related artefacts, using a Git repository, which supports versioning, remote accessing (as a file system), and using REST services.

- A web-based user interface to manage your business processes, targeted towards business users; it also supports the visualization (and editing) of your artifacts (the web-based editors like designer, data and form modeler are integrated here), but also categorisation, build and deployment, etc..

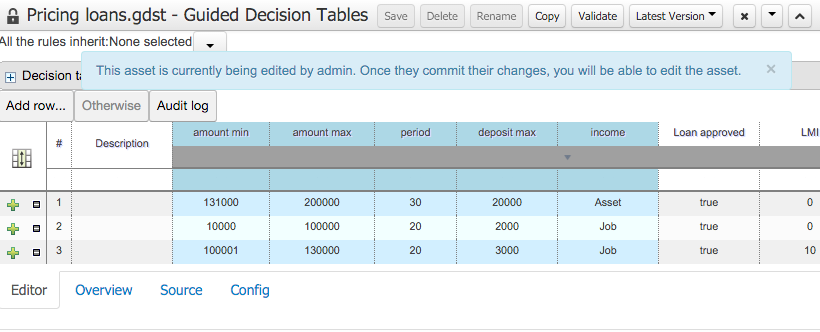

- Collaboration features to have multiple actors (for example business users and developers) work together on the same project.

Workbench application covers complete life cycle of BPM projects starting at authoring phase, going through implementation, execution and monitoring.

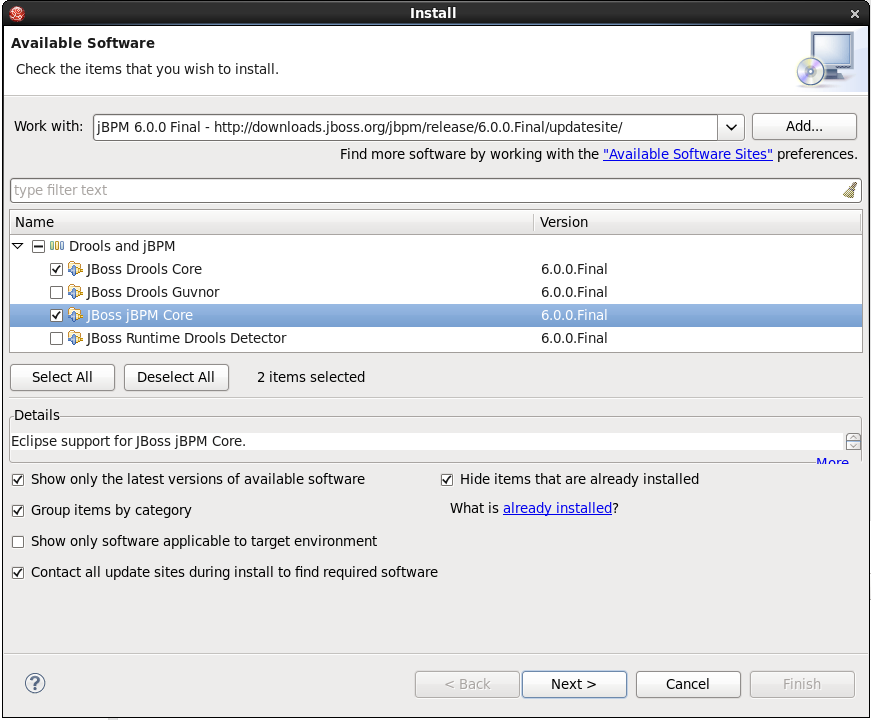

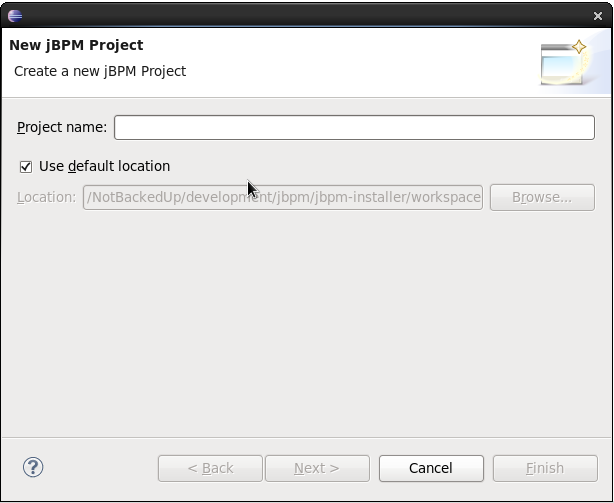

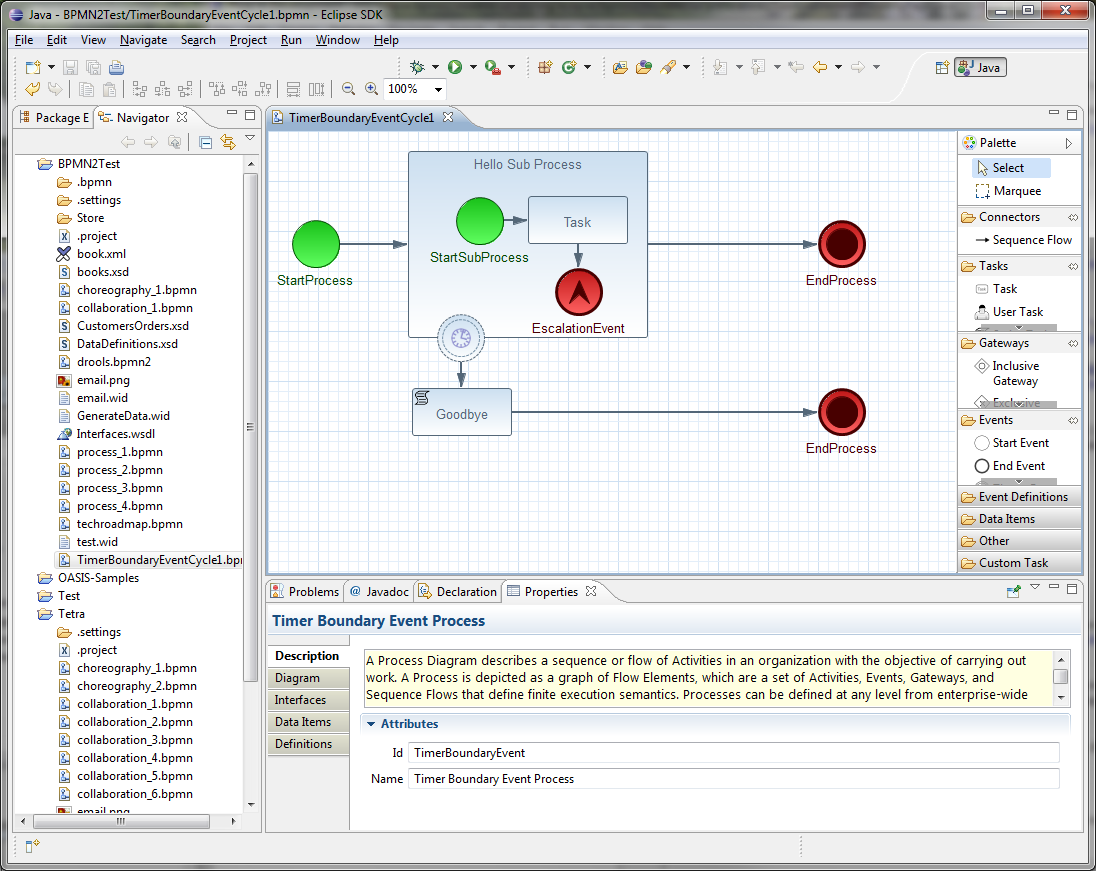

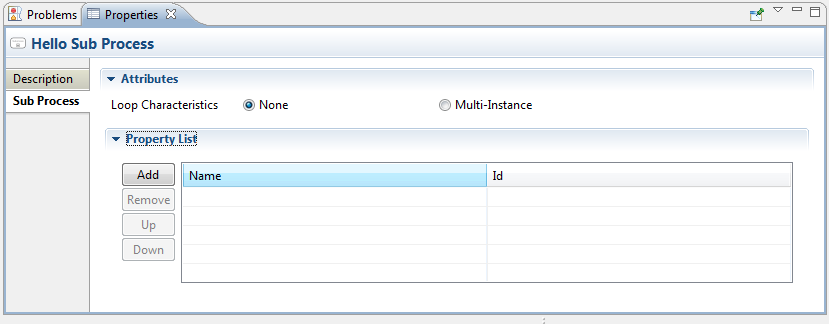

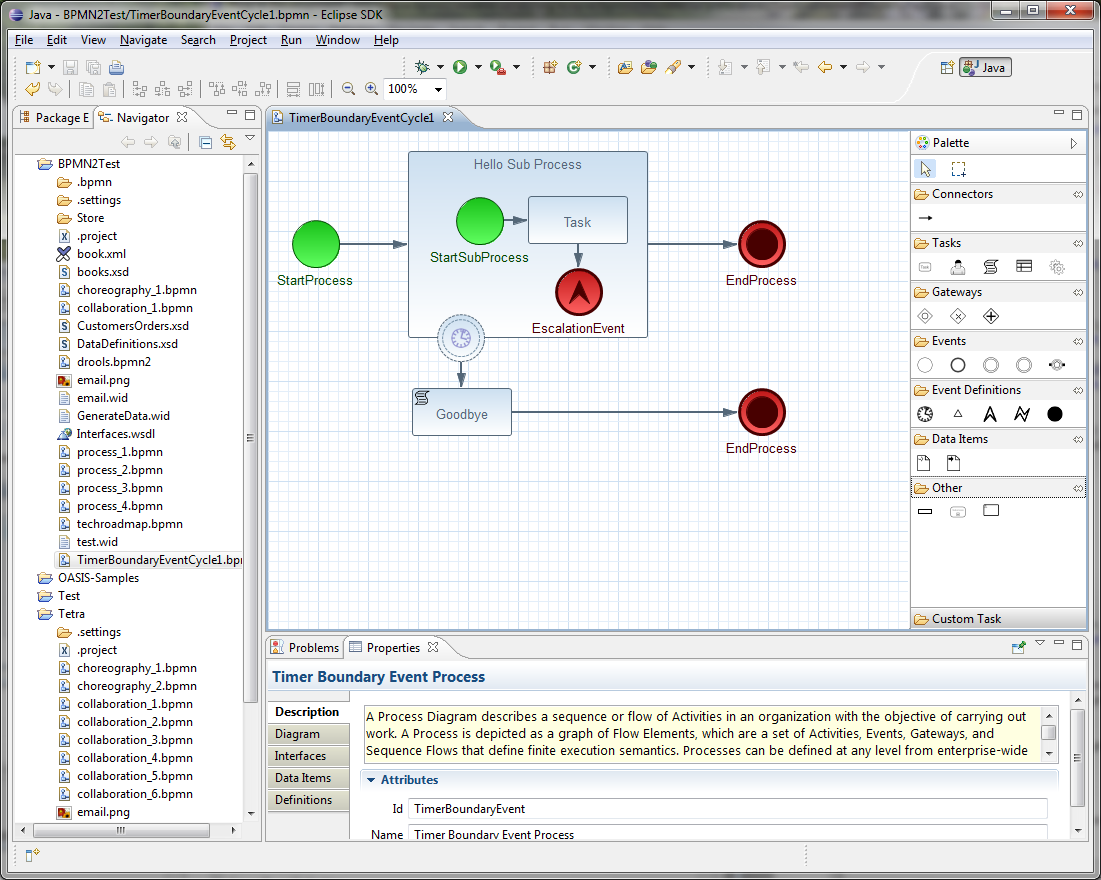

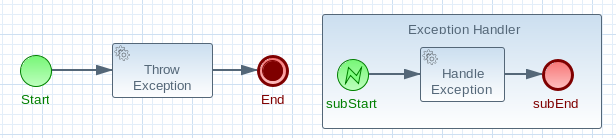

The Eclipse-based tools are a set of plugins to the Eclipse IDE and allow you to integrate your business processes in your development environment. It is targeted towards developers and has some wizards to get started, a graphical editor for creating your business processes (using drag and drop) and a lot of advanced testing and debugging capabilities.

It includes the following features:

- Wizard for creating a new jBPM project

- A graphical editor for BPMN 2.0 processes

- The ability to plug in your own domain-specific nodes

- Validation

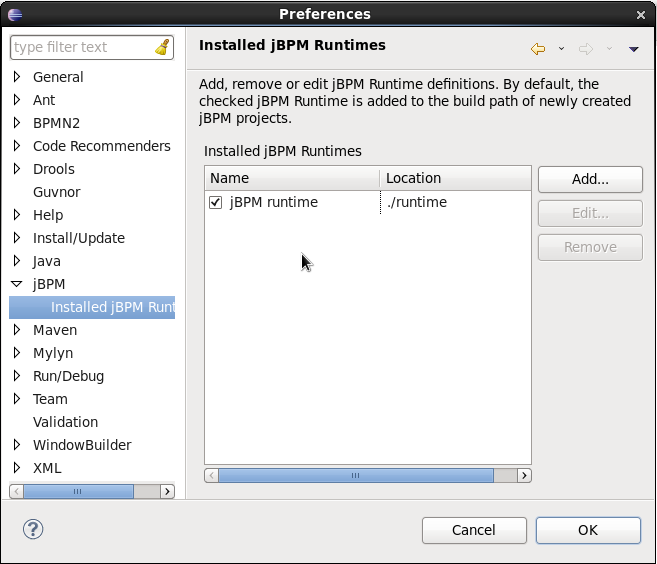

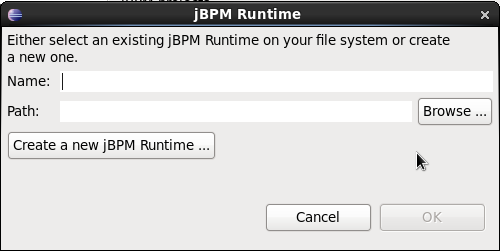

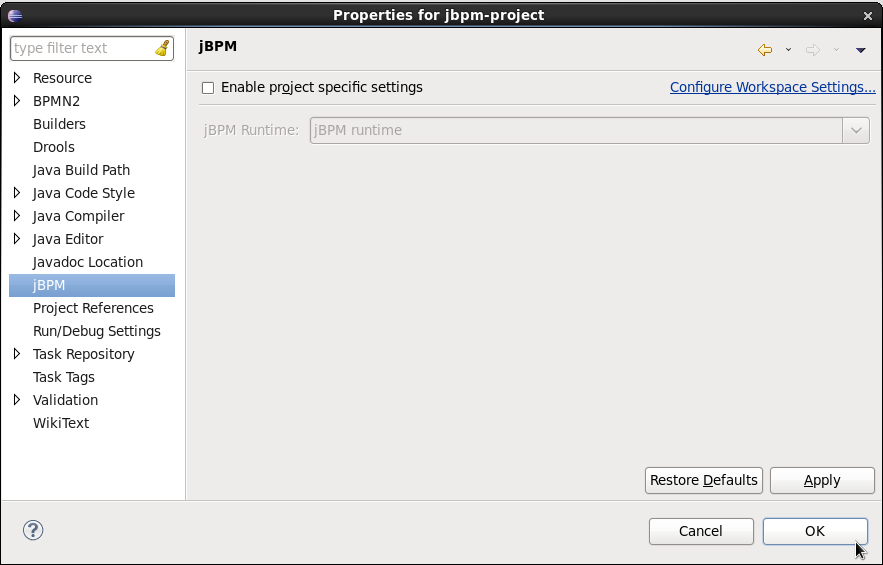

- Runtime support (so you can select which version of jBPM you would like to use)

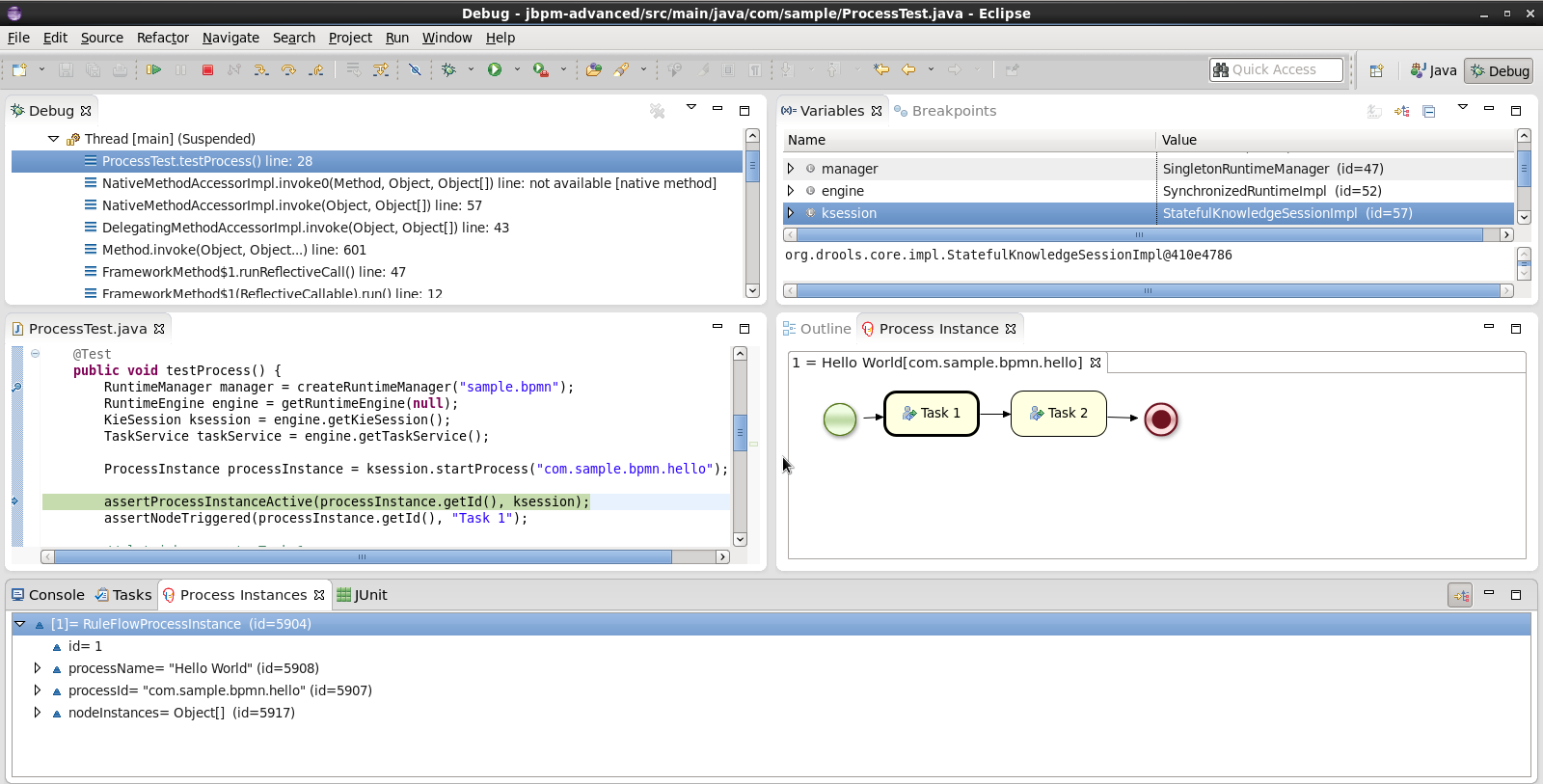

- Graphical debugging to see all running process instances of a selected session, to visualize the current state of one specific process instance, etc.

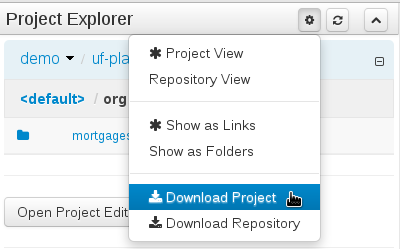

All releases can be downloaded from SourceForge. Select the version you want to download and then select which artifact you want:

bin: all the jBPM binaries (JARs) and their dependencies

src: the sources of the core components

docs: the documentation

examples: some jBPM examples, can be imported into Eclipse

installer: the jbpm-installer, downloads and installs a demo setup of jBPM

installer-full: the jbpm-installer, downloads and installs a demo setup of jBPM, already contains a number of dependencies prepackages (so they don't need to be downloaded separately)

If you like to take a quick tutorial that will guide you through most of the components using a simple example, take a look at the Installer chapter. This will teach you how to download and use the installer to create a demo setup, including most of the components. It uses a simple example to guide you through the most important features. Screencasts are available to help you out as well.

If you like to read more information first, the following chapters first focus on the core engine (API, BPMN 2.0, etc.). Further chapters will then describe the other components and other more complex topics like domain-specific processes, flexible processes, etc. After reading the core chapters, you should be able to jump to other chapters that you might find interesting.

You can also start playing around with some examples that are offered in a separate download. Check out the examples chapter to see how to start playing with these.

After reading through these chapters, you should be ready to start creating your own processes and integrate the engine with your application. These processes can be started from the installer or be started from scratch.

Here are a lot of useful links part of the jBPM community:

A feed of blog entries related to jBPM

A user forum for asking questions and giving answers

A JIRA bug tracking system for bugs, feature requests and roadmap

A continuous build server for getting the latest snapshots

Please feel free to join us in our IRC channel at chat.freenode.net #jbpm. This is where most of the real-time discussion about the project takes place and where you can find most of the developers most of their time as well. Don't have an IRC client installed? Simply go to http://webchat.freenode.net/, input your desired nickname, and specify #jbpm. Then click login to join the fun.

The jBPM code itself is using the Apache License v2.0.

Some other components we integrate with have their own license:

The new Eclipse BPMN2 plugin is Eclipse Public License (EPL) v1.0.

The web-based designer is based on Oryx/Wapama and is MIT License

The Drools project is Apache License v2.0.

jBPM now uses git for its source code version control system. The sources of the jBPM project can be found here (including all releases starting from jBPM 5.0-CR1):

https://github.com/droolsjbpm/jbpm

The source of some of the other components we integrate with can be found here:

If you're interested in building the source code, contributing, releasing, etc. make sure to read this README.

We are often asked "How do I get involved". Luckily the answer is simple, just write some code and submit it :) There are no hoops you have to jump through or secret handshakes. We have a very minimal "overhead" that we do request to allow for scalable project development. Below we provide a general overview of the tools and "workflow" we request, along with some general advice.

If you contribute some good work, don't forget to blog about it :)

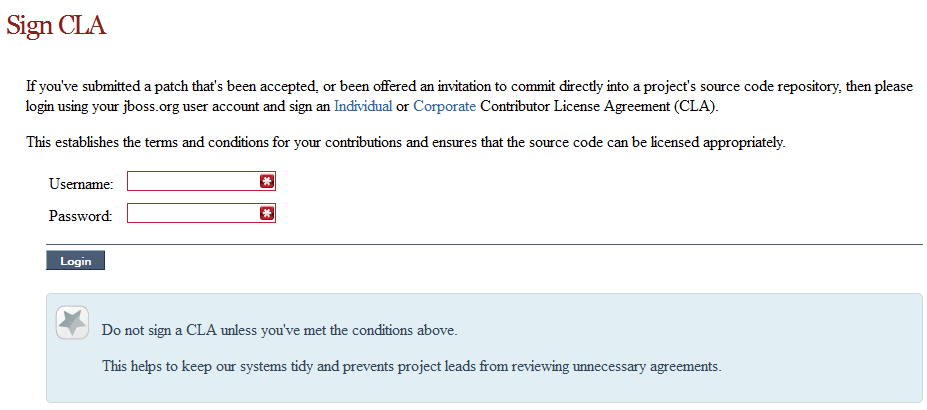

Signing to jboss.org will give you access to the JBoss wiki, forums and JIRA. Go to http://www.jboss.org/ and click "Register".

The only form you need to sign is the contributor agreement, which is fully automated via the web. As the image below says "This establishes the terms and conditions for your contributions and ensures that source code can be licensed appropriately"

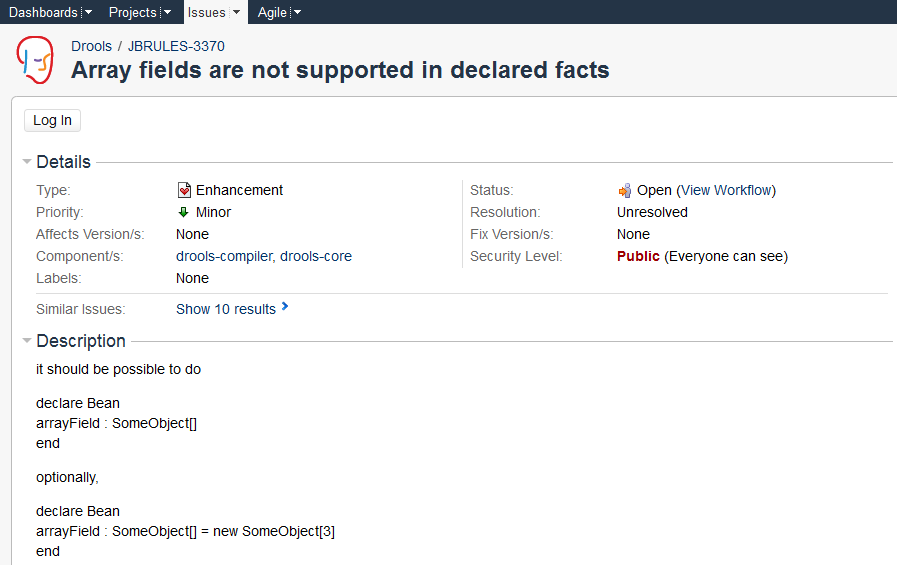

To be able to interact with the core development team you will need to use JIRA, the issue tracker. This ensures that all requests are logged and allocated to a release schedule and all discussions captured in one place. Bug reports, bug fixes, feature requests and feature submissions should all go here. General questions should be undertaken at the mailing lists.

Minor code submissions, like format or documentation fixes do not need an associated JIRA issue created.

https://issues.jboss.org/browse/JBRULES (Drools)

https://issues.jboss.org/browse/JBPM

https://issues.jboss.org/browse/GUVNOR

With the contributor agreement signed and your requests submitted to JIRA you should now be ready to code :) Create a GitHub account and fork any of the Drools, jBPM or Guvnor repositories. The fork will create a copy in your own GitHub space which you can work on at your own pace. If you make a mistake, don't worry blow it away and fork again. Note each GitHub repository provides you the clone (checkout) URL, GitHub will provide you URLs specific to your fork.

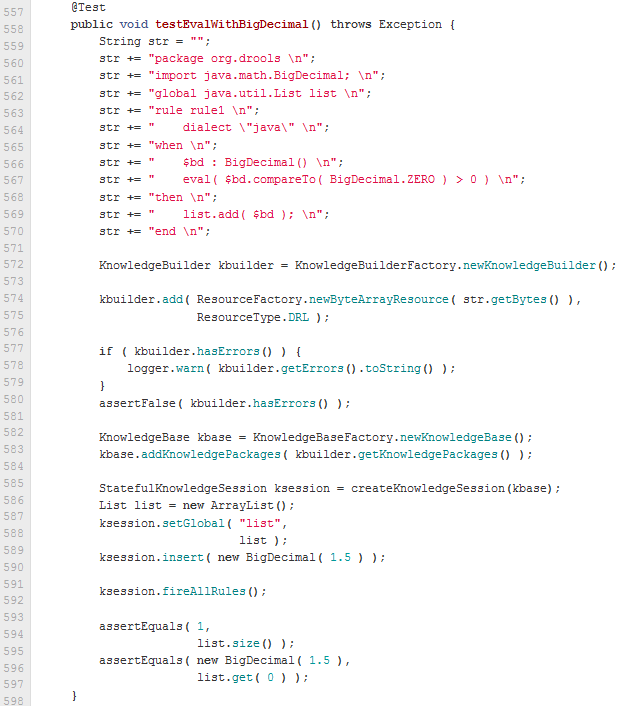

When writing tests, try and keep them minimal and self contained. We prefer to keep the DRL fragments within the test, as it makes for quicker reviewing. If their are a large number of rules then using a String is not practical so then by all means place them in separate DRL files instead to be loaded from the classpath. If your tests need to use a model, please try to use those that already exist for other unit tests; such as Person, Cheese or Order. If no classes exist that have the fields you need, try and update fields of existing classes before adding a new class.

There are a vast number of tests to look over to get an idea, MiscTest is a good place to start.

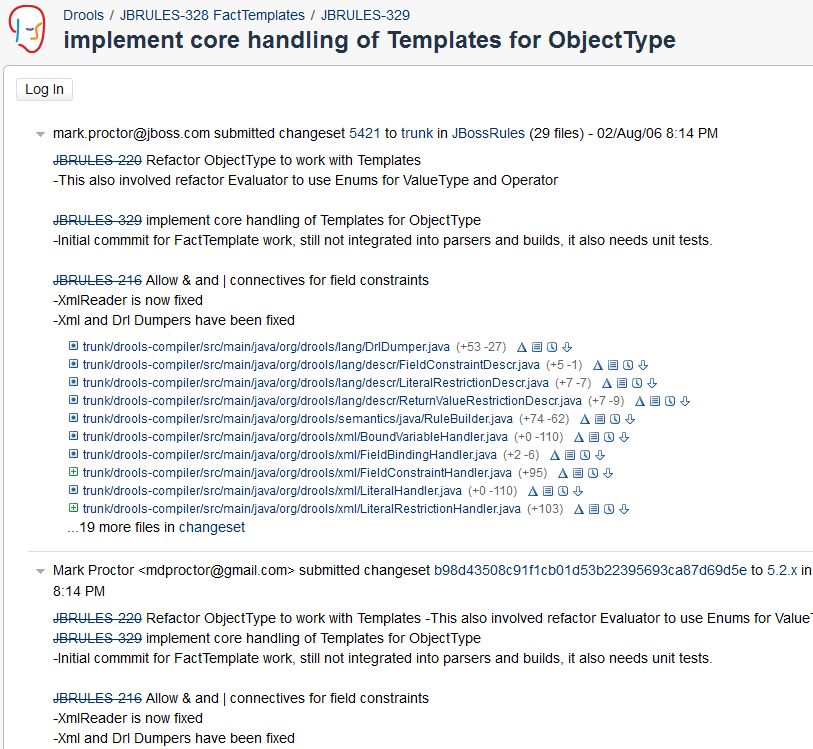

When you commit, make sure you use the correct conventions. The commit must start with the JIRA issue id, such as JBRULES-220. This ensures the commits are cross referenced via JIRA, so we can see all commits for a given issue in the same place. After the id the title of the issue should come next. Then use a newline, indented with a dash, to provide additional information related to this commit. Use an additional new line and dash for each separate point you wish to make. You may add additional JIRA cross references to the same commit, if it's appropriate. In general try to avoid combining unrelated issues in the same commit.

Don't forget to rebase your local fork from the original master and then push your commits back to your fork.

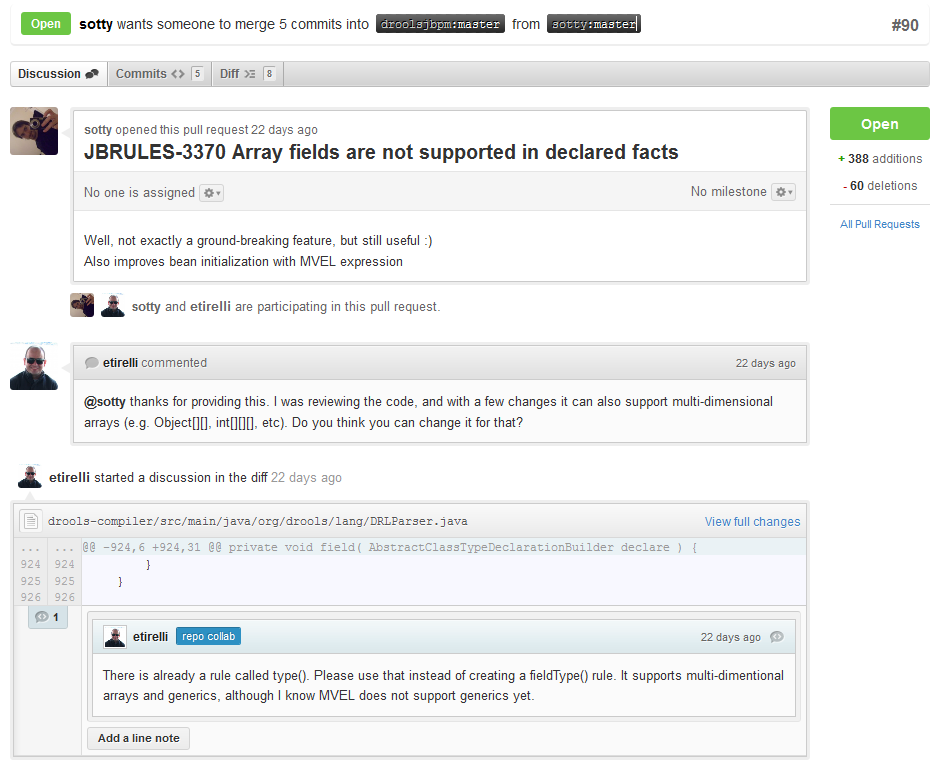

With your code rebased from original master and pushed to your personal GitHub area, you can now submit your work as a pull request. If you look at the top of the page in GitHub for your work area their will be a "Pull Request" button. Selecting this will then provide a gui to automate the submission of your pull request.

The pull request then goes into a queue for everyone to see and comment on. Below you can see a typical pull request. The pull requests allow for discussions and it shows all associated commits and the diffs for each commit. The discussions typically involve code reviews which provide helpful suggestions for improvements, and allows for us to leave inline comments on specific parts of the code. Don't be disheartened if we don't merge straight away, it can often take several revisions before we accept a pull request. Luckily GitHub makes it very trivial to go back to your code, do some more commits and then update your pull request to your latest and greatest.

It can take time for us to get round to responding to pull requests, so please be patient. Submitted tests that come with a fix will generally be applied quite quickly, where as just tests will often way until we get time to also submit that with a fix. Don't forget to rebase and resubmit your request from time to time, otherwise over time it will have merge conflicts and core developers will general ignore those.

You can always contact the jBPM community for assistance.

IRC: #jbpm at chat.freenode.net

This script assumes you have Java JDK 1.6+ (set as JAVA_HOME), and Ant 1.7+ installed. If you don't, use the following links to download and install them:

Java: http://java.sun.com/javase/downloads/index.jsp

Ant: http://ant.apache.org/bindownload.cgi

Tip

To check whether Java and Ant are installed correctly, type the following commands inside a command prompt:

java -version

ant -version

This should return information about which version of Java and Ant you are currently using.

First of all, you need to download the installer and unzip it to your local file system. There are two versions

full installer - which already contains a lot of the dependencies that are necessary during the installation

minimal installer - which only contains the installer and will download all dependencies

In general, it is probably best to download the full installer: jBPM-{version}-installer-full.zip

You can also find the latest snapshot release here (only minimal installer) here:

https://hudson.jboss.org/jenkins/job/jBPM/lastSuccessfulBuild/artifact/jbpm-distribution/target/

The easiest way to get started is to simply run the installation script to install the demo setup. The demo install will setup all the web tooling (on top of WildFly) and Eclipse tooling in a pre-configured setup. Go into the jbpm-installer folder where you unzipped the installer and (from a command prompt) run:

ant install.demoThis will:

Download WildFly application server

Configure and deploy the web tooling

Download Eclipse

Install the Drools and jBPM Eclipse plugin

Install the Eclipse BPMN 2.0 Modeler

Running this command could take a while (REALLY, not kidding, we are for example downloading an Eclipse installation, even if you downloaded the full installer, specifically for your operating system).

Tip

The script always shows which file it is downloading (you could for example check whether it is still downloading by checking the whether the size of the file in question in the jbpm-installer/lib folder is still increasing). If you want to avoid downloading specific components (because you will not be using them or you already have them installed somewhere else), check below for running only specific parts of the demo or directing the installer to an already installed component.

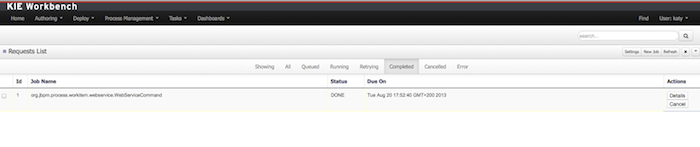

Once the demo setup has finished, you can start playing with the various components by starting the demo setup:

ant start.demoThis will:

Start H2 database server

Start WildFly application server

Start Eclipse

Now wait until the process management console comes up:

http://localhost:8080/jbpm-console

Note

It could take a minute to start up the application server and web application. If the web page doesn't show up after a while, make sure you don't have a firewall blocking that port, or another application already using the port 8080. You can always take a look at the server log jbpm-installer/wildfly-8.1.0.Final/standalone/log/server.log

Finally, if you also want to use the DashBuilder for reporting (which is implemented as a separate war), you can now also install this:

ant install.dashboard.into.jbossOnce everything is started, you can start playing with the Eclipse and web tooling, as explained in the following sections.

If you only want to try out the web tooling and do not wish to download and install the Eclipse tooling, you can use these alternative commands:

ant install.demo.noeclipse

ant start.demo.noeclipseSimilarly, if you only want to try out the Eclipse tooling and do not wish to download and install the web tooling, you can use these alternative commands:

ant install.demo.eclipse

ant start.demo.eclipseNow continue with the 10-minute tutorials. Once you're done playing and you want to shut down the demo setup, you can use:

ant stop.demoIf at any point in time would like to start over with a clean demo setup - meaning all changes you did inside the web tooling and/or saved in the database will be lost, you can run the following command (after which you can run the installer again from scratch, note that this cannot be undone):

ant clean.demoOpen up the process management console:

http://localhost:8080/jbpm-console

Note

It could take a minute to start up the AS and web application. If the web page doesn't show up after a while, make sure you don't have a firewall blocking that port, or another application already using the port 8080. You can always take a look at the server log jbpm-installer/jboss-as-7.1.1.Final/standalone/log/server.log

Log in, using krisv / krisv as username / password.

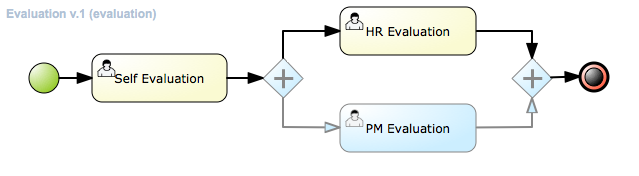

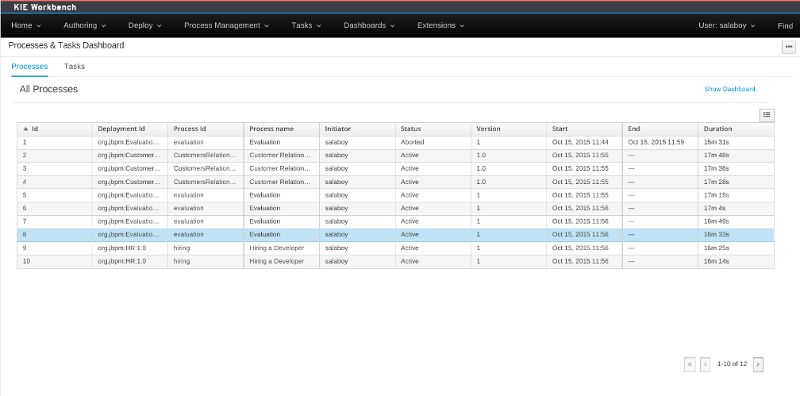

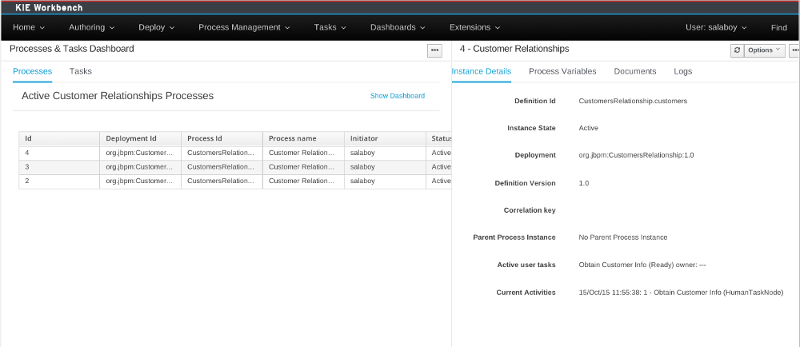

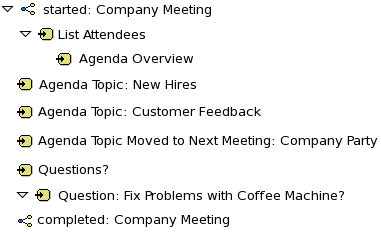

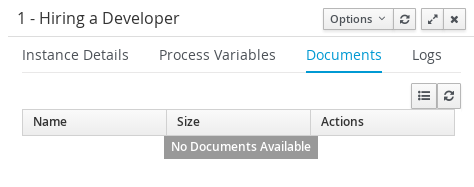

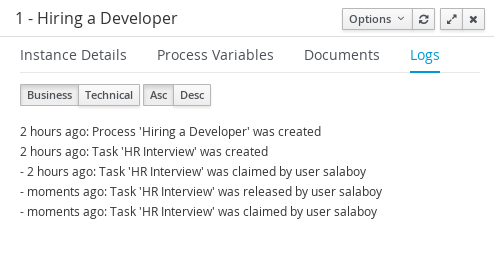

Using a prebuilt Evaluation example, the following screencast gives an overview of how to manage your process instances. It shows you:

- How to build and deploy a process

- How to start a new process instance

- How to look up the current status of a running process instance

- How to look up your tasks

- How to complete a task

- How to generate reports to monitor your process execution

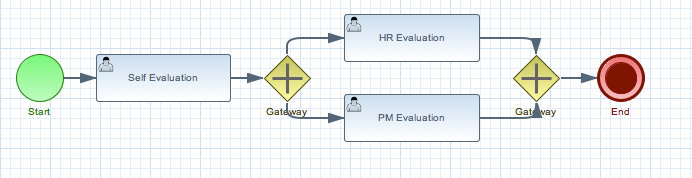

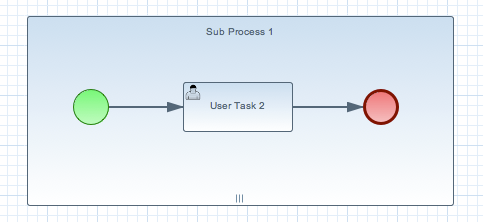

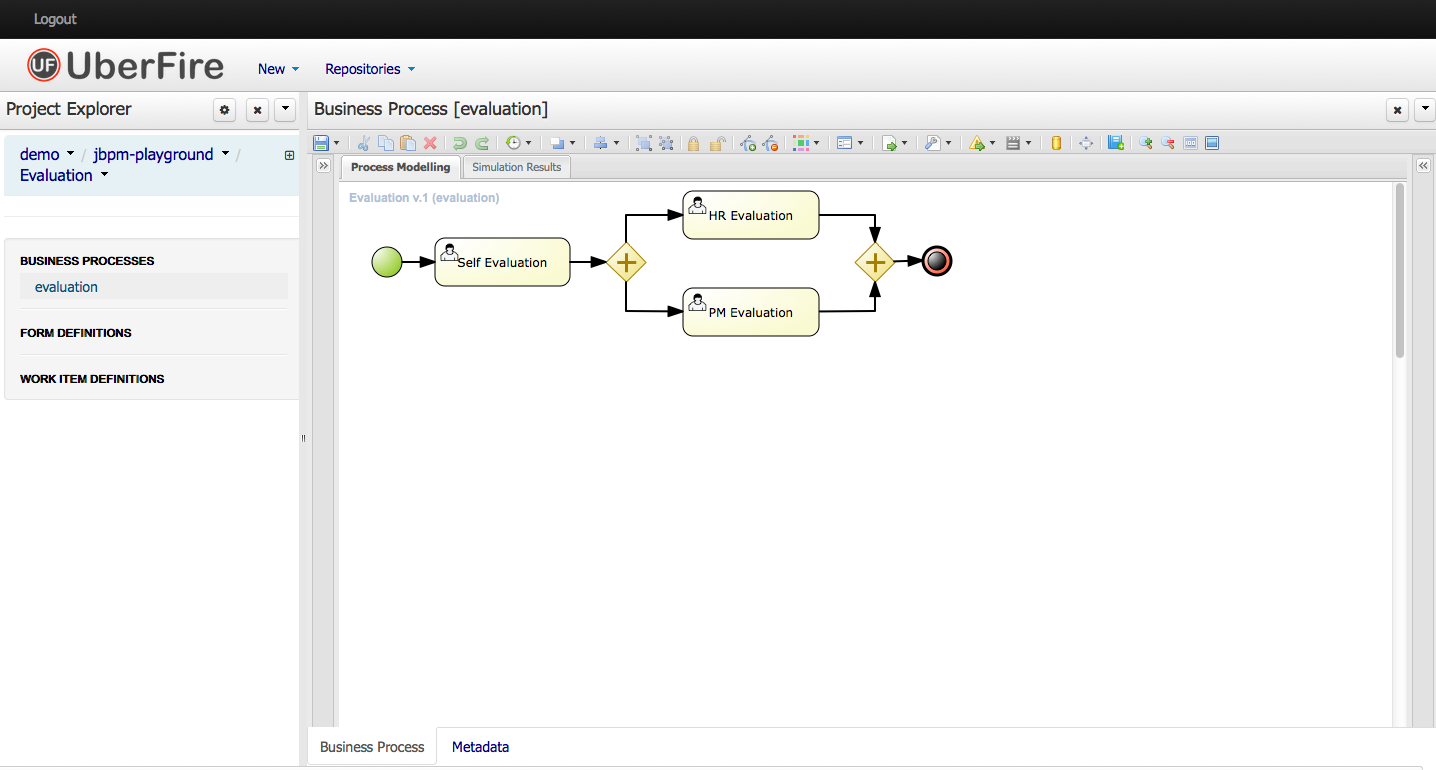

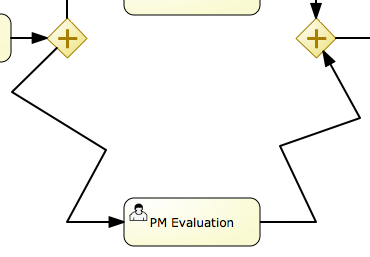

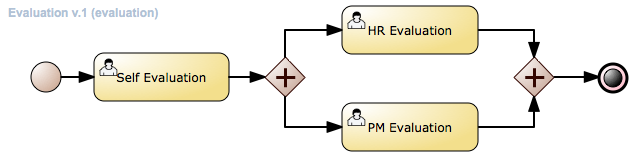

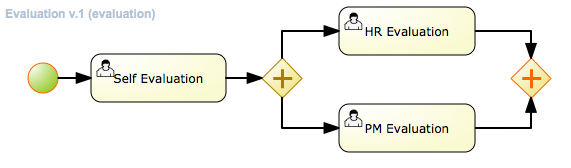

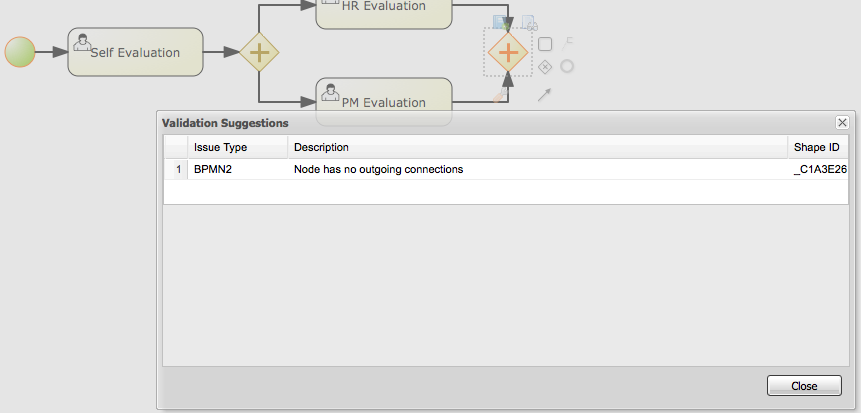

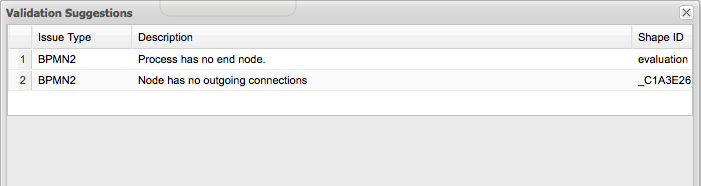

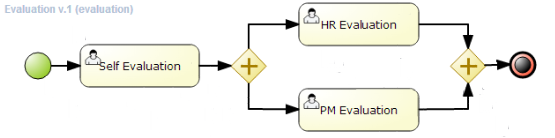

Figure 3.1.

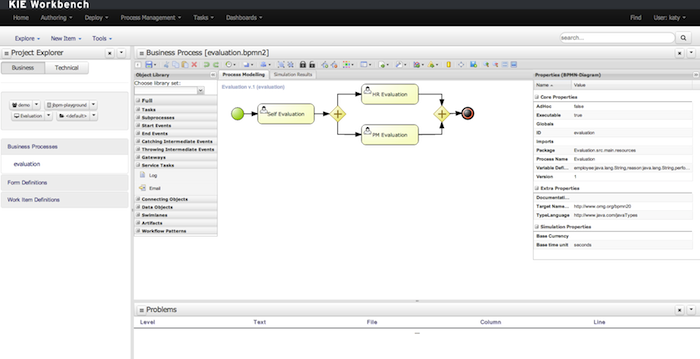

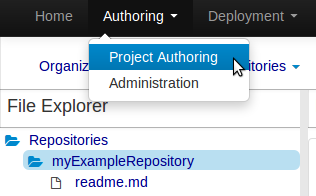

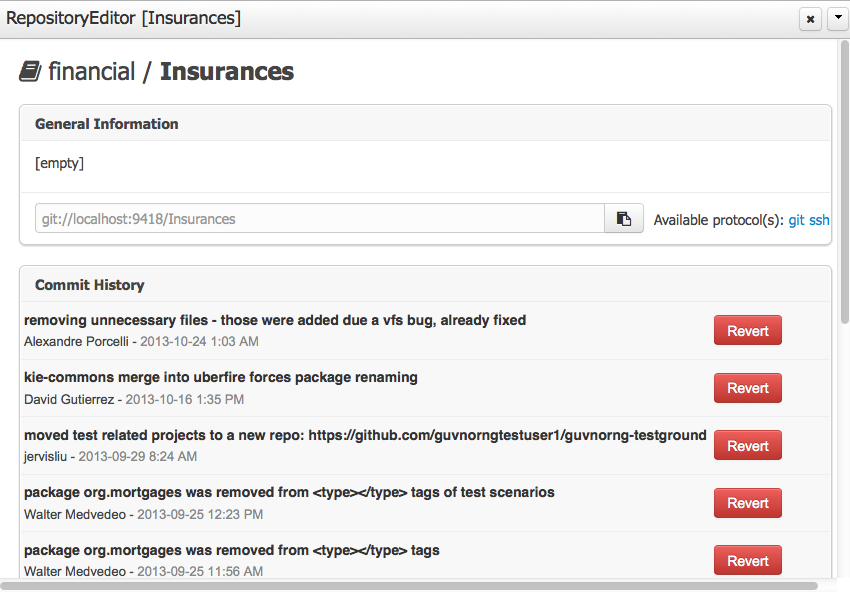

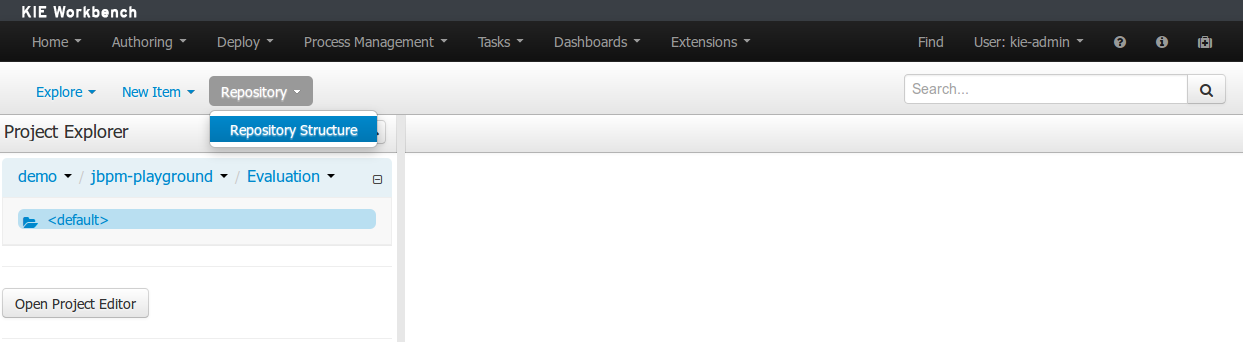

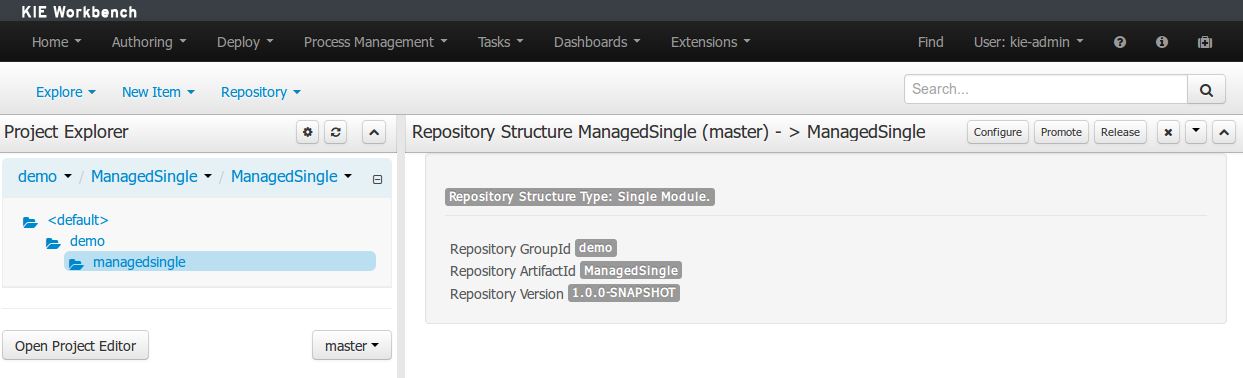

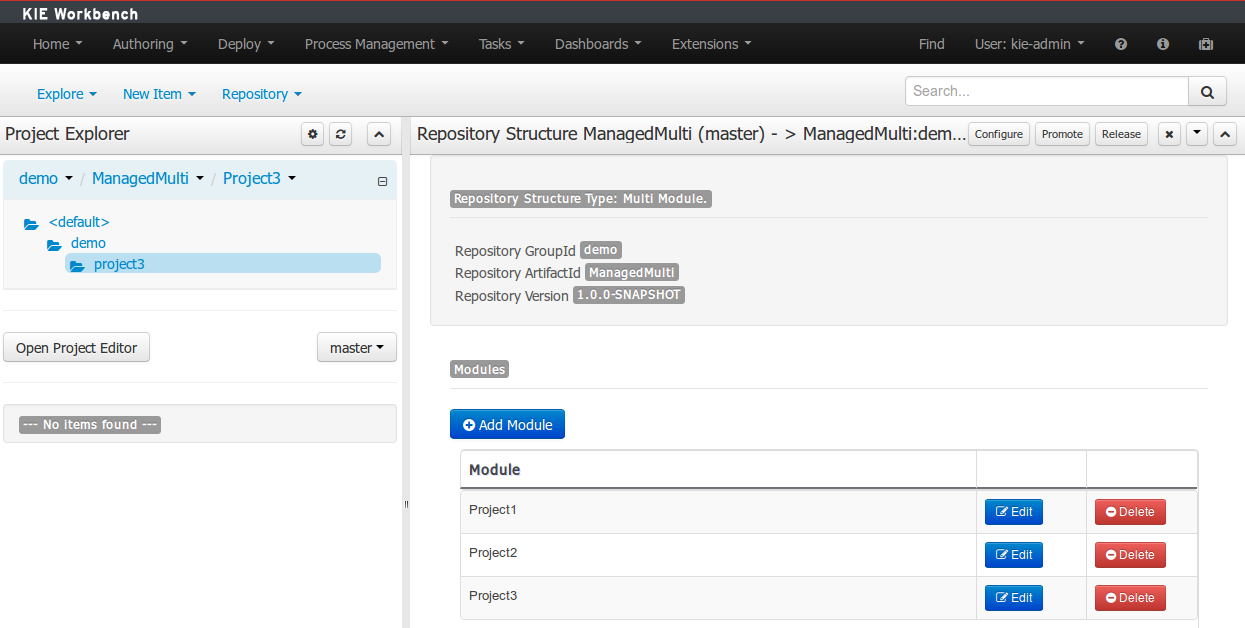

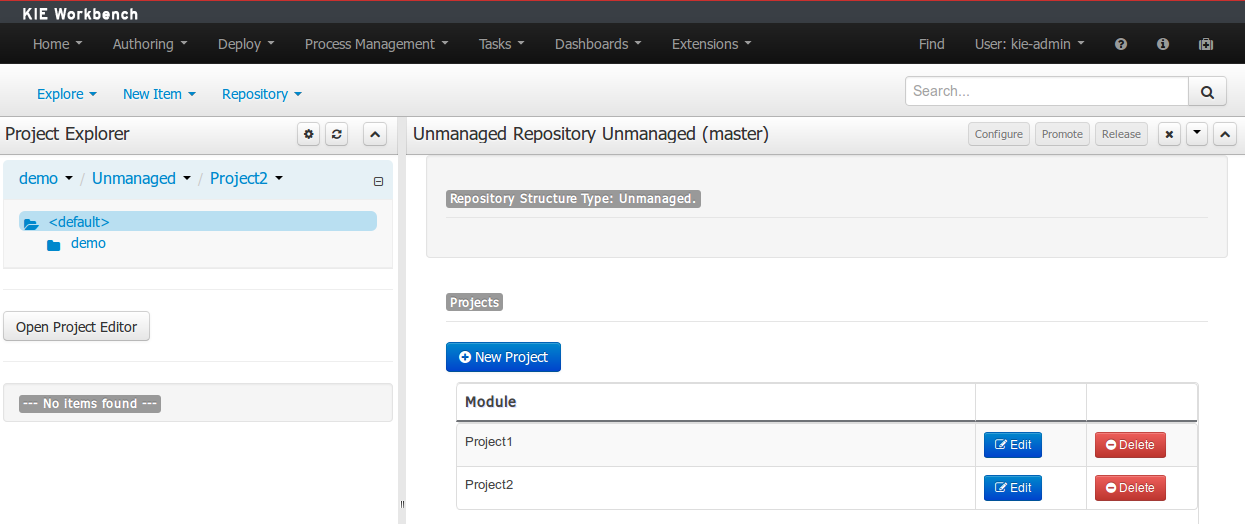

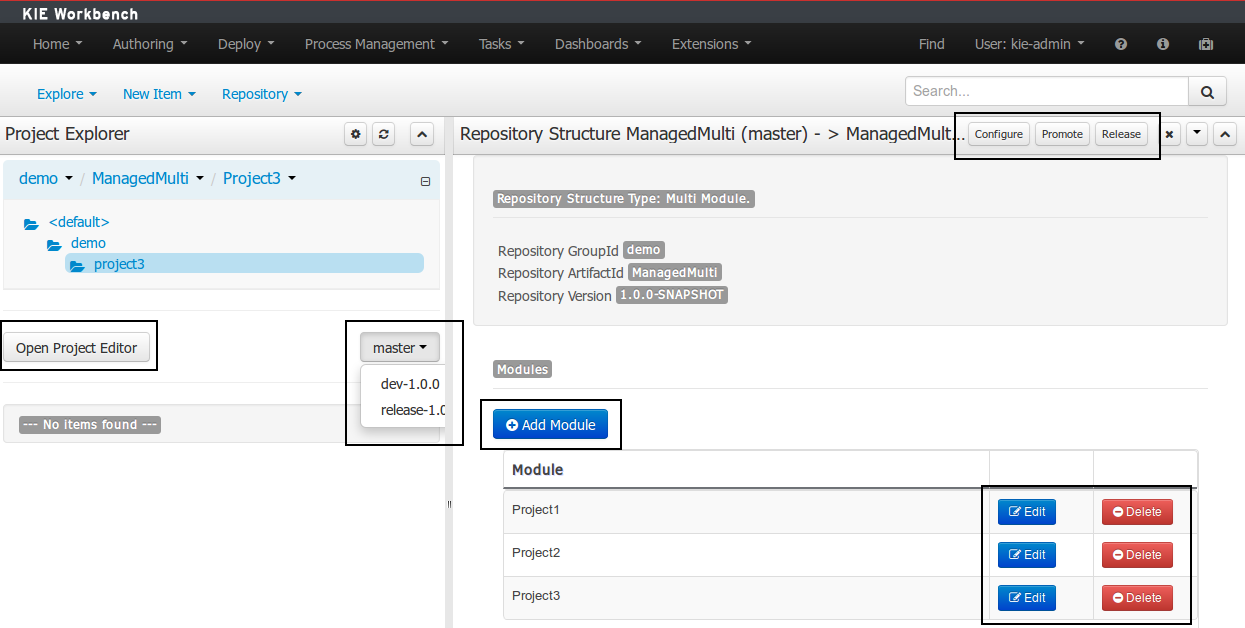

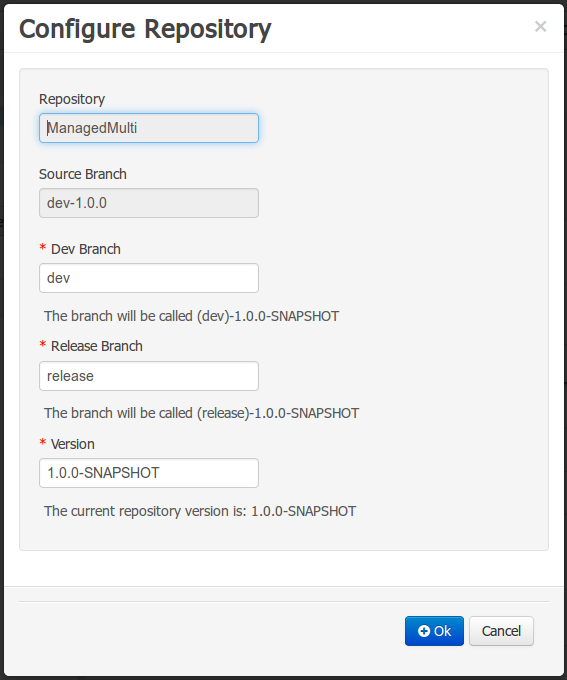

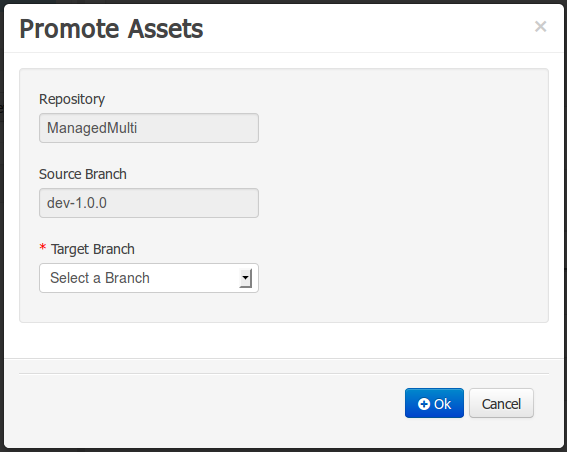

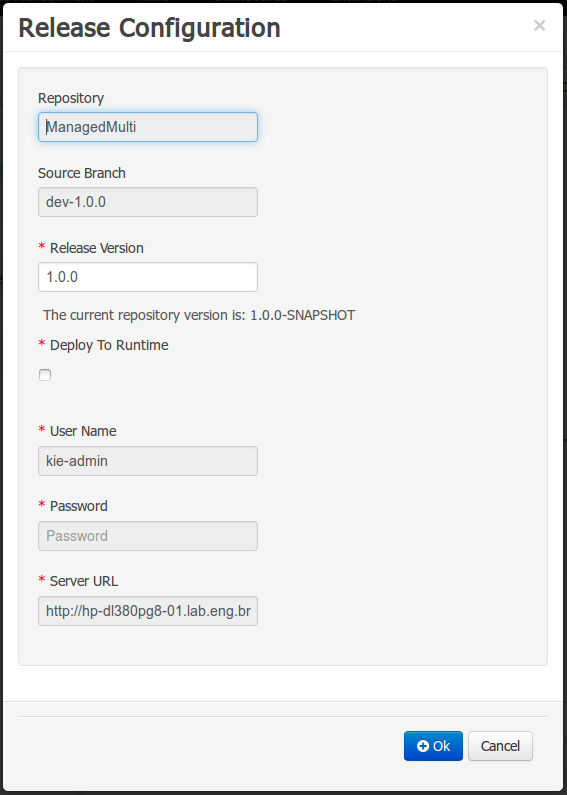

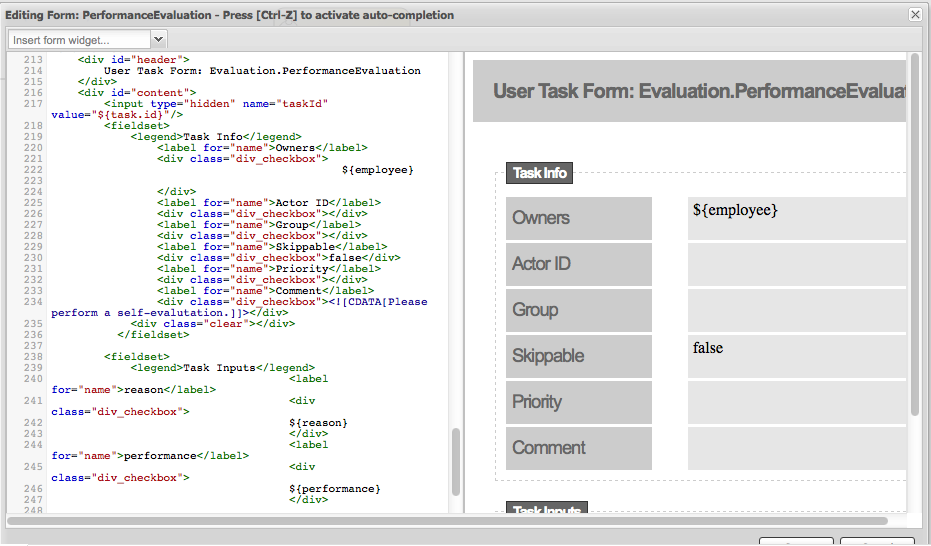

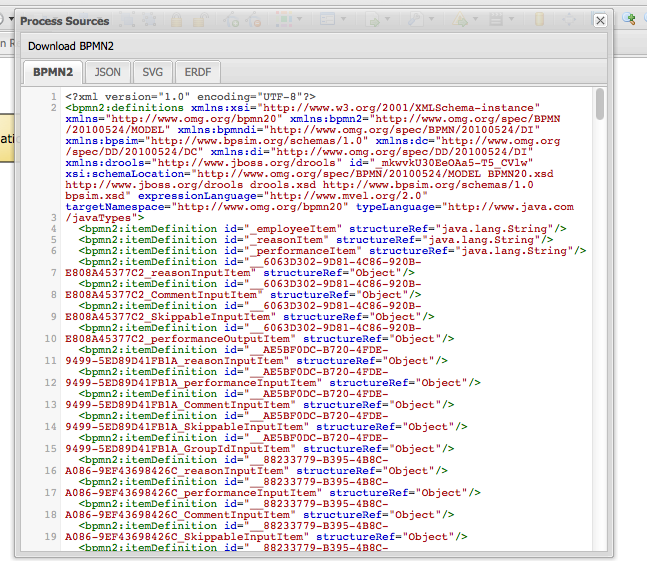

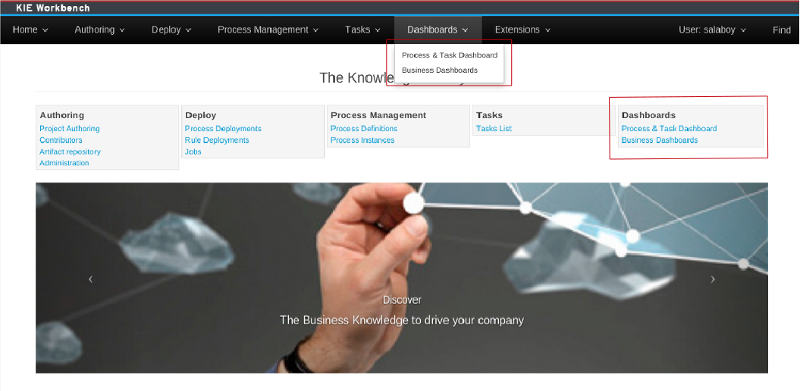

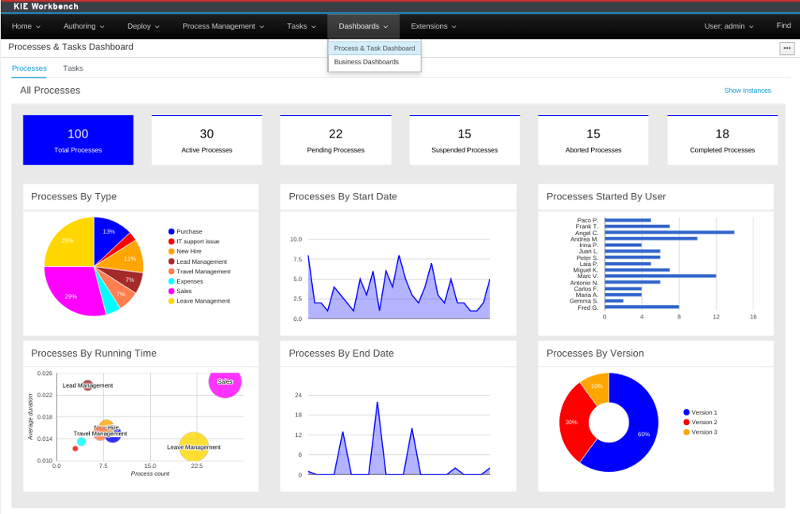

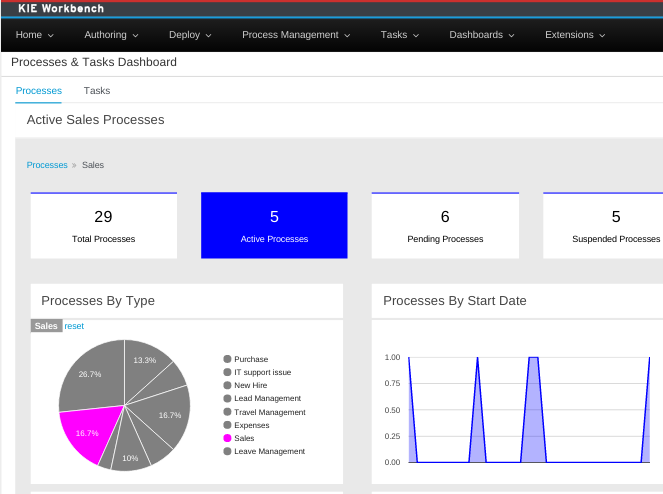

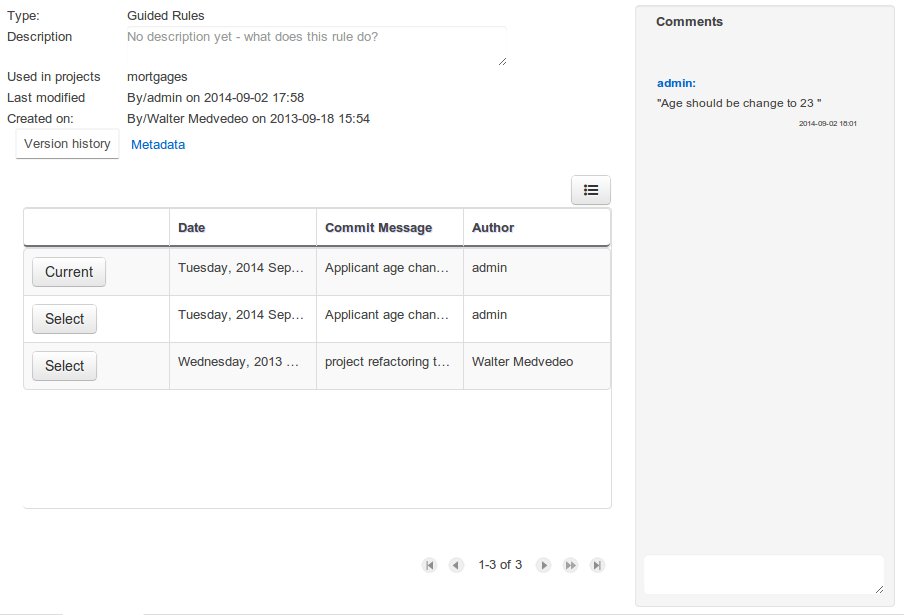

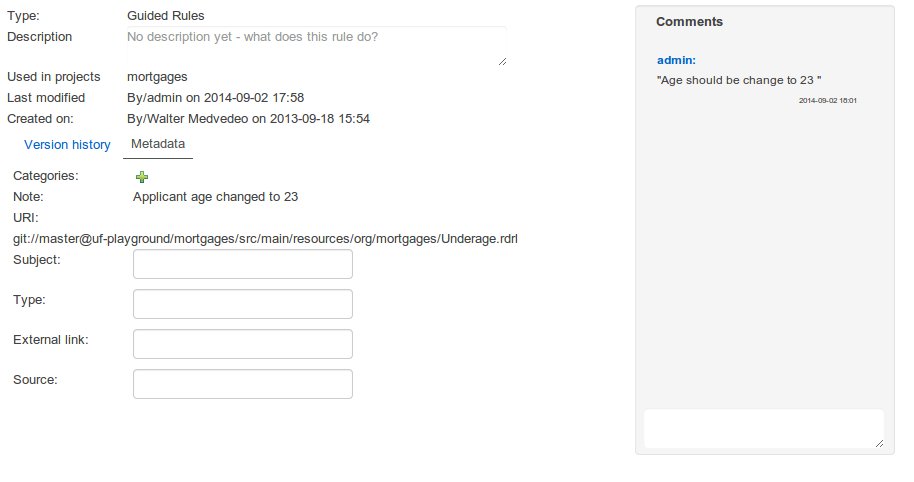

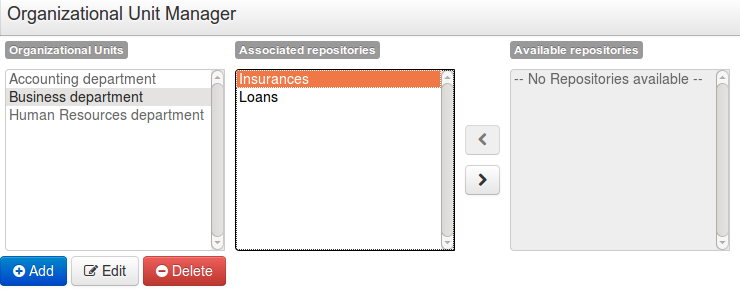

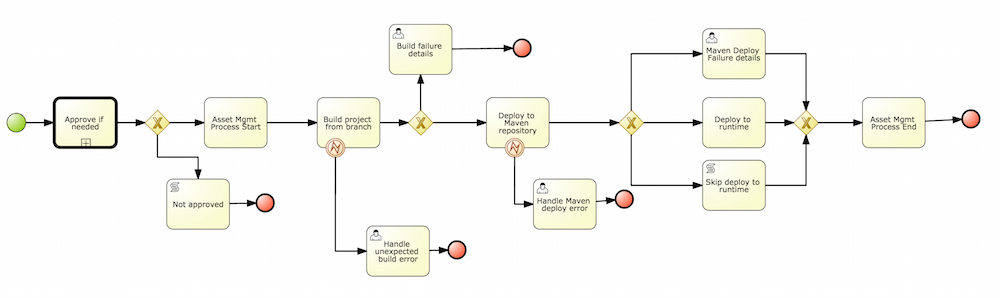

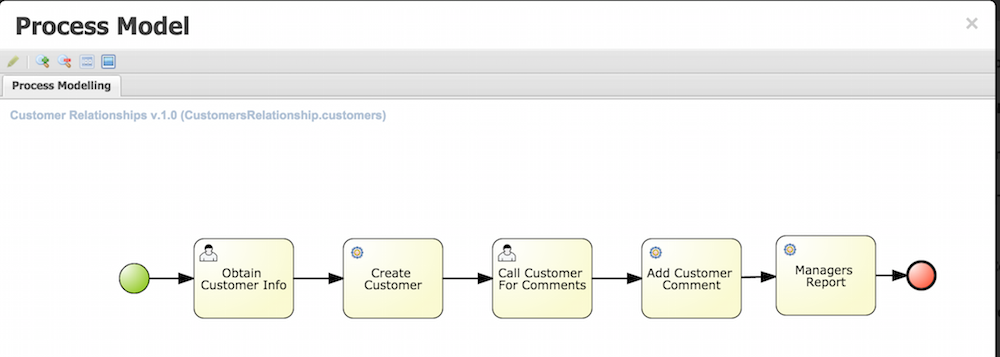

The workbench supports the entire life cycle of your business processes: authoring, deployment, process management, tasks and dashboards.

- The project authoring perspective allows you to look at existing repositories, where each

project can contain business processes (but also business rules, data models, forms, etc.). By default,

the workbench will download two sample playground repositories, containing examples to look at.

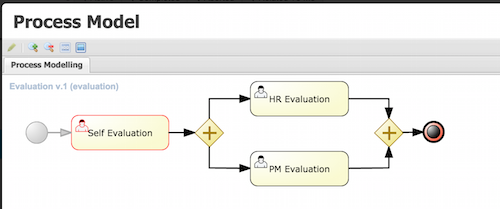

- In this screencast, the Evaluation project inside the jbpm-playground repository is used.

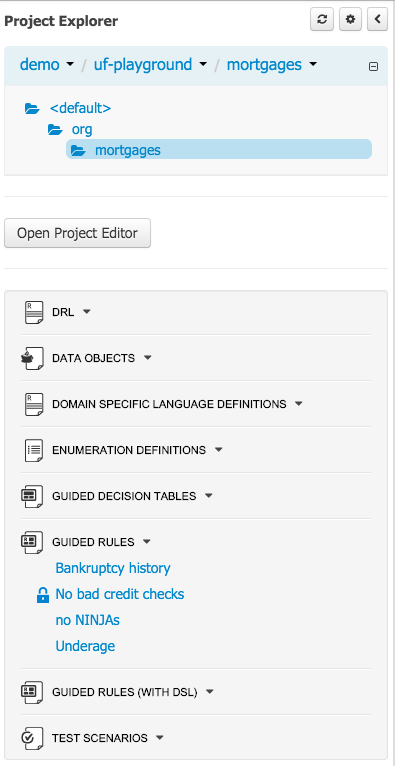

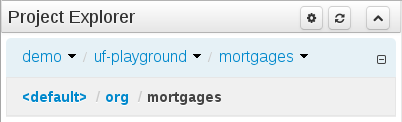

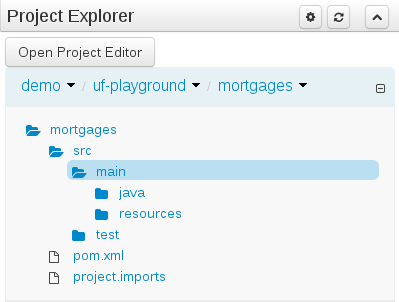

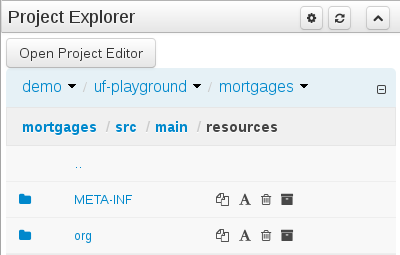

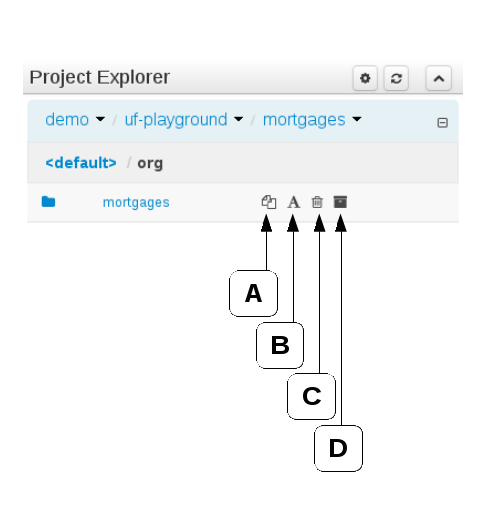

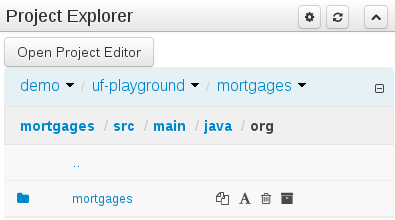

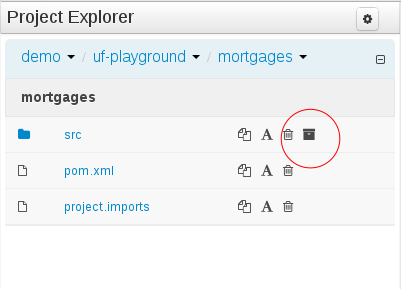

- The project explorer shows all available artefacts:

- evaluation: business process describing the evaluation process as a sequence of tasks

- evaluation-taskform: process form to start the evaluation process

- PerformanceEvaluation-taskform: task form to perform the evaluation tasks

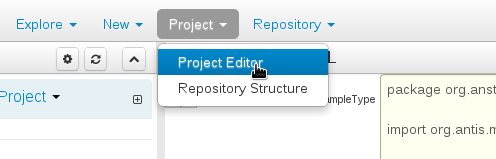

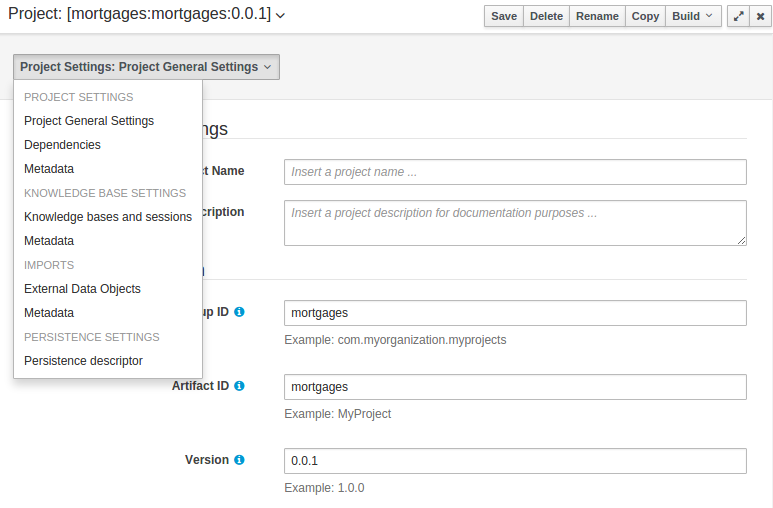

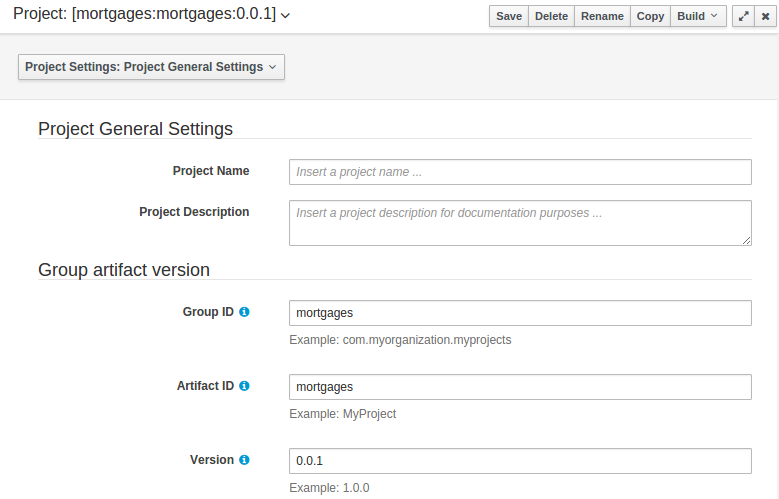

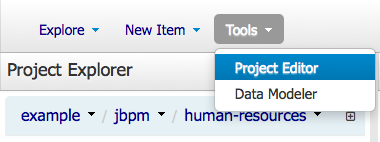

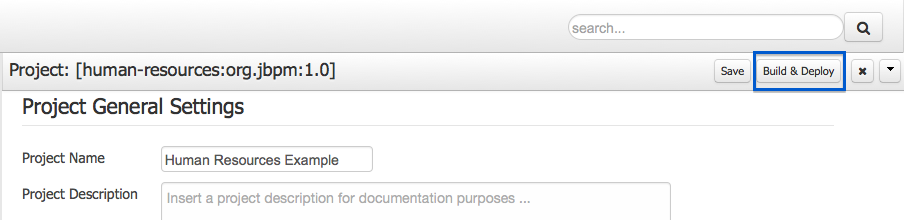

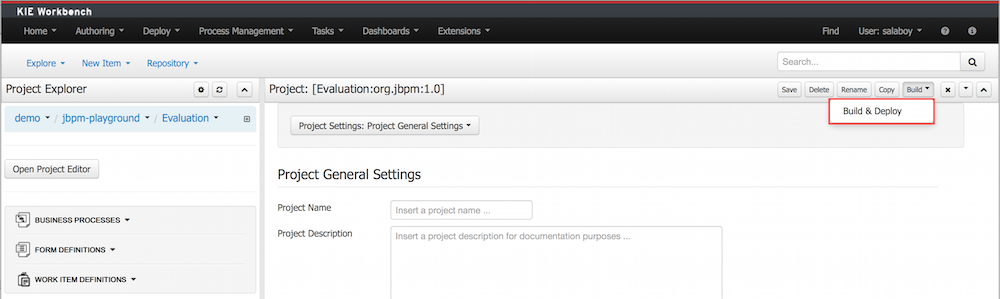

- To make a process available for execution, you need to successfully build and deploy it first. To do so, open up the Project Editor (from the Tools menu) and click Build & Deploy.

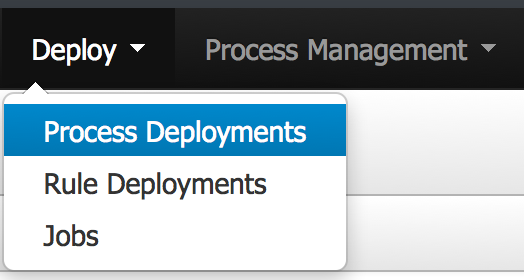

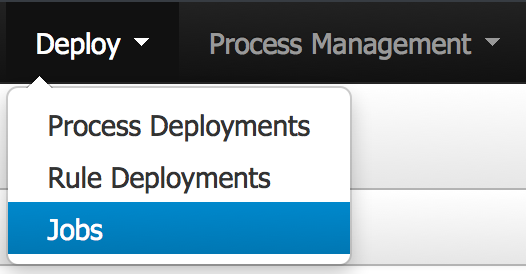

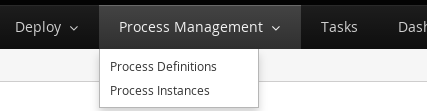

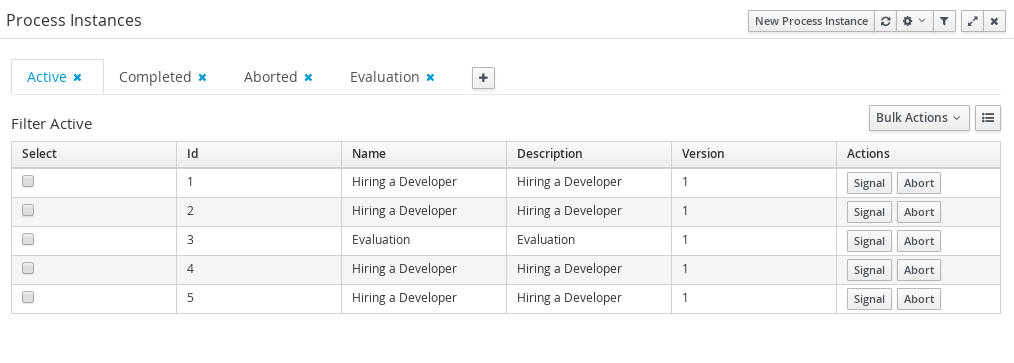

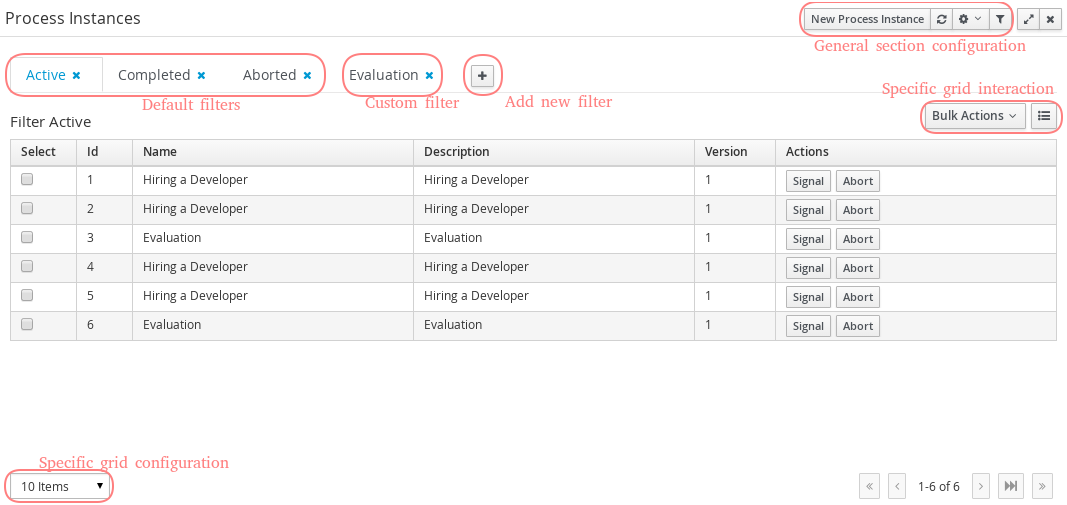

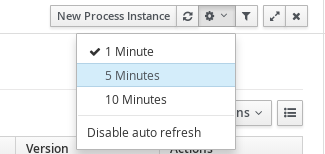

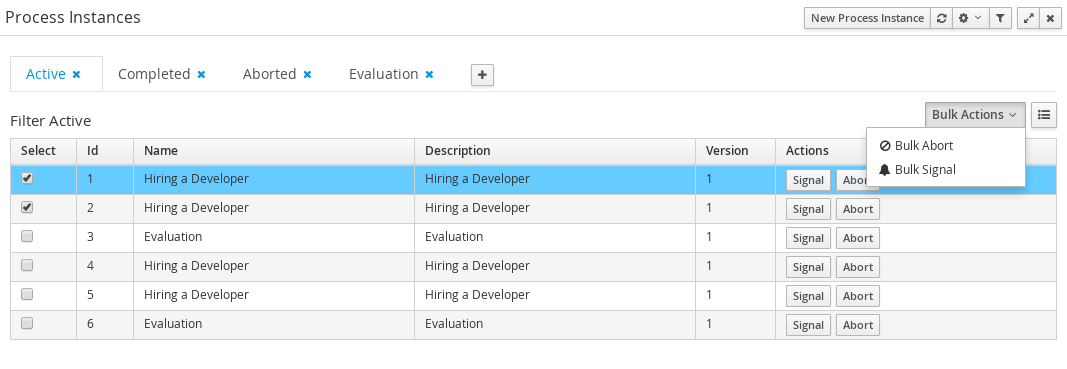

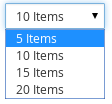

- To manage your process definitions and instances, click on the "Process Management" menu option

at the top menu bar an select one of available options depending on you interest:

- Process Definitions - lists all available process definitions

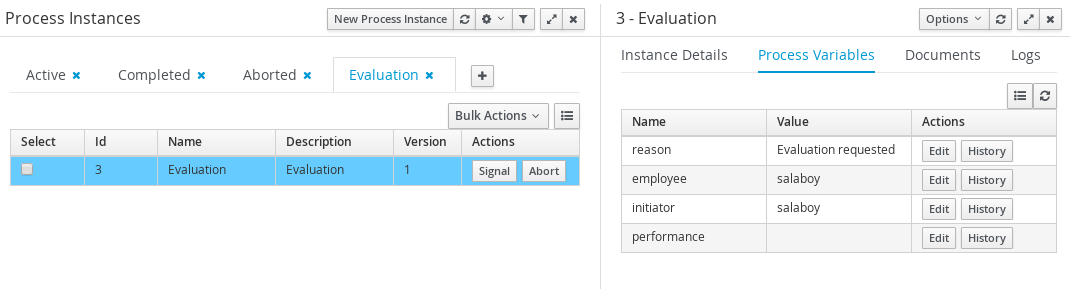

- Process Instances - lists all active process instances (allows to show completed, aborted as well by changing filter criteria)

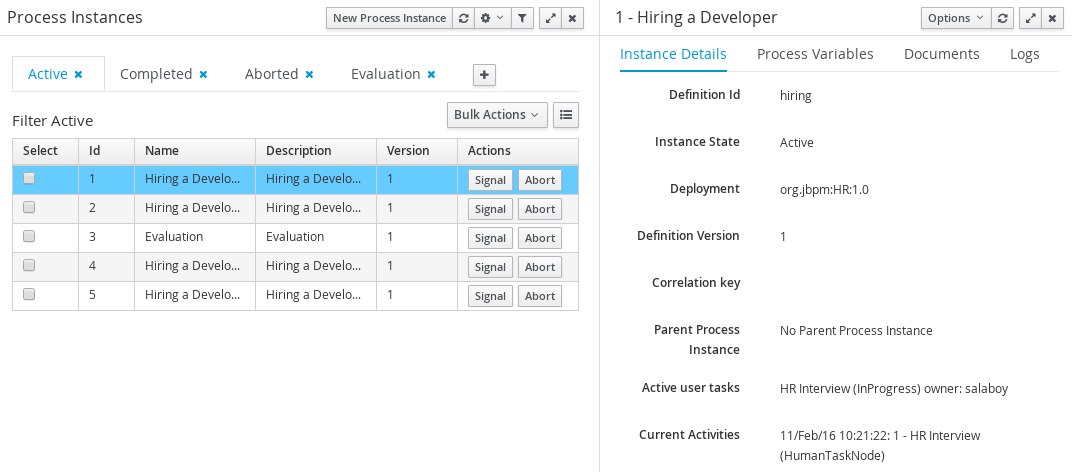

- Process definitions panel allow you to start a new process instance by clicking on the "Play"

button. The process form (as defined in the project) will be shown, where you need to fill in the

necessary information to start the process. In this case, you need to fill the user you want to start

an evaluation for (in this case use "krisv") and a reason for the request, after which you can complete

the form. Some details about the process instance that was just started will be shown in the process

instance details panel. From there you can access additional details:

The process instance that you just started is first requiring a self-evaluation of the user and is waiting until the user has completed this task.

- Process model - to visualize current state of the process

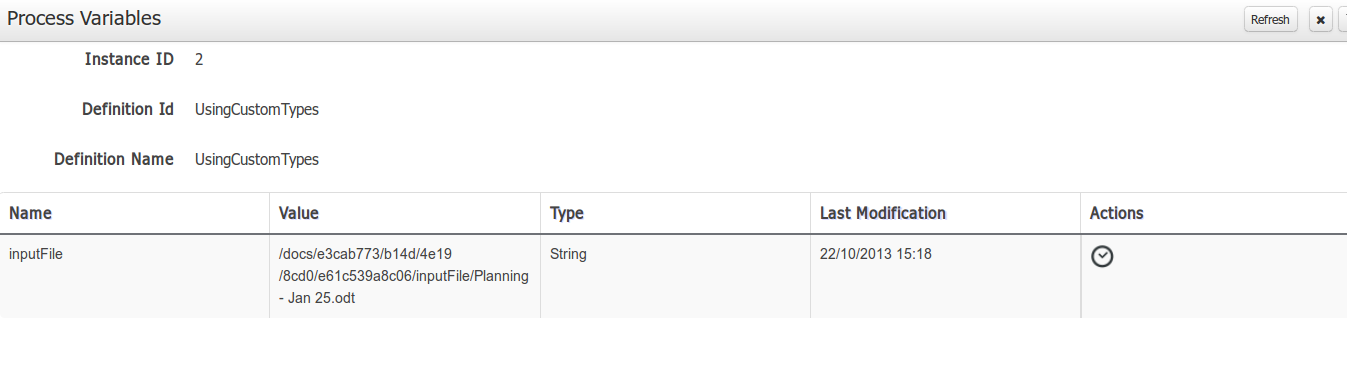

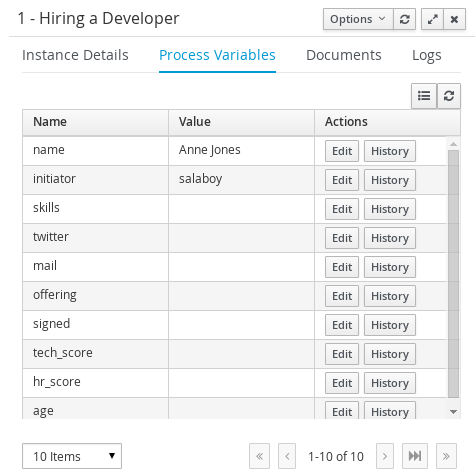

- Process variables - to see current values of process variables

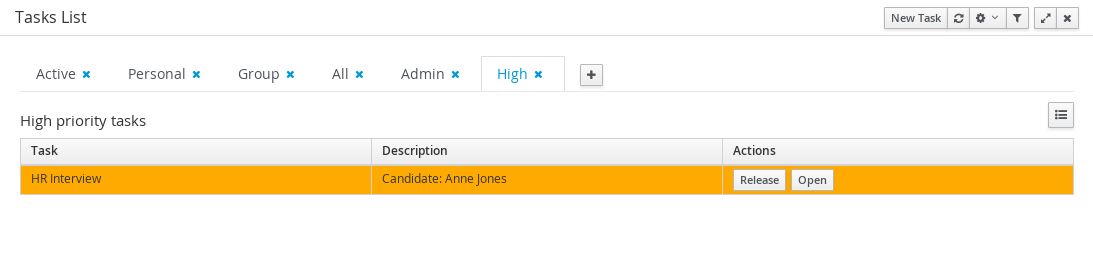

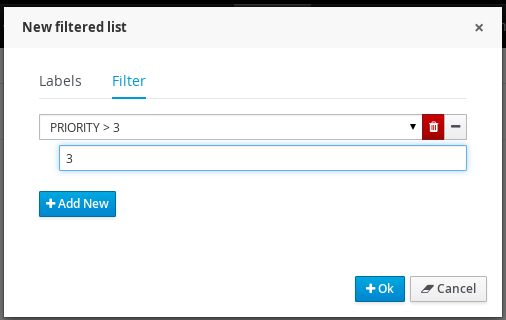

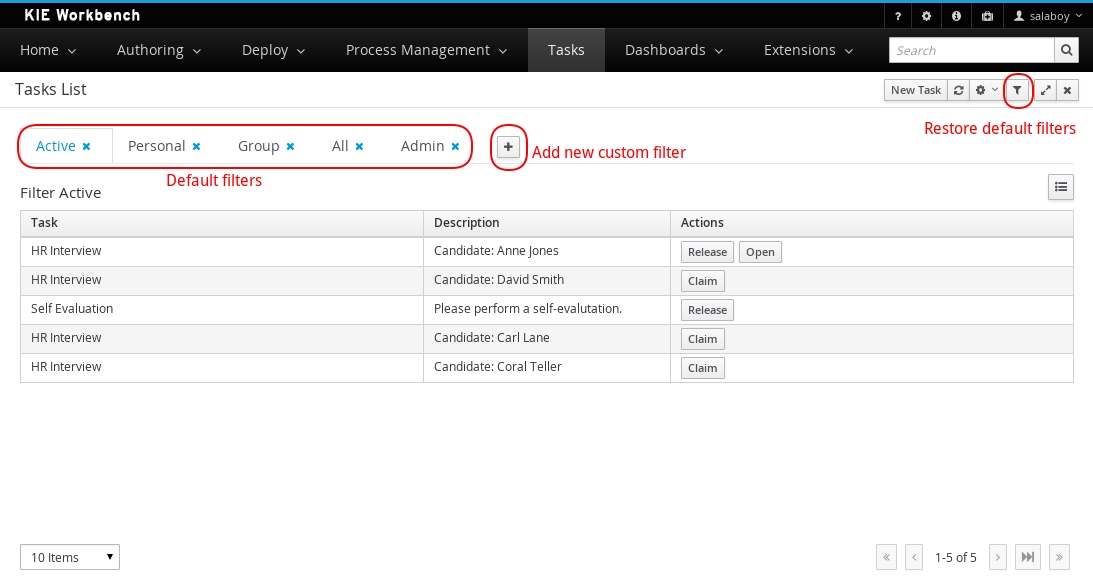

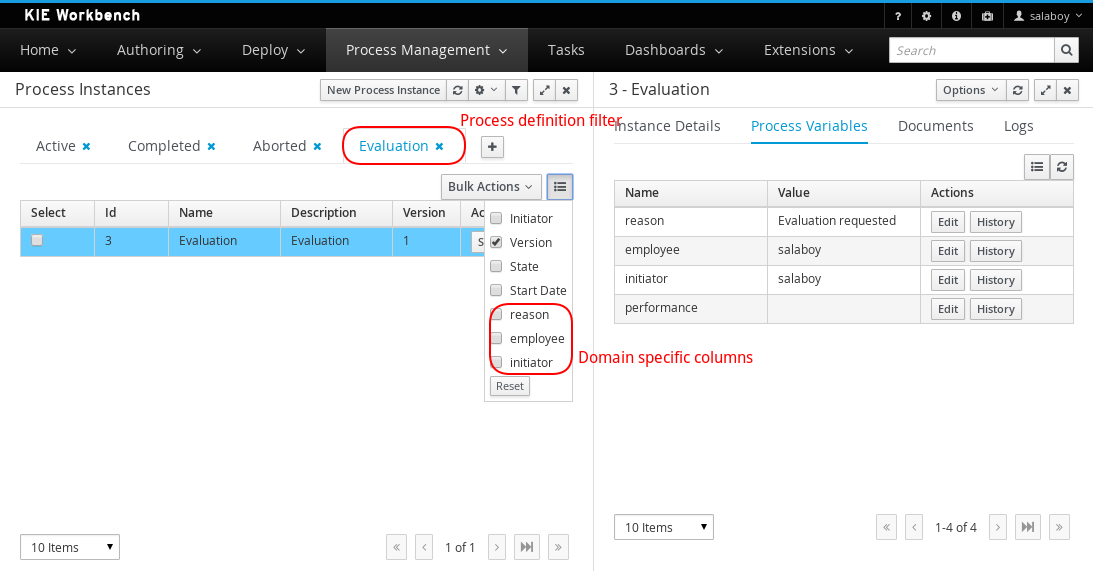

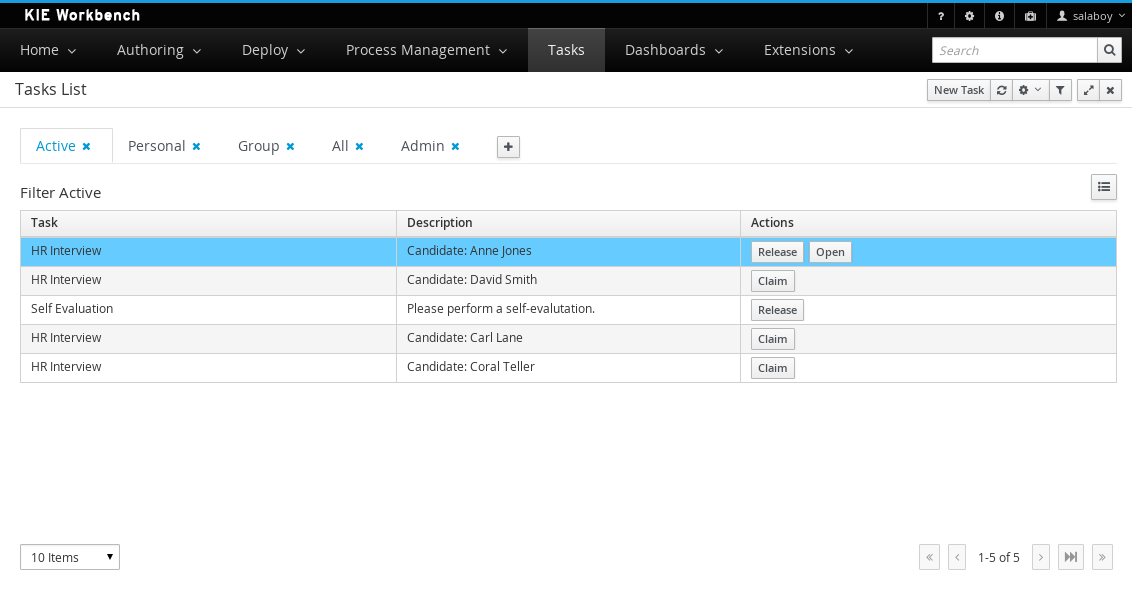

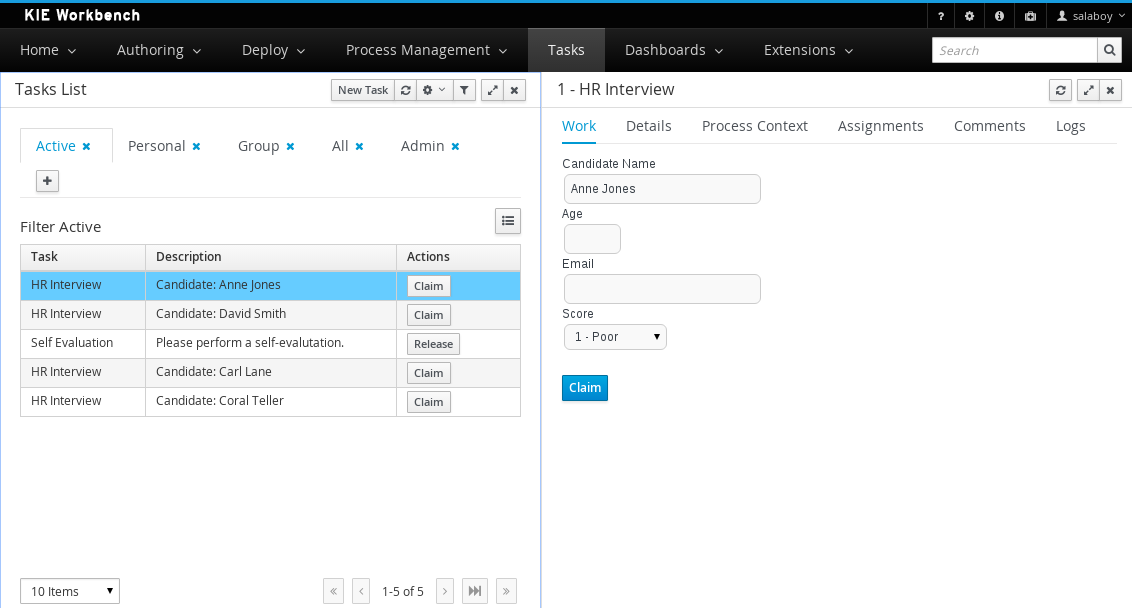

- To see the tasks that have been assigned to you, choose the "Tasks" menu option on the top bar and select "Task List" (you may need to click refresh to update your task view). The personal tasks table should show a "Performance Evaluation" task reserved for you. After starting the task, you can complete the task, which will open up the task form related to this task. You can fill in the necessary data and then complete the form and close the window. After completing the task, you could check the "Process Instances" once more to check the progress of your process instance. You should be able to see that the process is now waiting for your HR manager and project manager to also perform an evaluation. You could log in as "john" / "john" and "mary" / "mary" to complete these tasks.

- After starting and/or completing a few process instances and human tasks, you can generate a report of what has happened so far. Under "Dashboards", select "Process & Task Dashboard". This is a set of see predefined charts that allow users to spot what is going on in the system. Charts can be fully customized as well, as explained in the Business Activity Monitoring chapter.

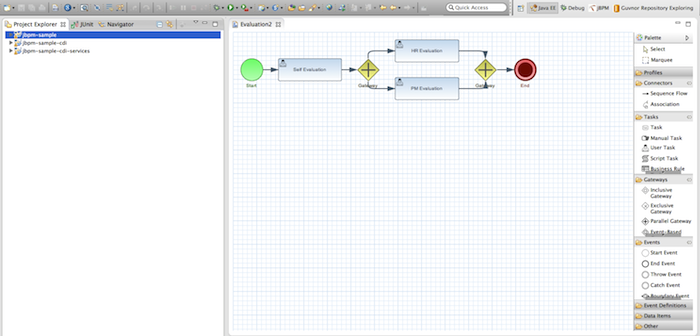

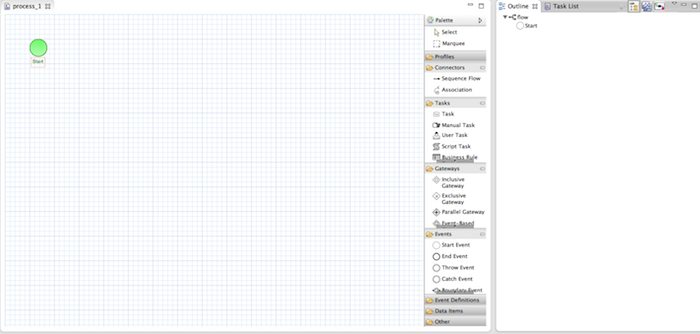

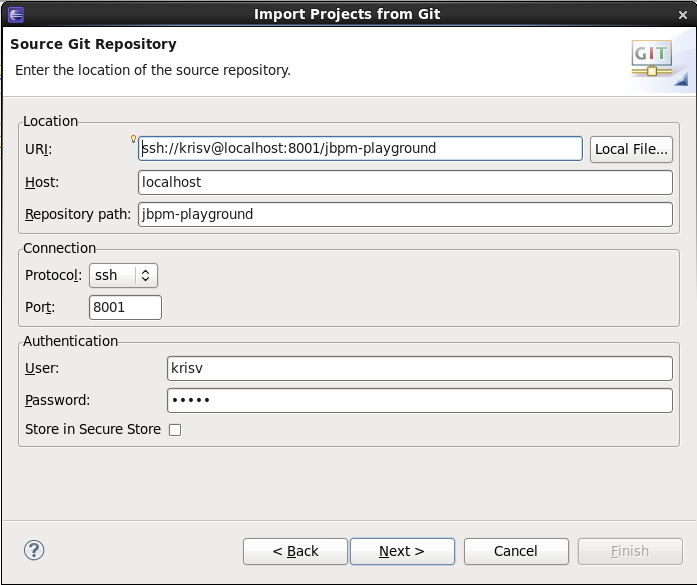

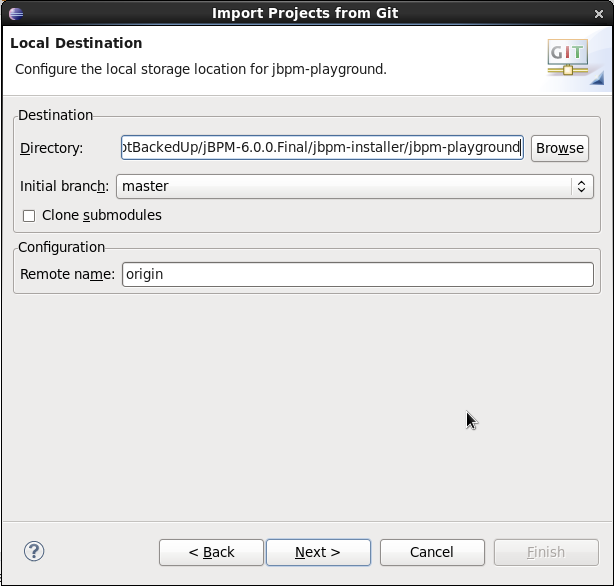

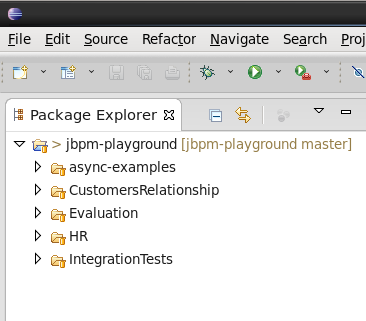

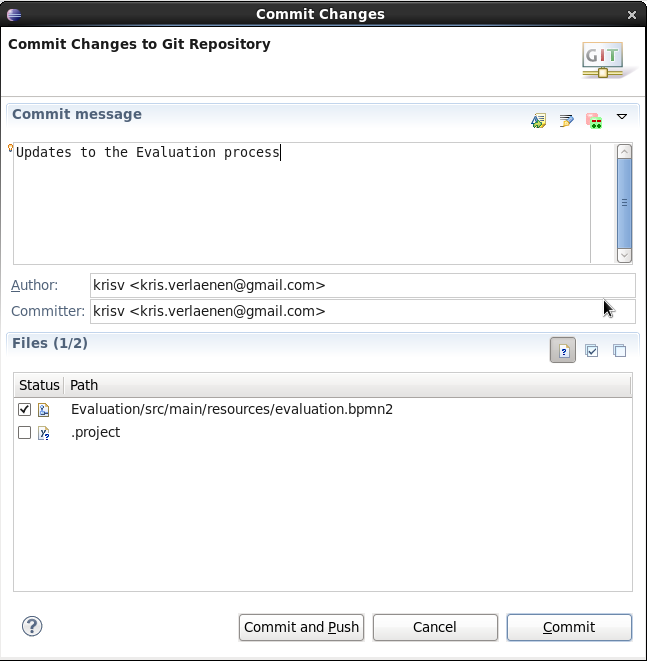

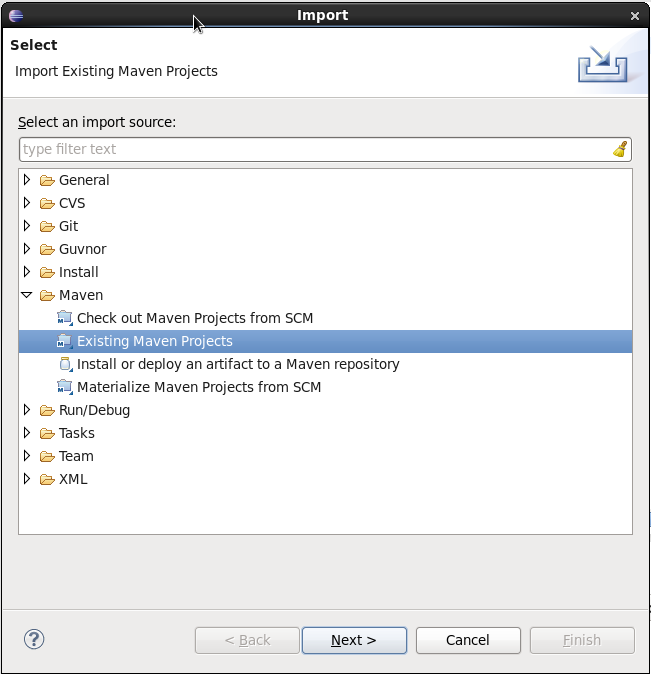

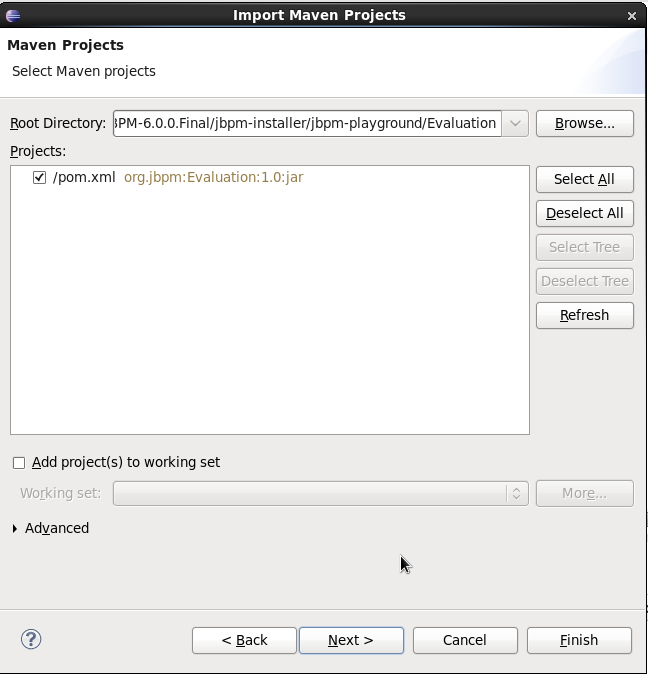

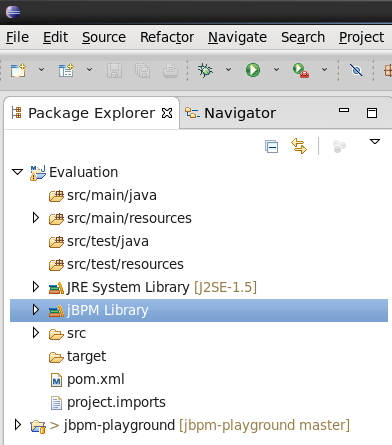

The following screencast gives an overview of how to use the Eclipse tooling. It shows you:

- How to import and execute the evaluation sample project

- Import the evaluation project (included in the jbpm-installer)

- Open the Evaluation.bpmn process

- Open the com.sample.ProcessTest Java class

- Execute the ProcessTest class to run the process

- How to create a new jBPM project (including sample process and JUnit test)

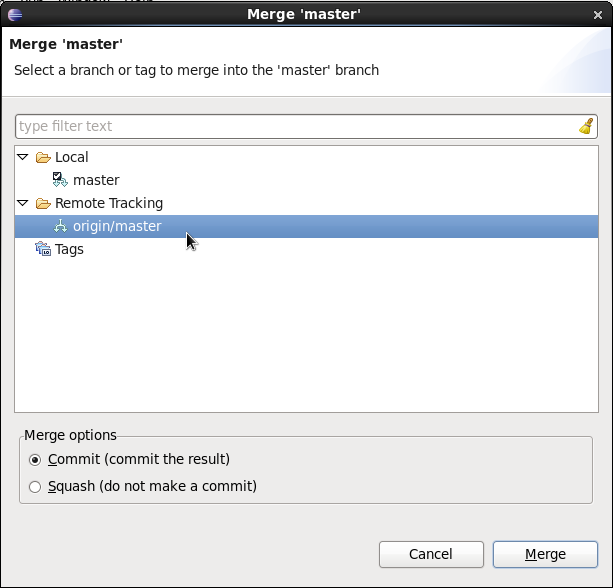

Figure 3.2.

You can import the evaluation project - a sample included in the jbpm-installer - by selecting "File -> Import ...", select "Existing Projects into Workspace" and browse to the jbpm-installer/sample/evaluation folder and click "Finish". You can open up the evaluation process and the ProcessTest class. To execute the class, right-click on it and select "Run as ... - Java Application". The console should show how the process was started and how the different actors in the process completed the tasks assigned to them, to complete the process instance.

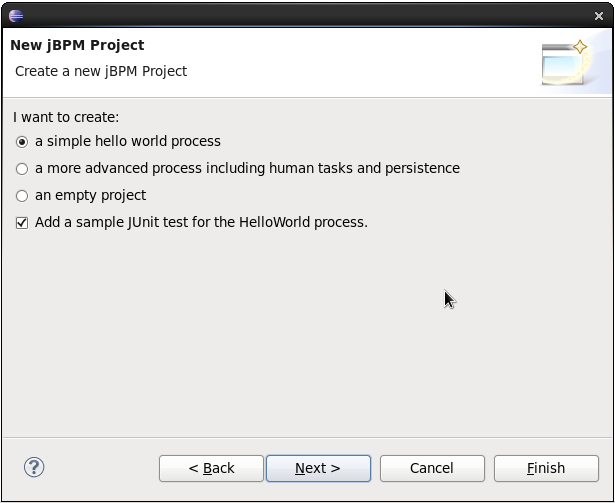

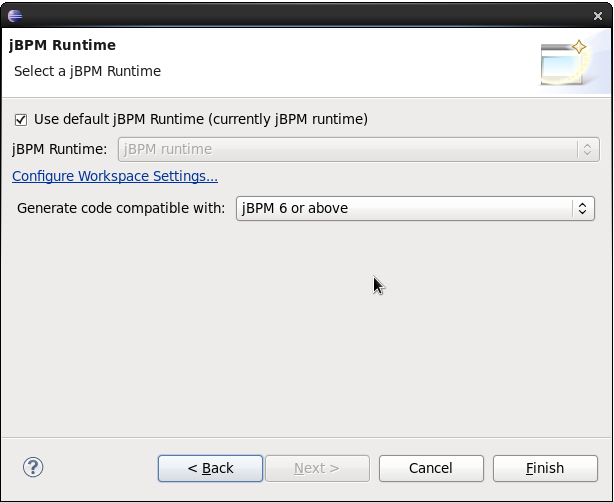

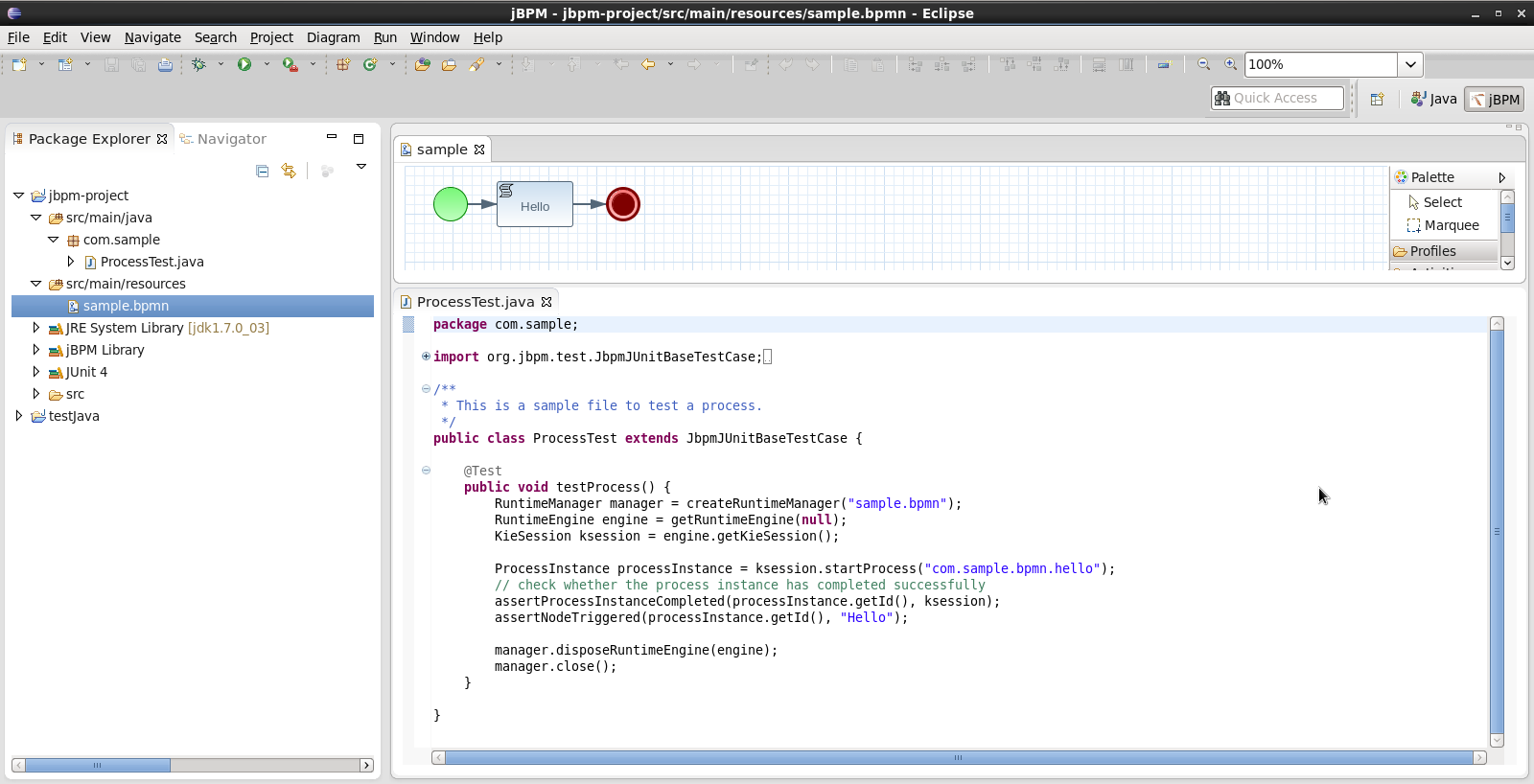

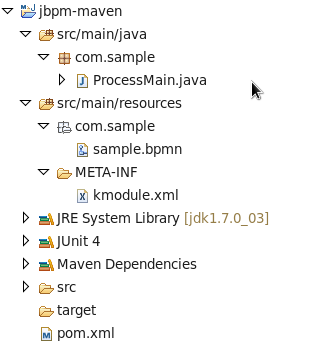

You could also create a new project using the jBPM project wizard. The sample projects contain a process and an associated Java file to start the process. Select "File - New ... - Project ..." and under the "jBPM" category, select "jBPM project" and click "Next". Give the project a name and click "Next". You can choose from a simple HelloWorld example or a slightly more advanced example using persistence and human tasks. If you select the latter and click Finish, you should see a new project containing a "sample.bpmn" process and a "com.sample.ProcessTest" JUnit test class. You can open the BPMN2 process by double-clicking it. To execute the process, right-click on ProcessTest.java and select "Run As - Java Application".

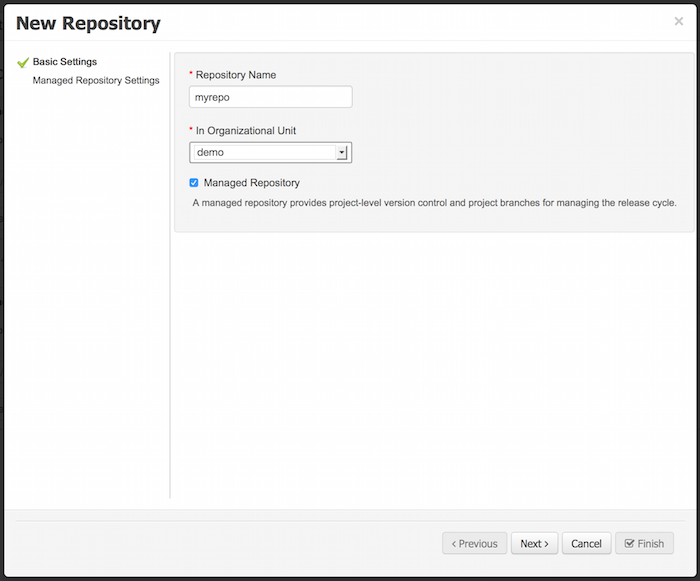

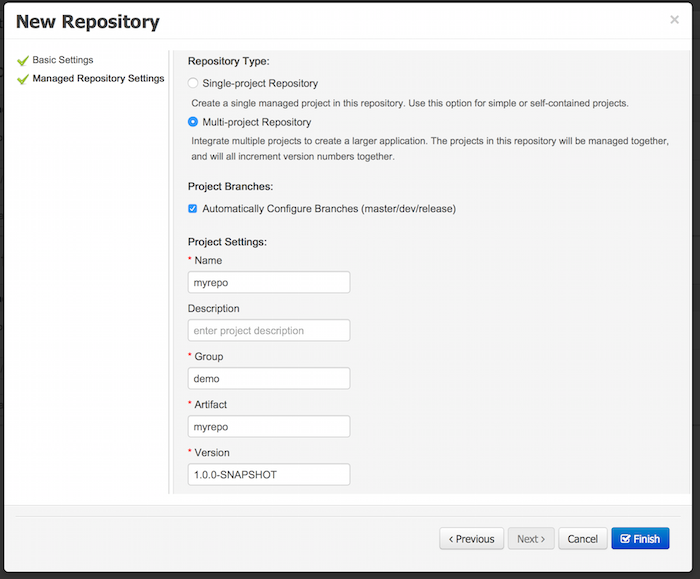

The workbench by default brings two sample playground repositories (by cloning the jbpm-playground repository hosted on GitHub). In cases where this is not wanted (access to Internet might not be available or there might be a need to start with a completely clean installation of the workbench) this default behavior can be turned off. To do so, change the following system property in the start.jboss target to false in the build.xml:

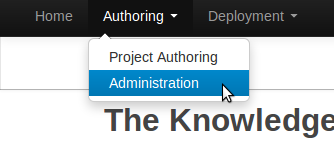

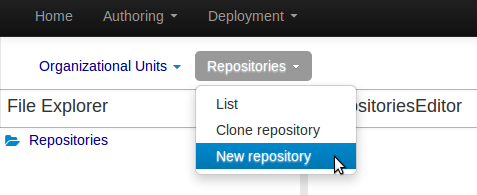

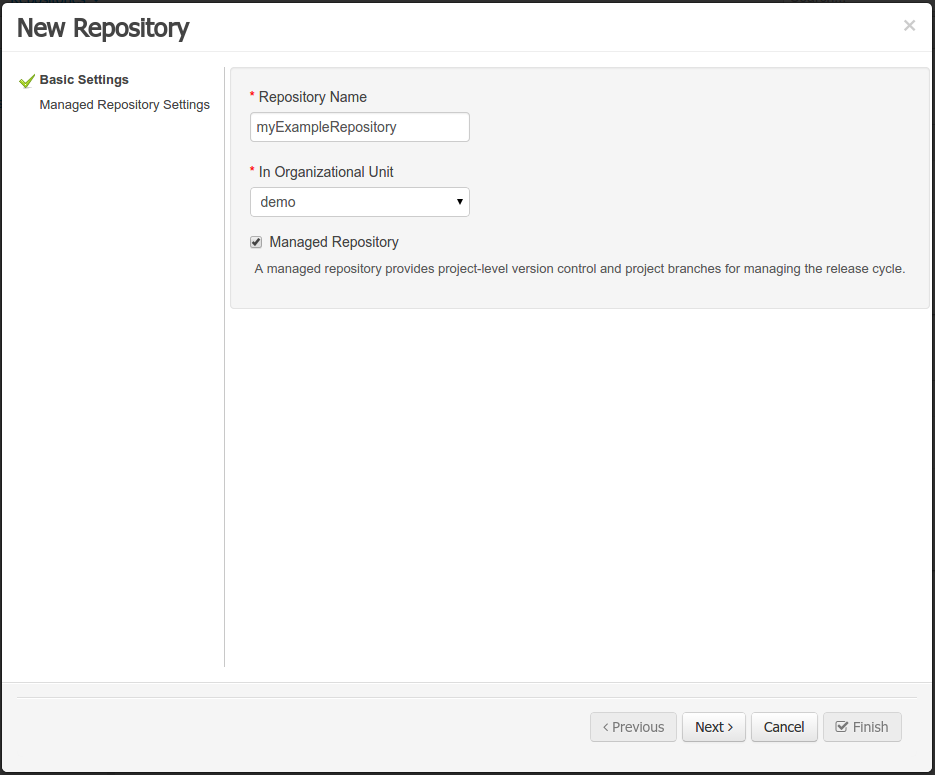

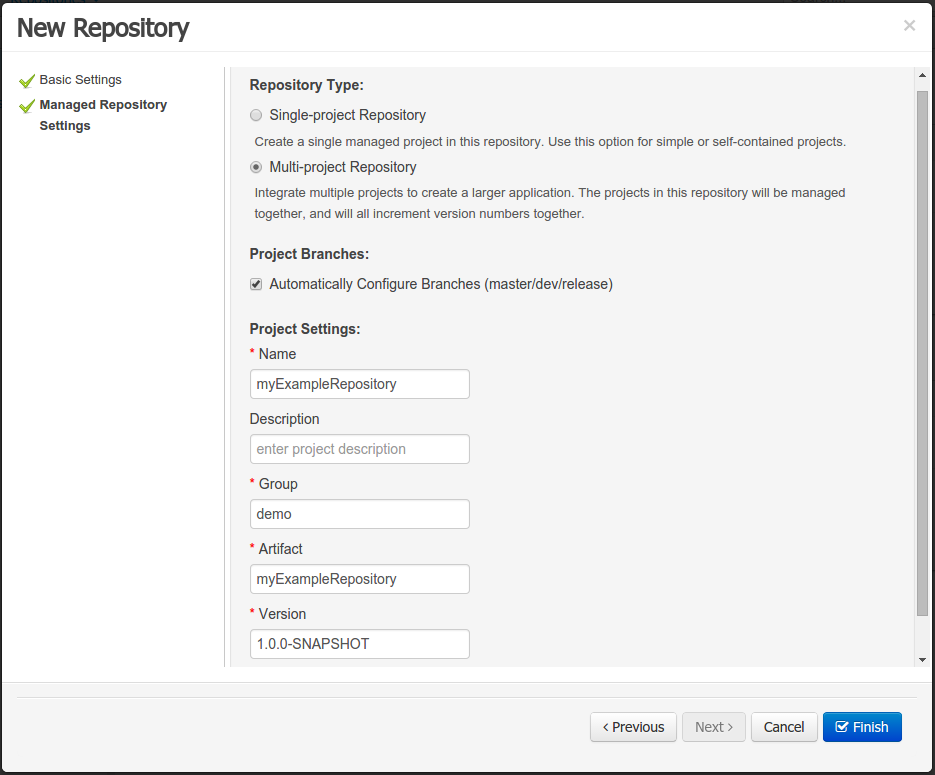

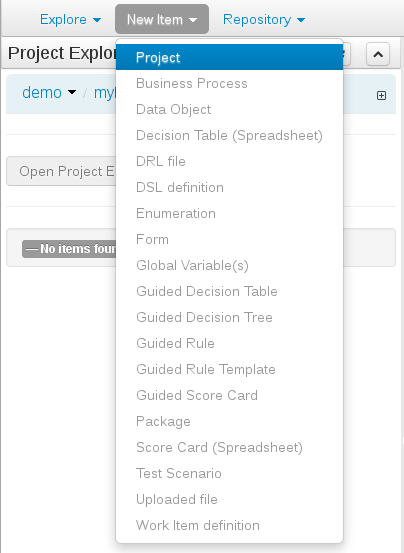

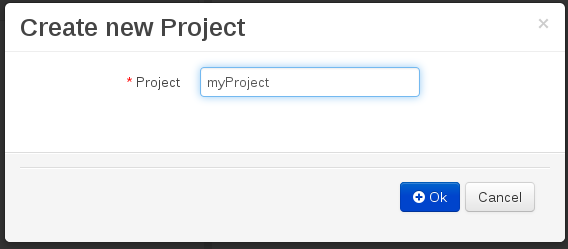

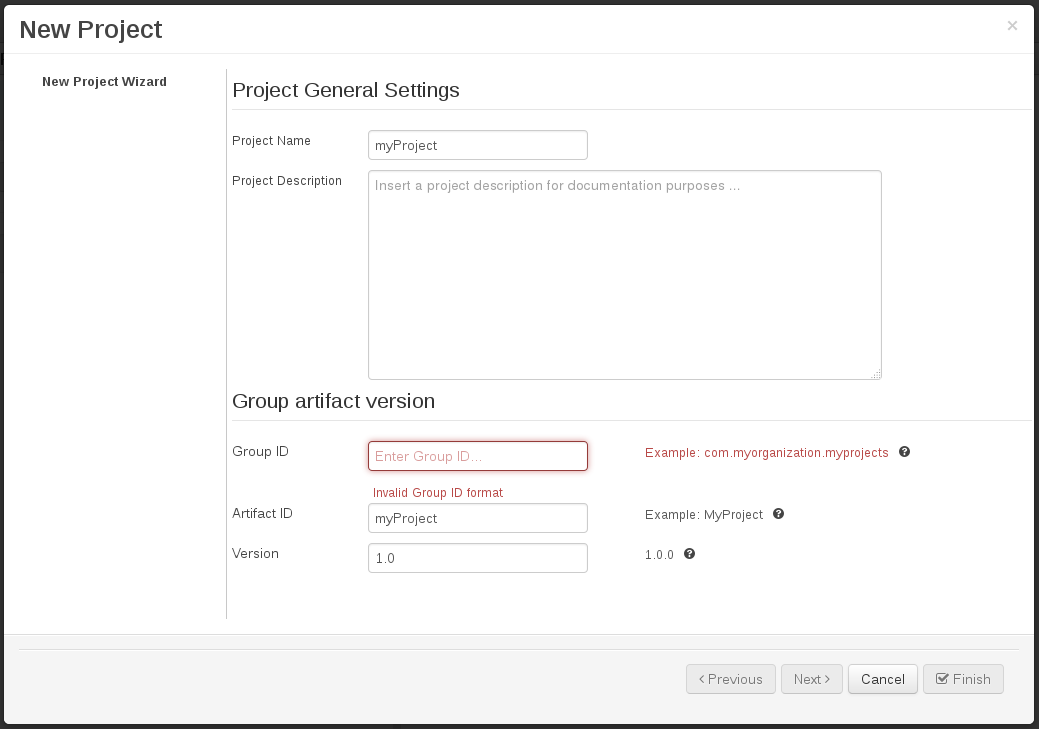

-Dorg.kie.demo=falseNote that this will create a completely empty version of the workbench. To be able to start modeling processes, the following elements need to be created first:

- Organizational unit

- Repository (new or clone existing one)

- Project

The workbench web application is using the "default" security domain for authenticating and authorizing users (as specified in the WEB-INF/jboss-web.xml inside the WARs).

The application server is configured by default to use properties files for specifying users. Note that this is for demo purposes only (as passwords and roles are stored in simple property files). The security domain is configured in the standalone.xml configuration file as follows:

<security-domain name="other" cache-type="default">

<authentication>

<login-module code="UsersRoles" flag="required">

<module-option name="usersProperties" value="${jboss.server.config.dir}/users.properties"/>

<module-option name="rolesProperties" value="${jboss.server.config.dir}/roles.properties"/>

</login-module>

</authentication>

</security-domain>By default, these configuration files contain the following users:

Table 3.1. Default users

| Name | Password | Workbench roles | Task roles |

|---|---|---|---|

| admin | admin | admin,analyst | |

| krisv | krisv | admin,analyst | |

| john | john | analyst | Accounting,PM |

| mary | mary | analyst | HR |

| sales-rep | sales-rep | analyst | sales |

| jack | jack | analyst | IT |

| katy | katy | analyst | HR |

| salaboy | salaboy | admin,analyst | IT,HR,Accounting |

Authentication can be customized by editing the authentication and configuration files in the jbpm-installer/auth folder and/or by changing the standalone-*.xml files in the jbpm-installer folder. Note that you need to rerun the installer to make sure the modified files are copied and picked correctly.

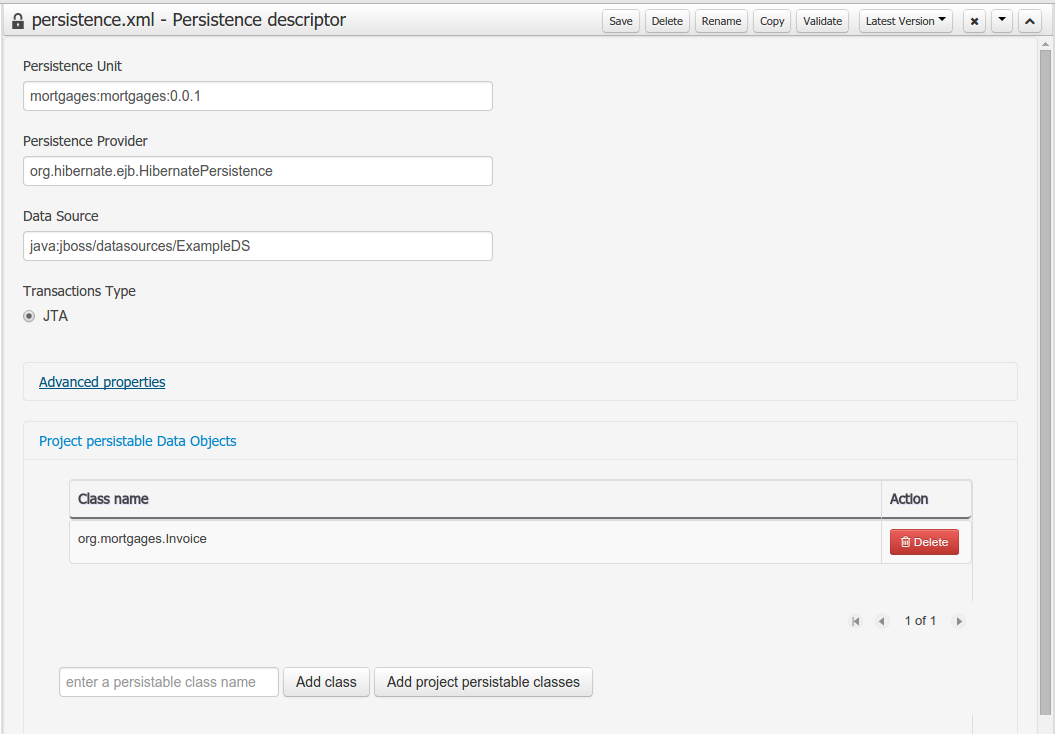

jBPM uses the Java Persistence API specification (v2) to allow users to configure whatever

datasource they want to use to persist runtime data. As a result, the instructions below describe

how you should configure a datasource when using JPA on JBoss application server (e.g. AS7, EAP6

or Wildfly8) using a persistence.xml file and configuring your datasource and

driver in your application server's standalone.xml, similar to how you would

configure any other application using JPA on JBoss application server. The installer automates some

of this (like copying the right files to the right location after installation).

By default, the jbpm-installer uses an H2 database for persisting runtime data. In this section we will:

modify the persistence settings for runtime persistence of process instance state

test the startup with our new settings!

You will need a local instance of a database, in this case we will use MySQL.

In the MySQL database used in this quickstart, create a single user:

- user/schema "jbpm" with password "jbpm" (which will be used to persist all entities)

If you end up using different names for your user/schemas, please make a note of where we insert "jbpm" in the configuration files.

If you want to try this quickstart with another database, a section at the end of this quickstart describes what you may need to modify.

The following files define the persistence settings for the jbpm-installer demo:

- jbpm-installer/db/jbpm-persistence-JPA2.xml

- Application server configuration

- standalone-*.xml

Tip

There are multiple standalone.xml files available (depending on whether you are using JBoss AS7, JBoss EAP6 or Wildfly8 and whether you are running the normal or full profile). The full profile is required to use the JMS component for remote integration, so will be used by default by the installer. Best practice is to update all standalone.xml files to have consistent setup but most important is to have standalone-full-wildfly-8.1.0.Final.xml properly configured as this is used by default by the installer.

Do the following:

- Disable H2 default database and enable MySQL database in build.properties

You might want to update the db driver jar name and download url to whatever version of the jar matches your installation.# default is H2 # H2.version=1.3.168 # db.name=h2 # db.driver.jar.name=${db.name}.jar # db.driver.download.url=http://repo1.maven.org/maven2/com/h2database/h2/${H2.version}/h2-${H2.version}.jar #mysql db.name=mysql db.driver.module.prefix=com/mysql db.driver.jar.name=mysql-connector-java-5.1.18.jar db.driver.download.url=https://repository.jboss.org/nexus/service/local/repositories/central/content/mysql/mysql-connector-java/5.1.18/mysql-connector-java-5.1.18.jar db/jbpm-persistence-JPA2.xml:This is the JPA persistence file that defines the persistence settings used by jBPM for both the process engine information, the logging/BAM information and task service.

In this file, you will have to change the name of the hibernate dialect used for your database.

The original line is:

<property name="hibernate.dialect" value="org.hibernate.dialect.H2Dialect"/>In the case of a MySQL database, you need to change it to:

<property name="hibernate.dialect" value="org.hibernate.dialect.MySQLDialect"/>For those of you who decided to use another database, a list of the available hibernate dialect classes can be found here.

standalone-full-wildfly-8.1.0.Final.xml:Standalone.xmlandstandalone-full.xmlare the configuration for the standalone JBoss application server. When the installer installs the demo, it copies these files to thestandalone/configurationdirectory in the JBoss server directory. Since the installer uses Wildfly8 by default as application server, you probably need to changestandalone-full-wildfly-8.1.0.Final.xml.We need to change the datasource configuration in

standalone-full.xmlso that the jBPM process engine can use our MySQL database. The original file contains (something very similar to) the following lines:<datasource jta="true" jndi-name="java:jboss/datasources/jbpmDS" pool-name="H2DS" enabled="true" use-java-context="true" use-ccm="true"> <connection-url>jdbc:h2:tcp://localhost/~/jbpm-db;MVCC=TRUE</connection-url> <driver>h2</driver> <security> <user-name>sa</user-name> </security> </datasource> <drivers> <driver name="h2" module="com.h2database.h2"> <xa-datasource-class>org.h2.jdbcx.JdbcDataSource</xa-datasource-class> </driver> </drivers>Change the lines to the following:

<datasource jta="true" jndi-name="java:jboss/datasources/jbpmDS" pool-name="MySQLDS" enabled="true" use-java-context="true" use-ccm="true"> <connection-url>jdbc:mysql://localhost:3306/jbpm</connection-url> <driver>mysql</driver> <security> <user-name>jbpm</user-name> <password>jbpm</password> </security> </datasource>and add an additional driver configuration:

<driver name="mysql" module="com.mysql"> <xa-datasource-class>com.mysql.jdbc.jdbc2.optional.MysqlXADataSource</xa-datasource-class> </driver>To install driver jars in JBoss application server (Wildfly8, EAP6, etc.), it is recommended to install the driver jar as a module. The installer already takes care of this mostly: it will copy the driver jar (you specified in the

build.properties) to the right folder inside the modules directory of your server and put a matching module.xml next to it. For MySQL, this file is calleddb/mysql_module.xml. Open this file and make sure that the file name of the driver jar listed there is identical the driver jar name you specified in thebuild.properties(including the version). Note that, even if you simply uncommented the default MySQL configuration, you will still need to add the right version here.Starting the demoWe've modified all the necessary files at this point. Now would be a good time to make sure your database is started up as well!

The installer script copies this file into the jbpm-console WAR before the WAR is installed on the server. If you have already run the installer, it is recommended to stop the installer and clean it first using

ant stop.demoand

ant clean.demobefore continuing.

Run

ant install.demoto (re)install the wars and copy the necessary configuration files. Once you've done that, (re)start the demo using

ant start.demoProblems?If this isn't working for you, please try the following:

- Please double check the files you've modified: I wrote this, but still made mistakes when changing files!

- Please make sure that you don't secretly have another (unmodified) instance of JBoss AS running.

- If neither of those work (and you're using MySQL), please do then let us know.

If you decide to use a different database with this demo, you need to remember the following when going through the steps above:

- Configuring the jBPM datasource in

standalone.xml:- After locating the

java:jboss/datasources/jbpmDSdatasource, you need to provide the following properties specific to your database:For example:- Change url of your database

- Change the user-name and password

- Change the name of the driver (which you'll create next)

<datasource jta="true" jndi-name="java:jboss/datasources/jbpmDS" pool-name="PostgreSQLDS" enabled="true" use-java-context="true" use-ccm="true"> <connection-url>jdbc:postgresql://localhost:5432/jbpm</connection-url> <driver>postgresql</driver> <security> <user-name>jbpm</user-name> <password>jbpm</password> </security> </datasource> - Add an additional driver configuration:

For example:

- Change the name of the driver to match the name you specified when configuring the datasource in the previous step

- Change the module of the driver: the database driver jar should

be installed as a module (see below) and here you should reference the unique

name of the module. Since the installer can take care of automatically generating

this module for you (see below), this should match the

db.driver.module.prefixproperty inbuild.properties(where forward slashes are replaced by a point). In the example below, I used “org/postgresql” asdb.driver.module.prefixwhich means that I should then useorg.postgresqlas module name for the driver. - Fill in the correct name of the XA datasource class to use

<driver name="postgresql" module="org.postgresql"> <xa-datasource-class>org.postgresql.xa.PGXADataSource</xa-datasource-class> </driver>

- After locating the

- You need to change the dialect in

persistence.xmlto the dialect for your database, for example:<property name="hibernate.dialect" value="org.hibernate.dialect.PostgreSQLDialect"/> - In order to make sure your driver will be correctly installed in

the JBoss application server, there are typically multiple options, like install as a module or

as a deployment. It is recommended to install the driver as a module for AS7, EAP6 and Wildfly8.

For example, for AS7 both ways are explained

here.

If you choose to install driver as JBoss module (recommended), please do the following:

- Install the driver JAR as a module, which is what the install script does.

-

Otherwise, you can modify and install the downloaded JAR as a deployment.

In this case you will have to copy the JAR yourself to the

standalone/deploymentsdirectory.

- In

build.properties, disable the default H2 driver properties# default is H2 # H2.version=1.3.168 # db.name=h2 # db.driver.jar.name=h2-${H2.version}.jar # db.driver.download.url=http://repo1.maven.org/maven2/com/h2database/h2/${H2.version}/h2-${H2.version}.jar - Uncomment one of the other example configs (mysql or postgresql) or create your own:

#postgresql db.name=postgresql db.driver.module.prefix=org/postgresql db.driver.jar.name=postgresql-9.1-902.jdbc4.jar db.driver.download.url=https://repository.jboss.org/nexus/content/repositories/thirdparty-uploads/postgresql/postgresql/9.1-902.jdbc4/postgresql-9.1-902.jdbc4.jar- Change the

db.nameproperty inbuild.propertiesto a name for your database. - Change the

db.driver.module.prefixproperty to a name for the module of your driver. Note that this should match the module property when configuring the driver instandalone.xml(where forward slashes in the prefix here are replaced by a point). In the example above, I used “org/postgresql” asdb.driver.module.prefixwhich means that I should then useorg.postgresqlas module name for the driver. - Change the

db.driver.jar.nameproperty to the name of the jar that contains your database driver. - Change the

db.driver.download.urlproperty to where the driver jar can be downloaded. Alternatively, you could manually download the jar yourself, and place it in thedb/driversfolder, using the same name as you specified in thedb.driver.jar.nameproperty.

- Change the

- Lastly, you'll have to create the

db/${db.name}_module.xmlfile. As an example you can use db/mysql_module.xml, so just make a copy of it and:For example, the top of the file would look like:- Change the name of the module to match the driver module name above

- Change the name of the module resource path to the name of the

db.driver.jar.nameproperty.

<module xmlns="urn:jboss:module:1.0" name="org.postgresql"> <resources> <resource-root path="postgresql-9.1-902.jdbc4.jar"/> </resources>

By default the demo setup makes use of Hibernate auto DDL generation capabilities to build up the complete database schema, including all tables, sequences, etc. This might not always be welcomed (by your database administrator), and thus the installer provides DDL scripts for most popular databases.

Table 3.2. DDL scripts

| Database name | Location |

|---|---|

| db2 | jbpm-installer/db/ddl-scripts/db2 |

| derby | jbpm-installer/db/ddl-scripts/derby |

| h2 | jbpm-installer/db/ddl-scripts/h2 |

| hsqldb | jbpm-installer/db/ddl-scripts/hsqldb |

| mysql5 | jbpm-installer/db/ddl-scripts/mysql5 |

| mysqlinnodb | jbpm-installer/db/ddl-scripts/mysqlinnodb |

| oracle | jbpm-installer/db/ddl-scripts/oracle |

| postgresql | jbpm-installer/db/ddl-scripts/postgresql |

| sqlserver | jbpm-installer/db/ddl-scripts/sqlserver |

| sqlserver2008 | jbpm-installer/db/ddl-scripts/sqlserver2008 |

DDL scripts are provided for both jBPM and Quartz schemas although Quartz schema DDL script is only required when the timer service should be configured with Quartz database job store. See the section on timers for additional details.

This can be used to initially create the database schema, but it can also serve as the basis for any\ optimization that needs to be applied - such as indexes, etc.

jBPM installer ant script performs most of the work automatically and usually does not require additional attention but in case it does, here is a list of available targets that might be needed to perform some of the steps manually.

Table 3.3. jBPM installer available targets

| Target | Description |

|---|---|

| clean.db | cleans up database used by jBPM demo (applies only to H2 database) |

| clean.demo | cleans up entire installation so new installation can be performed |

| clean.demo.noeclipse | same as clean.demo but does not remove Eclipse |

| clean.eclipse | removes Eclipse and its workspace |

| clean.generated.ddl | removes DDL scripts generated if any |

| clean.jboss | removes application server with all its deployments |

| clean.jboss.repository | removes repository content for demo setup (guvnor Maven repo, niogit, etc) |

| download.dashboard | downloads jBPM dashboard component (BAM) |

| download.db.driver | downloads DB driver configured in build.properties |

| download.ddl.dependencies | downloads all dependencies required to run DDL script generation tool |

| download.droolsjbpm.eclipse | downloads Drools and jBPM Eclipse plugin |

| download.eclipse | downloads Eclipse distribution |

| download.jboss | downloads JBoss Application Server |

| download.jBPM.bin | downloads jBPM binary distribution (jBPM libs and its dependencies) |

| download.jBPM.console | downloads jBPM console for JBoss AS |

| install.dashboard.into.jboss | installs jBPM dashboard into JBoss AS |

| install.db.files | installs DB driver as JBoss module |

| install.demo | installs complete demo environment |

| install.demo.eclipse | installs Eclipse with all jBPM plugins, no server installation |

| install.demo.noeclipse | similar to install.demo but skips Eclipse installation |

| install.dependencies | installs custom libraries (such as work item handlers, etc) into the jBPM console |

| install.droolsjbpm-eclipse.into.eclipse | installs droolsjbpm Eclipse plugin into Eclipse |

| install.eclipse | install Eclipse IDE |

| install.jboss | installs JBoss AS |

| install.jBPM-console.into.jboss | installs jBPM console application into JBoss AS |

Some common issues are explained below.

Q: What if the installer complains it cannot download component X?

A: Are you connected to the Internet? Do you have a firewall turned on? Do you require a proxy? It might be possible that one of the locations we're downloading the components from is temporarily offline. Try downloading the components manually (possibly from alternate locations) and put them in the jbpm-installer/lib folder.

Q: What if the installer complains it cannot extract / unzip a certain JAR/WAR/zip?

A: If your download failed while downloading a component, it is possible that the installer is trying to use an incomplete file. Try deleting the component in question from the jbpm-installer/lib folder and reinstall, so it will be downloaded again.

Q: What if I have been changing my installation (and it no longer works) and I want to start over again with a clean installation?

A: You can use ant clean.demo to remove all the installed components, so you end up with a fresh installation again.

Q: I sometimes see exceptions when trying to stop or restart certain services, what should I do?

A: If you see errors during shutdown, are you sure the services were still running? If you see exceptions during restart, are you sure the service you started earlier was successfully shutdown? Maybe try killing the services manually if necessary.

Q: Something seems to be going wrong when running Eclipse but I have no idea what. What can I do?

A: Always check the consoles for output like error messages or stack traces. You can also check the Eclipse Error Log for exceptions. Try adding an audit logger to your session to figure out what's happening at runtime, or try debugging your application.

Q: Something seems to be going wrong when running the a web-based application like the jbpm-console. What can I do?

A: You can check the server log for possible exceptions: jbpm-installer/jboss-as-{version}/standalone/log/server.log (for JBoss AS7).

For all other questions, try contacting the jBPM community as described in the Getting Started chapter.

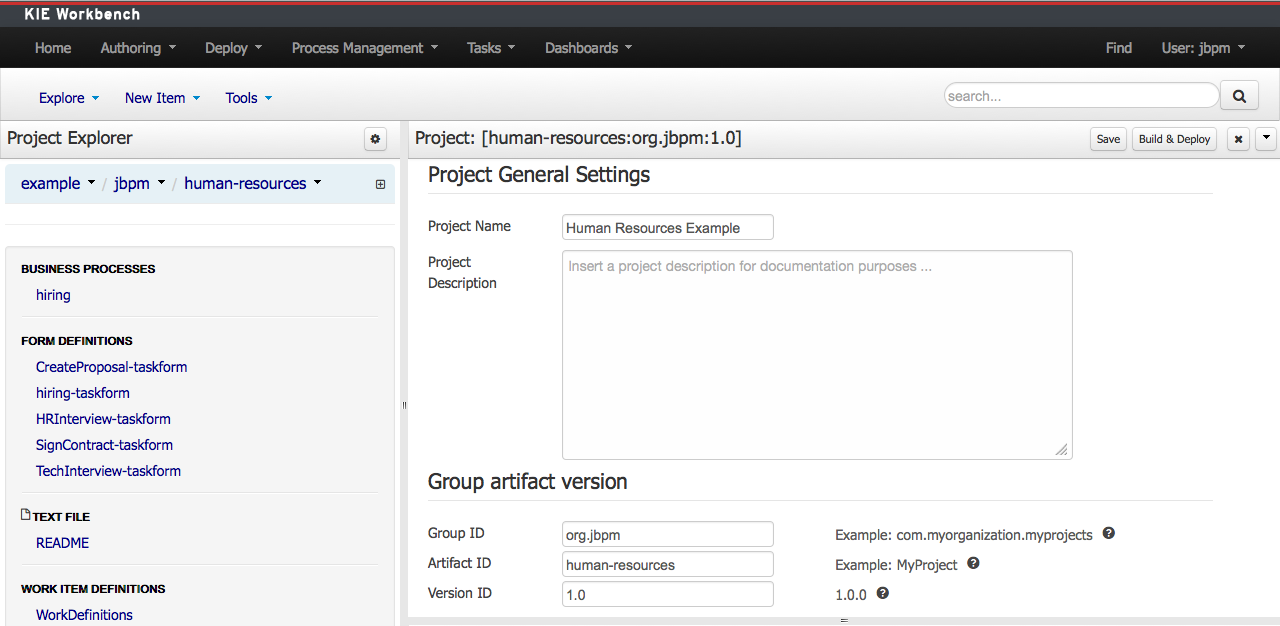

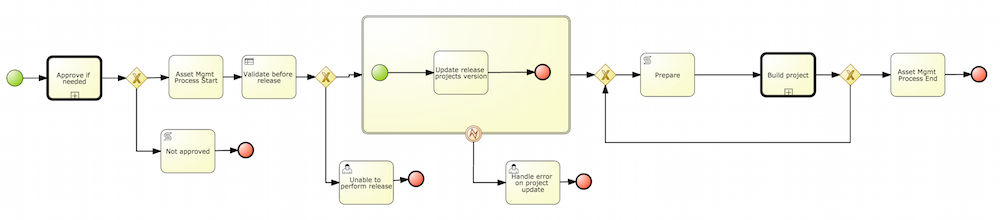

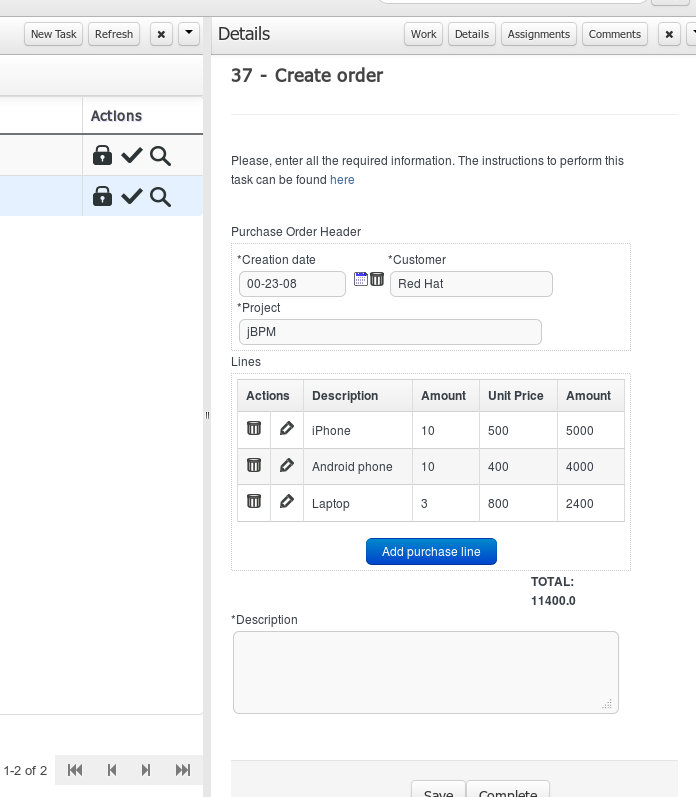

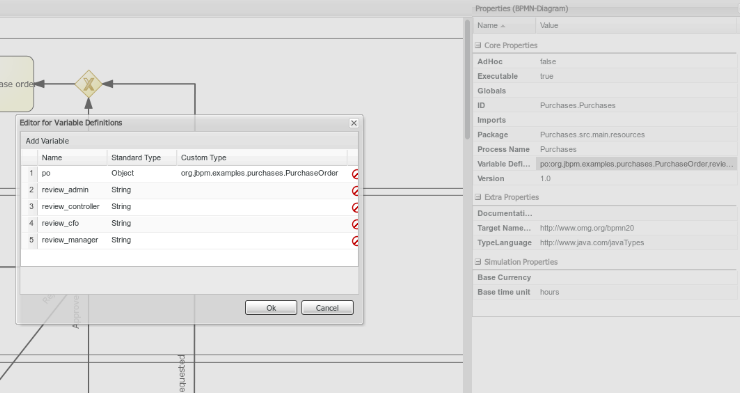

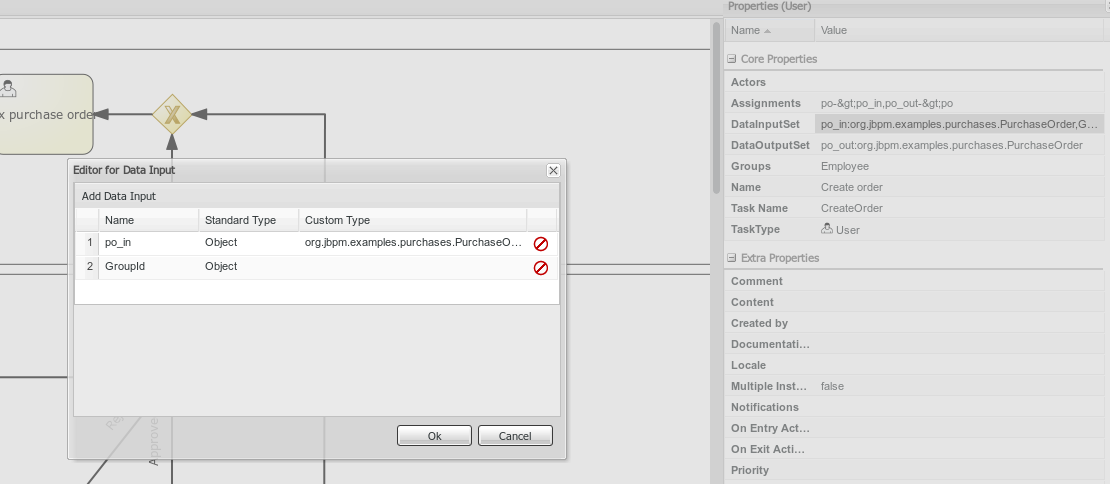

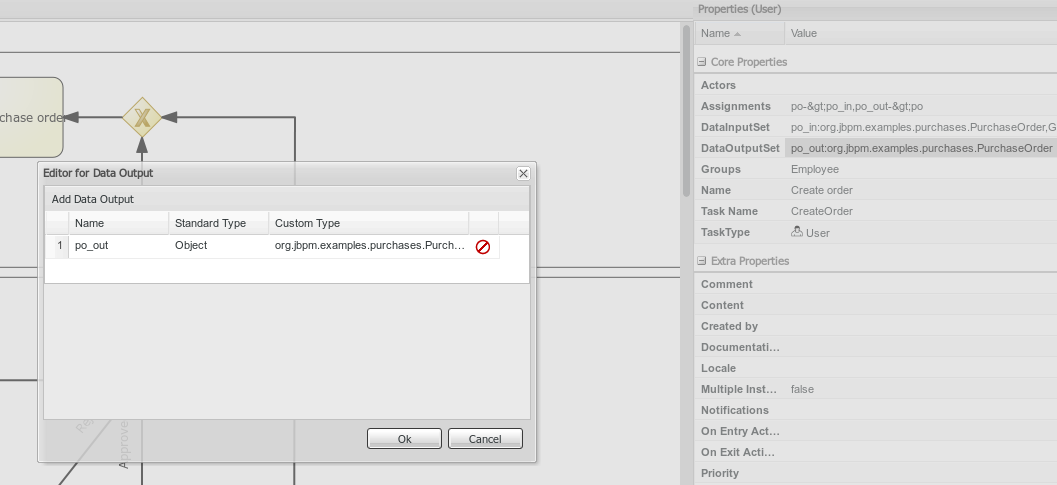

This chapter provides a walkthrough of a pre-made Red Hat JBoss BPM Suite example project. In this chapter, you will:

Import a number of pre-made example projects into your JBoss BPM Suite

Learn about the Human Resources Example and its usecase

Build and deploy the example project

Instantiate and explore the project

The project, together with many other example projects, can be imported through github. To learn how to import projects through github, see the JBoss BPM Suite Getting Started Guide.

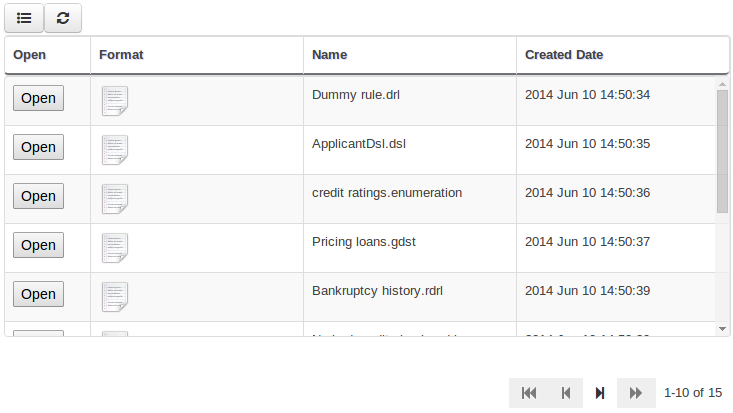

The web-based workbench by default will install two sample repositories that contain various sample projects that help you getting started. This section shows different examples that can be found in the jbpm-playground repository (also available here: https://github.com/droolsjbpm/jbpm-playground). All these examples are high level and business oriented.

If you want to contribute with these examples please get in touch with any member of the jBPM/Drools Team.

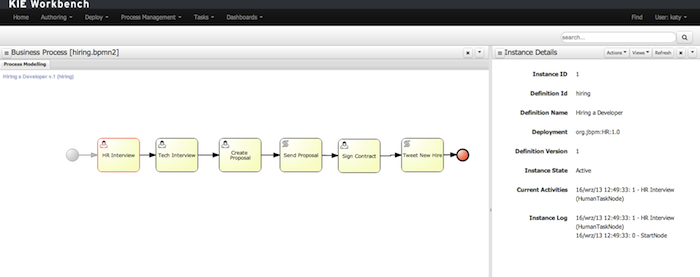

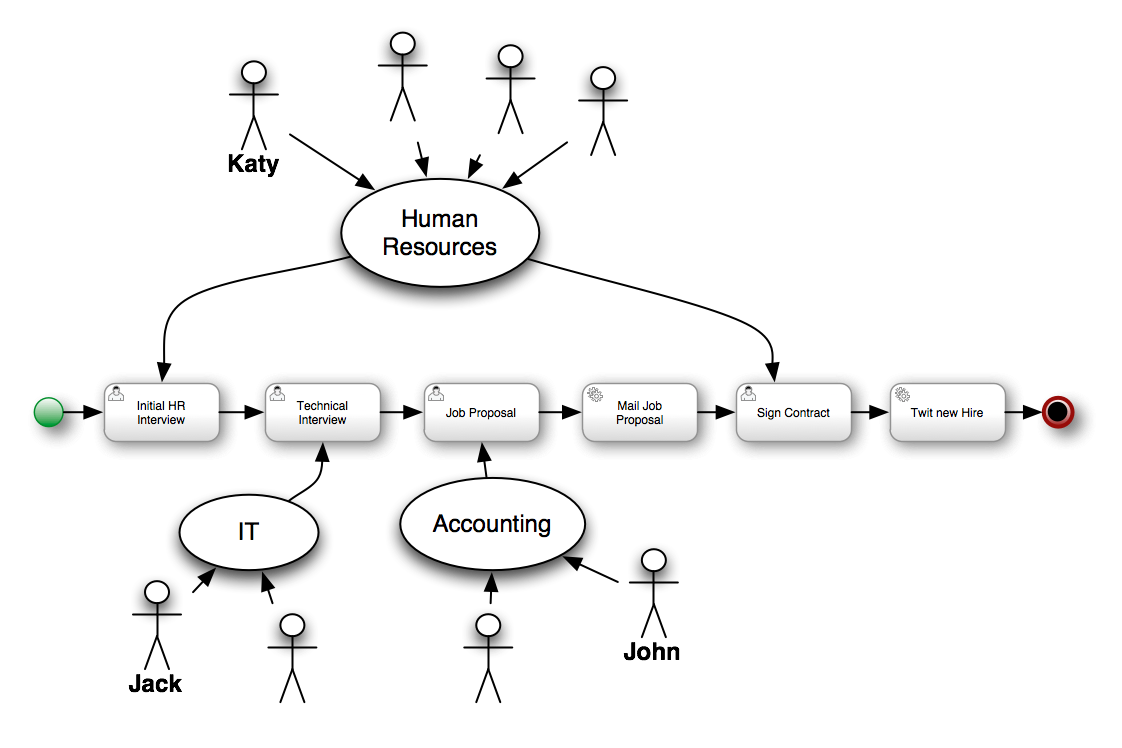

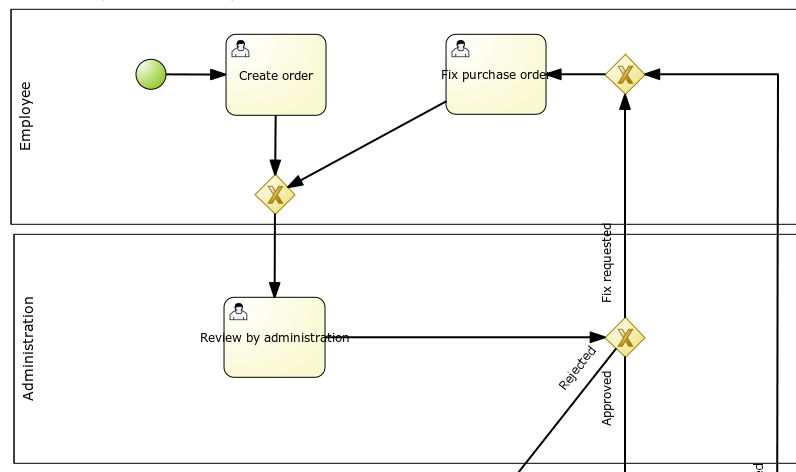

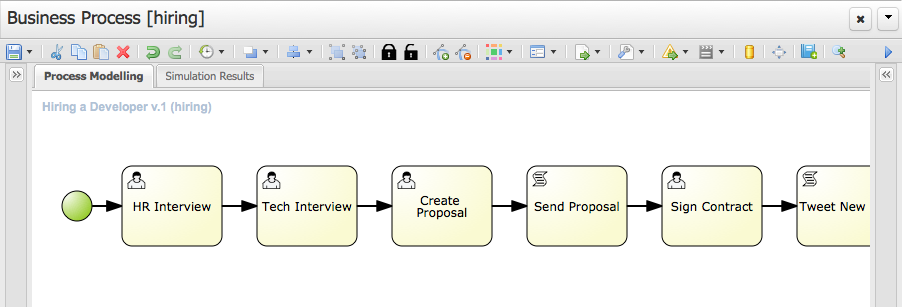

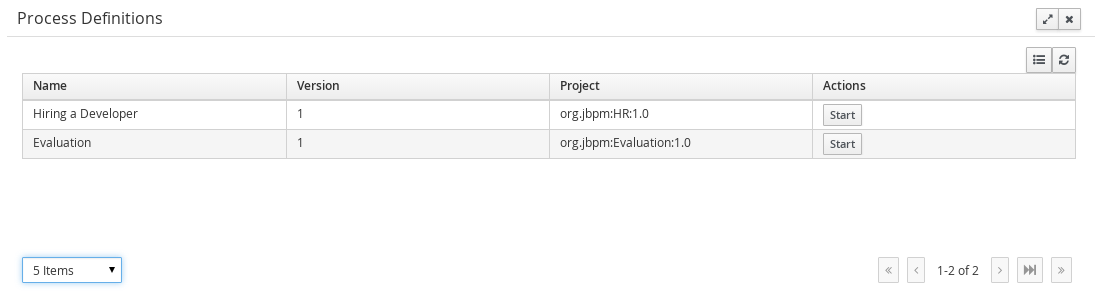

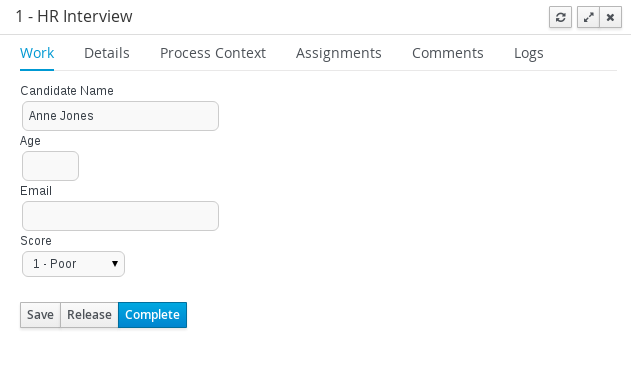

The Human Resource Example's use case can be described as follows: A company wants to hire new developers. In this process, three departments (that is the Human resources, IT, and Accounting) are involved. These departments are represented by three users: Katy, Jack, and John respectively.

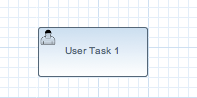

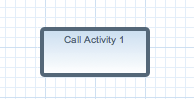

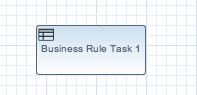

Note that only four out of the six defined activities within the business process are User Tasks. User Tasks require human interaction. The other two tasks are Service Tasks, which are automated and connected to other systems.

Each instance of the process will follow certain actions:

The human resources team performs the initial interview with the candidate

The IT department team performs the technical interview

Based on the output from the previous two steps, the accounting team creates a job proposal

When the proposal has been drafted, it is automatically sent to the candidate via email

If the candidate accepts the proposal, a new meeting to sign the contract is scheduled

Finally, if the candidate accepts the proposal, the system posts a message about the new hire using Twitter service connector

Note, that Jack, John, and Katy may represent any employee within the company with appropriate role assigned.

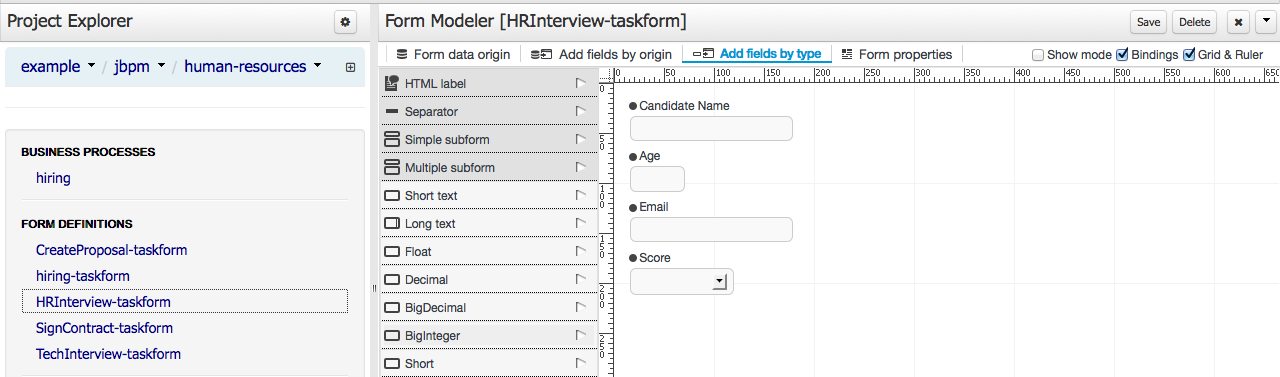

The Authoring Perspective contains the hiring.bpmn2 process and a set of forms for each human task. You may explore these knowledge assets by clicking on them. Notice that different editors open for different types of assets. If you click on the Business Process file, the Process Designer opens:

You may also explore the forms. Notice that the Form Modeller will be opened, which allows you to edit the forms to fit your requirements.

In order to build the Project, you need to go to the Authoring Perspective and open the Project Editor panel:

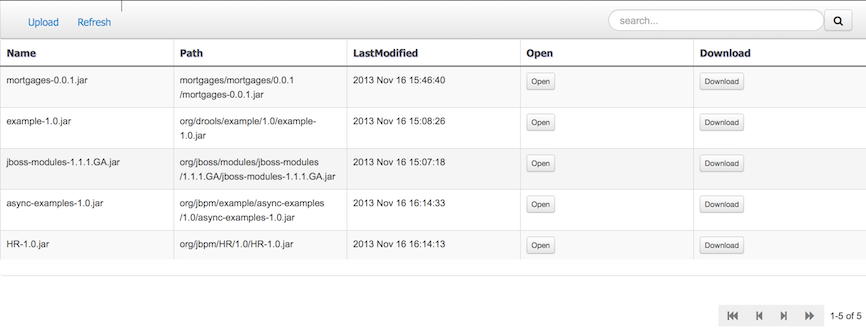

Once you open the Project Editor, you will see in the top right corner of the panel . This button allows you to create a new JAR artifact that will be deployed to the runtime environment as a new deployment unit.

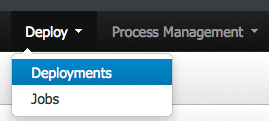

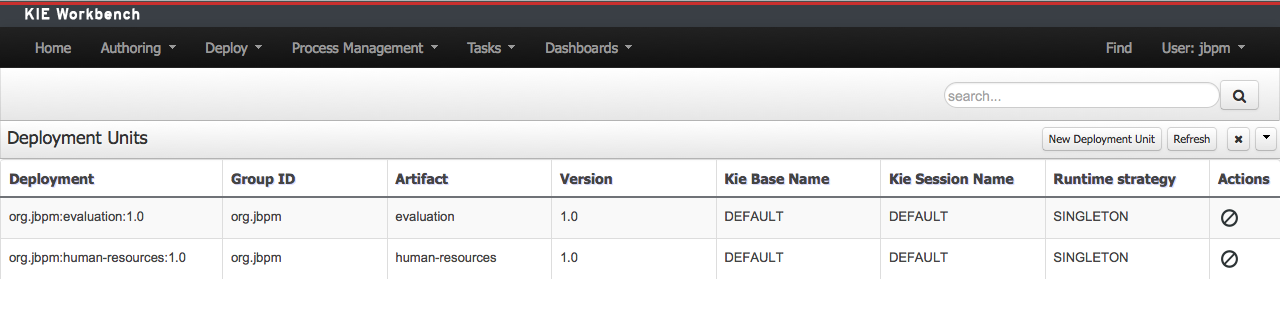

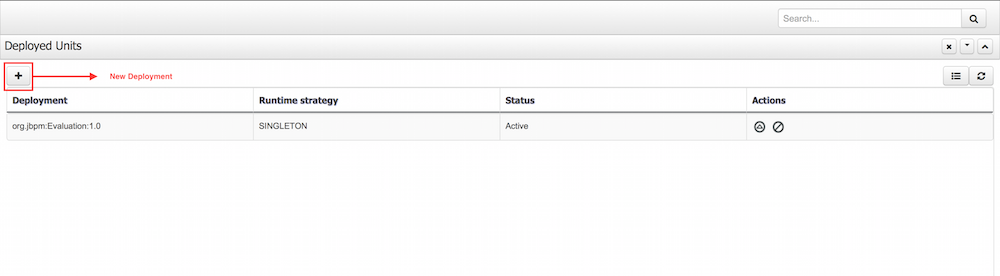

Once you get the visual notification that the project was built and deployed successfully, you may verify its presence in the Deployments tab. To do so, click → .

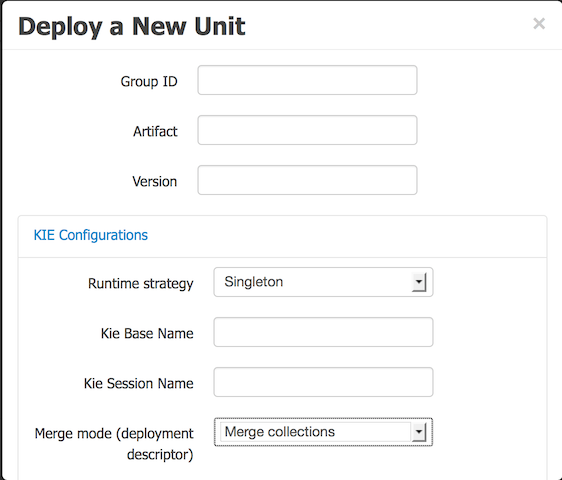

You may find all the deployed units in the Deployments tab. When you Build & Deploy a project from the Project Editor, it is automatically deployed using the default configurations. Therefore, Singleton Strategy, the default KIE Base and the default KIE Session will be used.

In case you wish a more advanced deployment, you may undeploy and re-deploy your artifacts using their GAV and selecting non-default settings. By doing so, you will be able to set a different strategy, or use non-default KIE Base or KIE Session.

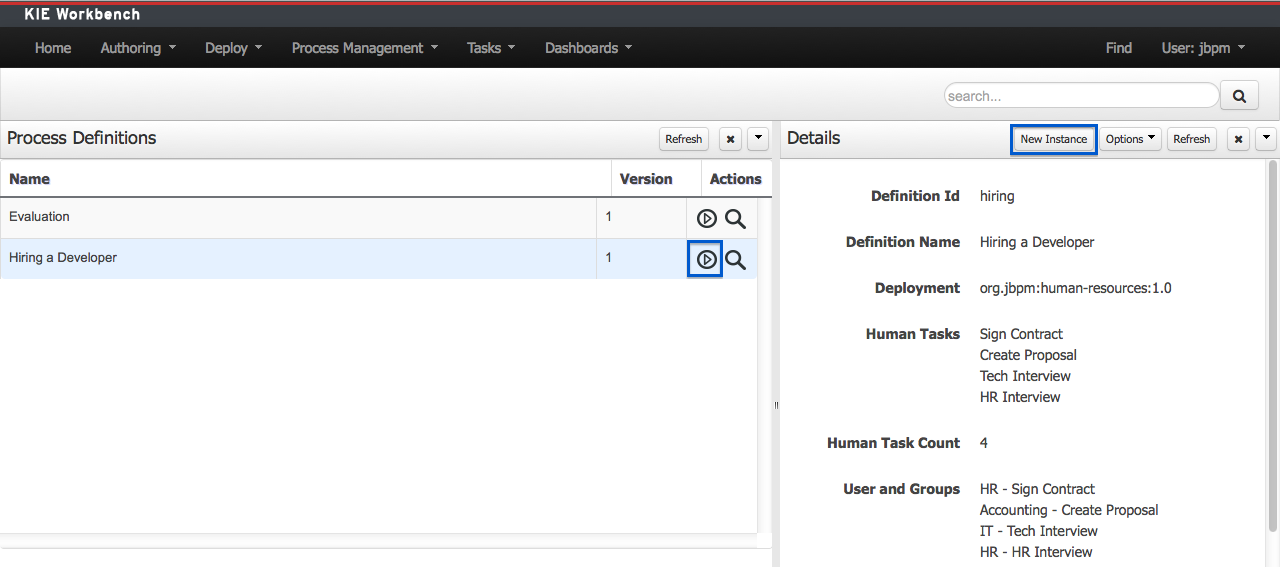

Once your artifact that contains the process definition is deployed, the Process Definition will become available in → .

In order to create new Process Instances click → .

The Process Definitions tab contains all the available process definitions in the runtime environment. In order to add new process definitions, build and deploy a new project.

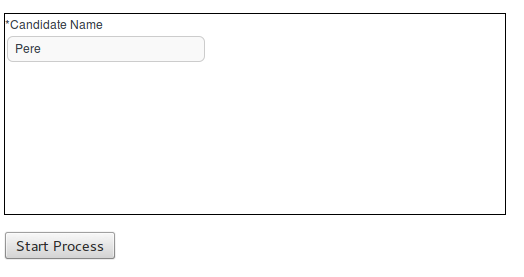

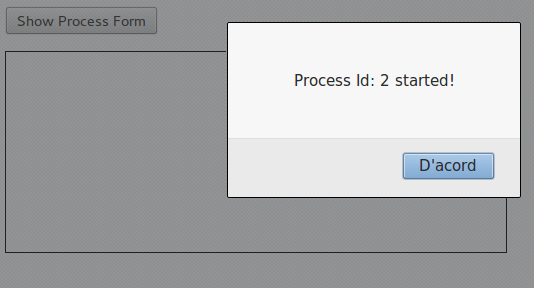

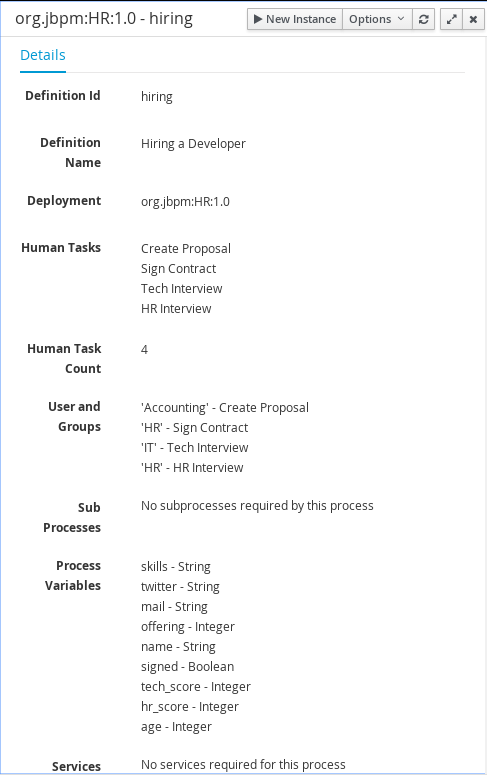

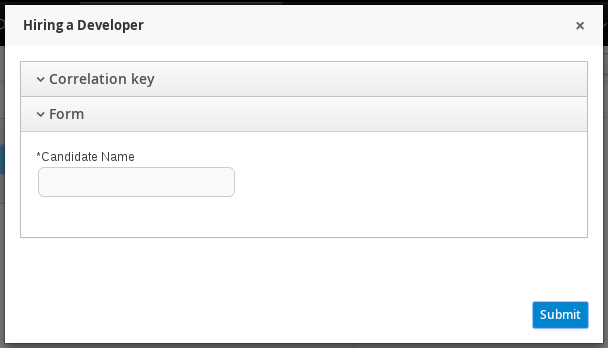

Figure 4.3. Starting Process Instances

You may start process instances using any of the two highlighted options

Most processes require additional information to create a new process instance. This is done through forms. For this project, fill in the name of the candidate that is to be interviewed.

By clicking the start button, a new process instance will be created. This creates the first task, that is available for the Human Resources team. In order to see the task, you need to logout and log in as a user with the appropriate role assigned, that is someone from the Human Resources.

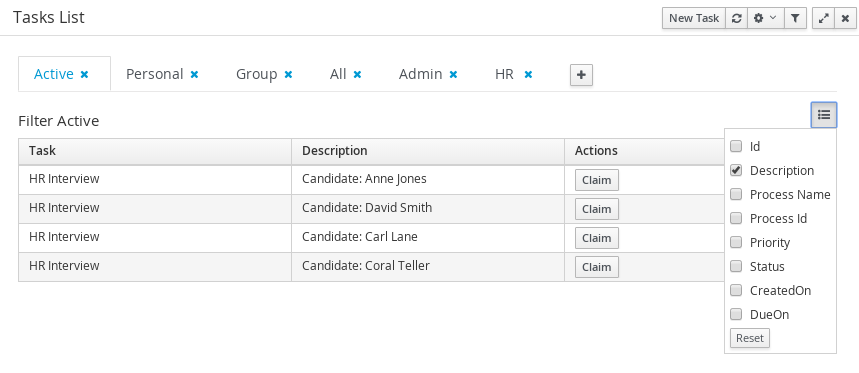

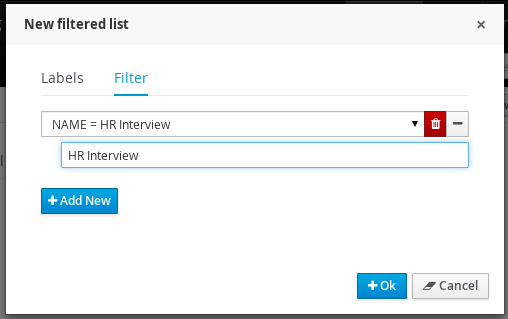

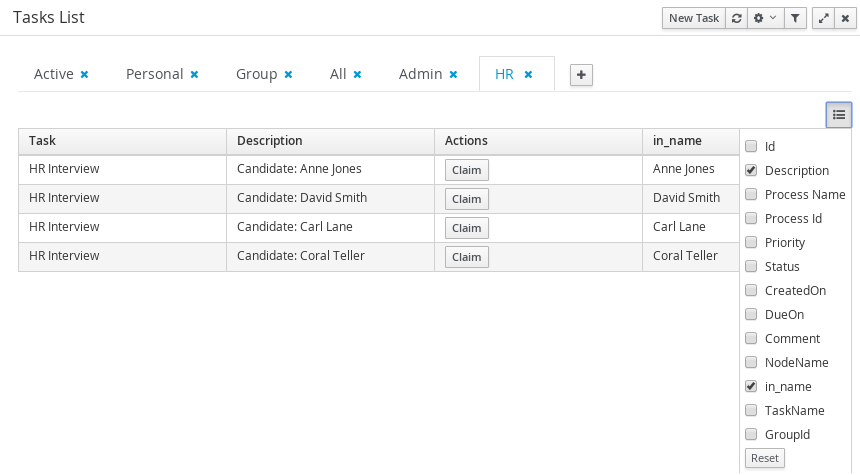

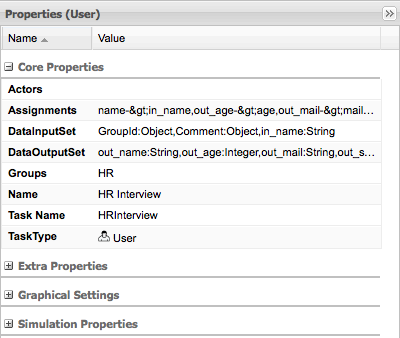

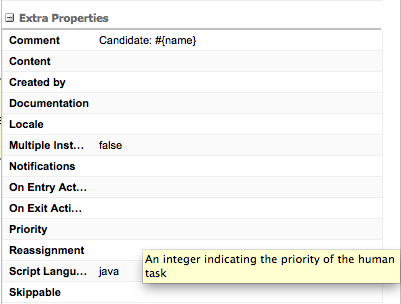

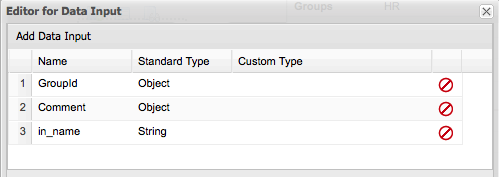

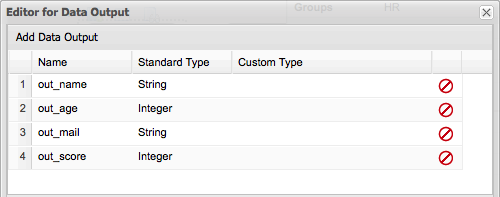

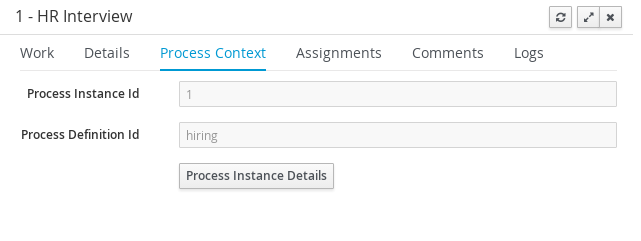

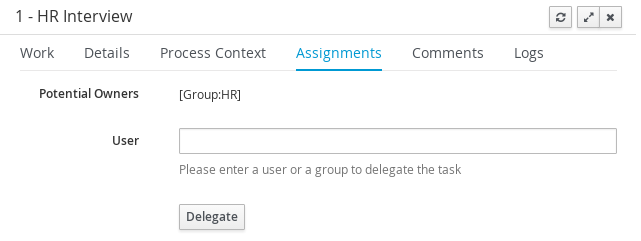

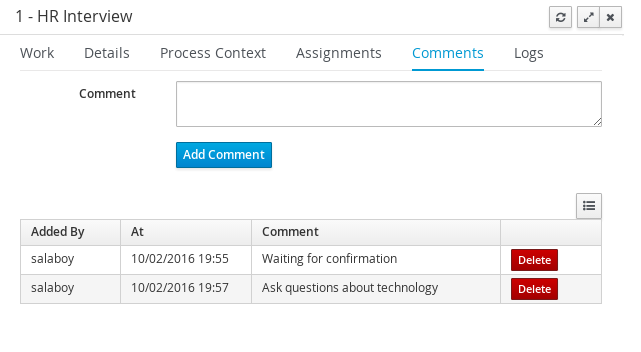

Once you have started the process, you may interact with the human tasks. To do so, click → . Note that in order to see the tasks in the task list, you need to belong to specific user groups, for which the task is designed. For example, the HR Interview task is visible only for the members of the HR group, and the Tech Interview Task is visible only to the members of the IT group.

Using the jBPM Core Engine

Table of Contents

- 5. Core Engine API

- 6. Processes

- 7. Human Tasks

- 8. Persistence and Transactions

This chapter introduces the API you need to load processes and execute them. For more detail on how to define the processes themselves, check out the chapter on BPMN 2.0.

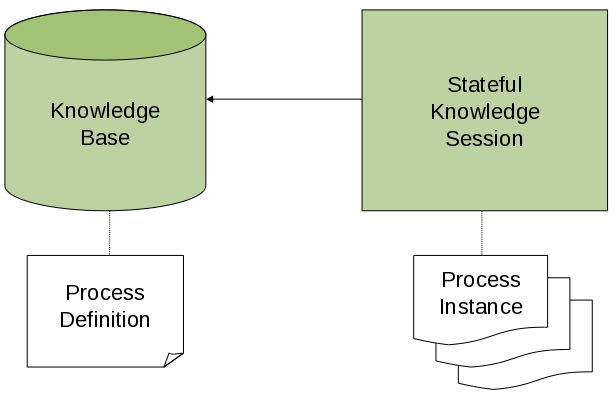

To interact with the process engine (for example, to start a process), you need to set up a session. This session will be used to communicate with the process engine. A session needs to have a reference to a knowledge base, which contains a reference to all the relevant process definitions. This knowledge base is used to look up the process definitions whenever necessary. To create a session, you first need to create a knowledge base, load all the necessary process definitions (this can be from various sources, like from classpath, file system or process repository) and then instantiate a session.

Once you have set up a session, you can use it to start executing processes. Whenever a process is started, a new process instance is created (for that process definition) that maintains the state of that specific instance of the process.

For example, imagine you are writing an application to process sales orders. You could then define one or more process definitions that define how the order should be processed. When starting up your application, you first need to create a knowledge base that contains those process definitions. You can then create a session based on this knowledge base so that, whenever a new sales order comes in, a new process instance is started for that sales order. That process instance contains the state of the process for that specific sales request.

A knowledge base can be shared across sessions and usually is only created once, at the start of the application (as creating a knowledge base can be rather heavy-weight as it involves parsing and compiling the process definitions). Knowledge bases can be dynamically changed (so you can add or remove processes at runtime).

Sessions can be created based on a knowledge base and are used to execute processes and interact with the engine. You can create as many independent session as you need and creating a session is considered relatively lightweight. How many sessions you create is up to you. In general, most simple cases start out with creating one session that is then called from various places in your application. You could decide to create multiple sessions if for example you want to have multiple independent processing units (for example, if you want all processes from one customer to be completely independent from processes for another customer, you could create an independent session for each customer) or if you need multiple sessions for scalability reasons. If you don't know what to do, simply start by having one knowledge base that contains all your process definitions and create one session that you then use to execute all your processes.

The jBPM project has a clear separation between the API the users should be interacting with and the actual implementation classes. The public API exposes most of the features we believe "normal" users can safely use and should remain rather stable across releases. Expert users can still access internal classes but should be aware that they should know what they are doing and that the internal API might still change in the future.

As explained above, the jBPM API should thus be used to (1) create a knowledge base that contains your process definitions, and to (2) create a session to start new process instances, signal existing ones, register listeners, etc.

The jBPM API allows you to first create a knowledge base. This knowledge base should include all your process definitions that might need to be executed by that session. To create a knowledge base, use a KieHelper to load processes from various resources (for example from the classpath or from the file system), and then create a new knowledge base from that helper. The following code snippet shows how to create a knowledge base consisting of only one process definition (using in this case a resource from the classpath).

KieHelper kieHelper = new KieHelper();

KieBase kieBase = kieHelper

.addResource(ResourceFactory.newClassPathResource("MyProcess.bpmn"))

.build();

The ResourceFactory has similar methods to load files from file system, from URL, InputStream, Reader, etc.

This is considered manual creation of knowledge base and while it is simple it is not recommended for real application development but more for try outs. Following you'll find recommended and much more powerful way of building knowledge base, knowledge session and more - RuntimeManager.

Once you've loaded your knowledge base, you should create a session to interact with the engine. This session can then be used to start new processes, signal events, etc. The following code snippet shows how easy it is to create a session based on the previously created knowledge base, and to start a process (by id).

KieSession ksession = kbase.newKieSession();

ProcessInstance processInstance = ksession.startProcess("com.sample.MyProcess");

The ProcessRuntime interface defines all the session methods

for interacting with processes, as shown below.

/**

* Start a new process instance. The process (definition) that should

* be used is referenced by the given process id.

*

* @param processId The id of the process that should be started

* @return the ProcessInstance that represents the instance of the process that was started

*/

ProcessInstance startProcess(String processId);

/**

* Start a new process instance. The process (definition) that should

* be used is referenced by the given process id. Parameters can be passed

* to the process instance (as name-value pairs), and these will be set

* as variables of the process instance.

*

* @param processId the id of the process that should be started

* @param parameters the process variables that should be set when starting the process instance

* @return the ProcessInstance that represents the instance of the process that was started

*/

ProcessInstance startProcess(String processId,

Map<String, Object> parameters);

/**

* Signals the engine that an event has occurred. The type parameter defines

* which type of event and the event parameter can contain additional information

* related to the event. All process instances that are listening to this type

* of (external) event will be notified. For performance reasons, this type of event

* signaling should only be used if one process instance should be able to notify

* other process instances. For internal event within one process instance, use the

* signalEvent method that also include the processInstanceId of the process instance

* in question.

*

* @param type the type of event

* @param event the data associated with this event

*/

void signalEvent(String type,

Object event);

/**

* Signals the process instance that an event has occurred. The type parameter defines

* which type of event and the event parameter can contain additional information

* related to the event. All node instances inside the given process instance that

* are listening to this type of (internal) event will be notified. Note that the event

* will only be processed inside the given process instance. All other process instances

* waiting for this type of event will not be notified.

*

* @param type the type of event

* @param event the data associated with this event

* @param processInstanceId the id of the process instance that should be signaled

*/

void signalEvent(String type,

Object event,

long processInstanceId);

/**

* Returns a collection of currently active process instances. Note that only process

* instances that are currently loaded and active inside the engine will be returned.

* When using persistence, it is likely not all running process instances will be loaded

* as their state will be stored persistently. It is recommended not to use this

* method to collect information about the state of your process instances but to use

* a history log for that purpose.

*

* @return a collection of process instances currently active in the session

*/

Collection<ProcessInstance> getProcessInstances();

/**

* Returns the process instance with the given id. Note that only active process instances

* will be returned. If a process instance has been completed already, this method will return

* null.

*

* @param id the id of the process instance

* @return the process instance with the given id or null if it cannot be found

*/

ProcessInstance getProcessInstance(long processInstanceId);

/**

* Aborts the process instance with the given id. If the process instance has been completed

* (or aborted), or the process instance cannot be found, this method will throw an

* IllegalArgumentException.

*

* @param id the id of the process instance

*/

void abortProcessInstance(long processInstanceId);

/**

* Returns the WorkItemManager related to this session. This can be used to

* register new WorkItemHandlers or to complete (or abort) WorkItems.

*

* @return the WorkItemManager related to this session

*/

WorkItemManager getWorkItemManager();

The session provides methods for registering and removing listeners.

A ProcessEventListener can be used to listen to process-related events,

like starting or completing a process, entering and leaving a node, etc. Below,

the different methods of the ProcessEventListener class are shown.

An event object provides access to related information, like the process instance

and node instance linked to the event. You can use this API to register your own

event listeners.

public interface ProcessEventListener {

void beforeProcessStarted( ProcessStartedEvent event );

void afterProcessStarted( ProcessStartedEvent event );

void beforeProcessCompleted( ProcessCompletedEvent event );

void afterProcessCompleted( ProcessCompletedEvent event );

void beforeNodeTriggered( ProcessNodeTriggeredEvent event );

void afterNodeTriggered( ProcessNodeTriggeredEvent event );

void beforeNodeLeft( ProcessNodeLeftEvent event );

void afterNodeLeft( ProcessNodeLeftEvent event );

void beforeVariableChanged(ProcessVariableChangedEvent event);

void afterVariableChanged(ProcessVariableChangedEvent event);

}A note about before and after events: these events typically act like a stack, which means that any events that occur as a direct result of the previous event, will occur between the before and the after of that event. For example, if a subsequent node is triggered as result of leaving a node, the node triggered events will occur inbetween the beforeNodeLeftEvent and the afterNodeLeftEvent of the node that is left (as the triggering of the second node is a direct result of leaving the first node). Doing that allows us to derive cause relationships between events more easily. Similarly, all node triggered and node left events that are the direct result of starting a process will occur between the beforeProcessStarted and afterProcessStarted events. In general, if you just want to be notified when a particular event occurs, you should be looking at the before events only (as they occur immediately before the event actually occurs). When only looking at the after events, one might get the impression that the events are fired in the wrong order, but because the after events are triggered as a stack (after events will only fire when all events that were triggered as a result of this event have already fired). After events should only be used if you want to make sure that all processing related to this has ended (for example, when you want to be notified when starting of a particular process instance has ended.

Also note that not all nodes always generate node triggered and/or node left events. Depending on the type of node, some nodes might only generate node left events, others might only generate node triggered events. Catching intermediate events for example are not generating triggered events (they are only generating left events, as they are not really triggered by another node, rather activated from outside). Similarly, throwing intermediate events are not generating left events (they are only generating triggered events, as they are not really left, as they have no outgoing connection).

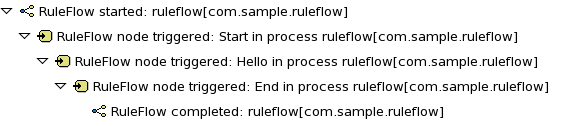

jBPM out-of-the-box provides a listener that can be used to create an audit log (either to the console or the a file on the file system). This audit log contains all the different events that occurred at runtime so it's easy to figure out what happened. Note that these loggers should only be used for debugging purposes. The following logger implementations are supported by default:

Console logger: This logger writes out all the events to the console.

File logger: This logger writes out all the events to a file using an XML representation. This log file might then be used in the IDE to generate a tree-based visualization of the events that occurred during execution.

Threaded file logger: Because a file logger writes the events to disk only when closing the logger or when the number of events in the logger reaches a predefined level, it cannot be used when debugging processes at runtime. A threaded file logger writes the events to a file after a specified time interval, making it possible to use the logger to visualize the progress in realtime, while debugging processes.

The KieServices lets you add a KieRuntimeLogger to

your session, as shown below. When creating a console logger, the knowledge session

for which the logger needs to be created must be passed as an argument. The file

logger also requires the name of the log file to be created, and the threaded file

logger requires the interval (in milliseconds) after which the events should be saved.

You should always close the logger at the end of your application.

import org.kie.api.KieServices;

import org.kie.api.logger.KieRuntimeLogger;

...

KieRuntimeLogger logger = KieServices.Factory.get().getLoggers().newFileLogger(ksession, "test");

// add invocations to the process engine here,

// e.g. ksession.startProcess(processId);

...

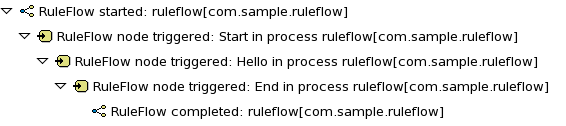

logger.close();The log file that is created by the file-based loggers contains an XML-based overview of all the events that occurred at runtime. It can be opened in Eclipse, using the Audit View in the Drools Eclipse plugin, where the events are visualized as a tree. Events that occur between the before and after event are shown as children of that event. The following screenshot shows a simple example, where a process is started, resulting in the activation of the Start node, an Action node and an End node, after which the process was completed.

A common requirement when working with processes is ability to assign a given process instance some sort of business identifier that can be later on referenced without knowing the actual (generated) id of the process instance. To provide such capabilities, jBPM allows to use CorrelationKey that is composed of CorrelationProperties. CorrelationKey can have either single property describing it (which is in most cases) but it can be represented as multi valued properties set.

Correlation capabilities are provided as part of interface

CorrelationAwareProcessRuntimethat exposes following methods:

/**

* Start a new process instance. The process (definition) that should

* be used is referenced by the given process id. Parameters can be passed

* to the process instance (as name-value pairs), and these will be set

* as variables of the process instance.

*

* @param processId the id of the process that should be started

* @param correlationKey custom correlation key that can be used to identify process instance

* @param parameters the process variables that should be set when starting the process instance

* @return the ProcessInstance that represents the instance of the process that was started

*/

ProcessInstance startProcess(String processId, CorrelationKey correlationKey, Map<String, Object> parameters);

/**

* Creates a new process instance (but does not yet start it). The process

* (definition) that should be used is referenced by the given process id.

* Parameters can be passed to the process instance (as name-value pairs),

* and these will be set as variables of the process instance. You should only

* use this method if you need a reference to the process instance before actually

* starting it. Otherwise, use startProcess.

*

* @param processId the id of the process that should be started

* @param correlationKey custom correlation key that can be used to identify process instance

* @param parameters the process variables that should be set when creating the process instance

* @return the ProcessInstance that represents the instance of the process that was created (but not yet started)

*/

ProcessInstance createProcessInstance(String processId, CorrelationKey correlationKey, Map<String, Object> parameters);

/**

* Returns the process instance with the given correlationKey. Note that only active process instances

* will be returned. If a process instance has been completed already, this method will return

* null.

*

* @param correlationKey the custom correlation key assigned when process instance was created

* @return the process instance with the given id or null if it cannot be found

*/

ProcessInstance getProcessInstance(CorrelationKey correlationKey);

Correlation is usually used with long running processes and thus require persistence to be enabled to be able to permanently store correlation information.

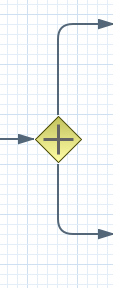

In the following text, we will refer to two types of "multi-threading": logical and technical. Technical multi-threading is what happens when multiple threads or processes are started on a computer, for example by a Java or C program. Logical multi-threading is what we see in a BPM process after the process reaches a parallel gateway, for example. From a functional standpoint, the original process will then split into two processes that are executed in a parallel fashion.

Of course, the jBPM engine supports logical multi-threading: for example, processes that include a parallel gateway. We've chosen to implement logical multi-threading using one thread: a jBPM process that includes logical multi-threading will only be executed in one technical thread. The main reason for doing this is that multiple (technical) threads need to be be able to communicate state information with each other if they are working on the same process. This requirement brings with it a number of complications. While it might seem that multi-threading would bring performance benefits with it, the extra logic needed to make sure the different threads work together well means that this is not guaranteed. There is also the extra overhead incurred because we need to avoid race conditions and deadlocks.

In general, the jBPM engine executes actions in serial. For example,

when the engine encounters a script task in a process, it will synchronously

execute that script and wait for it to complete before continuing execution.

Similarly, if a process encounters a parallel gateway, it will sequentially

trigger each of the outgoing branches, one after the other. This is possible

since execution is almost always instantaneous, meaning that it is extremely

fast and produces almost no overhead. As a result, the user will usually

not even notice this. Similarly, action scripts in a process are also synchronously

executed, and the engine will wait for them to finish before continuing the

process. For example, doing a Thread.sleep(...) as part of

a script will not make the engine continue execution elsewhere but will

block the engine thread during that period.

The same principle applies to service tasks. When a service task is

reached in a process, the engine will also invoke the handler of this service

synchronously. The engine will wait for the completeWorkItem(...)

method to return before continuing execution. It is important that your

service handler executes your service asynchronously if its execution is

not instantaneous.

An example of this would be a service task that invokes an external service. Since the delay in invoking this service remotely and waiting for the results might be too long, it might be a good idea to invoke this service asynchronously. This means that the handler will only invoke the service and will notify the engine later when the results are available. In the mean time, the process engine then continues execution of the process.